Assumptions of Linear Regression

Linear Regression is the bicycle of regression models. It’s simple yet incredibly useful, and it can be used in a variety of domains. It has a nice closed-form solution, which makes model training a super-fast, non-iterative process.
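For reference, that closed-form solution is the standard ordinary least squares estimate given by the normal equations. It is written here in the usual matrix notation, where y is the response vector and X is the matrix of explanatory variables including a column of ones for the intercept. Assuming XᵀX is invertible, the estimated coefficient vector is:

$$\hat{\beta} = (X^\top X)^{-1} X^\top y$$

A single linear solve produces the fitted coefficients, so no iterative optimization is needed.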

A Linear Regression model’s performance characteristics are well understood and backed by decades of rigorous research. The model’s predictions are easy to understand, easy to explain and easy to defend.

If there is only one regression model that you have time to learn inside-out, it should be the Linear Regression model.

If your data satisfies the assumptions that the Linear Regression model (specifically, the Ordinary Least Squares Regression (OLSR) model) makes, in most cases you need look no further.

Which brings us to the following four assumptions that the OLSR model makes:

Linear functional form: The response variable y should be linearly related to the explanatory variables X.

Residual errors should be i.i.d.: After fitting the model on the training data set, the residual errors of the model should be independent and identically distributed random variables.

Residual errors should be normally distributed: The residual errors should be normally distributed.

Residual errors should be homoscedastic: The residual errors should have constant variance.

Let’s look at the four assumptions in detail and how to test them.

Assumption 1: Linear functional form

Linearity requires little explanation. After all, if you have chosen to do Linear Regression, you are assuming that the underlying data exhibits linear relationships, specifically the following linear relationship:

$$y = X\beta + \varepsilon$$

Where y is the dependent variable vector, X is the matrix of explanatory variables (which includes a column for the intercept), β is the vector of regression coefficients and ε is the vector of error terms, i.e. the portion of y that X is unable to explain.
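As a rough illustration of this equation and of the closed-form fit, here is a minimal NumPy sketch on synthetic data. The coefficients, noise level and sample size are made-up assumptions, not values from the power plant data set used below. The sketch builds X with a leading column of ones for the intercept, estimates β by least squares, and computes the residual errors as y minus the fitted values.

```python
import numpy as np

# Synthetic data: the coefficients below are made-up assumptions, not power plant values
rng = np.random.default_rng(0)
n = 2000
x1 = rng.uniform(0, 10, n)
x2 = rng.uniform(-5, 5, n)
true_beta = np.array([2.0, 1.5, -0.7])     # intercept, coefficient of x1, coefficient of x2

# Design matrix X: a leading column of ones carries the intercept
X = np.column_stack([np.ones(n), x1, x2])
eps = rng.normal(0, 1.0, n)                # error terms
y = X @ true_beta + eps                     # y = X beta + epsilon

# Closed-form least-squares fit; lstsq avoids explicitly inverting X^T X
beta_hat, *_ = np.linalg.lstsq(X, y, rcond=None)

y_pred = X @ beta_hat
resid = y - y_pred                          # residual errors: the part of y that X does not explain
print("estimated beta:", np.round(beta_hat, 3))
```

`np.linalg.lstsq` is used here instead of computing (XᵀX)⁻¹ directly because it is numerically more stable; both give the same answer when XᵀX is well conditioned.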

How to test the linearity assumption using Python

This can be done in two ways:

An easy way is to plot y against each explanatory variable x_j and visually inspect the scatter plot for signs of non-linearity.

One could also use the DataFrame.corr() method in Pandas to get Pearson’s correlation coefficient r between the response variable y and each explanatory variable x_j, to get a quantitative feel for the degree of linear correlation.

Note that Pearson’s r measures only the strength of a linear relationship, so it should be used only when the relation between y and X is known to be linear. A strong but non-linear relationship can produce an r close to zero.
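To see why this caveat matters, here is a small NumPy sketch on synthetic data (an assumption for illustration, not the power plant data). `np.corrcoef` computes the same Pearson coefficient that `DataFrame.corr()` uses by default. A noisy linear relation yields r close to 1, while y = x² on a symmetric range of x is an almost perfect, but non-linear, dependence that yields r close to 0.

```python
import numpy as np

rng = np.random.default_rng(1)
x = rng.uniform(-3, 3, 1000)

y_linear = 2.0 * x + rng.normal(0, 1.0, x.size)     # linear relation plus noise
y_quadratic = x ** 2 + rng.normal(0, 0.1, x.size)   # strong but non-linear relation

# np.corrcoef returns a 2x2 correlation matrix; entry [0, 1] is Pearson's r
r_linear = np.corrcoef(x, y_linear)[0, 1]
r_quadratic = np.corrcoef(x, y_quadratic)[0, 1]
print(f"r (linear):    {r_linear:.3f}")     # close to 1
print(f"r (quadratic): {r_quadratic:.3f}")  # close to 0, although y is almost a function of x
```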

Let’s test the linearity assumption on the following data set of 9568 observations of 4 operating parameters of a combined cycle power plant taken over 6 years:

The explanatory variables x_j are the following 4 power plant parameters:

- Ambient_Temp in Celsius
- Exhaust_Volume in column height of Mercury in centimeters
- Ambient_Pressure in millibars
- Relative_Humidity expressed as a percentage

The response variable y is Power_Output of the power plant in MW.

Let’s load the data set into a Pandas DataFrame.

```python
import pandas as pd
from patsy import dmatrices
from matplotlib import pyplot as plt
import numpy as np

# Load the combined cycle power plant data set
df = pd.read_csv('power_plant_output.csv', header=0)
```

Plot the scatter plots of each explanatory variable against the response variable Power_Output.

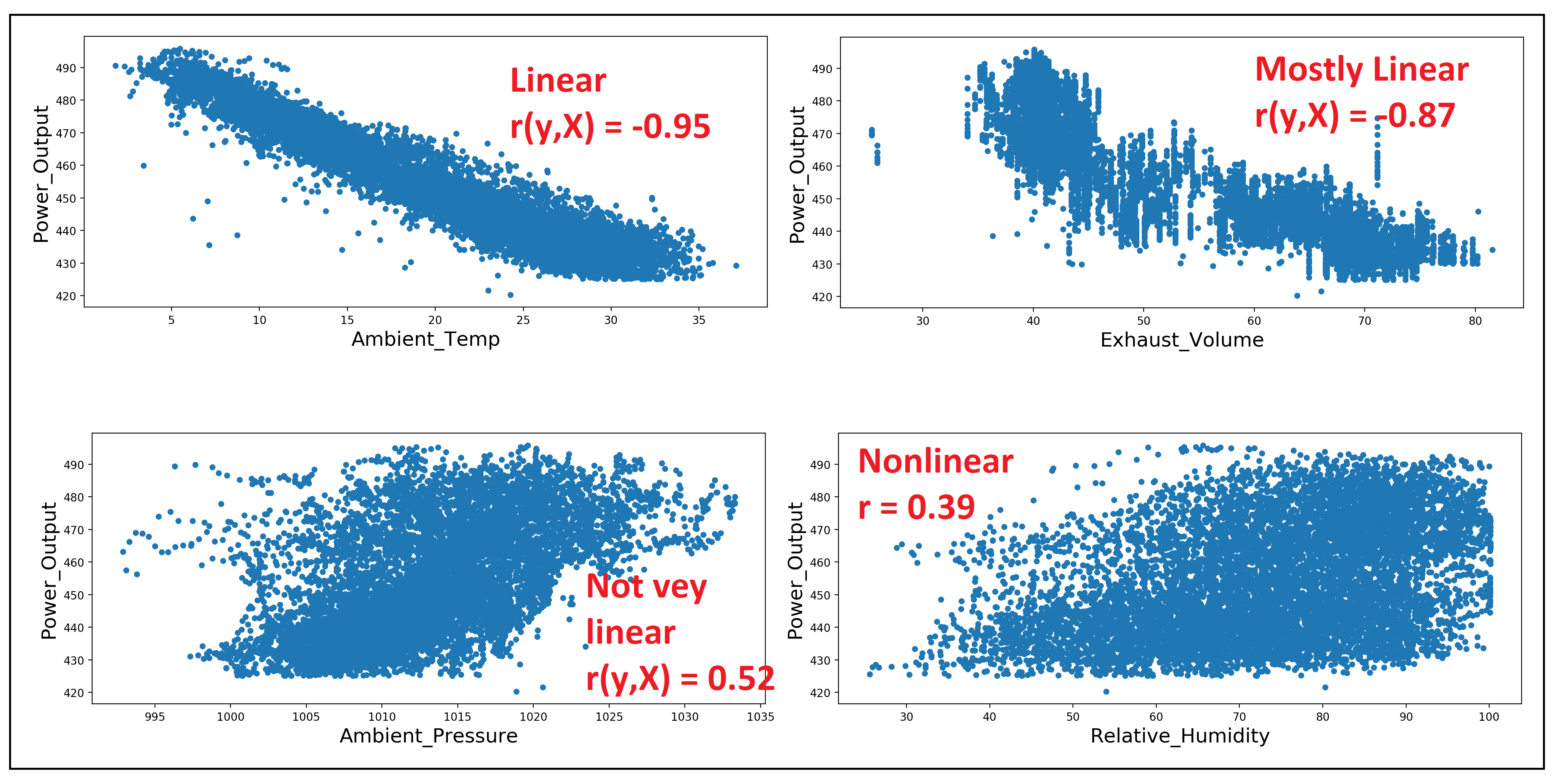

```python
# One scatter plot per explanatory variable, each against Power_Output
for col in ['Ambient_Temp', 'Exhaust_Volume', 'Ambient_Pressure', 'Relative_Humidity']:
    df.plot.scatter(x=col, y='Power_Output')
    plt.xlabel(col, fontsize=18)
    plt.ylabel('Power_Output', fontsize=18)
    plt.show()
```

Here is a collage of the four plots:

You can see that Ambient_Temp and Exhaust_Volume seem to be most linearly related to the power plant’s Power_Output, followed by Ambient_Pressure and Relative_Humidity in that order.

Let’s also print out Pearson’s r between Power_Output and each variable:

```python
print(df.corr()['Power_Output'])
```

We get the following output, which backs up our visual intuition:

```
Ambient_Temp        -0.948128
Exhaust_Volume      -0.869780
Ambient_Pressure     0.518429
Relative_Humidity    0.389794
Power_Output         1.000000
Name: Power_Output, dtype: float64
```

Related read: The Intuition Behind Correlation, for an in-depth explanation of Pearson’s correlation coefficient.

Assumption 2: i.i.d. residual errors

The second assumption that one makes while fitting OLSR models is that the residual errors left over from fitting the model to the data are independent, identically distributed random variables.

We break this assumption into three parts:

- The residual errors are random variables,
- They are independent random variables, and
- Their probability distributions are identical.

Why are residual errors random variables?

After we train a Linear Regression model on a data set, if we run the training data through the same model, the model will generate predictions. Let’s call them y_pred. For each predicted value y_pred in the vector y_pred, there is a corresponding actual value y in the response variable vector y. The difference (y - y_pred) is the residual error ε. There are as many of these ε as there are rows in the training set, and together they form the residual errors vector ε.

Each residual error ε is a random variable. To understand why, recollect that our training set (y_train, X_train) is just a sample of n values drawn from some very large population of values.

If we had drawn a different sample (y_train’, X_train’) from the same population, the model would have fitted somewhat differently on this second sample, thereby producing a different set of predictions y_pred’, and therefore a different set of residual errors ε’ = (y’ - y_pred’).

A third training sample drawn from the population would, after training the model on it, have generated a third set of residual errors ε’’ = (y’’ - y_pred’’), and so on.

One can now see that each residual error in the vector ε can take a different value for each training sample one is willing to draw and train the model on. This is what makes each residual error ε a random variable.
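The thought experiment above can be simulated directly. The following NumPy sketch assumes a hypothetical population y = 3 + 2x + noise (all parameters are made up), repeatedly draws a training sample of 50 rows, fits OLS on it, and records the residual error of the first row of each sample. Across the repeated samples that residual takes a different value every time, i.e. it behaves like a random variable with its own mean and spread.

```python
import numpy as np

rng = np.random.default_rng(2)

def draw_sample(n=50):
    """Draw one training sample from a hypothetical population y = 3 + 2x + noise."""
    x = rng.uniform(0, 10, n)
    y = 3.0 + 2.0 * x + rng.normal(0, 2.0, n)
    return x, y

n_samples = 1000
first_row_resid = np.empty(n_samples)
for k in range(n_samples):
    x, y = draw_sample()
    X = np.column_stack([np.ones_like(x), x])          # intercept + x
    beta_hat, *_ = np.linalg.lstsq(X, y, rcond=None)   # fit OLS on this sample
    first_row_resid[k] = y[0] - X[0] @ beta_hat         # residual of row 0 in this sample

# The residual of "row 0" changes from one training sample to the next
print(f"mean = {first_row_resid.mean():.3f}, std = {first_row_resid.std():.3f}")
```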

Why do residual errors need to be independent?

Two random variables are independent if the probability of one of them taking up some value doesn’t depend on what value the other variable has taken. When you roll a die twice, the probability of its coming up as one, two, …, six in the second throw does not depend on the value it came up with on the first throw. So the two throws are independent random variables that can each take a value from 1 through 6 independently of the other throw.

In the context of regression, we have seen why the residual errors of the regression model are random variables. If the residual errors are not independent, they will likely demonstrate some sort of a pattern (which is not always obvious to the naked eye). There is information in this pattern that the regression model wasn’t able to capture during its training on the training set, thereby making the model sub-optimal.

If the residual errors aren’t independent, it may mean a number of things:

- One or more important explanatory variables are missing from your model. The effect of the missing variables is showing through as a pattern in the residual errors.
- The linear model you have built is simply the wrong kind of model for the data set. For example, if the data set shows obvious non-linearity and you try to fit a linear regression model on it, the nonlinear relationships between y and X will show through in the residual errors of regression in the form of a distinct pattern.
- A third interesting cause of non-independence of residual errors is what’s known as multicollinearity, which means that the explanatory variables are themselves linearly related to each other. Multicollinearity causes the model’s coefficients to become unstable, i.e. they will swing wildly from one training run to the next when trained on different training sets. This can make the model’s overall goodness-of-fit statistics questionable. Another serious effect of multicollinearity, especially extreme multicollinearity, is that the model’s least squares solver may throw up infinities during the model fitting process, thereby making it impossible to fit the model on the training data. In the extreme case of perfectly collinear variables, the matrix XᵀX is singular and cannot be inverted.
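A common diagnostic for multicollinearity is the variance inflation factor (VIF): regress each explanatory variable x_j on all the other explanatory variables and compute VIF_j = 1 / (1 − R²_j). A VIF near 1 means x_j is nearly uncorrelated with the others, while large values (thresholds such as 5 or 10 are often quoted as rules of thumb) signal multicollinearity. The NumPy sketch below uses synthetic data in which x2 is deliberately built as a near-copy of x1. statsmodels also offers a ready-made `variance_inflation_factor` function in `statsmodels.stats.outliers_influence`.

```python
import numpy as np

def vif(X):
    """Variance inflation factor for each column of X (X must NOT contain an intercept column).

    VIF_j = 1 / (1 - R^2_j), where R^2_j comes from regressing column j on an
    intercept plus all the other columns.
    """
    n, k = X.shape
    out = np.empty(k)
    for j in range(k):
        target = X[:, j]
        others = np.column_stack([np.ones(n), np.delete(X, j, axis=1)])
        coef, *_ = np.linalg.lstsq(others, target, rcond=None)
        resid = target - others @ coef
        r2 = 1.0 - resid.var() / target.var()
        out[j] = 1.0 / (1.0 - r2)
    return out

rng = np.random.default_rng(3)
n = 1000
x1 = rng.normal(0, 1, n)
x2 = x1 + rng.normal(0, 0.05, n)   # x2 is almost a copy of x1: strong multicollinearity
x3 = rng.normal(0, 1, n)           # unrelated to x1 and x2

vifs = vif(np.column_stack([x1, x2, x3]))
print(np.round(vifs, 1))           # x1 and x2 get very large VIFs, x3 stays near 1
```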

How to test for independence of residual errors?

It’s not easy to verify independence. But sometimes one can detect patterns in the plot of residual errors versus the predicted values or the plot of residual errors versus actual values.

Another common technique is to use the Durbin-Watson test, which measures the degree of correlation of each residual error with the ‘previous’ residual error. This is known as lag-1 autocorrelation, and the test is a useful way to find out whether the residual errors of a time series regression model are independent.
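The Durbin-Watson statistic itself is simple to compute: d = Σ(e_t − e_{t−1})² / Σ e_t². It is approximately 2(1 − ρ), where ρ is the lag-1 autocorrelation of the residuals, so values near 2 suggest no lag-1 autocorrelation and values near 0 suggest strong positive autocorrelation. The sketch below uses synthetic residuals: independent normal noise, and a hypothetical AR(1) series with coefficient 0.9. statsmodels provides the same statistic as `statsmodels.stats.stattools.durbin_watson`.

```python
import numpy as np

def durbin_watson(resid):
    """Durbin-Watson statistic: sum of squared successive differences over sum of squares."""
    e = np.asarray(resid, dtype=float)
    return np.sum(np.diff(e) ** 2) / np.sum(e ** 2)

rng = np.random.default_rng(4)
n = 2000

independent = rng.normal(0, 1, n)   # independent residuals

# Hypothetical AR(1) residuals: each one carries over 90% of the previous one
ar1 = np.empty(n)
ar1[0] = rng.normal()
for t in range(1, n):
    ar1[t] = 0.9 * ar1[t - 1] + rng.normal()

dw_independent = durbin_watson(independent)
dw_ar1 = durbin_watson(ar1)
print(f"DW, independent residuals: {dw_independent:.2f}")   # near 2
print(f"DW, AR(1) residuals:       {dw_ar1:.2f}")           # near 2 * (1 - 0.9) = 0.2
```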

Let’s fit a linear regression model to the Power Plant data and inspect the residual errors of regression.

We’ll start by creating the model expression using the Patsy library as follows:

```python
model_expr = 'Power_Output ~ Ambient_Temp + Exhaust_Volume + Ambient_Pressure + Relative_Humidity'
```

In the above model expression, we are telling Patsy that Power_Output is the response variable while Ambient_Temp, Exhaust_Volume, Ambient_Pressure and Relative_Humidity are the explanatory variables. Patsy will add the regression intercept by default.

We’ll use patsy to carve out the y and X matrices as follows:

```python
# Build the response vector y and the design matrix X (with an Intercept column)
y, X = dmatrices(model_expr, df, return_type='dataframe')
```

Let’s also carve out the train and test data sets. The training data set will be roughly 80% of the size of the overall (y, X), and the rest will be the testing data set:

```python
# Randomly assign roughly 80% of the rows to the training set, the rest to the test set
mask = np.random.rand(len(X)) < 0.8
X_train = X[mask]
y_train = y[mask]
X_test = X[~mask]
y_test = y[~mask]
```

Finally, build and train an Ordinary Least Squares Regression model on the training data and print the model summary:

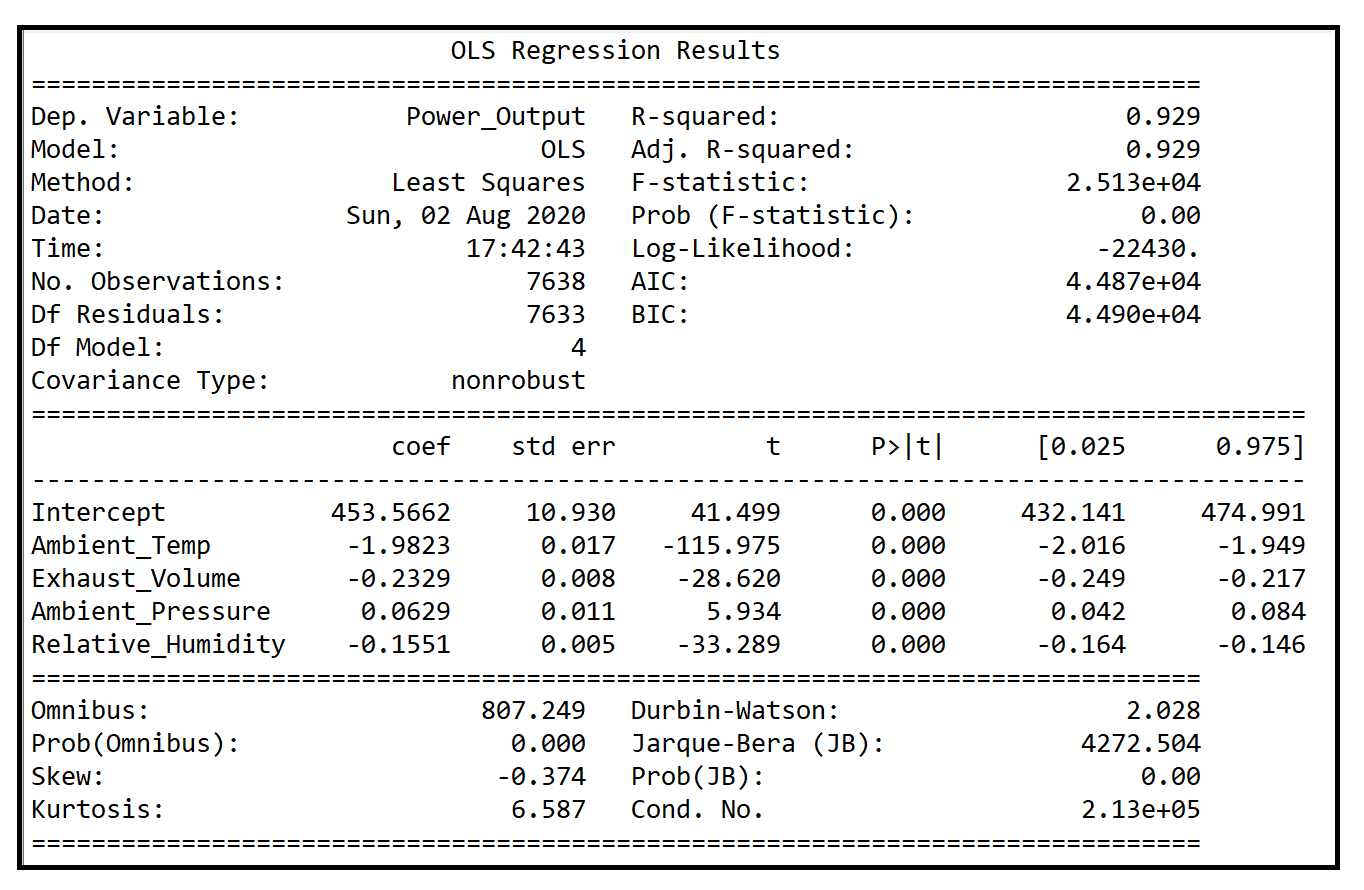

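```python
import statsmodels.regression.linear_model as linear_model

# Fit an OLS model on the training data
olsr_results = linear_model.OLS(y_train, X_train).fit()
print('Training completed')
print(olsr_results.summary())
```

We get the following output: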

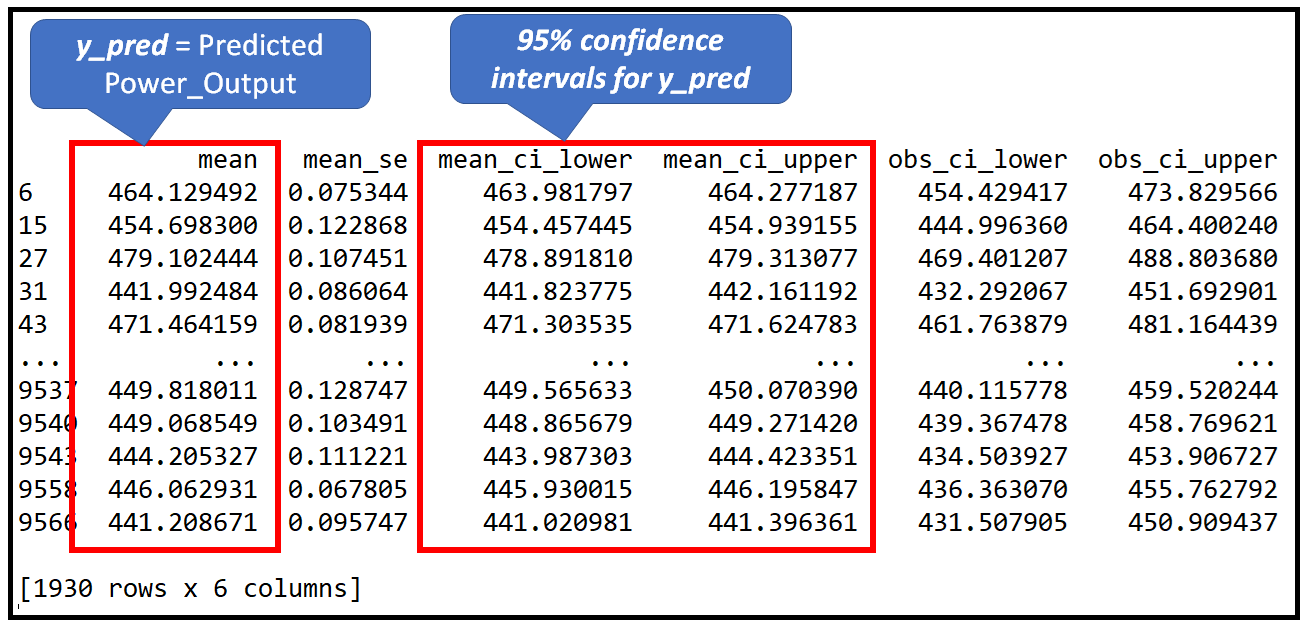

Next, let’s run the model on the test data set to get its predictions:

```python
olsr_predictions = olsr_results.get_prediction(X_test)
```

olsr_predictions is of type statsmodels.regression._prediction.PredictionResults, and the predictions can be obtained from its summary_frame() method:

```python
prediction_summary_frame = olsr_predictions.summary_frame()
print(prediction_summary_frame)
```

Let’s calculate the residual errors of regression ε = (y_test - y_pred):

```python
# Residual errors: observed values minus predicted (mean) values
resid = y_test['Power_Output'] - prediction_summary_frame['mean']
```

Finally, let’s plot resid against the predicted value y_pred = prediction_summary_frame['mean']:

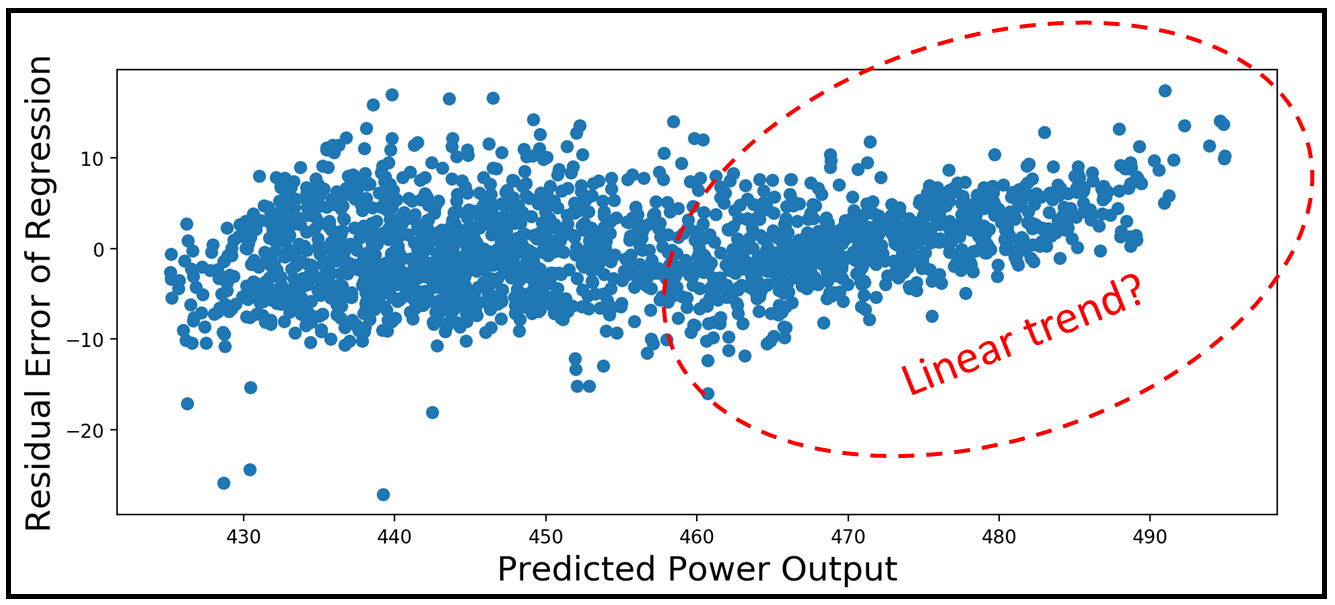

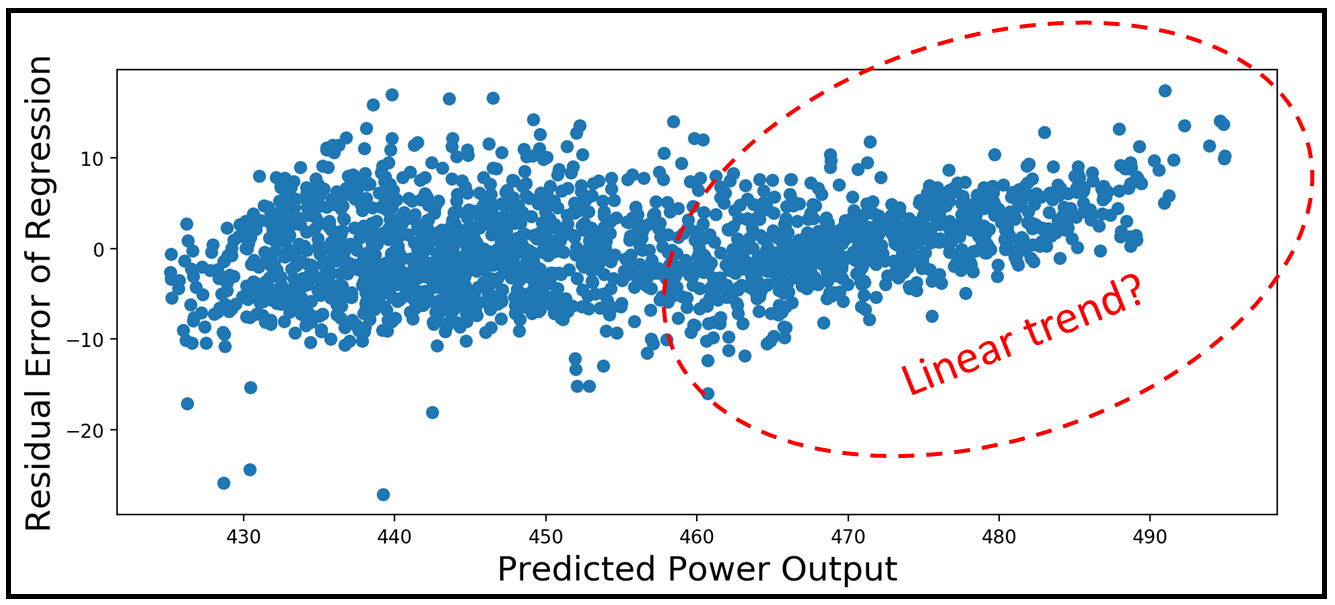

```python
plt.xlabel('Predicted Power Output', fontsize=18)
plt.ylabel('Residual Error of Regression', fontsize=18)
# Predicted values on the x-axis, residual errors on the y-axis
plt.scatter(prediction_summary_frame['mean'], resid)
plt.show()
```

We get the following plot:

One can see that the residuals are more or less pattern-less for smaller values of Power Output, but they seem to be showing a linear pattern at the higher end of the Power Output scale. It indicates that the model’s predictions at the higher end of the power output scale are less reliable than at the lower end of the scale.

Why should residual errors have identical distributions?

Identically distributed means that the residual error ε_i corresponding to the prediction for each data row has the same probability distribution. If the distribution of errors is not identical, one cannot reliably use tests of significance such as the F-test for regression analysis, or compute confidence intervals for the predictions. Many of these tests depend on the residual errors being identically and normally distributed. This brings us to the next assumption.

Assumption 3: Residual errors should be normally distributed

In the previous section, we saw how and why the residual errors of the regression are assumed to be independent, identically distributed (i.i.d.) random variables. Assumption 3 imposes an additional constraint. The errors should all have a normal distribution with a mean of zero. In statistical language:

$$\forall\, i \in \{1, \dots, n\}: \quad \varepsilon_i \sim N(0, \sigma^2)$$

This notation is read as follows:

For all i in the data set of length n rows, the i-th residual error of regression is a random variable that is normally distributed (that’s why the N() notation). This distribution has a mean of zero and a variance of σ². Furthermore, all ε_i have the same variance σ², i.e. they are identically distributed.

It is a common misconception that linear regression models require the explanatory variables and the response variable to be normally distributed.

More often than not, x_j and y will not even be identically distributed, let alone normally distributed.

In Linear Regression, normality is required only of the residual errors of the regression.

In fact, normality of residual errors is not even strictly required. Nothing will go horribly wrong with your regression model if the residual errors are not normally distributed. Normality is only a desirable property.

What normality tells you is that most of the prediction errors from your model are zero or close to zero, and that large errors are much less frequent than small errors.

What happens if the residual errors are not N(0, σ²) distributed?

If the residual errors of regression are not N(0, σ²) distributed, then statistical tests of significance that depend on the errors having an N(0, σ²) distribution simply stop working.

For example,

The F-statistic used by the F-test for regression analysis has the required F distribution only if the regression errors are N(0, σ²) distributed. If regression errors are not normally distributed, the F-test cannot be used to determine whether the model’s regression coefficients are jointly significant. You will then have to use some other test to figure out if your regression model did a better job than a straight line through the data set mean.

Similarly, the computation of t-values and confidence intervals assumes that regression errors are N(0, σ²) distributed. If the regression errors are not normally distributed, the t-values for the model’s coefficients and the model’s predictions become inaccurate, and you should not put too much faith in the confidence intervals for the coefficients or the predictions.

A special case of non-normality: bimodally distributed residual errors

Sometimes, one finds that the model’s residual errors have a bimodal distribution i.e. they have two peaks. This may point to a badly specified model or a crucial explanatory variable that is missing from the model.

For example, consider the following situation:

Your dependent variable is a binary variable such as Won (encoded as 1.0) or Lost (encoded as 0.0). But your linear regression model is going to generate predictions on the continuous real number scale. If the model generates most of its predictions along a narrow range of this scale around 0.5, e.g. 0.55, 0.58, 0.6, 0.61, etc., the regression errors will peak either on one side of zero (when the true value is 0) or on the other side of zero (when the true value is 1). This is a sign that your model is not able to decide whether the output should be 1 or 0, so it’s predicting a value that is around the average of 1 and 0.

This can happen if you are missing a key binary variable, known as an indicator variable, which influences the output value in the following way:

- When the variable’s value is 0, the output lies within a certain range, say close to 0.
- When the variable’s value is 1, the output takes on a whole new range of values that are not present in the earlier range, say around 1.0.

If this variable is missing in your model, the predicted value will average out between the two ranges, leading to two peaks in the regression errors. Once this variable is added, the model is well specified, and it will correctly differentiate between the two possible ranges of the response variable.
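The effect of a missing indicator variable is easy to reproduce on synthetic data. In the NumPy sketch below (all numbers are made-up assumptions), y depends on a continuous x and on a hypothetical 0/1 indicator d that shifts y by 5.0. Fitting y on x alone leaves the residuals in two separate clusters, roughly 2.5 below and 2.5 above zero, with almost none near zero. Adding d to the model collapses them into a single peak around zero.

```python
import numpy as np

rng = np.random.default_rng(5)
n = 2000
x = rng.uniform(0, 1, n)
d = rng.integers(0, 2, n)                    # hypothetical indicator variable: 0 or 1
y = 1.0 * x + 5.0 * d + rng.normal(0, 0.3, n)

def ols_resid(X, y):
    """Fit OLS by least squares and return the residual errors."""
    beta_hat, *_ = np.linalg.lstsq(X, y, rcond=None)
    return y - X @ beta_hat

ones = np.ones(n)
resid_missing = ols_resid(np.column_stack([ones, x]), y)    # indicator d left out
resid_full = ols_resid(np.column_stack([ones, x, d]), y)    # indicator d included

# Share of residuals near zero: tiny when d is missing (two separated peaks)
print(f"|resid| < 1, d missing:  {np.mean(np.abs(resid_missing) < 1.0):.3f}")
print(f"|resid| < 1, d included: {np.mean(np.abs(resid_full) < 1.0):.3f}")
```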

Related read: When Your Regression Model’s Errors Contain Two Peaks

How to test for normality of residual errors?

There are a number of tests of normality available. The easiest way to check for normality is to measure the skewness and the kurtosis of the distribution of residual errors.

The Skewness of a perfectly normal distribution is 0 and its kurtosis is 3.0.

Any departure, positive or negative, from these values indicates a departure from normality. It is of course impossible to get a perfectly normal distribution, so some departure from normality is expected. But how much is a ‘little’ departure? How can one judge whether the departure is significant?

Whether the departure is significant is answered by statistical tests of normality such as the Jarque-Bera test and the Omnibus test. The null hypothesis of these tests is that the data are normally distributed. A p-value ≤ 0.05 means that the null hypothesis can be rejected at a confidence level of ≥ 95%, i.e. the departure from normality is statistically significant. A larger p-value means the data give no significant evidence against normality.
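To make the test concrete, here is a minimal NumPy sketch of the Jarque-Bera statistic, JB = n/6 · (S² + (K − 3)²/4), where S is the sample skewness and K the plain (non-excess) sample kurtosis. Under the null hypothesis of normality, JB approximately follows a chi-squared distribution with 2 degrees of freedom, whose survival function is exactly exp(−x/2), so no statistics library is needed for the p-value. The two input samples are synthetic assumptions: one drawn from a normal distribution and one from a heavier-tailed Student’s t distribution with 5 degrees of freedom. The sketch is intended to mirror the four values that statsmodels’ `jarque_bera` reports below.

```python
import numpy as np

def jarque_bera(resid):
    """Return (JB statistic, p-value, skewness, kurtosis) for a 1-D sample.

    Kurtosis is the plain (non-excess) kurtosis, which is 3.0 for a normal
    distribution. The p-value uses the chi-squared distribution with 2 degrees
    of freedom, whose survival function is exactly exp(-x / 2).
    """
    e = np.asarray(resid, dtype=float)
    n = e.size
    d = e - e.mean()
    m2 = np.mean(d ** 2)
    skew = np.mean(d ** 3) / m2 ** 1.5
    kurt = np.mean(d ** 4) / m2 ** 2
    jb = n / 6.0 * (skew ** 2 + (kurt - 3.0) ** 2 / 4.0)
    return jb, np.exp(-jb / 2.0), skew, kurt

rng = np.random.default_rng(6)
normal_resid = rng.normal(0, 1, 5000)
heavy_tailed_resid = rng.standard_t(df=5, size=5000)   # heavier tails than a normal

jb_normal = jarque_bera(normal_resid)
jb_heavy = jarque_bera(heavy_tailed_resid)
print("normal:       JB = %.1f, p = %.3g, skew = %.3f, kurtosis = %.3f" % jb_normal)
print("heavy-tailed: JB = %.1f, p = %.3g, skew = %.3f, kurtosis = %.3f" % jb_heavy)
```

For the heavy-tailed sample the kurtosis is well above 3.0 and the p-value is essentially 0, so normality is rejected; for the normal sample the test finds no significant departure.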

Let’s run the Jarque-Bera normality test on the linear regression model that we have trained on the Power Plant data set. Recollect that the residual errors were stored in the variable resid and they were obtained by running the model on the test data and by subtracting the predicted value y_pred from the observed value y_test.

```python
from statsmodels.compat import lzip
import statsmodels.stats.api as sms

name = ['Jarque-Bera test', 'Chi-squared(2) p-value', 'Skewness', 'Kurtosis']

# Run the Jarque-Bera test for normality on the residuals vector
test = sms.jarque_bera(resid)

# Print out the test results. This also prints the skewness and kurtosis of the resid vector
print(lzip(name, test))
```

This prints out the following:

```
[('Jarque-Bera test', 1863.1641805048084), ('Chi-squared(2) p-value', 0.0), ('Skewness', -0.22883430693578996), ('Kurtosis', 5.37590904238288)]
```

The skewness of the residual errors is -0.23 and their kurtosis is 5.38. With a p-value of 0.0, the Jarque-Bera test judges these values to be different from 0.0 and 3.0 in a statistically significant manner, so, strictly speaking, the residuals of the linear regression model are not normally distributed. The skew is small; the departure comes mainly from the kurtosis, which indicates heavier tails than a normal distribution. Since normality is only a desirable property, this is not fatal: as the histogram below shows, the residuals are still roughly bell-shaped.

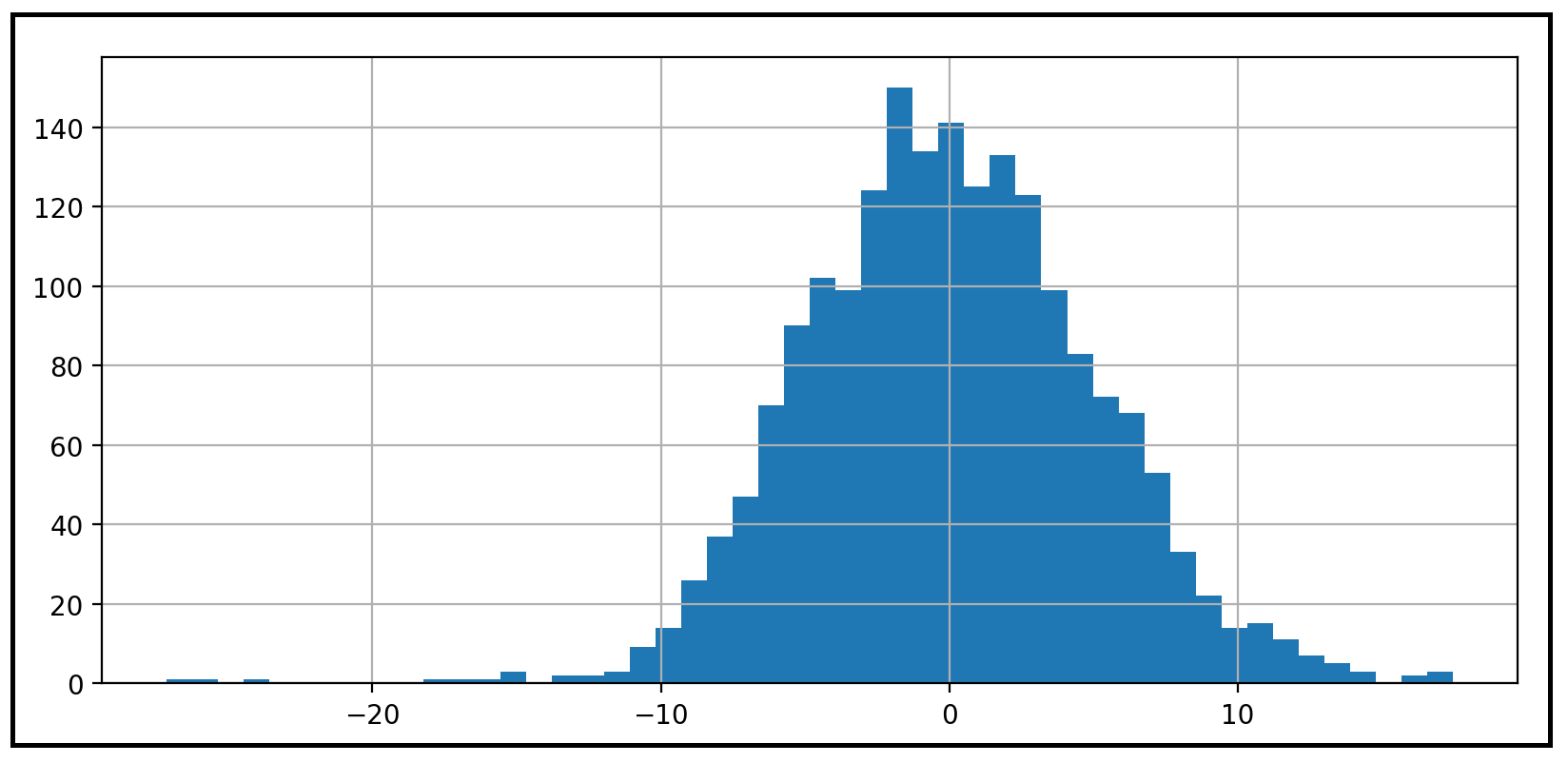

Let’s plot the frequency distribution of the residual errors:

```python
# Histogram of the residual errors
resid.hist(bins=50)
plt.show()
```

We get the following histogram, showing that the residual errors are roughly bell-shaped, i.e. approximately normally distributed:

Related read: Testing for Normality using Skewness and Kurtosis, for an in-depth explanation of Normality and statistical tests of normality.

Related read: When Your Regression Model’s Errors Contain Two Peaks: A Python tutorial on dealing with bimodal residuals.

Assumption 4: Residual errors should be homoscedastic

In the previous section we saw why the residual errors should be N(0, σ²) distributed, i.e. normally distributed with mean zero and variance σ². In this section we impose an additional constraint on them: the variance σ² should be constant. Particularly, σ² should not be a function of the response variable y, and thereby indirectly the explanatory variables X.

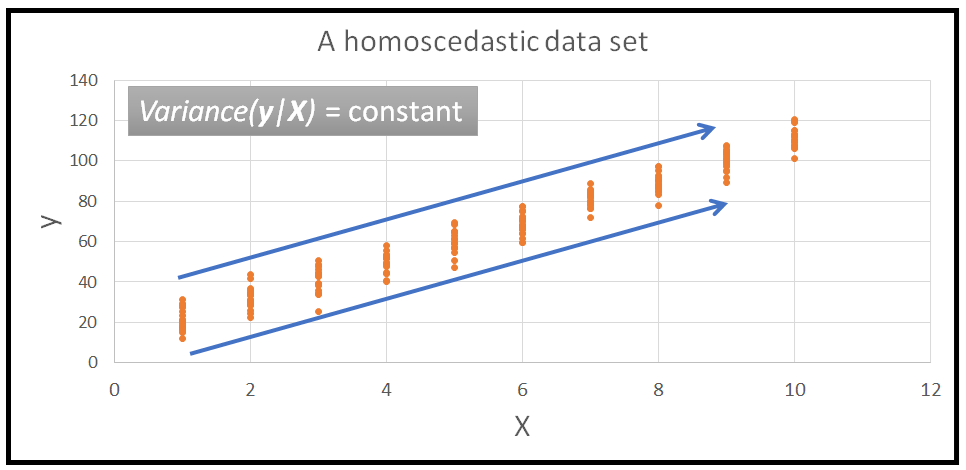

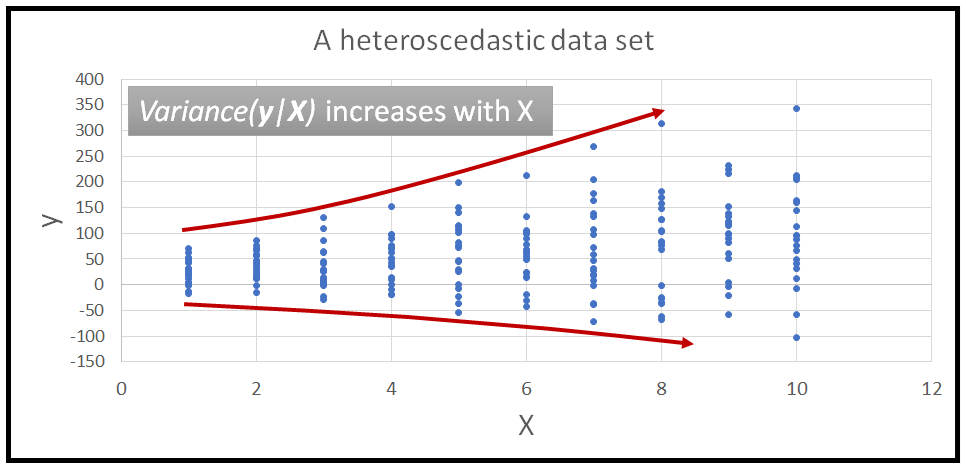

The property of a data set to have constant variance is called homoscedasticity. Its opposite, where the variance is a function of the explanatory variables X, is called heteroscedasticity.

Here is an illustration of a data set showing homoscedastic variance:

And here’s one that displays a heteroscedastic variance:

While talking about homoscedastic or heteroscedastic variances, we always consider the conditional variance: Var(y|X=x_i), or Var(ε|X=x_i). This is read as the variance of y, or the variance of the residual errors ε, for a certain value X=x_i.
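In practice, Var(ε|X=x_i) can be approximated by grouping observations with similar values of x and computing the variance of ε within each group. The NumPy sketch below does this on synthetic errors (an assumption for illustration): one set with a constant standard deviation of 2.0, and one whose standard deviation grows in proportion to x. To keep it short, it uses the simulated errors directly rather than the residuals of a fitted model.

```python
import numpy as np

rng = np.random.default_rng(7)
n = 5000
x = rng.uniform(1, 10, n)

eps_homo = rng.normal(0, 2.0, n)        # constant standard deviation
eps_hetero = rng.normal(0, 0.5 * x)     # standard deviation proportional to x

# Three equal-width bins of x: [1, 4), [4, 7), [7, 10]
idx = np.digitize(x, [4.0, 7.0])        # bin index 0, 1 or 2 for each observation

cond_var = {}
for name, eps in [("homoscedastic", eps_homo), ("heteroscedastic", eps_hetero)]:
    # The variance of the errors within each bin approximates Var(eps | X = x)
    cond_var[name] = np.array([eps[idx == b].var() for b in range(3)])
    print(f"{name:16s}", np.round(cond_var[name], 2))
```

The homoscedastic errors give roughly the same variance in every bin, while the heteroscedastic ones give a variance that climbs steeply from the low-x bin to the high-x bin.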

Related read: Three Conditionals Every Data Scientist Should Know: Conditional expectation, conditional probability & conditional variance: practical insights for regression modelers

Why do we want the residual errors to be homoscedastic?

The immediate consequence of residual errors having a variance that is a function of y (and so X) is that the residual errors are no longer identically distributed. The variance of ε for each X=x_i will be different, thereby leading to non-identical probability distributions for each ε_i in ε.

We have seen that if the residual errors are not identically distributed, we cannot use tests of significance such as the F-test for regression analysis, or compute confidence intervals for the regression model’s coefficients or the model’s predictions. Many of these tests depend on the residual errors being independent, identically distributed random variables.

What can cause residual errors to be heteroscedastic?

Heteroscedastic errors frequently occur when a linear model is fitted to data in which the fluctuation in the response variable y is some function of the current value of y, e.g. a percentage of the current value of y. Such data sets commonly occur in the monetary domain. An example is where the absolute amount of variation in a company’s stock price is proportional to the current stock price. Another example is seasonal variation in the sales of some product being proportional to the sales level.

Heteroscedasticity can also be introduced by errors in the data gathering process. For example, if the measuring instrument introduces a noise in the measured value that is proportional to the measured value, the measurements will contain heteroscedastic variance.

Heteroscedasticity can also be introduced into the model’s errors simply by using the wrong kind of model for the data set, or by leaving out important explanatory variables.

How to fix heteroscedasticity in the model’s residual errors?

There are three main approaches to dealing with heteroscedastic errors:

- Transform the dependent variable so as to linearize it and dampen down the heteroscedastic variance. Commonly used transforms are log(y) and square-root(y).
- Identify important variables that may be missing from the model and are causing the variance in the errors to develop a pattern, and add those variables into the model. Alternatively, stop using the linear model and switch to a completely different model such as a Generalized Linear Model or a neural net model.
- Simply accept the heteroscedasticity present in the residual errors.
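As a minimal sketch of the first approach, the NumPy example below assumes hypothetical data whose noise is multiplicative, i.e. a percentage of the current level of y, as in the monetary examples above. It fits OLS once on y and once on log(y), and compares the spread (standard deviation) of the residuals for x > 5 with the spread for x ≤ 5. A ratio close to 1 indicates roughly constant variance.

```python
import numpy as np

rng = np.random.default_rng(8)
n = 2000
x = rng.uniform(0, 10, n)
# Hypothetical data: the noise is multiplicative, so its size grows with the level of y
y = 5.0 * np.exp(0.15 * x) * np.exp(rng.normal(0, 0.2, n))

X = np.column_stack([np.ones(n), x])   # intercept + x

def ols_resid(X, target):
    """Fit OLS by least squares and return the residual errors."""
    beta_hat, *_ = np.linalg.lstsq(X, target, rcond=None)
    return target - X @ beta_hat

def spread_ratio(resid, x):
    """Residual std for x > 5 divided by residual std for x <= 5."""
    return resid[x > 5].std() / resid[x <= 5].std()

ratio_raw = spread_ratio(ols_resid(X, y), x)          # model fitted on y
ratio_log = spread_ratio(ols_resid(X, np.log(y)), x)  # model fitted on log(y)
print(f"spread ratio on y:      {ratio_raw:.2f}")   # well above 1: heteroscedastic
print(f"spread ratio on log(y): {ratio_log:.2f}")   # close to 1: roughly homoscedastic
```

Under this assumed data-generating process, taking logs turns y = 5·e^{0.15x}·e^{noise} into log(y) = log(5) + 0.15x + noise, which is both linear in x and has constant noise variance. The trade-off is that predictions must be transformed back with exp(), and the coefficients are interpreted on the log scale.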

How to detect heteroscedasticity in the residual errors?

There are several tests of homoscedasticity available, for example the Breusch-Pagan test, the Goldfeld-Quandt test and the White test.

Testing for heteroscedastic variance using Python

Let’s test the model’s residual errors for heteroscedastic variance by using the White test. We’ll use the errors from the linear model we built earlier for predicting the power plant’s output.

The White test for heteroscedasticity uses the following line of reasoning to detect heteroscedasticity:

If the residual errors ε are heteroscedastic, their variance can be ‘explained’ by y, and therefore by a combination of the model’s explanatory variables X, their squares (X²) and their cross-products (X × X).

Therefore, when an auxiliary linear model is fitted with the squared errors ε² as the response and (X, X², X × X) as the explanatory variables, it is expected that the auxiliary model will be able to explain at least some of the relationship that is assumed to be present between the errors ε and X.

If we run the F-test for regression on the auxiliary model, and the F-test returns a p-value that is ≤ 0.05, we reject the F-test’s null hypothesis in favor of its alternative hypothesis that the auxiliary model’s coefficients are jointly significant. Hence the fitted auxiliary model is indeed able to capture a meaningful relationship between the residual errors ε of the primary model and the model’s explanatory variables X. This leads us to conclude that the residual errors ε of the primary model are heteroscedastic.

On the other hand, if the F-test returns a p-value that is > 0.05, then we cannot reject the F-test’s null hypothesis that there is no meaningful relationship between the residual errors ε of the primary model and the model’s explanatory variables X. Thus, we can treat the residual errors ε of the primary model as homoscedastic.
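Here is a minimal NumPy sketch of this reasoning for the special case of a single explanatory variable x, on synthetic data (an assumption for illustration). It computes the Lagrange Multiplier (LM) form of the White test, LM = n · R² of the auxiliary regression of ε² on (1, x, x²); with one regressor there are no cross-products. Under the null hypothesis of homoscedasticity, LM approximately follows a chi-squared distribution with 2 degrees of freedom here, whose survival function is exp(−LM/2). This should correspond to the LM statistic that statsmodels’ `het_white` reports as its first return value; the F-statistic version is not reproduced in this sketch.

```python
import numpy as np

def white_test_lm(resid, x):
    """White test (LM form) for a model with a single explanatory variable x.

    Auxiliary regression: resid**2 on [1, x, x**2]. Returns (LM, p-value), where
    LM = n * R^2 of the auxiliary regression and the p-value comes from a
    chi-squared distribution with 2 degrees of freedom: P(chi2_2 > LM) = exp(-LM / 2).
    """
    e2 = np.asarray(resid, dtype=float) ** 2
    Z = np.column_stack([np.ones_like(x), x, x ** 2])
    coef, *_ = np.linalg.lstsq(Z, e2, rcond=None)
    aux_resid = e2 - Z @ coef
    r2 = 1.0 - aux_resid.var() / e2.var()
    lm = e2.size * r2
    return lm, np.exp(-lm / 2.0)

rng = np.random.default_rng(9)
n = 1000
x = rng.uniform(1, 10, n)
X = np.column_stack([np.ones(n), x])   # primary model: intercept + x

results = {}
for label, noise_sd in [("homoscedastic", np.full(n, 2.0)), ("heteroscedastic", 0.5 * x)]:
    y = 3.0 + 2.0 * x + rng.normal(0, noise_sd)
    beta_hat, *_ = np.linalg.lstsq(X, y, rcond=None)   # fit the primary model
    results[label] = white_test_lm(y - X @ beta_hat, x)
    print(f"{label}: LM = {results[label][0]:.1f}, p-value = {results[label][1]:.3g}")
```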

Let’s run the White test on the residual errors that we got earlier from running the fitted Power Plant Output model on the test data set. These residual errors are stored in the variable resid.

```python
from statsmodels.stats.diagnostic import het_white
from statsmodels.compat import lzip

keys = ['Lagrange Multiplier statistic:', 'LM test\'s p-value:', 'F-statistic:', 'F-test\'s p-value:']

# Run the White test. X_test includes the Intercept column added by Patsy.
results = het_white(resid, X_test)

# Print the values of the two test statistics and the corresponding p-values
print(lzip(keys, results))
```

We see the following output:

```
[('Lagrange Multiplier statistic:', 33.898672268600926), ("LM test's p-value:", 2.4941917488321856e-06), ('F-statistic:', 6.879489454587562), ("F-test's p-value:", 2.2534296887344e-06)]
```

You can see that the F-test for regression has returned a p-value of 2.25e-06, which is much smaller than even 0.01.

So, at a 99% confidence level, we can say that the auxiliary model used by the White test was able to explain a meaningful relationship between the residual errors resid of the primary model and the primary model’s explanatory variables (in this case X_test).

So we reject the F-test’s null hypothesis that the residual errors of the Power Plant Output model are homoscedastic, and accept the alternative hypothesis that the residual errors of the model are heteroscedastic.

Recollect that we had seen the following linear pattern of sorts in the plot of residual errors versus the predicted value y_pred:

From this plot, we should have expected the residual errors of our linear model to be heteroscedastic. The White test just confirmed this expectation!

Related read: Heteroscedasticity is nothing to be afraid of, for an in-depth look at heteroscedasticity and its consequences.

Further reading: Robust Linear Regression Models for Nonlinear, Heteroscedastic Data: A step-by-step tutorial in Python

Summary

The Ordinary Least Squares regression model (a.k.a. the linear regression model) is a simple and powerful model that can be used on many real world data sets.

The OLSR model is based on strong theoretical foundations. Its predictions are explainable and defensible.

To get the most out of an OLSR model, we need to make and verify the following four assumptions:

The response variable y should be linearly related to the explanatory variables X.

The residual errors of regression should be independent, identically distributed random variables.

The residual errors should be normally distributed.

The residual errors should have constant variance, i.e. they should be homoscedastic.

Citations and Copyrights

Combined Cycle Power Plant Data Set: downloaded from the UCI Machine Learning Repository and used under the following citation requests:

Pınar Tüfekci, Prediction of full load electrical power output of a base load operated combined cycle power plant using machine learning methods, International Journal of Electrical Power & Energy Systems, Volume 60, September 2014, Pages 126–140, ISSN 0142–0615, [Web Link],

Heysem Kaya, Pınar Tüfekci, Sadık Fikret Gürgen: Local and Global Learning Methods for Predicting Power of a Combined Gas & Steam Turbine, Proceedings of the International Conference on Emerging Trends in Computer and Electronics Engineering ICETCEE 2012, pp. 13–18 (Mar. 2012, Dubai)

Translated from: https://towardsdatascience.com/assumptions-of-linear-regression-5d87c347140