
[Convolutional Neural Network Example: Digital Image Classification]


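Digital Image Classification with VGG19

Image classification with the VGG19 network model:

- Input: a digital image (for example, a picture of a cat or a dog)

- Output: the ImageNet class index of the cat, dog, etc. shown in the image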

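Introduction to VGGNet

1. Basic Concepts

VGGNet is a deep convolutional neural network developed by the Visual Geometry Group at the University of Oxford together with Google DeepMind. It won first place in the localization task and second place in the classification task of the 2014 ImageNet challenge. Its main strength is good generalization: it transfers easily to other image recognition tasks, and its pretrained parameters can be downloaded and used as a good weight initialization. Many convolutional networks build on it, for example FCN, UNet and SegNet. VGG comes in many versions. The most commonly used are VGG16 and VGG19.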

2. Network Structure

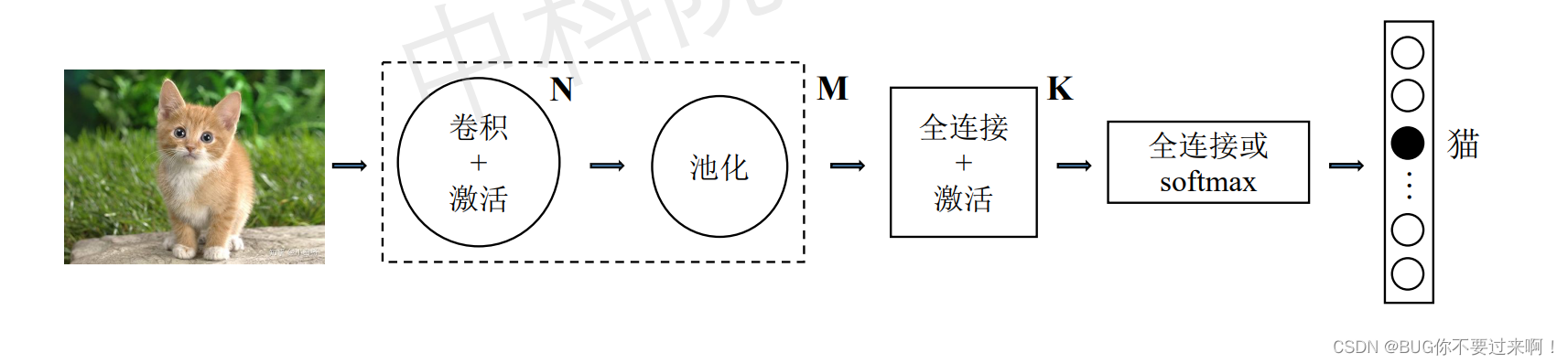

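(1) Common convolutional neural network structure

A common convolutional neural network structure is shown in the figure. Each convolutional layer is followed by an activation function such as ReLU. Every N convolutional layers are usually followed by a max pooling layer (average pooling is also used). After this combination of convolution and pooling has been repeated M times, the extracted convolutional features pass through K fully connected layers that map them to a number of output features. A final fully connected layer or Softmax layer then determines the output. The fully connected layer, the ReLU activation function and the Softmax layer were introduced in the experiment of Section 2.1. This section introduces the basic units that are new in this experiment: the convolutional layer and the max pooling layer.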

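(2) Convolutional layer

Like a fully connected layer, a convolutional layer has weights (the convolution kernel parameters) and biases. All convolutions in VGG19 operate on multiple input and output feature maps.

Suppose the input feature map X has dimensions N × Cin × Hin × Win:

- N is the number of input samples (N = 1 in this experiment).
- Cin is the number of input channels.
- Hin and Win are the height and width of the input feature map.

The kernel tensor W is a 4-D array of dimensions Cin × K × K × Cout, where K × K is the kernel height × width and Cout is the number of output channels. The bias b is a 1-D vector of length Cout. We also define the padding size p and the stride s.

The output feature map Y is computed as the inner product of the input X with the kernel W plus the bias b. Y has dimensions N × Cout × Hout × Wout, where Hout and Wout are the height and width of the output feature map.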
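Here is a minimal NumPy sketch of the computation just described. It assumes two things:

- the Cin × K × K × Cout weight layout above;
- the usual output-size formula Hout = ⌊(Hin + 2p − K) / s⌋ + 1, which the text does not state explicitly.

The sketch takes the inner product of every K × K window of the padded input with each kernel, then adds the bias. The vectorized `sliding_window_view` (NumPy 1.20 or later) plus `np.einsum` formulation is an illustrative choice, not the lab's method. The lab code later in this article uses explicit loops instead.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view  # requires NumPy >= 1.20


def conv_output_size(in_size, kernel_size, padding, stride):
    # Hout = floor((Hin + 2p - K) / s) + 1
    return (in_size + 2 * padding - kernel_size) // stride + 1


def conv2d(x, w, b, padding, stride):
    """Multi-channel convolution (sketch).

    x: [N, Cin, Hin, Win] input feature map
    w: [Cin, K, K, Cout] kernel, the layout used in this article
    b: [Cout] bias
    returns y: [N, Cout, Hout, Wout]
    """
    k = w.shape[1]
    # Zero-pad only the height and width axes
    x_pad = np.pad(x, ((0, 0), (0, 0), (padding, padding), (padding, padding)))
    # Every K x K window: [N, Cin, Hp-K+1, Wp-K+1, K, K]; keep every s-th window
    windows = sliding_window_view(x_pad, (k, k), axis=(2, 3))[:, :, ::stride, ::stride]
    # Inner product over (Cin, K, K) for each output channel, then add the bias
    y = np.einsum('nchwij,cijo->nohw', windows, w)
    return y + b[None, :, None, None]


# VGG19's 3x3 convolutions with padding 1 and stride 1 keep the spatial size
print(conv_output_size(224, 3, 1, 1))  # 224
```

For VGG19, Hout = (224 + 2 − 3) / 1 + 1 = 224, so the convolutional layers never change the height and width.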

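(3) Max pooling layer

To reduce the amount of computation, CNNs use another effective tool called "pooling". Pooling shrinks the input image, reducing the pixel information and keeping only the important information.

Pooling is a form of downsampling. Its purpose is to reduce the size of the feature map.

Suppose the input feature map X of the max pooling layer has dimensions N × C × Hin × Win:

- N is the number of input samples (N = 1 in this experiment).
- C is the number of input channels.
- Hin and Win are the height and width of the input feature map.

The pooling window has height and width K, and the pooling stride is s. The output feature map Y has dimensions N × C × Hout × Wout, where Hout and Wout are its height and width.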

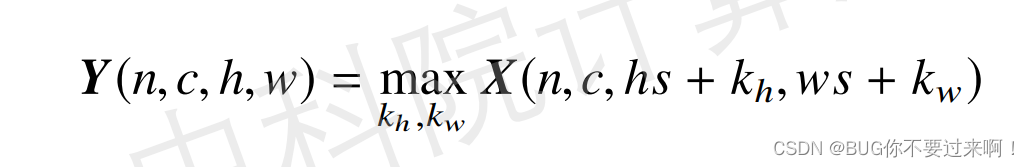

In the forward pass, each value of the output feature map Y is the maximum over the corresponding pooling window of the input feature map X:

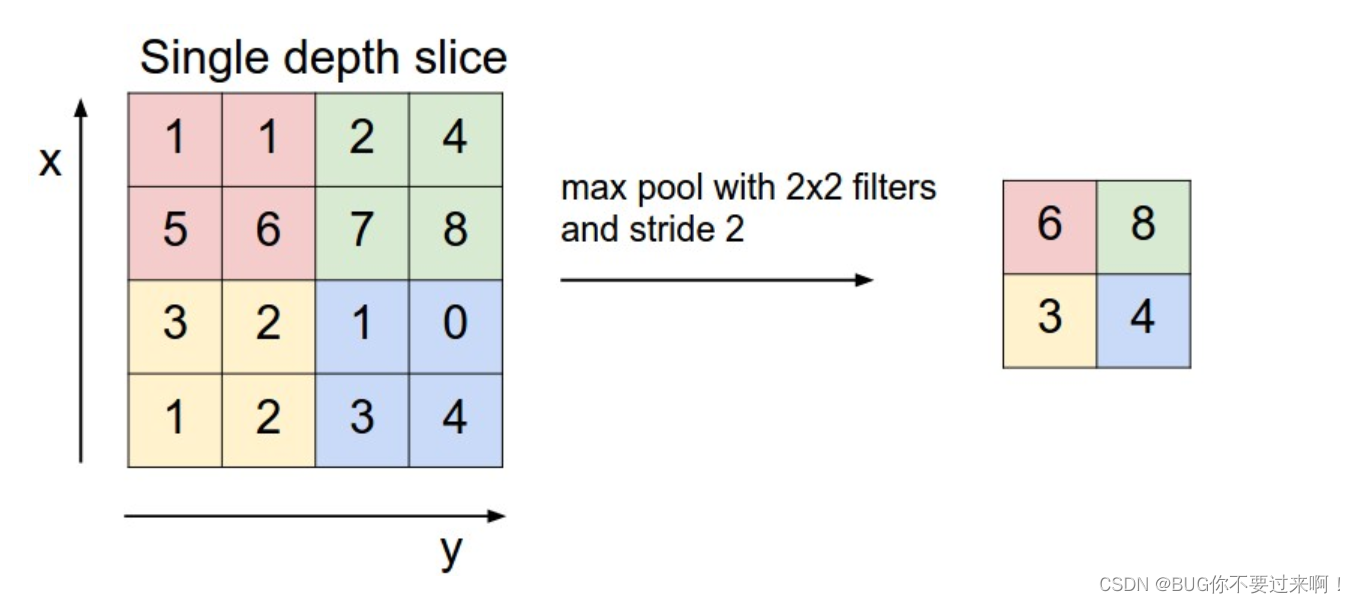

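Pooling window and stride

The pooling window is usually 2 × 2 with a stride of 2. A convolutional layer performs convolution; a pooling layer instead usually performs one of the following operations:

- Max pooling: take the maximum of the 4 points. This is the most commonly used pooling method.

- Mean pooling: take the mean of the 4 points.

- Gaussian pooling: borrows the idea of Gaussian blur. Rarely used.

- Trainable pooling: a trained function f takes the 4 points as input and outputs 1 point. Rarely used.

Example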

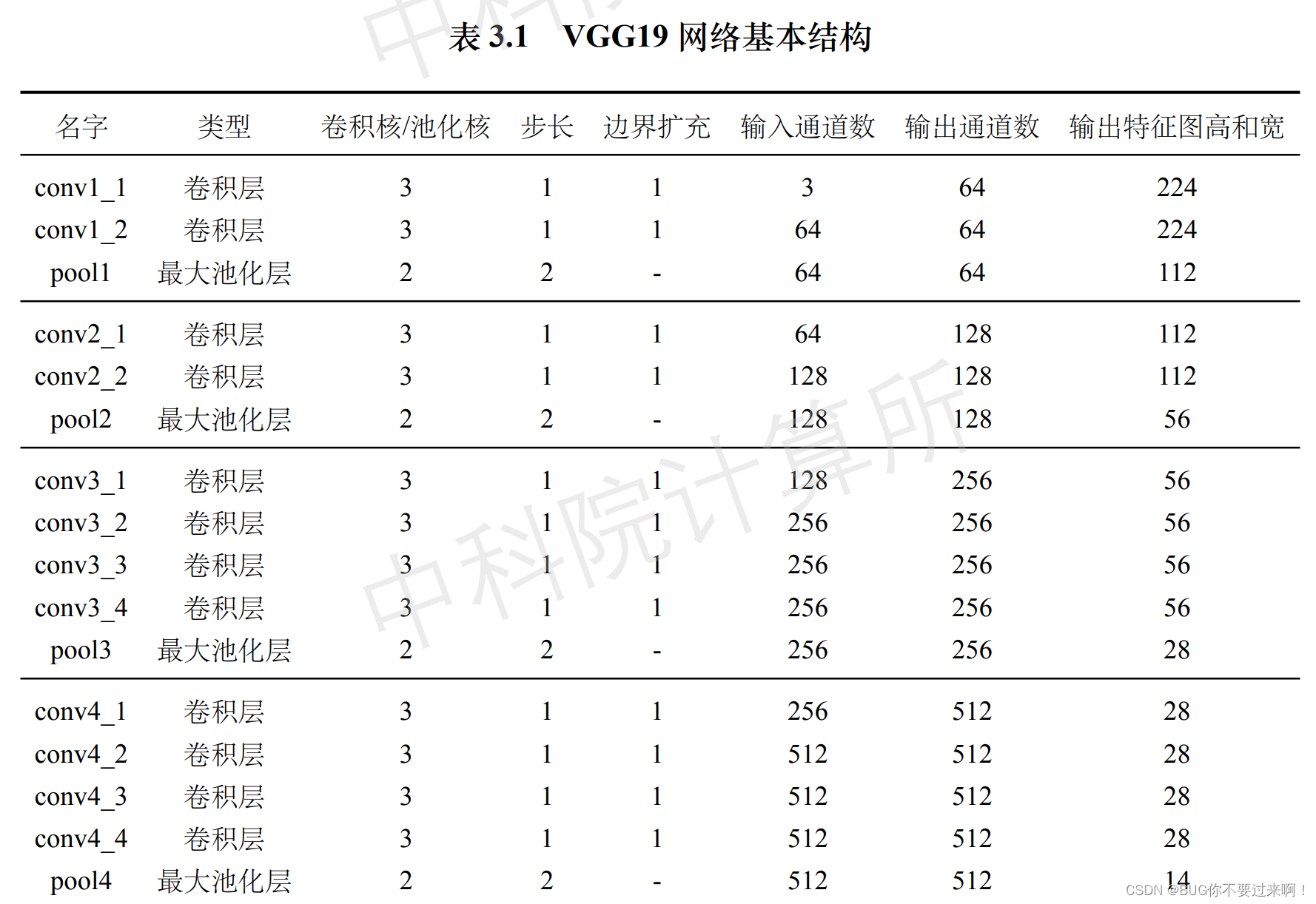

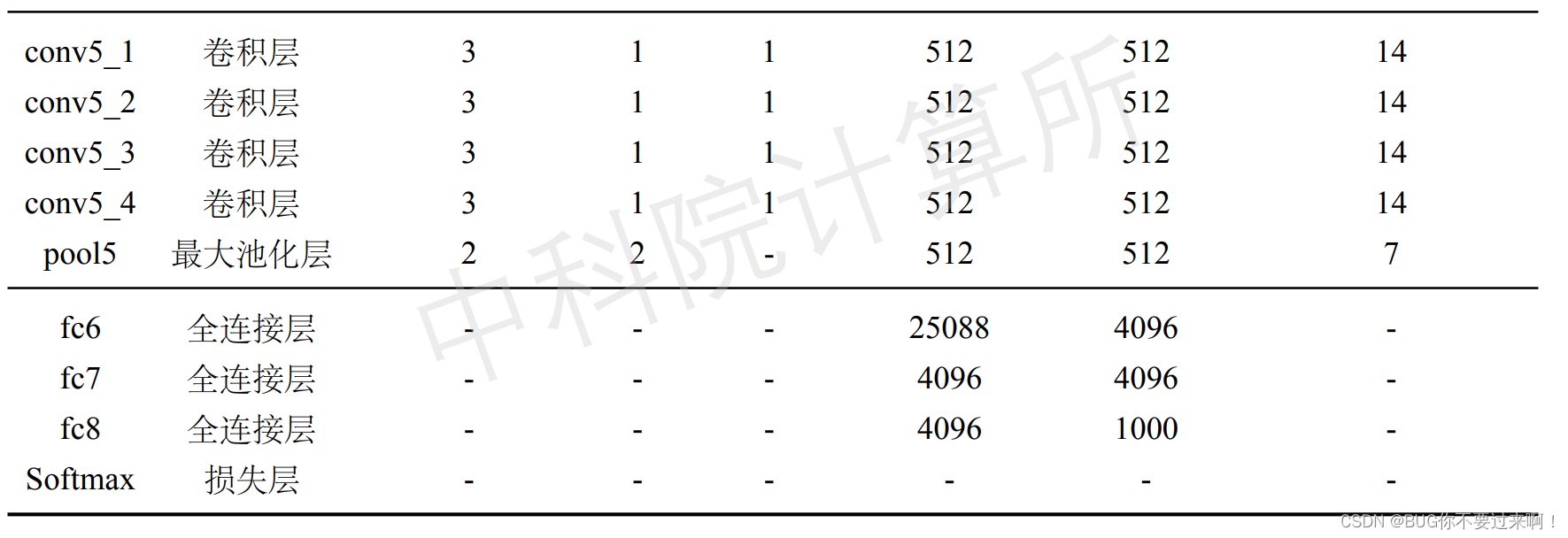
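To make the 2 × 2, stride 2 case concrete, here is a small NumPy sketch of max pooling and mean pooling. It makes two assumptions, both true for every pooling layer in VGG19 (inputs of 224, 112, 56, 28 and 14):

- the window size equals the stride;
- H and W are divisible by the window size.

Under these assumptions a single `reshape` exposes each window. The general case would need a loop or a sliding-window view.

```python
import numpy as np


def pool_output_size(in_size, kernel_size, stride):
    # Hout = floor((Hin - K) / s) + 1 (no padding)
    return (in_size - kernel_size) // stride + 1


def pool2d(x, k=2, mode='max'):
    """Non-overlapping k x k pooling with stride k on x: [N, C, H, W].

    Assumes H and W are divisible by k.
    """
    n, c, h, w = x.shape
    assert h % k == 0 and w % k == 0
    # [N, C, H/k, k, W/k, k]: axes 3 and 5 index positions inside each window
    windows = x.reshape(n, c, h // k, k, w // k, k)
    if mode == 'max':
        return windows.max(axis=(3, 5))   # max pooling: largest of the k*k points
    return windows.mean(axis=(3, 5))      # mean pooling: average of the k*k points


x = np.arange(16, dtype=float).reshape(1, 1, 4, 4)
max_out = pool2d(x, 2, 'max')     # channel 0 values: [[5, 7], [13, 15]]
mean_out = pool2d(x, 2, 'mean')   # channel 0 values: [[2.5, 4.5], [10.5, 12.5]]
```

Each output value summarizes one 2 × 2 block, so a 4 × 4 map becomes 2 × 2. Likewise, a 224 × 224 map becomes 112 × 112.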

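3. VGG19 Network Structure

VGG19 is a classic deep convolutional neural network. As shown in the table, it has 16 convolutional layers grouped into 5 stages, plus 3 fully connected layers. The first 2 stages have 2 convolutional layers each, and the last 3 stages have 4 each.

Every convolutional layer uses a 3 × 3 kernel with padding 1 and stride 1, so the height and width of the feature map do not change.

The number of channels changes from stage to stage:

- In the first convolutional layer of each stage, the number of input channels equals the number of output channels of the previous convolutional layer. For the first stage, it is the number of channels of the original image.
- The output channel counts of the 5 stages are 64, 128, 256, 512 and 512.
- Within each stage, every convolutional layer except the first has the same number of input and output channels.

Every convolutional layer is followed by a ReLU activation layer. Every stage ends with a max pooling layer that halves the height and width of the feature map. The first 2 of the 3 fully connected layers are also followed by ReLU layers.

Note that the output of the fifth stage is reshaped from a 4-D feature map into a 2-D matrix, which is the input to the fully connected layers. The network ends with a Softmax layer that computes the class probabilities.

The full hyperparameter configuration of VGG19 is given in Table 3.1. The table omits the ReLU layers that follow the convolutional and fully connected layers.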
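The stage pattern just described can be generated programmatically: [convolution, ReLU] pairs followed by one max pooling layer, repeated over 5 stages, then the fully connected part. The two helpers below are hypothetical and do not appear in the lab code.

- `make_vgg_layer_names` rebuilds the layer-name sequence that the `VGG19` class later in this article hard-codes in `param_layer_name`, including the flatten layer.
- `trace_shapes` follows the feature-map shape through the stages, assuming a 224 × 224 input.

```python
def make_vgg_layer_names(convs_per_stage=(2, 2, 4, 4, 4), num_fc=3):
    """Hypothetical helper: VGG-style layer names.

    Stage i contributes conv{i}_{j}/relu{i}_{j} pairs and pool{i}; then come
    flatten, num_fc fully connected layers (all but the last followed by ReLU)
    and softmax.
    """
    names = []
    for stage, n_conv in enumerate(convs_per_stage, start=1):
        for j in range(1, n_conv + 1):
            names += ['conv%d_%d' % (stage, j), 'relu%d_%d' % (stage, j)]
        names.append('pool%d' % stage)
    names.append('flatten')
    first_fc = len(convs_per_stage) + 1  # VGG19 numbers them fc6, fc7, fc8
    for k in range(num_fc):
        names.append('fc%d' % (first_fc + k))
        if k < num_fc - 1:
            names.append('relu%d' % (first_fc + k))
    names.append('softmax')
    return tuple(names)


def trace_shapes(in_hw=224, stage_channels=(64, 128, 256, 512, 512)):
    """Hypothetical helper: feature-map shape (C, H, W) after each stage's pooling.

    3x3 / padding 1 / stride 1 convolutions keep H and W; each 2x2 / stride 2
    max pooling halves them.
    """
    shapes = []
    hw = in_hw
    for c_out in stage_channels:
        hw //= 2
        shapes.append((c_out, hw, hw))
    return shapes


print(trace_shapes()[-1])  # (512, 7, 7)
```

The last stage produces a 512 × 7 × 7 feature map. That is why the first fully connected layer takes 512 × 7 × 7 = 25088 inputs.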

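(1) Data loading module

This experiment uses images from the ImageNet dataset, which stores each RGB image as a compressed .jpg or .png file. Different images may have different sizes, so preprocessing takes three steps:

1. Resize. To give the network inputs of a uniform size, each image is resized to 224 × 224 after it is read, and stored in a matrix.
2. Normalize. The input is normalized from the range [0, 255] to zero mean, which improves the training speed and stability of the network. Concretely, the ImageNet pixel mean is subtracted from every pixel value. This image mean is read together with the VGG19 model parameters. Using the mean shipped with the VGG19 model keeps the preprocessing consistent with how the official VGG19 network is used.
3. Convert the layout. The normalized image matrix is converted to the standard network input layout N × C × H × W:
   - N is the number of samples (N = 1, since images are read one at a time).
   - C is the number of input channels (C = 3, since the inputs are RGB color images).
   - H and W are the input height and width (both 224 after resizing).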

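```python
import numpy as np
from PIL import Image  # replaces scipy.misc.imread/imresize, which were removed in SciPy 1.2/1.3

# Method of class VGG19 (the full class appears below)
def load_image(self, image_dir):
    print('Loading and preprocessing image from ' + image_dir)
    # Read as RGB and resize to 224x224 (bilinear, the default of the old scipy.misc.imresize)
    image = Image.open(image_dir).convert('RGB').resize((224, 224), Image.BILINEAR)
    self.input_image = np.array(image).astype(np.float32)  # [height, width, channel]
    # Subtract the per-channel image mean read from the VGG19 model file
    self.input_image -= self.image_mean
    # Add the batch dimension: [1, height, width, channel]
    self.input_image = np.reshape(self.input_image, [1]+list(self.input_image.shape))
    # input dim [N, channel, height, width]
    self.input_image = np.transpose(self.input_image, [0, 3, 1, 2])
```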


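(2) Flatten layer

- Layer initialization: the flatten layer changes the dimensions of the feature map by flattening the features of each sample into a vector. When the flatten layer is initialized, the dimensions of its input and output feature maps must be specified.

- Forward pass: suppose the input feature map X has dimensions N × C × H × W, where N is the number of samples (N = 1 in this experiment), C is the number of input channels, and H and W are the height and width of the input feature map. After each sample's features are flattened into a vector, the output has dimensions N × (CHW).

  Note that MatConvNet [6], the deep learning platform used by the official VGG19 model, stores feature maps differently from this experiment. In MatConvNet the feature map dimensions are N × H × W × C, whereas here X has dimensions N × C × H × W. To avoid wrong results with the official model, the flatten layer first transposes the input feature map into the MatConvNet layout, and only then changes its dimensions.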

```python
import numpy as np

class FlattenLayer(object):
    def __init__(self, input_shape, output_shape):  # Initialize the flatten layer
        self.input_shape = input_shape
        self.output_shape = output_shape
        # Flattening only rearranges elements, so the element counts must match
        assert np.prod(self.input_shape) == np.prod(self.output_shape)
        print('\tFlatten layer with input shape %s, output shape %s.' % (str(self.input_shape), str(self.output_shape)))

    def forward(self, input):  # Forward pass
        assert list(input.shape[1:]) == list(self.input_shape)
        # matconvnet feature map dim: [N, height, width, channel]
        # ours feature map dim: [N, channel, height, width]
        self.input = np.transpose(input, [0, 2, 3, 1])
        self.output = self.input.reshape([self.input.shape[0]] + list(self.output_shape))
        # show_matrix is a debug-print helper that this article does not define;
        # remove this line if it is not available
        show_matrix(self.output, 'flatten out ')
        return self.output
```
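Why the transpose matters: flattening N × C × H × W directly puts every position of channel 0 first. Flattening after `np.transpose(input, [0, 2, 3, 1])` instead interleaves the channels at each pixel, which gives the H × W × C order of MatConvNet described above. The small standalone check below, independent of the lab code, shows where element X[n, c, h, w] ends up in each case.

```python
import numpy as np

N, C, H, W = 1, 2, 2, 3
x = np.arange(N * C * H * W).reshape(N, C, H, W)  # our layout: [N, C, H, W]

flat_chw = x.reshape(N, -1)                              # channel-major order
flat_hwc = np.transpose(x, [0, 2, 3, 1]).reshape(N, -1)  # what FlattenLayer does

print(flat_hwc[0].tolist())  # [0, 6, 1, 7, 2, 8, 3, 9, 4, 10, 5, 11]

# Position of x[n, c, h, w] in each flattened vector
c, h, w = 1, 0, 2
print(flat_chw[0, (c * H + h) * W + w] == x[0, c, h, w])  # True
print(flat_hwc[0, (h * W + w) * C + c] == x[0, c, h, w])  # True
```

The two vectors contain the same numbers in a different order. If `fc6`'s weights were laid out for the H × W × C order but fed the C × H × W order, every input would be multiplied by the wrong weight.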


(3) Convolutional and pooling layers

Both layers were explained above, so here is the code directly.

```python
import time
import numpy as np

# Convolutional layer
class ConvolutionalLayer(object):
    def __init__(self, kernel_size, channel_in, channel_out, padding, stride):
        # Initialize the convolutional layer
        self.kernel_size = kernel_size
        self.channel_in = channel_in
        self.channel_out = channel_out
        self.padding = padding
        self.stride = stride
        print('\tConvolutional layer with kernel size %d, input channel %d, output channel %d.' % (self.kernel_size, self.channel_in, self.channel_out))

    def init_param(self, std=0.01):  # Parameter initialization
        # Weight layout: [channel_in, kernel_size, kernel_size, channel_out]
        self.weight = np.random.normal(loc=0.0, scale=std, size=(self.channel_in, self.kernel_size, self.kernel_size, self.channel_out))
        self.bias = np.zeros([self.channel_out])

    def forward(self, input):  # Forward pass
        start_time = time.time()
        self.input = input  # [N, C, H, W]
        # Zero-pad the height and width by `padding` on each side
        height = self.input.shape[2] + self.padding * 2
        width = self.input.shape[3] + self.padding * 2
        self.input_pad = np.zeros([self.input.shape[0], self.input.shape[1], height, width])
        self.input_pad[:, :, self.padding:self.padding+self.input.shape[2], self.padding:self.padding+self.input.shape[3]] = self.input
        # Integer division: np.zeros() and range() need ints in Python 3
        height_out = (height - self.kernel_size) // self.stride + 1
        width_out = (width - self.kernel_size) // self.stride + 1
        self.output = np.zeros([self.input.shape[0], self.channel_out, height_out, width_out])
        for idxn in range(self.input.shape[0]):
            for idxc in range(self.channel_out):
                for idxh in range(height_out):
                    for idxw in range(width_out):
                        # Inner product of the K x K window (all input channels) with kernel idxc, plus bias
                        hs = idxh * self.stride
                        ws = idxw * self.stride
                        self.output[idxn, idxc, idxh, idxw] = np.sum(
                            self.input_pad[idxn, :, hs:hs+self.kernel_size, ws:ws+self.kernel_size]
                            * self.weight[:, :, :, idxc]) + self.bias[idxc]
        return self.output

    def load_param(self, weight, bias):  # Load parameters
        assert self.weight.shape == weight.shape
        assert self.bias.shape == bias.shape
        self.weight = weight
        self.bias = bias


# Max pooling layer
class MaxPoolingLayer(object):
    def __init__(self, kernel_size, stride):  # Initialize the max pooling layer
        self.kernel_size = kernel_size
        self.stride = stride
        print('\tMax pooling layer with kernel size %d, stride %d.' % (self.kernel_size, self.stride))

    # Named forward_raw in the original template; named forward here because
    # VGG19.forward() and the layer test call .forward()
    def forward(self, input):  # Forward pass
        start_time = time.time()
        self.input = input  # [N, C, H, W]
        self.max_index = np.zeros(self.input.shape)
        height_out = (self.input.shape[2] - self.kernel_size) // self.stride + 1
        width_out = (self.input.shape[3] - self.kernel_size) // self.stride + 1
        self.output = np.zeros([self.input.shape[0], self.input.shape[1], height_out, width_out])
        for idxn in range(self.input.shape[0]):
            for idxc in range(self.input.shape[1]):
                for idxh in range(height_out):
                    for idxw in range(width_out):
                        # Maximum over the pooling window
                        hs = idxh * self.stride
                        ws = idxw * self.stride
                        self.output[idxn, idxc, idxh, idxw] = np.max(
                            self.input[idxn, idxc, hs:hs+self.kernel_size, ws:ws+self.kernel_size])
        return self.output
```


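(4) Network structure module

- Network initialization: determine the network's hyperparameters. For convenience, this experiment only sets the name of each layer at initialization. Each layer's specific hyperparameters are set when the network structure is built.

- Building the network structure: define the topology of the whole network and set the hyperparameters of each layer. Then instantiate the layers defined in the basic unit modules and stack them to form VGG19, following the structure and per-layer hyperparameters in Table 3.1.
  - Every convolutional layer, and the first 2 of the 3 fully connected layers, is followed by a ReLU activation layer.
  - A flatten layer between pool5 and fc6 changes the dimensions of the feature map.
  - The network ends with a Softmax layer that computes the class probabilities.

- Parameter initialization: call the parameter initialization function of each layer that has parameters, in order. In VGG19 these are the 16 convolutional layers and the 3 fully connected layers, so their initialization functions are called one after another.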
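Here is a quick sketch of how many parameters those 19 layers hold. It assumes the layer shapes described above: 3 × 3 kernels, channel counts from 64 to 512, and fully connected layers of 25088 to 4096 to 4096 to 1000.

- Each convolutional layer has Cin·K·K·Cout weights plus Cout biases.
- Each fully connected layer has in·out weights plus out biases.

The helper names are illustrative only.

```python
def conv_params(c_in, c_out, k=3):
    # weights [Cin, K, K, Cout] + bias [Cout]
    return c_in * k * k * c_out + c_out


def fc_params(n_in, n_out):
    # weights [n_in, n_out] + bias [n_out]
    return n_in * n_out + n_out


# (Cin, Cout) of the 16 convolutional layers, stage by stage
conv_layers = ([(3, 64), (64, 64)] +
               [(64, 128), (128, 128)] +
               [(128, 256)] + [(256, 256)] * 3 +
               [(256, 512)] + [(512, 512)] * 3 +
               [(512, 512)] * 4)
fc_layers = [(512 * 7 * 7, 4096), (4096, 4096), (4096, 1000)]

n_conv = sum(conv_params(ci, co) for ci, co in conv_layers)
n_fc = sum(fc_params(ni, no) for ni, no in fc_layers)
print(len(conv_layers), len(fc_layers))  # 16 3
print(n_conv, n_fc, n_conv + n_fc)       # 20024384 123642856 143667240
```

About 86% of the parameters sit in the fully connected layers, and fc6 alone holds roughly 103 million. This is why loading the official model file takes noticeable time and memory.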

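```python
import time
import numpy as np
import scipy.io
from PIL import Image  # used by load_image
# Conv/MaxPooling/Flatten layers are defined above; FullyConnectedLayer, ReLULayer and SoftmaxLossLayer come from the Section 2.1 experiment

class VGG19(object):
    def __init__(self, param_path='../../imagenet-vgg-verydeep-19.mat'):
        self.param_path = param_path
        self.param_layer_name = (
            'conv1_1', 'relu1_1', 'conv1_2', 'relu1_2', 'pool1',
            'conv2_1', 'relu2_1', 'conv2_2', 'relu2_2', 'pool2',
            'conv3_1', 'relu3_1', 'conv3_2', 'relu3_2', 'conv3_3', 'relu3_3', 'conv3_4', 'relu3_4', 'pool3',
            'conv4_1', 'relu4_1', 'conv4_2', 'relu4_2', 'conv4_3', 'relu4_3', 'conv4_4', 'relu4_4', 'pool4',
            'conv5_1', 'relu5_1', 'conv5_2', 'relu5_2', 'conv5_3', 'relu5_3', 'conv5_4', 'relu5_4', 'pool5',
            'flatten', 'fc6', 'relu6', 'fc7', 'relu7', 'fc8', 'softmax'
        )

    def build_model(self):
        # Define the VGG19 structure; ConvolutionalLayer(kernel_size, channel_in, channel_out, padding, stride)
        print('Building vgg-19 model...')
        self.layers = {}
        self.layers['conv1_1'] = ConvolutionalLayer(3, 3, 64, 1, 1)
        self.layers['relu1_1'] = ReLULayer()
        self.layers['conv1_2'] = ConvolutionalLayer(3, 64, 64, 1, 1)
        self.layers['relu1_2'] = ReLULayer()
        self.layers['pool1'] = MaxPoolingLayer(2, 2)
        self.layers['conv2_1'] = ConvolutionalLayer(3, 64, 128, 1, 1)
        self.layers['relu2_1'] = ReLULayer()
        self.layers['conv2_2'] = ConvolutionalLayer(3, 128, 128, 1, 1)
        self.layers['relu2_2'] = ReLULayer()
        self.layers['pool2'] = MaxPoolingLayer(2, 2)
        self.layers['conv3_1'] = ConvolutionalLayer(3, 128, 256, 1, 1)
        self.layers['relu3_1'] = ReLULayer()
        self.layers['conv3_2'] = ConvolutionalLayer(3, 256, 256, 1, 1)
        self.layers['relu3_2'] = ReLULayer()
        self.layers['conv3_3'] = ConvolutionalLayer(3, 256, 256, 1, 1)
        self.layers['relu3_3'] = ReLULayer()
        self.layers['conv3_4'] = ConvolutionalLayer(3, 256, 256, 1, 1)
        self.layers['relu3_4'] = ReLULayer()
        self.layers['pool3'] = MaxPoolingLayer(2, 2)
        self.layers['conv4_1'] = ConvolutionalLayer(3, 256, 512, 1, 1)
        self.layers['relu4_1'] = ReLULayer()
        self.layers['conv4_2'] = ConvolutionalLayer(3, 512, 512, 1, 1)
        self.layers['relu4_2'] = ReLULayer()
        self.layers['conv4_3'] = ConvolutionalLayer(3, 512, 512, 1, 1)
        self.layers['relu4_3'] = ReLULayer()
        self.layers['conv4_4'] = ConvolutionalLayer(3, 512, 512, 1, 1)
        self.layers['relu4_4'] = ReLULayer()
        self.layers['pool4'] = MaxPoolingLayer(2, 2)
        self.layers['conv5_1'] = ConvolutionalLayer(3, 512, 512, 1, 1)
        self.layers['relu5_1'] = ReLULayer()
        self.layers['conv5_2'] = ConvolutionalLayer(3, 512, 512, 1, 1)
        self.layers['relu5_2'] = ReLULayer()
        self.layers['conv5_3'] = ConvolutionalLayer(3, 512, 512, 1, 1)
        self.layers['relu5_3'] = ReLULayer()
        self.layers['conv5_4'] = ConvolutionalLayer(3, 512, 512, 1, 1)
        self.layers['relu5_4'] = ReLULayer()
        self.layers['pool5'] = MaxPoolingLayer(2, 2)
        self.layers['flatten'] = FlattenLayer([512, 7, 7], [512*7*7])
        self.layers['fc6'] = FullyConnectedLayer(512*7*7, 4096)
        self.layers['relu6'] = ReLULayer()
        self.layers['fc7'] = FullyConnectedLayer(4096, 4096)
        self.layers['relu7'] = ReLULayer()
        self.layers['fc8'] = FullyConnectedLayer(4096, 1000)
        self.layers['softmax'] = SoftmaxLossLayer()
        self.update_layer_list = []
        for layer_name in self.layers.keys():
            if 'conv' in layer_name or 'fc' in layer_name:
                self.update_layer_list.append(layer_name)
```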


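(5) Network inference module

- Forward pass: the input to the forward pass is the preprocessed image.
  - The image is first fed into the first layer of VGG19.
  - Following the VGG19 structure defined earlier, the forward function of each layer is called in turn, and the output of each layer is the input of the next.
  - Because VGG19 has many layers, the forward pass can be written as a loop over the layer list defined at network initialization.

- Loading the network parameters: the official pretrained VGG19 parameters are loaded, in order, into the corresponding VGG19 layers. The official model used in this experiment can be downloaded from http://www.vlfeat.org/matconvnet/models/beta16/imagenet-vgg-verydeep-19.mat.
  - Only the convolutional and fully connected layers have parameters. Their weights and biases are read according to the layer index.
  - This experiment inserts a flatten layer between pool5 and fc6 to change the feature-map dimensions, but the official model has no flatten layer. The indices of fc6 and all later layers must therefore be offset by one when their parameters are read.
  - MatConvNet [6], the platform used by the official VGG19 model, stores convolution weights differently from this experiment. In MatConvNet the weight dimensions are H × W × Cin × Cout, whereas here they are Cin × H × W × Cout. To avoid wrong results, each convolution weight must be transposed when it is read, converting it from the MatConvNet layout into the layout used here.
  - The image mean used for preprocessing can also be read from this model file.

- Inference: this experiment only classifies a single image. Running the forward pass on the preprocessed image gives the VGG19 probabilities for the 1000 classes, and the class with the highest probability is taken as the prediction. In practice, you may need to classify every test image in a dataset in turn and compare the predictions with the test labels, which gives the classification accuracy on the test set.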
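The last step of inference (picking the most probable class) and the dataset-level accuracy mentioned above can be sketched as follows. `prob` is assumed to be an [N, num_classes] array of Softmax outputs, like the one returned by `forward()`. The top-k variant and both function names are illustrative additions, not part of the lab code.

```python
import numpy as np


def top_k(prob, k=5):
    """Indices of the k most probable classes for each row of prob [N, num_classes], best first."""
    return np.argsort(prob, axis=1)[:, ::-1][:, :k]


def accuracy(prob, labels, k=1):
    """Fraction of samples whose true label is among the top-k predictions."""
    preds = top_k(prob, k)
    return float(np.mean([labels[i] in preds[i] for i in range(len(labels))]))


prob = np.array([[0.1, 0.6, 0.3],
                 [0.5, 0.2, 0.3]])
labels = np.array([1, 2])
print(top_k(prob, 1)[:, 0])       # [1 0]  (same as np.argmax(prob, axis=1))
print(accuracy(prob, labels, 1))  # 0.5
print(accuracy(prob, labels, 2))  # 1.0
```

With k = 1 this reduces to the `np.argmax` used in `evaluate()`.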

```python
    # (continued) remaining methods of class VGG19
    def init_model(self):
        print('Initializing parameters of each layer in vgg-19...')
        for layer_name in self.update_layer_list:
            self.layers[layer_name].init_param()

    def load_model(self):
        print('Loading parameters from file ' + self.param_path)
        params = scipy.io.loadmat(self.param_path)
        # Per-channel image mean used for preprocessing
        self.image_mean = params['normalization'][0][0][0]
        self.image_mean = np.mean(self.image_mean, axis=(0, 1))
        print('Get image mean: ' + str(self.image_mean))
        # Indices 0..42 of param_layer_name (conv1_1 ... fc8); softmax has no parameters
        for idx in range(43):
            if 'conv' in self.param_layer_name[idx]:
                weight, bias = params['layers'][0][idx][0][0][0][0]
                # matconvnet: weights dim [height, width, in_channel, out_channel]
                # ours: weights dim [in_channel, height, width, out_channel]
                weight = np.transpose(weight, [2, 0, 1, 3])
                bias = bias.reshape(-1)
                self.layers[self.param_layer_name[idx]].load_param(weight, bias)
            if idx >= 37 and 'fc' in self.param_layer_name[idx]:
                # The .mat file has no flatten layer, so fc layers sit at index idx-1
                weight, bias = params['layers'][0][idx-1][0][0][0][0]
                # [H, W, Cin, Cout] -> [H*W*Cin, Cout]
                weight = weight.reshape([weight.shape[0]*weight.shape[1]*weight.shape[2], weight.shape[3]])
                self.layers[self.param_layer_name[idx]].load_param(weight, bias)

    def load_image(self, image_dir):
        print('Loading and preprocessing image from ' + image_dir)
        # Read as RGB and resize to 224x224 (scipy.misc.imread/imresize no longer exist in SciPy)
        image = Image.open(image_dir).convert('RGB').resize((224, 224), Image.BILINEAR)
        self.input_image = np.array(image).astype(np.float32)
        self.input_image -= self.image_mean
        self.input_image = np.reshape(self.input_image, [1]+list(self.input_image.shape))
        # input dim [N, channel, height, width]
        self.input_image = np.transpose(self.input_image, [0, 3, 1, 2])

    def forward(self):
        print('Inferencing...')
        start_time = time.time()
        current = self.input_image
        # Run every layer in order; each layer's output is the next layer's input
        for idx in range(len(self.param_layer_name)):
            print('Inferencing layer: ' + self.param_layer_name[idx])
            current = self.layers[self.param_layer_name[idx]].forward(current)
        print('Inference time: %f' % (time.time()-start_time))
        return current

    def evaluate(self):
        prob = self.forward()
        top1 = np.argmax(prob[0])  # index of the most probable class
        print('Classification result: id = %d, prob = %f' % (top1, prob[0, top1]))


if __name__ == '__main__':
    vgg = VGG19()
    vgg.build_model()
    vgg.init_model()
    vgg.load_model()  # also reads the image mean, so it must run before load_image
    vgg.load_image('../../cat1.jpg')
    vgg.evaluate()
```
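`load_model` converts each MatConvNet convolution kernel from H × W × Cin × Cout to this article's Cin × H × W × Cout with `np.transpose(weight, [2, 0, 1, 3])`. The rule is that output axis i takes input axis perm[i]. The standalone check below uses a random array (not the real .mat file) to confirm two things:

- the element mapping is correct;
- the inverse permutation `np.argsort(perm)` undoes the conversion.

```python
import numpy as np

K, C_IN, C_OUT = 3, 4, 5
w_matconvnet = np.random.rand(K, K, C_IN, C_OUT)   # [H, W, Cin, Cout]
w_ours = np.transpose(w_matconvnet, [2, 0, 1, 3])  # [Cin, H, W, Cout]
print(w_ours.shape)  # (4, 3, 3, 5)

# Same element, different index order
h, w, ci, co = 2, 1, 3, 4
print(w_ours[ci, h, w, co] == w_matconvnet[h, w, ci, co])  # True

# The inverse permutation restores the MatConvNet layout
inverse = np.argsort([2, 0, 1, 3])  # [1, 2, 0, 3]
print(np.array_equal(np.transpose(w_ours, inverse), w_matconvnet))  # True
```

A wrong permutation, such as `[3, 2, 0, 1]`, would still produce arrays of plausible shapes. That is why the layer test and the pool5 check in the next section are useful.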


4. Image Classification Example

Use the VGG19 convolutional neural network model to classify the image below:

(1) Loading the model and the image

```python
import sys
import time
import numpy as np

# standardConv, standardPool, computeMse and pool5_dump.npy come from the lab materials
# (not shown in this article); VGG19, ConvolutionalLayer and MaxPoolingLayer are defined above.

def test_conv_and_pool_layer():
    # Compare our conv/pool layers with the reference implementations on random data
    test_data = np.random.rand(1, 3, 22, 22)
    test_filter = np.random.rand(3, 3, 3, 64)
    test_bias = np.random.rand(64)
    conv = ConvolutionalLayer(3, 3, 64, 1, 1)
    conv.init_param()
    conv.load_param(test_filter, test_bias)
    conv_result = conv.forward(test_data)
    std_conv = standardConv(3, 3, 64, 1, 1)
    std_conv.init_param()
    std_conv.load_param(test_filter, test_bias)
    std_conv_result = std_conv.forward(test_data)
    conv_mse = computeMse(conv_result.flatten(), std_conv_result.flatten())
    print('test conv err rate: %f%%' % (conv_mse*100))

    pool = MaxPoolingLayer(2, 2)
    pool_result = pool.forward(test_data)
    std_pool = standardPool(2, 2)
    std_pool_result = std_pool.forward(test_data)
    pool_mse = computeMse(pool_result.flatten(), std_pool_result.flatten())
    print('test pool err rate: %f%%' % (pool_mse*100))
    if conv_mse < 0.003 and pool_mse < 0.003:
        print('TEST CONV AND POOL LAYER PASS.')
    else:
        print('TEST CONV AND POOL LAYER FAILED.')
        sys.exit()


def forward(vgg):
    # Same as VGG19.forward(), but also keeps the pool5 output for checking
    print('Inferencing...')
    start_time = time.time()
    current = vgg.input_image
    pool5 = np.array([])
    for idx in range(len(vgg.param_layer_name)):
        print('Inferencing layer: ' + vgg.param_layer_name[idx])
        current = vgg.layers[vgg.param_layer_name[idx]].forward(current)
        if 'pool5' in vgg.param_layer_name[idx]:
            pool5 = current
    print('Inference time: %f' % (time.time()-start_time))
    return current, pool5


def check_pool5(stu_pool5):
    # Compare our pool5 output with the reference dump
    data = np.load('pool5_dump.npy')
    pool5_mse = computeMse(stu_pool5.flatten(), data.flatten())
    print('test pool5 mse: %f' % pool5_mse)
    if pool5_mse < 0.003:
        print('CHECK POOL5 PASS.')
    else:
        print('CHECK POOL5 FAILED.')
        sys.exit()


def evaluate(vgg):
    prob, pool5 = forward(vgg)
    top1 = np.argmax(prob[0])
    print('Classification result: id = %d, prob = %f' % (top1, prob[0, top1]))
    return pool5


if __name__ == '__main__':
    test_conv_and_pool_layer()
    print('-------------------------------')
    vgg = VGG19(param_path='../imagenet-vgg-verydeep-19.mat')
    vgg.build_model()
    vgg.init_model()
    vgg.load_model()
    vgg.load_image('../cat1.jpg')
    pool5 = evaluate(vgg)
    print('-------------------------------')
    check_pool5(pool5)
```
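The test script relies on `standardConv`, `standardPool` and `computeMse` from the lab materials, which this article does not show. To run similar checks outside that framework, you could use a hypothetical stand-in for `computeMse`. It assumes a plain mean squared error between two flattened arrays; the lab's own helper may be defined differently, for example as a relative error. The stand-in uses the same 0.003 threshold as the script.

```python
import numpy as np


def compute_mse(a, b):
    """Hypothetical stand-in for the lab's computeMse: mean squared error of two arrays."""
    a = np.asarray(a, dtype=np.float64)
    b = np.asarray(b, dtype=np.float64)
    assert a.shape == b.shape
    return float(np.mean((a - b) ** 2))


def check(name, result, reference, threshold=0.003):
    """Report PASS/FAILED like the test script and return whether the check passed."""
    mse = compute_mse(result.flatten(), reference.flatten())
    ok = mse < threshold
    print('%s mse: %f -> %s' % (name, mse, 'PASS' if ok else 'FAILED'))
    return ok
```

For example, `check('pool', pool_result, std_pool_result)` would mirror the pooling test, given some trusted reference output `std_pool_result`. The `pool2d` sketch from the pooling section could serve as that reference.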


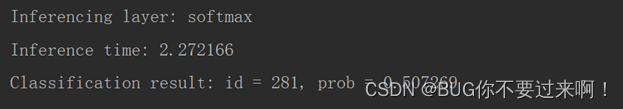

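Experimental results

The predicted class index is 281 (in the 0-based ImageNet-1k class list, index 281 is "tabby, tabby cat"), with a predicted probability of 50.7269%.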