- 1c 语言easyx图形库飞机大战的源码_飞机大战c语言图片声音源代码

- 2【Python】#2 基本数据类型

- 3【ENVI入门系列】15.遥感动态监测_envi变化检测

- 4双网卡电脑共享网络的实现:通过网线,将A电脑的网络共享给B电脑,既能共享文件和网络还能用A/B电脑远程控制B/A电脑_双网卡共享网络给其他电脑

- 5CentOS镜像要怎么选择?

- 6【NLP】python进行word文档编辑——构建不同层级标题_python如何编辑文件的主题属性

- 7hadoop 高可用(HA)、HDFS HA、Yarn HA

- 8营收恢复加速增长,盐津铺子上演自我升级“好戏”

- 9代码随想录【day 14 二叉树】| 层序遍历 226.翻转二叉树 101.对称二叉树

- 10基于python的几种中文分词-词性获取_python中文词性分类

Ubuntu18.04 NX下用ZED2 双目立体相机进行SLAM_使用zed2做vslam

赞

踩

安装流程

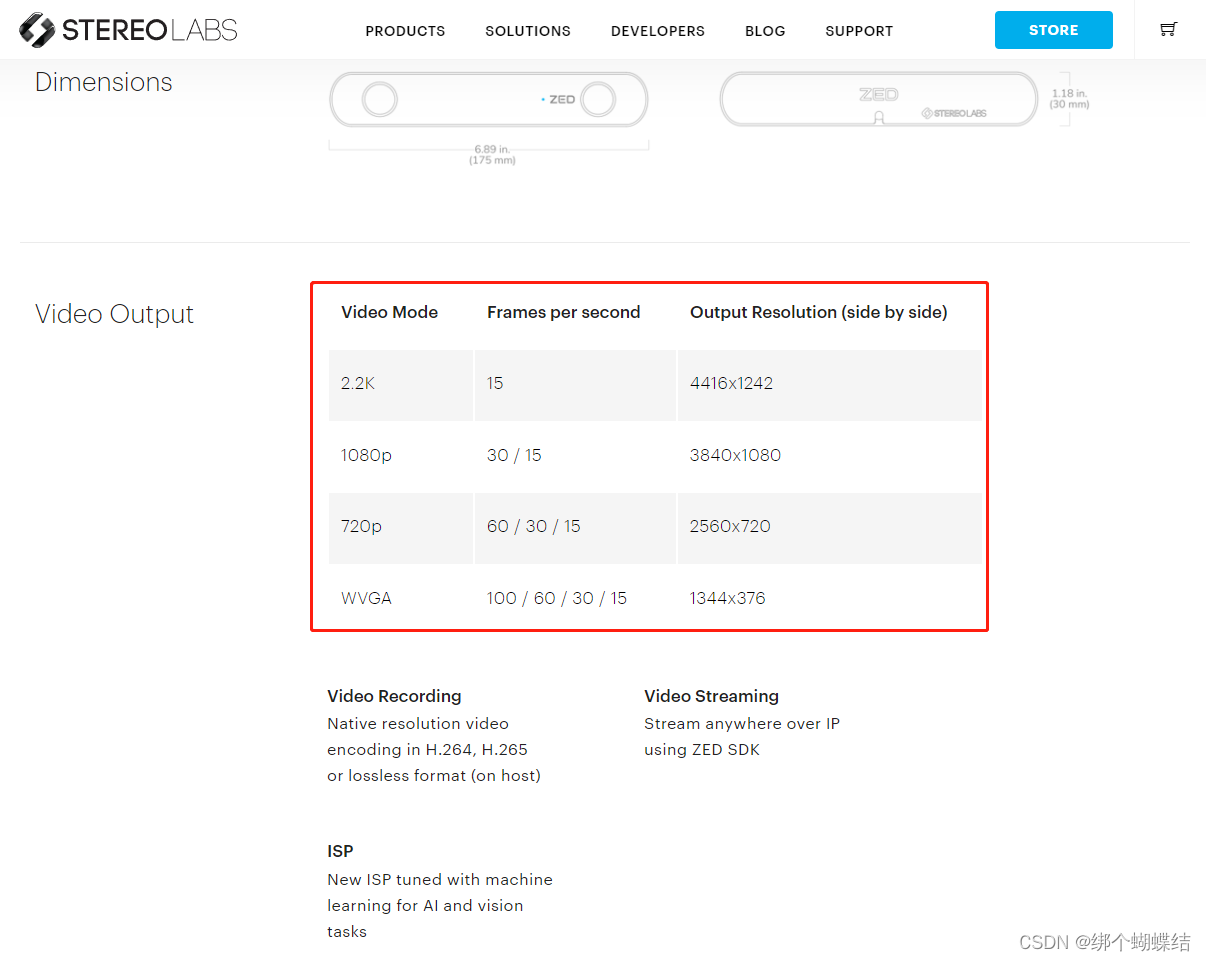

了解zed参数

因为网上的安装流程还是不太完整,我补充一下,希望对其他人也有帮助

部分流程参考这位:博主

自己的安装流程:

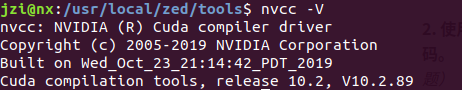

因为NX本来就自带cuda,所以无需安装cuda,所以首先你要知道自己cuda

nvcc -V

- 1

可以看到我的版本是10.2

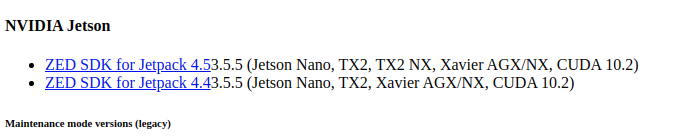

现在去下载 SDK

查看自己的jetpack 版本

jtop

- 1

可以看到我用的是jetpack 4.4.1版本

所以下载的是jetson jetpack 4.4 cuda10.2 的版本,也就是下图第二个

下载完成后,打开终端,进入文件目录

chmod +x ZED_SDK_Ubuntu16_v2.7.1.run

./ZED_SDK_Ubuntu16_v2.7.1.run

- 1

- 2

全部Y就行

问题开始了

- 给电脑插上zed相机,注意要USB3.0的接口。

安装成功后,软件会在/usr/local/zed文件夹下。

打开终端:

cd "/usr/local/zed/sample/positional tracking"

- 1

编译

mkdir build

cd build

cmake ..

make

- 1

- 2

- 3

- 4

此时没有生成可执行文件./ZED_Positional_Tracking

解决方法:去cpp文件下新建build 再编译

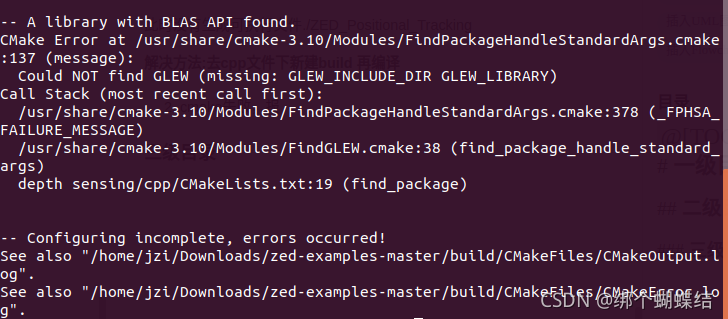

- cmake失败,报错:Could NOT find GLEW (missing: GLEW_INCLUDE_DIR GLEW_LIBRARY)

解决方法:安装libglew-dev软件包就可以解决这个问题了

sudo apt install libglew-dev

- 1

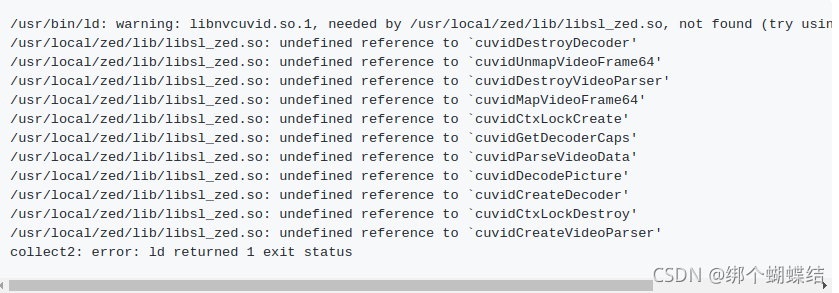

- cmake解决之后,make的时候失败了,报了类似这样的没有定义指向的错误

解决方法:使用camke选项来解决

cmake -DCMAKE_LIBRARY_PATH=/usr/local/cuda/lib64/stubs -DCMAKE_CXX_FLAGS="-Wl,--allow-shlib-undefined" ..

- 1

链接路径需要指向 CUDA 存根文件夹,由于nvcuvid在编译时可能不可用,我们告诉编译器忽略未定义的符号。

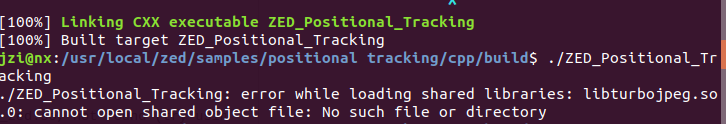

- make编译成功了,可是执行./ZED_Positional_Tracking却找不到libturbojpeg.so.0(其实在执行/usr/local/zed/tools里面的部分程序时候也是出现这个错误)

解决方法:安装libjpeg-turbo 2.0版本

转载这位博主

-

第一步

进入下载官网页面,网址链接

下载2.0.x版本的libjpeg-turbo-2.0.2.tar.gz

-

第二步

使用tar -zxvf libjpeg-turbo-2.0.2.tar.gz 将压缩包解压到当前目录中, 接着执行以下命令:

cd libjpeg-turbo-2.0.2

mkdir build

cmake -G"Unix Makefiles" ..

make

- 1

- 2

- 3

- 4

- 5

- 6

- 7

到这里, libjpeg-turbo就已经编译安装好了, 如果你是以root身份执行的,那么编译好后的文件在/usr/local/lib64 里面

-

第三步(这一步做法和转载的博主不一样)

参考这位博主教程我是修改共享库配置文件/etc/ld.so.conf.

-

1、设置:

sudo gedit /etc/ld.so.conf

- 1

- 2、添加库路径:

include /etc/ld.so.conf.d/*.conf

/home/xxx/Downloads/libjpeg-turbo-2.0.90

- 1

- 2

保存退出;

- 3、使配置立即生效

sudo ldconfig

- 1

现在执行./ZED_Positional_Tracking成功了,tools里面的程序全部都可以执行成功.

看效果

对了,记得相机标定时候,下面和右边的圆圈要在中间才能标定成功.

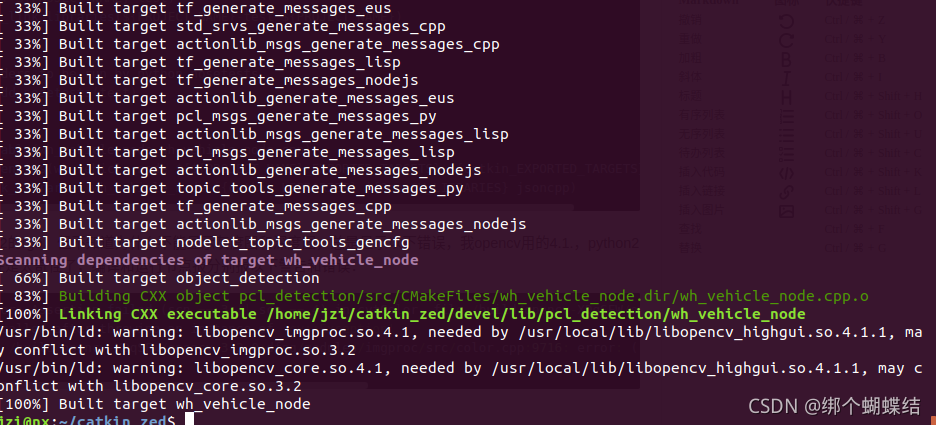

安装ZED2 ROS工具

$ cd ~/ZED_WS/src

$ git clone https://github.com/stereolabs/zed-ros-wrapper.git

$ cd ../

$ rosdep install --from-paths src --ignore-src -r -y

$ catkin_make -DCMAKE_BUILD_TYPE=Release

$ source ./devel/setup.bash

- 1

- 2

- 3

- 4

- 5

- 6

- 7

如果zed-ros-interfaces缺失,那么

git clone https://github.com/stereolabs/zed-ros-interfaces

- 1

git clone 太慢,可以参考下列方式:

//这是我们要clone的

git clone https://github.com/Hackergeek/architecture-samples

//使用镜像

git clone https://github.com.cnpmjs.org/Hackergeek/architecture-samples

//或者

//其他镜像

git clone https://git.sdut.me/Hackergeek/architecture-samples

git clone https://hub.fastgit.org/stereolabs/zed-ros-wrapper.git

git clone https://github.91chi.fun//https://github.com/stereolabs/zed-ros-wrapper.git

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

编译安装包顺序是:

zed_interfaces:catkin_make -DCMAKE_BUILD_TYPE=Release

zed_wrapper:catkin_make -DCATKIN_WHITELIST_PACKAGES="zed_wrapper"

zed_nodelets:catkin_make -DCATKIN_WHITELIST_PACKAGES="zed_nodelets"

节点详情描述可以查看官方文档http://wiki.ros.org/zed-ros-wrapper,里面列举了该package中可以发布的话题:

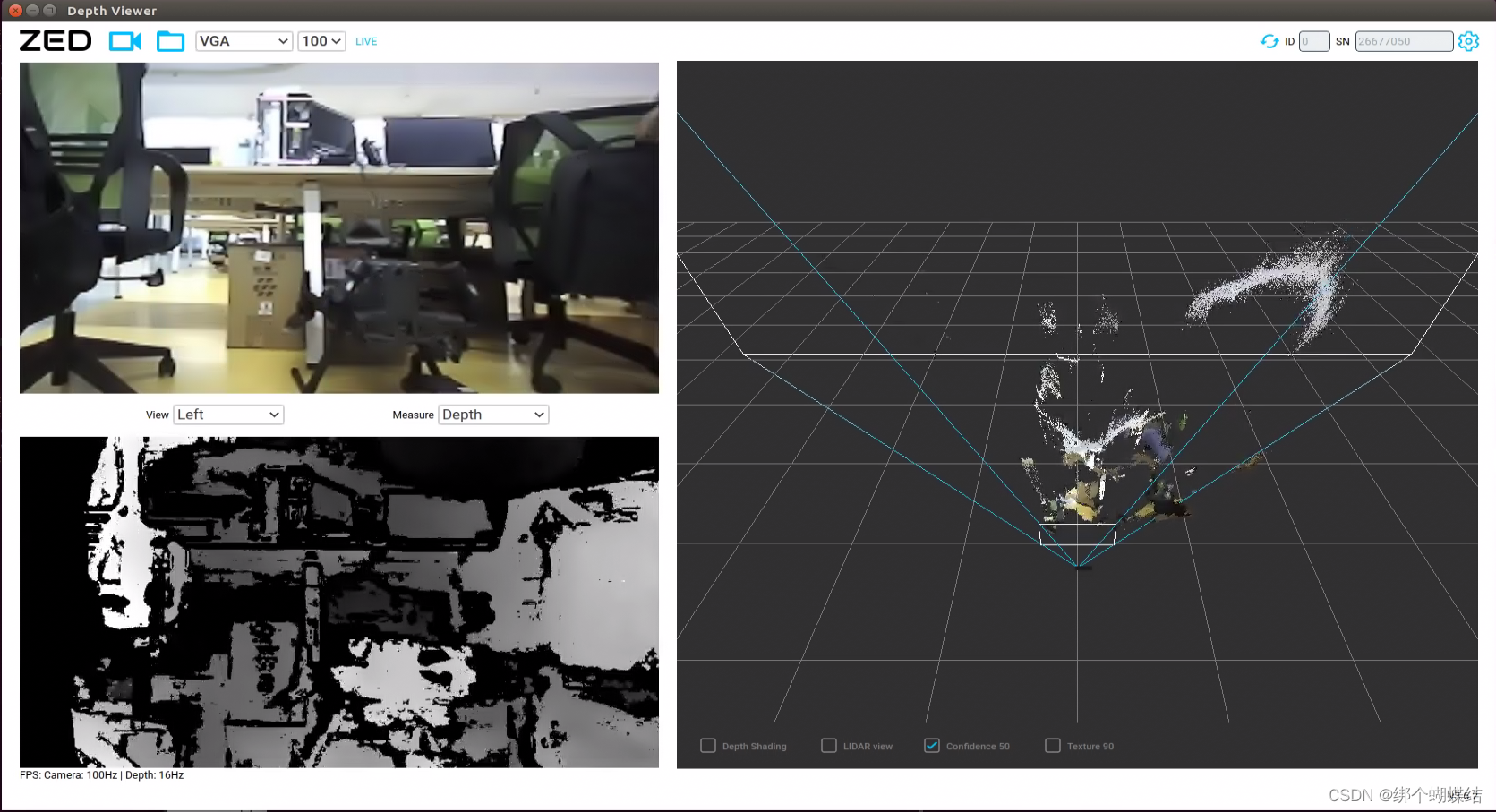

相机自带的工具查看视频流

cd /usr/local/zed/tools

- 1

执行命令./ZED_Depth_Viewer

如果不能看到720和1080和2k,lsusb查看是不是用了usb2.0,也可以用zed2自带工具检测是否用了usb2.0,2.0会导致只能用VGA视频流

启动zed节点

roslaunch zed_wrapper zed2.launch

- 1

查看画面

rosrun image_view image_view image:=/zed2/zed_node/rgb_raw/image_raw_color

- 1

用rviz看看点云

roscore

rosrun rviz rviz

- 1

- 2

查看视差图(相同颜色表示到相机距离相同)

rosrun image_view disparity_view image:=/zed2/zed_node/disparity/disparity_image

- 1

查看rgb(立体纠正的图像)+深度图(左相机为原点)+视差图

ros下命令行打开.pcd文件

sudo apt install pcl-tools

pcl_viewer camera_test.pcd

- 1

- 2

新故事篇章-zed2测距

故事到这里,我才开始慢慢了解双目摄像头

zed2、

深度相机—TOF、双相机立体视觉、结构光立体视觉原理及优势对比、

点云的概念、

三维计算视觉研究、

双目原理、

聚类算法、

激光点云与图像融合的车辆检测方法、

双目立体视觉(4)- ZED2双目视觉开发理论与实践 with examples 0.1 object detection(这位博主很强啊)、

YOLO v5与双目测距结合,实现目标的识别和定位测距、

ORB-SLAM2系统的实时点云地图构建、

然后选择查看这两篇文章开始了三维建模的实践

基于ZED 2和ORB_SLAM2的SLAM实践

ZED 相机 && ORB-SLAM2安装环境配置与ROS下的调试

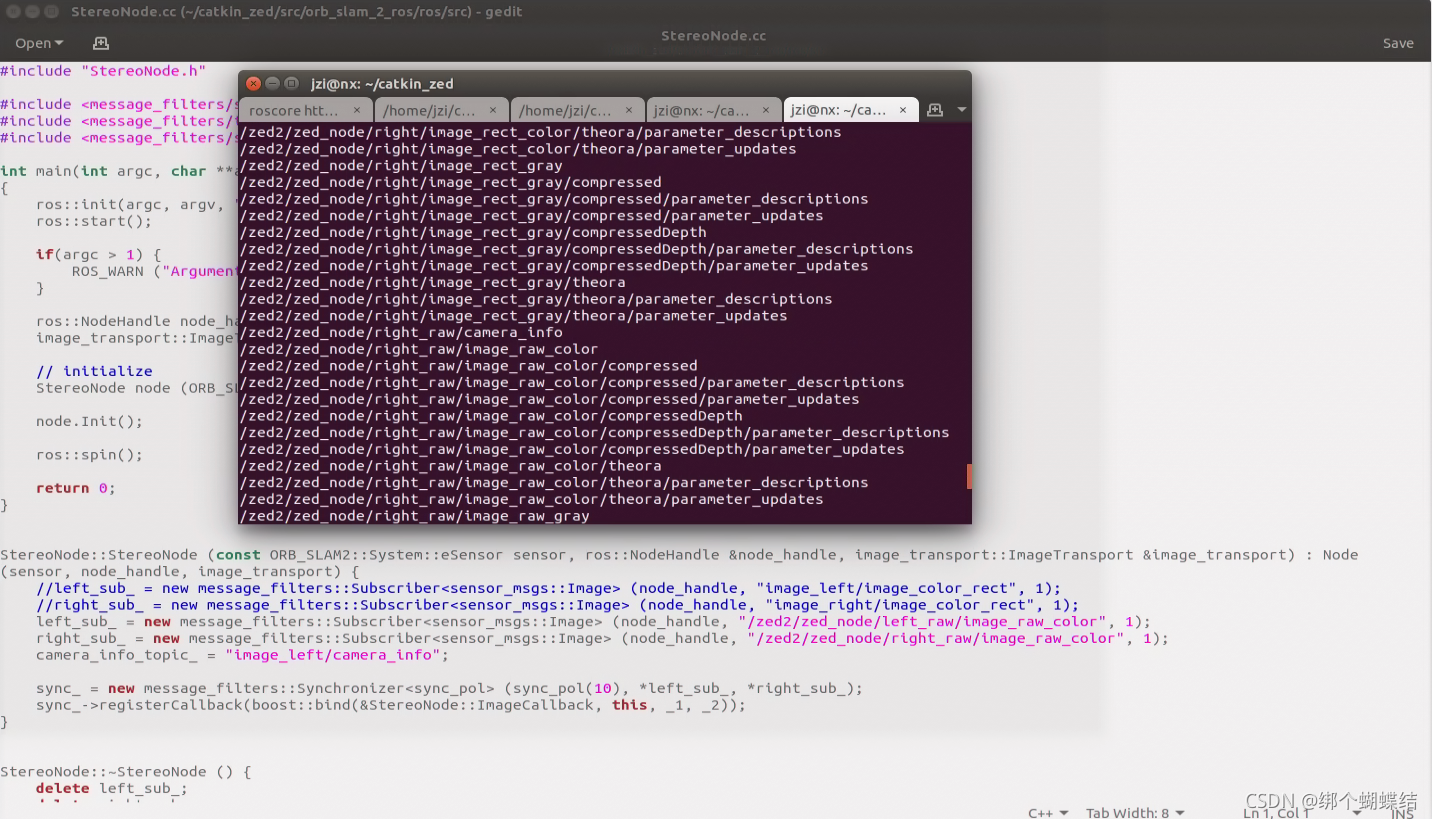

ROS Topic更改

在完成以步骤后我们仍需要更改ROS或ZED ROS Wrapper中topic的名称才能使得ORB-SLAM2 ROS与ZED相机相配合。

根据ZED ROS Wrapper官网(http://www.stereolabs.com/blog/index.php/2015/09/07/use-your-zed-camera-with-ros/)中的信息,我们可以查询到ZED ROS Wrapper所发布的topic名称。我们可以选择更改ZED的相关文件使得其匹配ORB-SLAM2 ROS的topic名字,也可以反过来更改更改ORB-SLAM2的topic名字。我选择的是更改ORB-SLAM2 ROS所接收的Topic名称。

ORB-SLAM2 ROS相关的Topic名称在路径/home/xxx/catkin_zed/src/orb_slam_2_ros/ros/src/StereoNode.cc中的相关文件中

- 1

- 2

- 3

- 4

- 5

运行流程:开启zed2->打开slam2->rviz可视化

roslaunch zed_wrapper zed2.launch

roslaunch orb_slam2_ros orb_slam2_zed2_stereo.launch

rviz rviz

- 1

- 2

- 3

运行这两个节点后就可以看到物体特征点以及相机的姿态以及点云。貌似这个特征点如果缺失就会导致slam2的点云和姿态都停下。

突然项目要求居然从实时检测测距变成离线二维图测距,所以以上的东西又要放一放了。

题外话:项目要求是物外双目拍摄,然后回来再二维图上取点测距,这个和realsense深度摄像头很像,只是realsense测距只有10m,而这个zed2可以达到40米。好了,之后的内容就是直接拿zed2的深度图和rgb,然后三维映射到二维,然后opencv取点显示距离。搞好之后我再回来弄实时建图,融合图像识别加双目来测量每个聚类物的距离。但感觉户外用双目的点云测距肯定不如雷达,为什么不用雷达啊,因为穷。另外ZED2相机标定及运行VINS-mono、VINS-FUSION建模我也想跑一下,图像标定的话我想自己搞一下,那些都是后话。。。。

ZED2相机标定及运行VINS-mono

VINS-FUSION

开始实现

zed-ros的中心测距

中心测距是直接拿深度图,图像中心的深度值是通过图像像素中心的坐标然后转成深度图下的坐标来得到的。代码如下:

/// // // Copyright (c) 2018, STEREOLABS. // // All rights reserved. // // THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS // "AS IS" AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT // LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR // A PARTICULAR PURPOSE ARE DISCLAIMED. IN NO EVENT SHALL THE COPYRIGHT // OWNER OR CONTRIBUTORS BE LIABLE FOR ANY DIRECT, INDIRECT, INCIDENTAL, // SPECIAL, EXEMPLARY, OR CONSEQUENTIAL DAMAGES (INCLUDING, BUT NOT // LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR SERVICES; LOSS OF USE, // DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER CAUSED AND ON ANY // THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY, OR TORT // (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE // OF THIS SOFTWARE, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE. // /// /** * This tutorial demonstrates simple receipt of ZED depth messages over the ROS system. */ #include <ros/ros.h> #include <sensor_msgs/Image.h> /** * Subscriber callback */ void depthCallback(const sensor_msgs::Image::ConstPtr& msg) { // Get a pointer to the depth values casting the data // pointer to floating point float* depths = (float*)(&msg->data[0]); // Image coordinates of the center pixel int u = msg->width / 2; int v = msg->height / 2; // Linear index of the center pixel int centerIdx = u + msg->width * v; // Output the measure ROS_INFO("Center distance : %g m", depths[centerIdx]); } /** * Node main function */ int main(int argc, char** argv) { /** * The ros::init() function needs to see argc and argv so that it can perform * any ROS arguments and name remapping that were provided at the command line. * For programmatic remappings you can use a different version of init() which takes * remappings directly, but for most command-line programs, passing argc and argv is * the easiest way to do it. The third argument to init() is the name of the node. * * You must call one of the versions of ros::init() before using any other * part of the ROS system. */ ros::init(argc, argv, "zed_video_subscriber"); /** * NodeHandle is the main access point to communications with the ROS system. * The first NodeHandle constructed will fully initialize this node, and the last * NodeHandle destructed will close down the node. */ ros::NodeHandle n; /** * The subscribe() call is how you tell ROS that you want to receive messages * on a given topic. This invokes a call to the ROS * master node, which keeps a registry of who is publishing and who * is subscribing. Messages are passed to a callback function, here * called imageCallback. subscribe() returns a Subscriber object that you * must hold on to until you want to unsubscribe. When all copies of the Subscriber * object go out of scope, this callback will automatically be unsubscribed from * this topic. * * The second parameter to the subscribe() function is the size of the message * queue. If messages are arriving faster than they are being processed, this * is the number of messages that will be buffered up before beginning to throw * away the oldest ones. */ ros::Subscriber subDepth = n.subscribe("depth", 10, depthCallback); /** * ros::spin() will enter a loop, pumping callbacks. With this version, all * callbacks will be called from within this thread (the main one). ros::spin() * will exit when Ctrl-C is pressed, or the node is shutdown by the master. */ ros::spin(); return 0; }

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 38

- 39

- 40

- 41

- 42

- 43

- 44

- 45

- 46

- 47

- 48

- 49

- 50

- 51

- 52

- 53

- 54

- 55

- 56

- 57

- 58

- 59

- 60

- 61

- 62

- 63

- 64

- 65

- 66

- 67

- 68

- 69

- 70

- 71

- 72

- 73

- 74

- 75

- 76

- 77

- 78

- 79

- 80

- 81

- 82

- 83

- 84

- 85

- 86

- 87

- 88

- 89

- 90

- 91

- 92

- 93

- 94

- 95

- 96

- 97

- 98

改为自己的

#include <iostream> #include "ros/ros.h" #include "image_transport/image_transport.h" #include "opencv2/highgui/highgui.hpp" #include "cv_bridge/cv_bridge.h" #include "sensor_msgs/image_encodings.h" image_transport::Subscriber rgbsub; image_transport::Subscriber depthsub; image_transport::Publisher image_pub; void cvtColor_src(cv::Mat &src, cv::Mat &src_gray) { // 转换单通道 if (src.channels() == 4) { cv::cvtColor(src, src_gray, CV_BGRA2GRAY); } else if (src.channels() == 3) { cv::cvtColor(src, src_gray, CV_BGR2GRAY); } else if (src.channels() == 2) { cv::cvtColor(src, src_gray, CV_BGR5652GRAY); } else if (src.channels() == 1) {// 单通道的图片直接就不需要处理 src_gray = src; } else { // 负数,说明图有问题 直接返回 src_gray = src; } } void showColorGrayView(const sensor_msgs::ImageConstPtr& msgImg) //672 x 376 { cv_bridge::CvImagePtr cvImgPtr; try{ cvImgPtr=cv_bridge::toCvCopy(msgImg,sensor_msgs::image_encodings::BGR8); } catch(cv_bridge::Exception e){ ROS_ERROR_STREAM("Cv_bridge Exception:"<<e.what()); return; } cv::Mat cvColorImgMat=cvImgPtr->image; cv::Mat cvGrayImgMat; // Draw an example circle on the video stream if (cvColorImgMat.rows > 60 && cvColorImgMat.cols > 60) cv::circle(cvColorImgMat, cv::Point(50, 50), 10, CV_RGB(255,0,0)); cv::imshow("colorview",cvColorImgMat); //imshow函数只支持8位灰度图像、8位彩色图像和32位灰度图像(像素值范围0-1) cvtColor_src(cvColorImgMat,cvGrayImgMat); image_pub.publish(cvImgPtr->toImageMsg()); //OpenCV图像转换为ROS图像 cv::imshow("grayview",cvGrayImgMat); cv::waitKey(20); } void showDepthView(const sensor_msgs::ImageConstPtr& msgImg) { cv::Mat image; try{ image=cv_bridge::toCvCopy(msgImg, sensor_msgs::image_encodings::TYPE_32FC1) -> image; } catch(cv_bridge::Exception e){ ROS_ERROR_STREAM("Cv_bridge Exception:"<<e.what()); return; } ROS_INFO("center dis:%f m ", image.at<float>(image.rows / 2, image.cols / 2)); cv::imshow("depth_view", image); cv::waitKey(20); } int main(int argc, char **argv) { ros::init(argc, argv, "grayview"); ros::NodeHandle nh; image_transport::ImageTransport it(nh); cv::namedWindow("colorview",cv::WINDOW_NORMAL); cv::moveWindow("colorview",100,100); cv::namedWindow("grayview",cv::WINDOW_NORMAL); cv::moveWindow("grayview",100,100); cv::namedWindow("depth_view",cv::WINDOW_NORMAL); cv::moveWindow("depth_view",100,100); rgbsub = it.subscribe("/zed2/zed_node/rgb/image_rect_color", 1, showColorGrayView); depthsub = it.subscribe("/zed2/zed_node/depth/depth_registered", 1, showDepthView); image_pub = it.advertise("/test", 1); ros::spin(); return 0; }

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 38

- 39

- 40

- 41

- 42

- 43

- 44

- 45

- 46

- 47

- 48

- 49

- 50

- 51

- 52

- 53

- 54

- 55

- 56

- 57

- 58

- 59

- 60

- 61

- 62

- 63

- 64

- 65

- 66

- 67

- 68

- 69

- 70

- 71

- 72

- 73

- 74

- 75

- 76

- 77

- 78

- 79

- 80

- 81

- 82

- 83

- 84

- 85

- 86

- 87

- 88

cmakelist

cmake_minimum_required(VERSION 3.0.2) project(pcl_detection) ## Compile as C++11, supported in ROS Kinetic and newer # add_compile_options(-std=c++11) ## Find catkin macros and libraries ## if COMPONENTS list like find_package(catkin REQUIRED COMPONENTS xyz) ## is used, also find other catkin packages find_package(catkin REQUIRED COMPONENTS roscpp rospy std_msgs zed_interfaces pcl_ros cv_bridge image_transport ) ## Find opencv lib find_package(OpenCV REQUIRED) ## System dependencies are found with CMake's conventions # find_package(Boost REQUIRED COMPONENTS system) ## Uncomment this if the package has a setup.py. This macro ensures ## modules and global scripts declared therein get installed ## See http://ros.org/doc/api/catkin/html/user_guide/setup_dot_py.html # catkin_python_setup() ################################################ ## Declare ROS messages, services and actions ## ################################################ ## To declare and build messages, services or actions from within this ## package, follow these steps: ## * Let MSG_DEP_SET be the set of packages whose message types you use in ## your messages/services/actions (e.g. std_msgs, actionlib_msgs, ...). ## * In the file package.xml: ## * add a build_depend tag for "message_generation" ## * add a build_depend and a exec_depend tag for each package in MSG_DEP_SET ## * If MSG_DEP_SET isn't empty the following dependency has been pulled in ## but can be declared for certainty nonetheless: ## * add a exec_depend tag for "message_runtime" ## * In this file (CMakeLists.txt): ## * add "message_generation" and every package in MSG_DEP_SET to ## find_package(catkin REQUIRED COMPONENTS ...) ## * add "message_runtime" and every package in MSG_DEP_SET to ## catkin_package(CATKIN_DEPENDS ...) ## * uncomment the add_*_files sections below as needed ## and list every .msg/.srv/.action file to be processed ## * uncomment the generate_messages entry below ## * add every package in MSG_DEP_SET to generate_messages(DEPENDENCIES ...) ## Generate messages in the 'msg' folder # add_message_files( # FILES # Message1.msg # Message2.msg # ) ## Generate services in the 'srv' folder # add_service_files( # FILES # Service1.srv # Service2.srv # ) ## Generate actions in the 'action' folder # add_action_files( # FILES # Action1.action # Action2.action # ) ## Generate added messages and services with any dependencies listed here # generate_messages( # DEPENDENCIES # std_msgs # ) ################################################ ## Declare ROS dynamic reconfigure parameters ## ################################################ ## To declare and build dynamic reconfigure parameters within this ## package, follow these steps: ## * In the file package.xml: ## * add a build_depend and a exec_depend tag for "dynamic_reconfigure" ## * In this file (CMakeLists.txt): ## * add "dynamic_reconfigure" to ## find_package(catkin REQUIRED COMPONENTS ...) ## * uncomment the "generate_dynamic_reconfigure_options" section below ## and list every .cfg file to be processed ## Generate dynamic reconfigure parameters in the 'cfg' folder # generate_dynamic_reconfigure_options( # cfg/DynReconf1.cfg # cfg/DynReconf2.cfg # ) ################################### ## catkin specific configuration ## ################################### ## The catkin_package macro generates cmake config files for your package ## Declare things to be passed to dependent projects ## INCLUDE_DIRS: uncomment this if your package contains header files ## LIBRARIES: libraries you create in this project that dependent projects also need ## CATKIN_DEPENDS: catkin_packages dependent projects also need ## DEPENDS: system dependencies of this project that dependent projects also need catkin_package( INCLUDE_DIRS include # LIBRARIES pcl_detection CATKIN_DEPENDS roscpp rospy std_msgs # DEPENDS system_lib ) ########### ## Build ## ########### ## Specify additional locations of header files ## Your package locations should be listed before other locations include_directories( include ${catkin_INCLUDE_DIRS} ${PCL_INCLUDE_DIRS} ${OpenCV_LIBRARIES} ) FILE(GLOB SOURCE_FILES src/*/*.c src/*/*.cpp src/object_detection.cpp) add_library(zed_pcl_src ${SOURCE_FILES}) link_libraries(zed_pcl_src) add_subdirectory(src) ## Declare a C++ library # add_library(${PROJECT_NAME} # src/${PROJECT_NAME}/pcl_detection.cpp # ) ## Add cmake target dependencies of the library ## as an example, code may need to be generated before libraries ## either from message generation or dynamic reconfigure # add_dependencies(${PROJECT_NAME} ${${PROJECT_NAME}_EXPORTED_TARGETS} ${catkin_EXPORTED_TARGETS}) ## Declare a C++ executable ## With catkin_make all packages are built within a single CMake context ## The recommended prefix ensures that target names across packages don't collide # add_executable(${PROJECT_NAME}_node src/pcl_detection_node.cpp) ## Rename C++ executable without prefix ## The above recommended prefix causes long target names, the following renames the ## target back to the shorter version for ease of user use ## e.g. "rosrun someones_pkg node" instead of "rosrun someones_pkg someones_pkg_node" # set_target_properties(${PROJECT_NAME}_node PROPERTIES OUTPUT_NAME node PREFIX "") ## Add cmake target dependencies of the executable ## same as for the library above # add_dependencies(${PROJECT_NAME}_node ${${PROJECT_NAME}_EXPORTED_TARGETS} ${catkin_EXPORTED_TARGETS}) ## Specify libraries to link a library or executable target against # target_link_libraries(${PROJECT_NAME}_node # ${catkin_LIBRARIES} # ) ############# ## Install ## ############# # all install targets should use catkin DESTINATION variables # See http://ros.org/doc/api/catkin/html/adv_user_guide/variables.html ## Mark executable scripts (Python etc.) for installation ## in contrast to setup.py, you can choose the destination # catkin_install_python(PROGRAMS # scripts/my_python_script # DESTINATION ${CATKIN_PACKAGE_BIN_DESTINATION} # ) ## Mark executables for installation ## See http://docs.ros.org/melodic/api/catkin/html/howto/format1/building_executables.html # install(TARGETS ${PROJECT_NAME}_node # RUNTIME DESTINATION ${CATKIN_PACKAGE_BIN_DESTINATION} # ) ## Mark libraries for installation ## See http://docs.ros.org/melodic/api/catkin/html/howto/format1/building_libraries.html # install(TARGETS ${PROJECT_NAME} # ARCHIVE DESTINATION ${CATKIN_PACKAGE_LIB_DESTINATION} # LIBRARY DESTINATION ${CATKIN_PACKAGE_LIB_DESTINATION} # RUNTIME DESTINATION ${CATKIN_GLOBAL_BIN_DESTINATION} # ) ## Mark cpp header files for installation # install(DIRECTORY include/${PROJECT_NAME}/ # DESTINATION ${CATKIN_PACKAGE_INCLUDE_DESTINATION} # FILES_MATCHING PATTERN "*.h" # PATTERN ".svn" EXCLUDE # ) ## Mark other files for installation (e.g. launch and bag files, etc.) # install(FILES # # myfile1 # # myfile2 # DESTINATION ${CATKIN_PACKAGE_SHARE_DESTINATION} # ) ############# ## Testing ## ############# ## Add gtest based cpp test target and link libraries # catkin_add_gtest(${PROJECT_NAME}-test test/test_pcl_detection.cpp) # if(TARGET ${PROJECT_NAME}-test) # target_link_libraries(${PROJECT_NAME}-test ${PROJECT_NAME}) # endif() ## Add folders to be run by python nosetests # catkin_add_nosetests(test) add_executable(wh_vehicle_node wh_vehicle_node.cpp) add_dependencies(wh_vehicle_node ${${PROJECT_NAME}_EXPORTED_TARGETS} ${catkin_EXPORTED_TARGETS}) target_link_libraries(wh_vehicle_node ${catkin_LIBRARIES} ${OpenCV_LIBRARIES} jsoncpp)

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 38

- 39

- 40

- 41

- 42

- 43

- 44

- 45

- 46

- 47

- 48

- 49

- 50

- 51

- 52

- 53

- 54

- 55

- 56

- 57

- 58

- 59

- 60

- 61

- 62

- 63

- 64

- 65

- 66

- 67

- 68

- 69

- 70

- 71

- 72

- 73

- 74

- 75

- 76

- 77

- 78

- 79

- 80

- 81

- 82

- 83

- 84

- 85

- 86

- 87

- 88

- 89

- 90

- 91

- 92

- 93

- 94

- 95

- 96

- 97

- 98

- 99

- 100

- 101

- 102

- 103

- 104

- 105

- 106

- 107

- 108

- 109

- 110

- 111

- 112

- 113

- 114

- 115

- 116

- 117

- 118

- 119

- 120

- 121

- 122

- 123

- 124

- 125

- 126

- 127

- 128

- 129

- 130

- 131

- 132

- 133

- 134

- 135

- 136

- 137

- 138

- 139

- 140

- 141

- 142

- 143

- 144

- 145

- 146

- 147

- 148

- 149

- 150

- 151

- 152

- 153

- 154

- 155

- 156

- 157

- 158

- 159

- 160

- 161

- 162

- 163

- 164

- 165

- 166

- 167

- 168

- 169

- 170

- 171

- 172

- 173

- 174

- 175

- 176

- 177

- 178

- 179

- 180

- 181

- 182

- 183

- 184

- 185

- 186

- 187

- 188

- 189

- 190

- 191

- 192

- 193

- 194

- 195

- 196

- 197

- 198

- 199

- 200

- 201

- 202

- 203

- 204

- 205

- 206

- 207

- 208

- 209

- 210

- 211

- 212

- 213

- 214

- 215

- 216

- 217

- 218

- 219

- 220

- 221

- 222

- 223

- 224

- 225

- 226

- 227

运行后可以看到zed2的rgb,但不知道为什么不能显示灰度图和深度图,我opencv用的4.1.,python2来编译,实在是太奇怪了,编译和运行节点报分别报以下警告和错误:

OpenCV Error: Assertion failed (scn == 3 || scn == 4) in cvtColor, file /build/opencv-XDqSFW/opencv-3.2.0+dfsg/modules/imgproc/src/color.cpp, line 9716

terminate called after throwing an instance of 'cv::Exception'

what(): /build/opencv-XDqSFW/opencv-3.2.0+dfsg/modules/imgproc/src/color.cpp:9716: error: (-215) scn == 3 || scn == 4 in function cvtColor

- 1

- 2

- 3

- 4

找了一天,找到解决方法了,ROS自带的opencv版本为3.2.0,在安装opencv4后导致使用不同版本的opencv报错,需要给ROS指定统一版本的opencv,解决方法是修改cv_bridge配置文件,使ROS去调用自己安装的opencv版本。(详细操作看这篇文章)

记得Cmakelist.txt里面OpenCV改为4.1

## Find opencv lib

find_package(OpenCV 4.1 REQUIRED)

- 1

- 2

代码没修改,最后显示rgb+灰度图+深度图+rviz显示ROS发布画圈后的图。

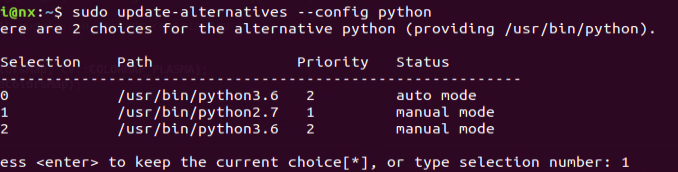

上面的操作虽然一时可以,但治标不治本,会导致roscore从2.7切换到3.6启动节点失败,而且启动zed2.launch也会失败。之后再想想怎么解决。要解决roscore和zed2.launch的问题,那还是要切回原来的python2.7,操着如下:

sudo update-alternatives --config python

- 1

参考:

渲染深度图

单通道深度图像转化为彩色图发布

ros_多消息同步回调

近似时间算法

cv_bridge

录制多个话题,查看zed2深度和rgb的发布速率,这是为了等一下利用时间戳同步数据。录制过程会出现空间不够情况,我也没做压缩或者扩容等处理,简单验证一下自己问题就好。

rosbag record -o subset1 /zed2/zed_node/rgb/image_rect_colo /zed2/zed_node/depth/depth_registered

- 1

rqt_bag查看数据

rqt_bag subset_2021-10-27-09-33-27.bag subset_2021-10-27-09-33-27.bag

- 1

rgb显示是有的,但显示似乎有点问题。但我们可以看到1s内深度和rgb每一帧发布的时间都差不多,1s20帧。

所以之后可以利用ExactTime Policy或者ApproximateTime Policy来进行消息匹配(详情看:ros_多消息同步回调)

对于这个zed2摄像头,通过/zed2/zed_node/depth/depth_registered 获取的像素值不是直接深度值,而是0-255像素的间接深度值,这个是通过image_transport将[0-255]的像素深度值、相机内参发布出去。

我们可以通过cv_bridge::toCvCopy()来得到深度值,里面转换公式是通过z=f.b/d 来得到深度值的。(详情请看双目模型及深度值计算以及zed2通过相机内参对图像的纠正)

`cv::Mat depthImage = cv_bridge::toCvCopy(depth_image, sensor_msgs::image_encodings::TYPE_32FC1) -> image;`

- 1

之后我用cv::imwrite保存深度图depthImage ,但这样会导致深度图数据缺失,因为它会全部转成8UC1**(cv::imwrite对于深度图是只能保存为8UC1,16UC1)**

所以我保存depthImage数据为.xml,但是这样输出会出现inf、inf、Nan(如下图所示),这个应该是点双目匹配时候失败导致的。也就是说不是每个像素点你都有深度值的。

题外话:cv::imwrite保存png是可以保存16位的,数据是无损的(也可以设置压缩等级),保存jpg只能8位且数据有损的。

其实这个是深度值, 你要需要相机内参才能将深度值转成三维坐标。待更.