
Cat and Dog Recognition with PaddlePaddle


Data preprocessing

First, download the dataset from the official AI Studio repository:

!mkdir -p /home/aistudio/.cache/paddle/dataset/cifar/

!wget "http://ai-atest.bj.bcebos.com/cifar-10-python.tar.gz" -O cifar-10-python.tar.gz

!mv cifar-10-python.tar.gz /home/aistudio/.cache/paddle/dataset/cifar/

!ls -a /home/aistudio/.cache/paddle/dataset/cifar/


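This experiment uses the CIFAR-10 dataset, which consists of 60000 color images of size 32x32 in 10 classes, i.e. 6000 images per class. Sample data is shown below:

As the figure shows, the 10 classes are airplane, automobile, bird, cat, deer, dog, frog, horse, ship, and truck. Each class has 6000 images (5000 in the training set and 1000 in the test set), so the training set contains $5000 \times 10 = 50000$ images and the test set contains 10000 images.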

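Our task is to train a model that can recognize any given image. In other words, when an arbitrary image is fed into the model, the model should accurately output the class that the image belongs to.

First, build the data loaders, which split the dataset into batches and shuffle it: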

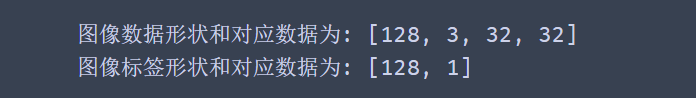

    import numpy as np
    import paddle
    import paddle.fluid as fluid

    BATCH_SIZE = 128

    # Training reader: loads the dataset downloaded above from the default
    # folder, shuffles it through a buffer, then groups it into batches.
    train_reader = paddle.batch(
        paddle.reader.shuffle(paddle.dataset.cifar.train10(), buf_size=128 * 100),
        batch_size=BATCH_SIZE,
    )
    # Test reader: same default folder, batched without shuffling.
    test_reader = paddle.batch(
        paddle.dataset.cifar.test10(),
        batch_size=BATCH_SIZE,
    )

    # Check the readers on the first training batch.
    with fluid.dygraph.guard():
        for batch_id, data in enumerate(train_reader()):
            # Image data as a float32 array of shape (N, 3, 32, 32)
            img_arr_data = np.array([x[0] for x in data]).astype('float32').reshape(-1, 3, 32, 32)
            # Label data as a float32 array of shape (N, 1)
            label_arr_data = np.array([x[1] for x in data]).astype('float32').reshape(-1, 1)
            # Convert to PaddlePaddle dygraph variables (tensors)
            img_data = fluid.dygraph.to_variable(img_arr_data)
            label = fluid.dygraph.to_variable(label_arr_data)
            # Print the shapes
            print("Image batch shape:", img_data.shape)
            print("Label batch shape:", label.shape)
            break


Output:

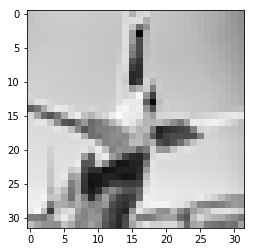
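To make the roles of `buf_size` and `batch_size` more concrete, here is a minimal pure-Python sketch of a buffered shuffle followed by batching. It is a simplified illustration under stated assumptions, not PaddlePaddle's implementation: the helper names `buffered_shuffle` and `make_batches` and the toy reader are hypothetical and introduced only for this example.

```python
import random


def buffered_shuffle(reader, buf_size, seed=0):
    """Yield the samples of `reader`, shuffled within buffers of `buf_size`."""
    rng = random.Random(seed)
    buf = []
    for sample in reader():
        buf.append(sample)
        if len(buf) >= buf_size:
            rng.shuffle(buf)
            yield from buf
            buf = []
    # Flush whatever is left in the last, partially filled buffer.
    rng.shuffle(buf)
    yield from buf


def make_batches(samples, batch_size):
    """Group an iterable of samples into lists of at most `batch_size`."""
    batch = []
    for sample in samples:
        batch.append(sample)
        if len(batch) == batch_size:
            yield batch
            batch = []
    if batch:  # keep the final, smaller batch
        yield batch


def toy_reader():
    # Hypothetical stand-in for a dataset reader: yields (image, label) pairs.
    for i in range(10):
        yield ("img%d" % i, i % 2)


batches = list(make_batches(buffered_shuffle(toy_reader, buf_size=4), batch_size=3))
print([len(b) for b in batches])  # [3, 3, 3, 1]
```

In this sketch the shuffle is local to each buffer. If the real reader behaves the same way, `buf_size=128*100` (12800 samples) would mix roughly a quarter of the 50000 training images at a time rather than the whole set.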

Any image from the loader can be displayed:

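    # Display one image from the batch
    import matplotlib.pyplot as plt

    img = img_arr_data[15] * 255  # scale the pixel values back to the 0-255 range
    img = img.reshape((3, 32, 32)).astype(np.uint8)
    img = img.transpose(1, 2, 0)  # CHW -> HWC, the layout imshow expects
    plt.imshow(img)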
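The `reshape((3,32,32))` and `transpose(1,2,0)` steps are easier to follow with the index arithmetic written out. The sketch below uses plain Python lists and assumes each image is a flat vector of 3072 values stored channel-first (all of channel 0, then channel 1, then channel 2, each in row-major order). The helpers `chw_index` and `to_hwc` are hypothetical names for this illustration.

```python
C, H, W = 3, 32, 32


def chw_index(c, h, w):
    """Offset of element (c, h, w) in a flat, channel-first image vector."""
    return c * H * W + h * W + w


def to_hwc(flat):
    """Rearrange a flat CHW vector into nested lists indexed [h][w][c]."""
    return [[[flat[chw_index(c, h, w)] for c in range(C)]
             for w in range(W)]
            for h in range(H)]


# Toy image: every value equals its own offset, so the layout is easy to check.
flat = list(range(C * H * W))
hwc = to_hwc(flat)
print(len(hwc), len(hwc[0]), len(hwc[0][0]))  # 32 32 3
print(hwc[0][1])  # [1, 1025, 2049]
```

The pixel at row 0, column 1 collects one value from each channel plane, which is what the transpose does before the image is handed to `plt.imshow`.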


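Building the model

This experiment builds a modified version of VGG16. Because the input images are only 32×32, the last group of convolutional layers in VGG16 is removed, giving the following model:

The model still largely follows VGG16; only its last convolutional group is dropped to suit the 3×32×32 input. To reduce overfitting, dropout is also applied to the outputs of the first two fully connected layers.

The code is as follows: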

    # VGG16-style convolutional neural network
    import paddle.fluid as fluid
    from paddle.fluid.dygraph.nn import Conv2D, Pool2D, Linear


    # A VGG block: several 3x3 convolutions followed by one 2x2 max-pooling layer
    class vgg_block(fluid.dygraph.Layer):
        def __init__(self, num_convs, in_channels, out_channels):
            """
            num_convs: number of convolutional layers in the block
            in_channels: number of input channels of the first convolution
            out_channels: number of output channels, shared by every
                convolution in the block
            """
            super(vgg_block, self).__init__()
            self.conv_list = []
            for i in range(num_convs):
                conv_layer = self.add_sublayer(
                    'conv_' + str(i),
                    Conv2D(num_channels=in_channels, num_filters=out_channels,
                           filter_size=3, padding=1, act='relu'))
                self.conv_list.append(conv_layer)
                in_channels = out_channels
            self.pool = Pool2D(pool_stride=2, pool_size=2, pool_type='max')

        def forward(self, x):
            for item in self.conv_list:
                x = item(x)
            return self.pool(x)


    class VGG(fluid.dygraph.Layer):
        def __init__(self, conv_arch=((2, 64), (2, 128), (3, 256), (3, 512))):
            super(VGG, self).__init__()
            self.vgg_blocks = []
            iter_id = 0
            # Add the vgg_blocks: four in total here; conv_arch gives the number
            # of convolutions and the output channels of each block
            in_channels = [3, 64, 128, 256, 512]
            for (num_convs, num_channels) in conv_arch:
                block = self.add_sublayer(
                    'block_' + str(iter_id),
                    vgg_block(num_convs, in_channels=in_channels[iter_id],
                              out_channels=num_channels))
                self.vgg_blocks.append(block)
                iter_id += 1
            self.fc1 = Linear(input_dim=512 * 2 * 2, output_dim=1024, act='relu')
            self.drop1_ratio = 0.5
            self.fc2 = Linear(input_dim=1024, output_dim=1024, act='relu')
            self.drop2_ratio = 0.5
            self.fc3 = Linear(input_dim=1024, output_dim=10, act='softmax')

        def forward(self, x, label=None):
            for item in self.vgg_blocks:
                x = item(x)
            # Flatten the feature maps to shape (N, 512*2*2)
            x = fluid.layers.reshape(x, [x.shape[0], -1])
            x = fluid.layers.dropout(self.fc1(x), self.drop1_ratio)
            x = fluid.layers.dropout(self.fc2(x), self.drop2_ratio)
            x = self.fc3(x)
            if label is not None:
                acc = fluid.layers.accuracy(input=x, label=label)
                return x, acc
            else:
                return x


    model = VGG()
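The `512*2*2` input size of `fc1` follows from the block layout: each 3x3 convolution with `padding=1` keeps the spatial size, and each 2x2 max pool with stride 2 halves it. A short sketch of that arithmetic, where `vgg_output_shape` is a hypothetical helper written for this illustration:

```python
def vgg_output_shape(input_size, conv_arch):
    """Return (channels, height, width) after the stacked VGG blocks."""
    size = input_size
    channels = None
    for _num_convs, out_channels in conv_arch:
        # The 3x3 convolutions (padding=1, stride 1) keep the spatial size;
        # the 2x2 max pool with stride 2 halves it.
        size = size // 2
        channels = out_channels
    return channels, size, size


conv_arch = ((2, 64), (2, 128), (3, 256), (3, 512))
c, h, w = vgg_output_shape(32, conv_arch)
print((c, h, w), c * h * w)  # (512, 2, 2) 2048
```

With a fifth block, as in the standard VGG16, a 32×32 input would shrink to 1×1 by the same arithmetic.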


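Training the model

Training settings:

- Epochs: 20

- Batch size: 128

- Loss logging interval: the loss is recorded every 100 batches

- Optimizer: Adam

- Learning rate: 0.001

- Regularization: L2 regularization was tried at first, but it performed poorly and was dropped.

- Dropout rate: 0.5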
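For readers unfamiliar with the optimizer setting above, the following sketch applies one textbook Adam update to a single scalar parameter with the learning rate used here. The values of `beta1`, `beta2`, and `eps` are the commonly quoted defaults and are assumptions of this example; PaddlePaddle's own implementation may arrange the bias correction and epsilon slightly differently.

```python
import math


def adam_step(theta, grad, m, v, t, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
    """Apply one Adam update to a scalar parameter and return (theta, m, v)."""
    m = beta1 * m + (1 - beta1) * grad          # first-moment estimate
    v = beta2 * v + (1 - beta2) * grad * grad   # second-moment estimate
    m_hat = m / (1 - beta1 ** t)                # bias correction
    v_hat = v / (1 - beta2 ** t)
    theta = theta - lr * m_hat / (math.sqrt(v_hat) + eps)
    return theta, m, v


theta, m, v = adam_step(theta=1.0, grad=2.0, m=0.0, v=0.0, t=1)
print(round(theta, 6))  # 0.999
```

On the first step the update size is close to the learning rate regardless of the gradient's magnitude, which is one reason a small value such as 0.001 is a common starting point.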

The code is as follows:

    # Train the VGG model
    place = fluid.CUDAPlace(0)  # GPU 0; use fluid.CPUPlace() if no GPU is available
    with fluid.dygraph.guard(place):
        model = VGG()
        model.train()
        optimizer = fluid.optimizer.AdamOptimizer(learning_rate=0.001,
                                                  parameter_list=model.parameters())
        EPOCH_NUM = 20
        iter_count = 0
        iters = []
        losses = []
        for epoch_id in range(EPOCH_NUM):
            for batch_id, data in enumerate(train_reader()):
                # Image data as a float32 array of shape (N, 3, 32, 32)
                img_data = np.array([x[0] for x in data]).astype('float32').reshape(-1, 3, 32, 32)
                # Label data as an int64 array of shape (N, 1)
                label_data = np.array([x[1] for x in data]).astype('int64').reshape(-1, 1)
                # Convert to PaddlePaddle dygraph variables (tensors)
                img_data = fluid.dygraph.to_variable(img_data)
                label = fluid.dygraph.to_variable(label_data)

                pre, acc = model(img_data, label)
                avg_acc = fluid.layers.mean(acc)
                loss = fluid.layers.cross_entropy(pre, label)
                avg_loss = fluid.layers.mean(loss)

                # Every 100 batches, print and record the current loss
                if batch_id % 100 == 0:
                    print("epoch: {}, batch: {}, loss is: {}, acc is {}".format(
                        epoch_id, batch_id, avg_loss.numpy(), avg_acc.numpy()))
                    iters.append(iter_count)
                    losses.append(avg_loss.numpy())
                    iter_count = iter_count + 100
                avg_loss.backward()
                optimizer.minimize(avg_loss)
                model.clear_gradients()
            # End of epoch: report the last batch and save a checkpoint
            print("epoch: {}, batch: {}, loss is: {}, acc is {}".format(
                epoch_id, batch_id, avg_loss.numpy(), avg_acc.numpy()))
            fluid.save_dygraph(model.state_dict(), './checkpoint/vgg_cifar_epoch{}'.format(epoch_id))
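Because `fc3` already applies softmax, the loss in the training loop is computed on class probabilities. The sketch below reproduces the two reported quantities, mean cross-entropy and top-1 accuracy, in plain Python on made-up numbers. The helper names and the toy probabilities are assumptions for illustration and are not taken from the training run.

```python
import math


def cross_entropy(probs, label):
    """Negative log-likelihood of the true class for one sample."""
    return -math.log(probs[label])


def batch_metrics(batch_probs, labels):
    """Return (mean loss, top-1 accuracy) for a batch of softmax outputs."""
    losses = [cross_entropy(p, y) for p, y in zip(batch_probs, labels)]
    hits = [max(range(len(p)), key=p.__getitem__) == y
            for p, y in zip(batch_probs, labels)]
    return sum(losses) / len(losses), sum(hits) / len(hits)


# Two toy samples over three classes (made-up probabilities).
batch_probs = [[0.7, 0.2, 0.1], [0.1, 0.3, 0.6]]
labels = [0, 1]
avg_loss, acc = batch_metrics(batch_probs, labels)
print(round(avg_loss, 4), acc)  # 0.7803 0.5
```

The first sample is classified correctly with a small loss; the second puts most of its probability on the wrong class, which raises the mean loss and halves the accuracy.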


The results are as follows:

The loss changes during training as follows:

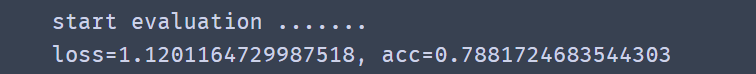

Testing the model

    # Evaluate the model
    with fluid.dygraph.guard(place):
        print('start evaluation .......')
        # Load the saved model parameters
        model = VGG()
        model_state_dict, _ = fluid.load_dygraph('checkpoint/vgg_cifar_epoch18')
        model.load_dict(model_state_dict)
        model.eval()

        acc_set = []
        avg_loss_set = []
        for batch_id, data in enumerate(test_reader()):
            # Image data as a float32 array of shape (N, 3, 32, 32)
            img_data = np.array([x[0] for x in data]).astype('float32').reshape(-1, 3, 32, 32)
            # Label data as an int64 array of shape (N, 1)
            label_data = np.array([x[1] for x in data]).astype('int64').reshape(-1, 1)
            # Convert to PaddlePaddle dygraph variables (tensors)
            img_data = fluid.dygraph.to_variable(img_data)
            label = fluid.dygraph.to_variable(label_data)

            prediction, acc = model(img_data, label)
            loss = fluid.layers.cross_entropy(input=prediction, label=label)
            avg_loss = fluid.layers.mean(loss)
            acc_set.append(float(acc.numpy()))
            avg_loss_set.append(float(avg_loss.numpy()))

        # Average the loss and accuracy over all batches
        acc_val_mean = np.array(acc_set).mean()
        avg_loss_val_mean = np.array(avg_loss_set).mean()
        print('loss={}, acc={}'.format(avg_loss_val_mean, acc_val_mean))
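One detail of the averaging above: 10000 test images do not divide evenly into batches of 128, so the last batch holds only 16 images yet counts as much as a full batch in a plain mean of per-batch values. The sketch below compares the plain mean with a sample-weighted mean on made-up per-batch accuracies. The numbers are illustrative only, and `weighted_mean` is a hypothetical helper.

```python
def weighted_mean(values, weights):
    """Average `values`, weighting each entry by the matching weight."""
    return sum(v * w for v, w in zip(values, weights)) / sum(weights)


# 10000 test images with batch size 128 -> 78 full batches plus one of 16.
batch_sizes = [128] * 78 + [16]
# Made-up per-batch accuracies: the small last batch happens to score lower.
batch_accs = [0.75] * 78 + [0.5]

plain = sum(batch_accs) / len(batch_accs)
weighted = weighted_mean(batch_accs, batch_sizes)
print(round(plain, 4), round(weighted, 4))  # 0.7468 0.7496
```

The difference is small here, but weighting by batch size gives the exact per-image accuracy when batch sizes differ.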


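Applying the model

Three cat and dog pictures were picked at random from Baidu, as shown in the figure below. These three images were fed into Model 2 for prediction, and Model 2 classified all three of them correctly.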
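Turning the model's 10-way softmax output into a readable prediction only requires an argmax and a label table. The sketch below assumes the standard CIFAR-10 label order (index 0 is airplane, index 9 is truck). The probability vector is made up for illustration and is not an output of the trained model, and `top1` is a hypothetical helper.

```python
# Standard CIFAR-10 label order (index -> class name).
CIFAR10_CLASSES = ["airplane", "automobile", "bird", "cat", "deer",
                   "dog", "frog", "horse", "ship", "truck"]


def top1(probs):
    """Return (class name, probability) of the most likely class."""
    idx = max(range(len(probs)), key=probs.__getitem__)
    return CIFAR10_CLASSES[idx], probs[idx]


# Made-up softmax output for one image, for illustration only.
probs = [0.01, 0.01, 0.02, 0.80, 0.03, 0.10, 0.01, 0.01, 0.005, 0.005]
print(top1(probs))  # ('cat', 0.8)
```

An image taken from the web would also need the same preprocessing as the training data before prediction: resizing to 32×32, scaling to the same value range, and arranging as a (1, 3, 32, 32) float32 array.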

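Conclusion

VGG16 is indeed able to extract fine details from images. Moreover, the model trained on the CIFAR dataset can be applied not only to cat and dog recognition but also to recognizing each of the other classes in the dataset.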