[Paper Reading] MSGAN: A Further Step Toward Solving Mode Collapse (Q. Mao et al., CVPR 2019)


I. About the Paper

Title: "Mode Seeking Generative Adversarial Networks for Diverse Image Synthesis"

This is a CVPR 2019 paper that I came across by chance, so I am recording it here as a close-reading note.

Citation: Qi Mao, Hsin-Ying Lee, Hung-Yu Tseng, et al. "Mode Seeking Generative Adversarial Networks for Diverse Image Synthesis." IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2019.

Project page: https://github.com/HelenMao/MSGAN/

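II. Reading Guide

To start, here is a sample of results from MSGAN (Mode Seeking Generative Adversarial Networks):

The paper's main contribution is improving sample diversity, or equivalently, handling mode collapse better. (Mode collapse: once the generator learns a feature that fools the discriminator, training quickly converges toward that feature, so the final outputs all look very similar.) The left side shows cGAN results and the right side shows MSGAN results. The cGAN outputs are clearly very similar to one another, while the MSGAN outputs are visibly more diverse, which is what we want from generated samples.

Here is the abstract: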

Most conditional generation tasks expect diverse outputs given a single conditional context. However, conditional generative adversarial networks (cGANs) often focus on the prior conditional information and ignore the input noise vectors, which contribute to the output variations. Recent attempts to resolve the mode collapse issue for cGANs are usually task-specific and computationally expensive. In this work, we propose a simple yet effective regularization term to address the mode collapse issue for cGANs. The proposed method explicitly maximizes the ratio of the distance between generated images with respect to the corresponding latent codes, thus encouraging the generators to explore more minor modes during training. This mode seeking regularization term is readily applicable to various conditional generation tasks without imposing training overhead or modifying the original network structures. We validate the proposed algorithm on three conditional image synthesis tasks including categorical generation, image-to-image translation, and text-to-image synthesis with different baseline models. Both qualitative and quantitative results demonstrate the effectiveness of the proposed regularization method for improving diversity without loss of quality.

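The main problem with cGANs is that they focus too heavily on the prior conditional information and ignore the input noise vector, even though that vector is what drives output diversity. The authors therefore propose a simple regularization term to address mode collapse in cGANs. The idea is to maximize the ratio of the distance between two generated images to the distance between their corresponding latent codes.

The authors apply the method to categorical generation, image-to-image translation, and text-to-image synthesis, and in all three it clearly improves the diversity of the generated samples.

III. Detailed Walkthrough

The introduction mainly explains that cGANs suffer from mode collapse to some degree. There are currently two main ways to address mode collapse in GANs: one works on the discriminator by introducing different divergence metrics and optimization procedures, and the other adds auxiliary networks such as multiple generators and extra encoders. Neither transfers well to cGANs, because the existing approaches are either computationally heavy or task-specific: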

There are two main approaches to address the mode collapse problem in GANs. A number of methods focus on discriminators by introducing different divergence metrics and optimization process. The other methods use auxiliary networks such as multiple generators and additional encoders. However, mode collapse is relatively less studied in cGANs. Some recent efforts have been made in the image-to-image translation task to improve diversity. Similar to the second category with the unconditional setting, these approaches introduce additional encoders and loss functions to encourage the one-to-one relationship between the output and the latent code. These methods either entail heavy computational overheads on training or require auxiliary networks that are often task-specific that cannot be easily extended to other frameworks.

The paper's main contributions are:

• We propose a simple yet effective mode seeking regularization method to address the mode collapse problem in cGANs. This regularization scheme can be readily extended into existing frameworks with marginal training overheads and modifications. (In short: a mode seeking regularization method for mode collapse in cGANs that is very easy to add to existing models.)

• We demonstrate the generalizability of the proposed regularization method on three different conditional generation tasks: categorical generation, image-to-image translation, and text-to-image synthesis. (In short: the regularizer's generality is validated on three different tasks.)

• Extensive experiments show that the proposed method can facilitate existing models from different tasks achieving better diversity without sacrificing visual quality of the generated images. (In short: the method improves the diversity of existing models while preserving image quality.)

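Next comes an introduction to cGAN. I have worked with cGAN before, so I will not go into detail here; see my earlier post: GAN Study Notes (13): Generating Handwritten Digits of Your Choice with conditionalGAN (TensorFlow implementation). The authors illustrate the cause of the problem with the figure below: latent vectors z are fed in, but because of mode collapse the cGAN only learns modes M2 and M4, so the outputs mostly fall into these two kinds of images.

There is already some work on mode collapse in cGANs, mainly imposing a bijection constraint between the generated image and the input noise, but this costs too much computation and generalizes poorly.

Now the core of the paper: the mode seeking GAN. Referring to Fig. 2, let the latent vector z live in the latent space Z and be mapped into the image space I. When mode collapse occurs, two latent codes z1 and z2 that are very close to each other are mapped to the same region, namely M2 in Fig. 2. The authors therefore propose a regularization term, called the mode seeking regularization term: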

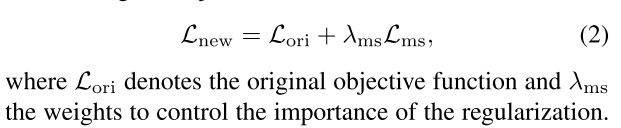

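In Fig. 2, this can be read simply as maximizing the ratio of the distance between (I1, I2) to the distance between (z1, z2). The objective function is then modified to: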

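Here the hyperparameter λ_ms controls the weight of the regularization term relative to the original objective.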
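Written out in the paper's notation, the regularization term and the modified objective take the following form. Here c is the conditional context, G is the generator, d_I and d_z are distance metrics in image space and latent space, and L_ori is the original objective of whichever baseline model is being regularized:

```latex
\mathcal{L}_{ms} = \max_{G} \left( \frac{d_{\mathbf{I}}\big(G(c, z_1),\, G(c, z_2)\big)}{d_{\mathbf{z}}(z_1, z_2)} \right),
\qquad
\mathcal{L}_{new} = \mathcal{L}_{ori} + \lambda_{ms}\, \mathcal{L}_{ms}
```

Intuitively, if two different latent codes produce nearly identical images, the numerator shrinks toward zero and the ratio becomes small, which is exactly the situation the generator is pushed away from.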

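Depending on the application, the first term (the original objective) can be designed differently, for example:

The authors also give a simple diagram showing how to add the regularization term to a model: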
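To make the idea concrete, here is a minimal, dependency-free sketch of how such a term could be computed for one pair of samples. Several things here are assumptions for illustration and not taken from the text above: images and latent codes are treated as flat lists of floats, both distances are mean absolute differences, and the ratio is maximized indirectly by minimizing its reciprocal (with a small `eps` for stability). The function names are hypothetical.

```python
def mean_abs_diff(a, b):
    """Mean absolute difference (an L1-style distance) between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def mode_seeking_loss(img1, img2, z1, z2, eps=1e-5):
    """Loss to MINIMIZE: the reciprocal of d_I(img1, img2) / d_z(z1, z2).

    img1 and img2 stand for the generator outputs G(c, z1) and G(c, z2)
    under the same condition c. A small ratio (similar images from
    different codes) gives a large loss.
    """
    d_z = mean_abs_diff(z1, z2)
    if d_z == 0:
        raise ValueError("z1 and z2 must be different latent codes")
    ratio = mean_abs_diff(img1, img2) / d_z
    return 1.0 / (ratio + eps)


def total_generator_loss(loss_ori, loss_ms, lambda_ms=1.0):
    """Original generator objective plus the weighted mode seeking term."""
    return loss_ori + lambda_ms * loss_ms


# Two different latent codes, same condition.
z1, z2 = [0.0, 0.0], [1.0, 1.0]

# Collapsed generator: both codes give the same image, so the loss is huge.
collapsed = mode_seeking_loss([0.5, 0.5], [0.5, 0.5], z1, z2)

# Diverse generator: the images differ, so the loss is small.
diverse = mode_seeking_loss([0.0, 1.0], [1.0, 0.0], z1, z2)
```

In a real training loop the same computation would run on batched tensors in the framework of the baseline model, and only the generator update would include the extra term; the network structure itself stays unchanged.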

Finally, the experiments. The authors use four metrics to assess the quality and diversity of the generated images:

FID (Fréchet Inception Distance). To evaluate the quality of the generated images, we use FID to measure the distance between the generated distribution and the real one through features extracted by Inception Network. Lower FID values indicate better quality of the generated images. (Lower is better.)

LPIPS. To evaluate diversity, we employ LPIPS. LPIPS measures the average feature distances between generated samples. Higher LPIPS score indicates better diversity among the generated images. (Higher is better.)

NDB (Number of Statistically-Different Bins) and JSD (Jensen-Shannon Divergence). To measure the similarity between the distribution between real images and generated one, we adopt two bin-based metrics, NDB and JSD. These metrics evaluate the extent of mode missing of generative models. Following [23], the training samples are first clustered using K-means into different bins which can be viewed as modes of the real data distribution. Then each generated sample is assigned to the bin of its nearest neighbor. We calculate the bin-proportions of the training samples and the synthesized samples to evaluate the difference between the generated distribution and the real data distribution. NDB score and JSD of the bin-proportion are then computed to measure the mode collapse. Lower NDB score and JSD mean the generated data distribution approaches the real data distribution better by fitting more modes. (Lower is better.)
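The bin-based procedure described above can be sketched in a few lines. This is only an illustration under stated assumptions: the K-means step is skipped and the bin centers are taken as given, samples are short tuples of floats, and the "statistically different" decision uses a two-proportion z-test with a threshold of 1.96 (roughly a 5% two-sided significance level), which is my assumption about the test and not something stated in the text. All function names are hypothetical.

```python
import math


def assign_to_bins(samples, centers):
    """Count how many samples fall into the bin of each nearest center."""
    counts = [0] * len(centers)
    for s in samples:
        nearest = min(
            range(len(centers)),
            key=lambda k: sum((a - b) ** 2 for a, b in zip(s, centers[k])),
        )
        counts[nearest] += 1
    return counts


def proportions(counts):
    """Turn bin counts into bin proportions."""
    total = sum(counts)
    return [c / total for c in counts]


def js_divergence(p, q):
    """Jensen-Shannon divergence (natural log) between two discrete distributions."""
    def kl(a, b):
        return sum(x * math.log(x / y) for x, y in zip(a, b) if x > 0)

    m = [(x + y) / 2 for x, y in zip(p, q)]
    return 0.5 * kl(p, m) + 0.5 * kl(q, m)


def ndb(train_counts, gen_counts, z_threshold=1.96):
    """Number of bins whose train/generated proportions differ significantly."""
    n_train, n_gen = sum(train_counts), sum(gen_counts)
    different = 0
    for ct, cg in zip(train_counts, gen_counts):
        pooled = (ct + cg) / (n_train + n_gen)
        se = math.sqrt(pooled * (1 - pooled) * (1 / n_train + 1 / n_gen))
        if se > 0 and abs(ct / n_train - cg / n_gen) / se > z_threshold:
            different += 1
    return different


# Two modes in the training data; the "generator" only covers the first one.
centers = [(0.0, 0.0), (10.0, 10.0)]
train = [(0.0, 1.0), (1.0, 0.0), (9.0, 10.0), (10.0, 9.0)]
generated = [(0.0, 0.0), (1.0, 1.0), (0.0, 1.0), (1.0, 0.0)]

train_counts = assign_to_bins(train, centers)
gen_counts = assign_to_bins(generated, centers)
jsd = js_divergence(proportions(train_counts), proportions(gen_counts))
```

With only four samples per set the z-test has little power, so NDB is more meaningful on larger counts, for example `ndb([50, 50], [100, 0])`, where both bins are flagged as different.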

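I will not go through the exact numbers here; instead, let's look at the result figures for the three kinds of models.

First, image-to-image translation. Here is the comparison with pix2pix:

And the comparison with DRIT:

Finally, text-to-image synthesis, compared mainly against StackGAN++:

The results are impressive. If we linearly interpolate the input latent vector z, the difference between the baseline and its MSGAN counterpart is also clearly visible: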
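For readers unfamiliar with latent interpolation, here is a small sketch of how the intermediate codes could be produced. Latent codes are assumed to be plain lists of floats and `interpolate_latents` is a hypothetical helper; each interpolated code would then be passed to the generator together with the same condition.

```python
def interpolate_latents(z_start, z_end, steps):
    """Return `steps` latent codes evenly spaced on the line from z_start to z_end."""
    if steps < 2:
        raise ValueError("steps must be at least 2")
    if len(z_start) != len(z_end):
        raise ValueError("latent codes must have the same length")
    codes = []
    for i in range(steps):
        t = i / (steps - 1)  # goes from 0.0 to 1.0 inclusive
        codes.append([(1 - t) * a + t * b for a, b in zip(z_start, z_end)])
    return codes


path = interpolate_latents([0.0, 0.0], [1.0, 2.0], steps=3)
```

A collapsed generator tends to produce almost the same image along the whole path, while a generator that actually uses z changes its output gradually from one end to the other.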

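IV. Summary

1. There is a lot of work on mode collapse. I have covered DRAGAN and WGAN in earlier posts, but those models (DRAGAN, and WGAN in its gradient-penalty variant, WGAN-GP) add a gradient penalty during training to suppress overly sharp gradients. This paper instead introduces a regularization term between the input vectors and the generated results, so that similar input vectors do not always land in the same region of the output space. The two lines of work are therefore different in nature.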