
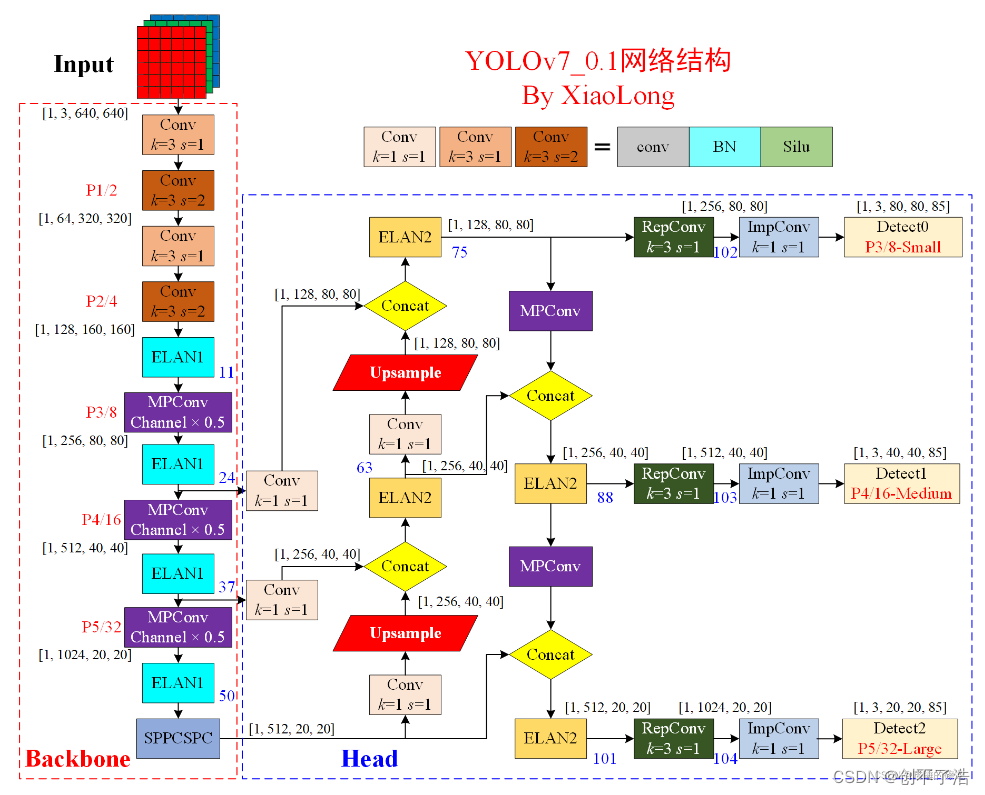

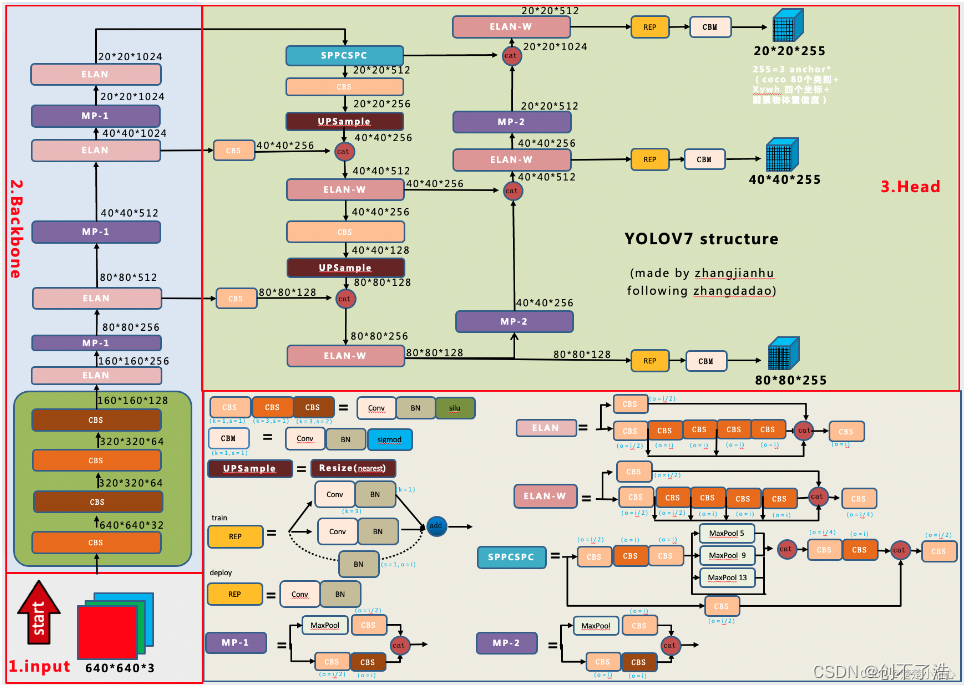

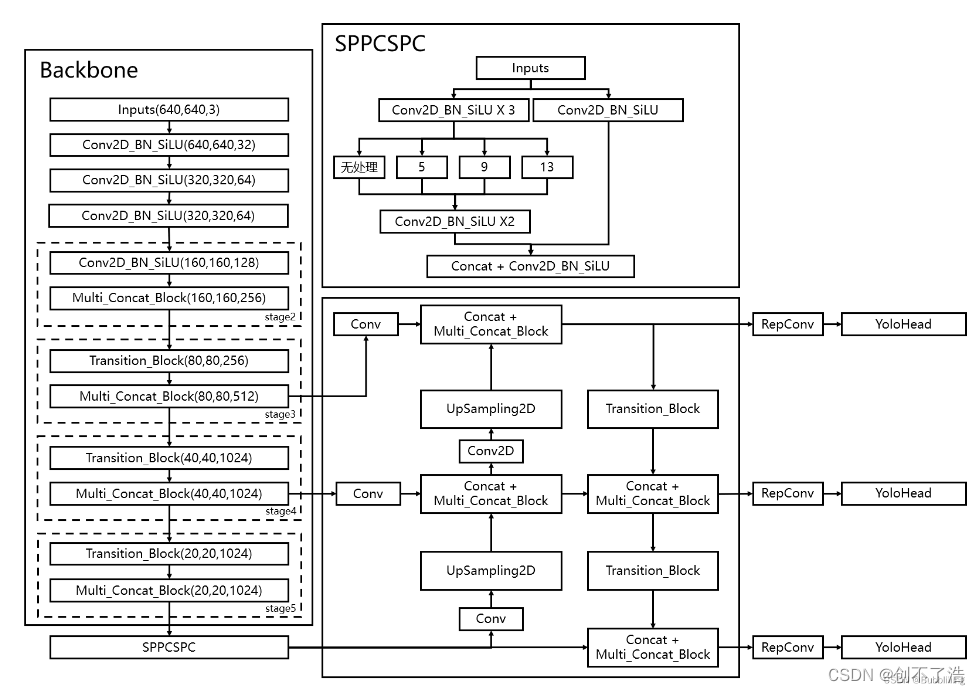

YOLOv7 Network Structure Explained


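Output of yolo.py

The `arguments` column in this output differs from the args in the yaml file in one way only: each Conv gets an extra first entry, its number of input channels.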

YOLOR v0.1-112-g55b90e1 torch 1.7.0 CUDA:0 (Quadro RTX 4000, 8191.6875MB)

| | from | n | params | module | arguments |
|---|---|---|---|---|---|
| 0 | -1 | 1 | 928 | models.common.Conv | [3, 32, 3, 1] |
| 1 | -1 | 1 | 18560 | models.common.Conv | [32, 64, 3, 2] |
| 2 | -1 | 1 | 36992 | models.common.Conv | [64, 64, 3, 1] |
| 3 | -1 | 1 | 73984 | models.common.Conv | [64, 128, 3, 2] |
| 4 | -1 | 1 | 8320 | models.common.Conv | [128, 64, 1, 1] |
| 5 | -2 | 1 | 8320 | models.common.Conv | [128, 64, 1, 1] |
| 6 | -1 | 1 | 36992 | models.common.Conv | [64, 64, 3, 1] |
| 7 | -1 | 1 | 36992 | models.common.Conv | [64, 64, 3, 1] |
| 8 | -1 | 1 | 36992 | models.common.Conv | [64, 64, 3, 1] |
| 9 | -1 | 1 | 36992 | models.common.Conv | [64, 64, 3, 1] |
| 10 | [-1, -3, -5, -6] | 1 | 0 | models.common.Concat | [1] |
| 11 | -1 | 1 | 66048 | models.common.Conv | [256, 256, 1, 1] |
| 12 | -1 | 1 | 0 | models.common.MP | [] |
| 13 | -1 | 1 | 33024 | models.common.Conv | [256, 128, 1, 1] |
| 14 | -3 | 1 | 33024 | models.common.Conv | [256, 128, 1, 1] |
| 15 | -1 | 1 | 147712 | models.common.Conv | [128, 128, 3, 2] |
| 16 | [-1, -3] | 1 | 0 | models.common.Concat | [1] |
| 17 | -1 | 1 | 33024 | models.common.Conv | [256, 128, 1, 1] |
| 18 | -2 | 1 | 33024 | models.common.Conv | [256, 128, 1, 1] |
| 19 | -1 | 1 | 147712 | models.common.Conv | [128, 128, 3, 1] |
| 20 | -1 | 1 | 147712 | models.common.Conv | [128, 128, 3, 1] |
| 21 | -1 | 1 | 147712 | models.common.Conv | [128, 128, 3, 1] |
| 22 | -1 | 1 | 147712 | models.common.Conv | [128, 128, 3, 1] |
| 23 | [-1, -3, -5, -6] | 1 | 0 | models.common.Concat | [1] |
| 24 | -1 | 1 | 263168 | models.common.Conv | [512, 512, 1, 1] |
| 25 | -1 | 1 | 0 | models.common.MP | [] |
| 26 | -1 | 1 | 131584 | models.common.Conv | [512, 256, 1, 1] |
| 27 | -3 | 1 | 131584 | models.common.Conv | [512, 256, 1, 1] |
| 28 | -1 | 1 | 590336 | models.common.Conv | [256, 256, 3, 2] |
| 29 | [-1, -3] | 1 | 0 | models.common.Concat | [1] |
| 30 | -1 | 1 | 131584 | models.common.Conv | [512, 256, 1, 1] |
| 31 | -2 | 1 | 131584 | models.common.Conv | [512, 256, 1, 1] |
| 32 | -1 | 1 | 590336 | models.common.Conv | [256, 256, 3, 1] |
| 33 | -1 | 1 | 590336 | models.common.Conv | [256, 256, 3, 1] |
| 34 | -1 | 1 | 590336 | models.common.Conv | [256, 256, 3, 1] |
| 35 | -1 | 1 | 590336 | models.common.Conv | [256, 256, 3, 1] |
| 36 | [-1, -3, -5, -6] | 1 | 0 | models.common.Concat | [1] |
| 37 | -1 | 1 | 1050624 | models.common.Conv | [1024, 1024, 1, 1] |
| 38 | -1 | 1 | 0 | models.common.MP | [] |
| 39 | -1 | 1 | 525312 | models.common.Conv | [1024, 512, 1, 1] |
| 40 | -3 | 1 | 525312 | models.common.Conv | [1024, 512, 1, 1] |
| 41 | -1 | 1 | 2360320 | models.common.Conv | [512, 512, 3, 2] |
| 42 | [-1, -3] | 1 | 0 | models.common.Concat | [1] |
| 43 | -1 | 1 | 262656 | models.common.Conv | [1024, 256, 1, 1] |
| 44 | -2 | 1 | 262656 | models.common.Conv | [1024, 256, 1, 1] |
| 45 | -1 | 1 | 590336 | models.common.Conv | [256, 256, 3, 1] |
| 46 | -1 | 1 | 590336 | models.common.Conv | [256, 256, 3, 1] |
| 47 | -1 | 1 | 590336 | models.common.Conv | [256, 256, 3, 1] |
| 48 | -1 | 1 | 590336 | models.common.Conv | [256, 256, 3, 1] |
| 49 | [-1, -3, -5, -6] | 1 | 0 | models.common.Concat | [1] |
| 50 | -1 | 1 | 1050624 | models.common.Conv | [1024, 1024, 1, 1] |
| 51 | -1 | 1 | 7609344 | models.common.SPPCSPC | [1024, 512, 1] |
| 52 | -1 | 1 | 131584 | models.common.Conv | [512, 256, 1, 1] |
| 53 | -1 | 1 | 0 | torch.nn.modules.upsampling.Upsample | [None, 2, 'nearest'] |
| 54 | 37 | 1 | 262656 | models.common.Conv | [1024, 256, 1, 1] |
| 55 | [-1, -2] | 1 | 0 | models.common.Concat | [1] |
| 56 | -1 | 1 | 131584 | models.common.Conv | [512, 256, 1, 1] |
| 57 | -2 | 1 | 131584 | models.common.Conv | [512, 256, 1, 1] |
| 58 | -1 | 1 | 295168 | models.common.Conv | [256, 128, 3, 1] |
| 59 | -1 | 1 | 147712 | models.common.Conv | [128, 128, 3, 1] |
| 60 | -1 | 1 | 147712 | models.common.Conv | [128, 128, 3, 1] |
| 61 | -1 | 1 | 147712 | models.common.Conv | [128, 128, 3, 1] |
| 62 | [-1, -2, -3, -4, -5, -6] | 1 | 0 | models.common.Concat | [1] |
| 63 | -1 | 1 | 262656 | models.common.Conv | [1024, 256, 1, 1] |
| 64 | -1 | 1 | 33024 | models.common.Conv | [256, 128, 1, 1] |
| 65 | -1 | 1 | 0 | torch.nn.modules.upsampling.Upsample | [None, 2, 'nearest'] |
| 66 | 24 | 1 | 65792 | models.common.Conv | [512, 128, 1, 1] |
| 67 | [-1, -2] | 1 | 0 | models.common.Concat | [1] |
| 68 | -1 | 1 | 33024 | models.common.Conv | [256, 128, 1, 1] |
| 69 | -2 | 1 | 33024 | models.common.Conv | [256, 128, 1, 1] |
| 70 | -1 | 1 | 73856 | models.common.Conv | [128, 64, 3, 1] |
| 71 | -1 | 1 | 36992 | models.common.Conv | [64, 64, 3, 1] |
| 72 | -1 | 1 | 36992 | models.common.Conv | [64, 64, 3, 1] |
| 73 | -1 | 1 | 36992 | models.common.Conv | [64, 64, 3, 1] |
| 74 | [-1, -2, -3, -4, -5, -6] | 1 | 0 | models.common.Concat | [1] |
| 75 | -1 | 1 | 65792 | models.common.Conv | [512, 128, 1, 1] |
| 76 | -1 | 1 | 0 | models.common.MP | [] |
| 77 | -1 | 1 | 16640 | models.common.Conv | [128, 128, 1, 1] |
| 78 | -3 | 1 | 16640 | models.common.Conv | [128, 128, 1, 1] |
| 79 | -1 | 1 | 147712 | models.common.Conv | [128, 128, 3, 2] |
| 80 | [-1, -3, 63] | 1 | 0 | models.common.Concat | [1] |
| 81 | -1 | 1 | 131584 | models.common.Conv | [512, 256, 1, 1] |
| 82 | -2 | 1 | 131584 | models.common.Conv | [512, 256, 1, 1] |
| 83 | -1 | 1 | 295168 | models.common.Conv | [256, 128, 3, 1] |
| 84 | -1 | 1 | 147712 | models.common.Conv | [128, 128, 3, 1] |
| 85 | -1 | 1 | 147712 | models.common.Conv | [128, 128, 3, 1] |
| 86 | -1 | 1 | 147712 | models.common.Conv | [128, 128, 3, 1] |
| 87 | [-1, -2, -3, -4, -5, -6] | 1 | 0 | models.common.Concat | [1] |
| 88 | -1 | 1 | 262656 | models.common.Conv | [1024, 256, 1, 1] |
| 89 | -1 | 1 | 0 | models.common.MP | [] |
| 90 | -1 | 1 | 66048 | models.common.Conv | [256, 256, 1, 1] |
| 91 | -3 | 1 | 66048 | models.common.Conv | [256, 256, 1, 1] |
| 92 | -1 | 1 | 590336 | models.common.Conv | [256, 256, 3, 2] |
| 93 | [-1, -3, 51] | 1 | 0 | models.common.Concat | [1] |
| 94 | -1 | 1 | 525312 | models.common.Conv | [1024, 512, 1, 1] |
| 95 | -2 | 1 | 525312 | models.common.Conv | [1024, 512, 1, 1] |
| 96 | -1 | 1 | 1180160 | models.common.Conv | [512, 256, 3, 1] |
| 97 | -1 | 1 | 590336 | models.common.Conv | [256, 256, 3, 1] |
| 98 | -1 | 1 | 590336 | models.common.Conv | [256, 256, 3, 1] |
| 99 | -1 | 1 | 590336 | models.common.Conv | [256, 256, 3, 1] |
| 100 | [-1, -2, -3, -4, -5, -6] | 1 | 0 | models.common.Concat | [1] |
| 101 | -1 | 1 | 1049600 | models.common.Conv | [2048, 512, 1, 1] |
| 102 | 75 | 1 | 328704 | models.common.RepConv | [128, 256, 3, 1] |
| 103 | 88 | 1 | 1312768 | models.common.RepConv | [256, 512, 3, 1] |
| 104 | 101 | 1 | 5246976 | models.common.RepConv | [512, 1024, 3, 1] |
| 105 | [102, 103, 104] | 1 | 39550 | IDetect | [2, [[12, 16, 19, 36, 40, 28], [36, 75, 76, 55, 72, 146], [142, 110, 192, 243, 459, 401]], [256, 512, 1024]] |

Model Summary: 415 layers, 37201950 parameters, 37201950 gradients, 105.1 GFLOPS
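You can check the `params` column by hand. The following is a small sketch that assumes each `Conv` is a bias-free `nn.Conv2d` followed by `nn.BatchNorm2d`, as in the Conv class shown later. Under that assumption, the trainable parameters are c1·c2·k·k kernel weights plus 2·c2 BN weights and biases. BN running statistics are buffers, not parameters. `conv_params` is a hypothetical helper, and the (arguments, params) pairs are copied from the table.

```python
def conv_params(c1: int, c2: int, k: int, groups: int = 1) -> int:
    """Trainable parameters of Conv2d(bias=False) followed by BatchNorm2d."""
    conv_weights = (c1 // groups) * c2 * k * k  # kernel weights, no conv bias
    bn_affine = 2 * c2                          # BN gamma and beta, one pair per output channel
    return conv_weights + bn_affine


# (arguments, params) pairs copied from the yolo.py output
rows = [
    ([3, 32, 3, 1], 928),
    ([32, 64, 3, 2], 18560),
    ([128, 64, 1, 1], 8320),
    ([1024, 1024, 1, 1], 1050624),
    ([512, 256, 3, 1], 1180160),
    ([2048, 512, 1, 1], 1049600),
]

for (c1, c2, k, s), expected in rows:
    assert conv_params(c1, c2, k) == expected
    print(f"Conv{[c1, c2, k, s]}: {conv_params(c1, c2, k)} params")
```

Stride does not appear in the formula, which is why a stride-1 and a stride-2 Conv with the same channels and kernel have the same count.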


Overall diagram

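The overall diagrams are shown in the three figures:

- The first is annotated with the yaml layer indices.
- The second shows the concrete output shapes.
- The third, by B导 (the author of the third reference), is more concise.

Reading the three figures together with the yaml file makes the structure fairly easy to understand.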

yolov7.yaml

Each row has the form `[from, number, module, args]`. In `[-1, 1, Conv, [32, 3, 1]]`, the args `[32, 3, 1]` mean 32 output channels, a 3×3 kernel and stride 1.

Read the overall network diagram side by side with the yaml file.
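The args also determine the spatial size. `[32, 3, 1]` keeps 640×640, while `[64, 3, 2]` halves it. The following is a minimal sketch of the standard convolution output-size formula. It assumes "same"-style padding p = k // 2, which YOLOv7's `autopad` helper computes for an integer kernel. `conv_out` and this local `autopad` are illustrative helpers, not the repo's code.

```python
def autopad(k: int) -> int:
    # 'same'-style padding for an odd integer kernel size
    return k // 2


def conv_out(size: int, k: int, s: int) -> int:
    # standard conv output size: floor((size + 2p - k) / s) + 1
    p = autopad(k)
    return (size + 2 * p - k) // s + 1


# backbone stem rows from yolov7.yaml; args are [c2, k, s], input is 640*640*3
stem = [[32, 3, 1], [64, 3, 2], [64, 3, 1], [128, 3, 2]]
h = 640
for c2, k, s in stem:
    h = conv_out(h, k, s)
    print(f"Conv[{c2}, {k}, {s}] -> {h}*{h}*{c2}")
```

The printed shapes follow the yaml comments: 640*640*32, 320*320*64, 320*320*64 and 160*160*128.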

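Notes:

- MP-1 (backbone) and MP-2 (head) differ. In the backbone, MP-1 halves the spatial size and keeps the channel count. In the head, MP-2 halves the spatial size and doubles the channel count.
- ELAN-1 (backbone) and ELAN-2 (head) also differ. ELAN-1 doubles the channel count, except for the last one (layers 43-50), which keeps 1024 channels. ELAN-2 halves the channel count.
- My guess is that the backbone ELAN widens the channels to extract features better. MP-1 halves the spatial size while keeping the channel count: each of its two branches uses half of the input channels. It can therefore be seen as an improved downsampling block.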

```yaml
# parameters
nc: 2  # number of classes
depth_multiple: 1.0  # model depth multiple
width_multiple: 1.0  # layer channel multiple

# anchors
anchors:
  - [12,16, 19,36, 40,28]  # P3/8
  - [36,75, 76,55, 72,146]  # P4/16
  - [142,110, 192,243, 459,401]  # P5/32

# yolov7 backbone
backbone:
  # [from, number, module, args]  input 640*640*3
  [[-1, 1, Conv, [32, 3, 1]],  # 0 640*640*32
   [-1, 1, Conv, [64, 3, 2]],  # 1-P1/2 320*320*64
   [-1, 1, Conv, [64, 3, 1]],  # 320*320*64
   [-1, 1, Conv, [128, 3, 2]],  # 3-P2/4 160*160*128
   # ELAN1
   [-1, 1, Conv, [64, 1, 1]],
   [-2, 1, Conv, [64, 1, 1]],
   [-1, 1, Conv, [64, 3, 1]],
   [-1, 1, Conv, [64, 3, 1]],
   [-1, 1, Conv, [64, 3, 1]],
   [-1, 1, Conv, [64, 3, 1]],
   [[-1, -3, -5, -6], 1, Concat, [1]],
   [-1, 1, Conv, [256, 1, 1]],  # 11 160*160*256
   # MPConv
   [-1, 1, MP, []],
   [-1, 1, Conv, [128, 1, 1]],
   [-3, 1, Conv, [128, 1, 1]],
   [-1, 1, Conv, [128, 3, 2]],
   [[-1, -3], 1, Concat, [1]],  # 16-P3/8 80*80*256
   # ELAN1
   [-1, 1, Conv, [128, 1, 1]],
   [-2, 1, Conv, [128, 1, 1]],
   [-1, 1, Conv, [128, 3, 1]],
   [-1, 1, Conv, [128, 3, 1]],
   [-1, 1, Conv, [128, 3, 1]],
   [-1, 1, Conv, [128, 3, 1]],
   [[-1, -3, -5, -6], 1, Concat, [1]],
   [-1, 1, Conv, [512, 1, 1]],  # 24 80*80*512
   # MPConv
   [-1, 1, MP, []],
   [-1, 1, Conv, [256, 1, 1]],
   [-3, 1, Conv, [256, 1, 1]],
   [-1, 1, Conv, [256, 3, 2]],
   [[-1, -3], 1, Concat, [1]],  # 29-P4/16 40*40*512
   # ELAN1
   [-1, 1, Conv, [256, 1, 1]],
   [-2, 1, Conv, [256, 1, 1]],
   [-1, 1, Conv, [256, 3, 1]],
   [-1, 1, Conv, [256, 3, 1]],
   [-1, 1, Conv, [256, 3, 1]],
   [-1, 1, Conv, [256, 3, 1]],
   [[-1, -3, -5, -6], 1, Concat, [1]],
   [-1, 1, Conv, [1024, 1, 1]],  # 37 40*40*1024
   # MPConv
   [-1, 1, MP, []],
   [-1, 1, Conv, [512, 1, 1]],
   [-3, 1, Conv, [512, 1, 1]],
   [-1, 1, Conv, [512, 3, 2]],
   [[-1, -3], 1, Concat, [1]],  # 42-P5/32 20*20*1024
   # ELAN1
   [-1, 1, Conv, [256, 1, 1]],
   [-2, 1, Conv, [256, 1, 1]],
   [-1, 1, Conv, [256, 3, 1]],
   [-1, 1, Conv, [256, 3, 1]],
   [-1, 1, Conv, [256, 3, 1]],
   [-1, 1, Conv, [256, 3, 1]],
   [[-1, -3, -5, -6], 1, Concat, [1]],
   [-1, 1, Conv, [1024, 1, 1]],  # 50 20*20*1024
  ]

# yolov7 head
head:
  [[-1, 1, SPPCSPC, [512]],  # 51 20*20*512
   [-1, 1, Conv, [256, 1, 1]],  # 20*20*256
   [-1, 1, nn.Upsample, [None, 2, 'nearest']],
   [37, 1, Conv, [256, 1, 1]],  # route backbone P4 40*40*1024->40*40*256
   [[-1, -2], 1, Concat, [1]],  # 40*40*512
   # ELAN2 (note: the head's ELAN differs from the backbone's)
   [-1, 1, Conv, [256, 1, 1]],
   [-2, 1, Conv, [256, 1, 1]],
   [-1, 1, Conv, [128, 3, 1]],
   [-1, 1, Conv, [128, 3, 1]],
   [-1, 1, Conv, [128, 3, 1]],
   [-1, 1, Conv, [128, 3, 1]],
   [[-1, -2, -3, -4, -5, -6], 1, Concat, [1]],
   [-1, 1, Conv, [256, 1, 1]],  # 63 40*40*256
   [-1, 1, Conv, [128, 1, 1]],  # 40*40*128
   [-1, 1, nn.Upsample, [None, 2, 'nearest']],  # 80*80*128
   [24, 1, Conv, [128, 1, 1]],  # route backbone P3 80*80*512->80*80*128
   [[-1, -2], 1, Concat, [1]],  # 80*80*256
   # ELAN2
   [-1, 1, Conv, [128, 1, 1]],
   [-2, 1, Conv, [128, 1, 1]],
   [-1, 1, Conv, [64, 3, 1]],
   [-1, 1, Conv, [64, 3, 1]],
   [-1, 1, Conv, [64, 3, 1]],
   [-1, 1, Conv, [64, 3, 1]],
   [[-1, -2, -3, -4, -5, -6], 1, Concat, [1]],
   [-1, 1, Conv, [128, 1, 1]],  # 75 80*80*128
   # MPConv Channel × 2
   [-1, 1, MP, []],
   [-1, 1, Conv, [128, 1, 1]],
   [-3, 1, Conv, [128, 1, 1]],
   [-1, 1, Conv, [128, 3, 2]],
   [[-1, -3, 63], 1, Concat, [1]],  # 40*40*512
   # ELAN2
   [-1, 1, Conv, [256, 1, 1]],
   [-2, 1, Conv, [256, 1, 1]],
   [-1, 1, Conv, [128, 3, 1]],
   [-1, 1, Conv, [128, 3, 1]],
   [-1, 1, Conv, [128, 3, 1]],
   [-1, 1, Conv, [128, 3, 1]],
   [[-1, -2, -3, -4, -5, -6], 1, Concat, [1]],
   [-1, 1, Conv, [256, 1, 1]],  # 88 40*40*256
   # MPConv Channel × 2
   [-1, 1, MP, []],
   [-1, 1, Conv, [256, 1, 1]],
   [-3, 1, Conv, [256, 1, 1]],
   [-1, 1, Conv, [256, 3, 2]],
   [[-1, -3, 51], 1, Concat, [1]],  # 20*20*1024
   # ELAN2
   [-1, 1, Conv, [512, 1, 1]],
   [-2, 1, Conv, [512, 1, 1]],
   [-1, 1, Conv, [256, 3, 1]],
   [-1, 1, Conv, [256, 3, 1]],
   [-1, 1, Conv, [256, 3, 1]],
   [-1, 1, Conv, [256, 3, 1]],
   [[-1, -2, -3, -4, -5, -6], 1, Concat, [1]],
   [-1, 1, Conv, [512, 1, 1]],  # 101 20*20*512
   [75, 1, RepConv, [256, 3, 1]],  # 102 80*80*256
   [88, 1, RepConv, [512, 3, 1]],  # 103 40*40*512
   [101, 1, RepConv, [1024, 3, 1]],  # 104 20*20*1024
   [[102,103,104], 1, IDetect, [nc, anchors]],  # Detect(P3, P4, P5)
  ]
```
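The three RepConv outputs (P3/8, P4/16 and P5/32) feed IDetect. The following is a sketch of the resulting per-image output shapes for a 640×640 input. It makes two assumptions. First, there are 3 anchors per level, as in the `anchors` list. Second, each anchor predicts nc + 5 values (4 box terms, 1 objectness score and nc class scores), laid out as (anchors, grid_y, grid_x, nc + 5), which is the usual YOLO layout. `head_shapes` is a hypothetical helper.

```python
def head_shapes(img_size: int = 640, nc: int = 2, na: int = 3, strides=(8, 16, 32)):
    """Per-image output shape of each detection level plus the total prediction count."""
    no = nc + 5  # x, y, w, h, objectness + one score per class
    shapes = [(na, img_size // s, img_size // s, no) for s in strides]
    n_pred = sum(a * gy * gx for a, gy, gx, _ in shapes)
    return shapes, n_pred


shapes, n_pred = head_shapes()
for name, shp in zip(("P3", "P4", "P5"), shapes):
    print(name, shp)  # (anchors, grid_y, grid_x, nc + 5)
print("predictions per image:", n_pred)
```

With nc = 2, the output 1×1 conv of each level has 3 × 7 = 21 channels, and the three grids are 80×80, 40×40 and 20×20.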


Components

CBS module

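In the yaml file, `Conv` stands for convolution + batch normalization + activation, i.e. a CBS block.

As the figure shows, the CBS module consists of three layers: a Conv layer (convolution), a BN layer (batch normalization) and a SiLU activation. SiLU is the special case of the Swish activation with β = 1, i.e. SiLU(x) = x · sigmoid(x).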
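The following is a plain-Python sketch of the SiLU/Swish relationship. It uses only the standard library, so it does not depend on `torch.nn.SiLU`. `swish` and `silu` are illustrative helpers.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def swish(x: float, beta: float = 1.0) -> float:
    # Swish: x * sigmoid(beta * x)
    return x * sigmoid(beta * x)


def silu(x: float) -> float:
    # SiLU is Swish with beta fixed to 1
    return x * sigmoid(x)


for x in (-2.0, 0.0, 1.0, 3.0):
    assert math.isclose(silu(x), swish(x, beta=1.0))
    print(f"silu({x}) = {silu(x):.4f}")
```

Unlike ReLU, SiLU is smooth and lets small negative values through, which is one common motivation for using it in CBS blocks.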

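```python
import torch.nn as nn


def autopad(k, p=None):  # kernel, padding
    # Default to 'same' padding when no padding is given
    if p is None:
        p = k // 2 if isinstance(k, int) else [x // 2 for x in k]
    return p


class Conv(nn.Module):
    # Standard convolution: Conv2d -> BatchNorm2d -> SiLU (the CBS block)
    def __init__(self, c1, c2, k=1, s=1, p=None, g=1, act=True):  # ch_in, ch_out, kernel, stride, padding, groups
        super(Conv, self).__init__()
        self.conv = nn.Conv2d(c1, c2, k, s, autopad(k, p), groups=g, bias=False)
        self.bn = nn.BatchNorm2d(c2)
        self.act = nn.SiLU() if act is True else (act if isinstance(act, nn.Module) else nn.Identity())

    def forward(self, x):
        return self.act(self.bn(self.conv(x)))

    def fuseforward(self, x):
        # Used after BN has been folded into self.conv for inference
        return self.act(self.conv(x))
```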
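`fuseforward` skips `self.bn`, so it only matches `forward` once the BatchNorm has been folded into the convolution's weight and bias. In the YOLOv7 repo this happens when the model is fused for inference. The following sketch checks that algebra for a single output channel with a scalar weight. `fold_bn`, `conv_then_bn` and all the numbers are hypothetical. In a real layer, the same per-channel scale multiplies every kernel weight of that output channel.

```python
import math


def fold_bn(w, b, gamma, beta, mean, var, eps=1e-5):
    """Fold BN(y) = gamma * (y - mean) / sqrt(var + eps) + beta into y = w * x + b."""
    scale = gamma / math.sqrt(var + eps)
    return w * scale, (b - mean) * scale + beta


def conv_then_bn(x, w, b, gamma, beta, mean, var, eps=1e-5):
    y = w * x + b  # the "conv" for one input value and one output channel
    return gamma * (y - mean) / math.sqrt(var + eps) + beta


# hypothetical trained values; the conv has bias=False, so b starts at 0
w, b = 0.8, 0.0
gamma, beta, mean, var = 1.5, -0.2, 0.3, 0.04

w_f, b_f = fold_bn(w, b, gamma, beta, mean, var)
for x in (-1.0, 0.0, 2.5):
    unfused = conv_then_bn(x, w, b, gamma, beta, mean, var)
    fused = w_f * x + b_f  # what fuseforward computes before the activation
    assert math.isclose(unfused, fused, rel_tol=1e-9, abs_tol=1e-12)
```

The fused conv gains a bias term even though the original conv was created with `bias=False`.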


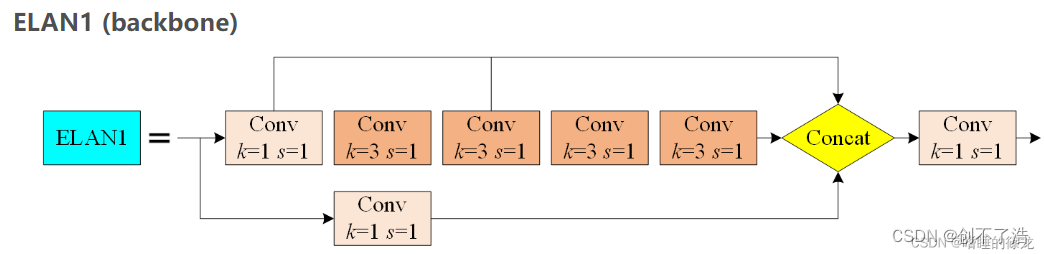

ELAN1

A block built from Conv units. In the backbone it doubles the channel count.

```yaml
#[-1, 1, Conv, [128, 3, 2]],  # 3-P2/4 160*160*128
# ELAN1
[-1, 1, Conv, [64, 1, 1]],
[-2, 1, Conv, [64, 1, 1]],
[-1, 1, Conv, [64, 3, 1]],
[-1, 1, Conv, [64, 3, 1]],
[-1, 1, Conv, [64, 3, 1]],
[-1, 1, Conv, [64, 3, 1]],
[[-1, -3, -5, -6], 1, Concat, [1]],
[-1, 1, Conv, [256, 1, 1]],  # 11 160*160*256
```
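In these rows, `from` is relative: -1 is the previous layer, -2 the one before it, and so on. Concat stacks the channels of its inputs. The following is a minimal sketch of that bookkeeping in the spirit of `parse_model` in yolo.py, reduced to `Conv` and `Concat`. `trace_channels` is hypothetical and is not the repo's function. Its `in` value for each Conv should match the first argument that yolo.py prints.

```python
def trace_channels(layers, ch_in, start_index):
    """Return (layer index, absolute sources, input channels, output channels) per yaml row."""
    ch = {start_index - 1: ch_in}  # output of the layer just before the block
    rows = []
    for i, (frm, _n, module, args) in enumerate(layers, start=start_index):
        srcs = frm if isinstance(frm, list) else [frm]
        srcs = [i + f if f < 0 else f for f in srcs]  # relative -> absolute layer index
        c_in = sum(ch[j] for j in srcs)
        if module == "Conv":
            ch[i] = args[0]  # args = [c2, k, s]
        elif module == "Concat":
            ch[i] = c_in     # concatenation along the channel dimension
        else:
            raise ValueError(f"unsupported module: {module}")
        rows.append((i, srcs, c_in, ch[i]))
    return rows


elan1 = [
    [-1, 1, "Conv", [64, 1, 1]],
    [-2, 1, "Conv", [64, 1, 1]],
    [-1, 1, "Conv", [64, 3, 1]],
    [-1, 1, "Conv", [64, 3, 1]],
    [-1, 1, "Conv", [64, 3, 1]],
    [-1, 1, "Conv", [64, 3, 1]],
    [[-1, -3, -5, -6], 1, "Concat", [1]],
    [-1, 1, "Conv", [256, 1, 1]],
]

# layer 3 outputs 160*160*128, so this ELAN1 block starts at index 4
for i, srcs, c_in, c_out in trace_channels(elan1, ch_in=128, start_index=4):
    print(f"{i:>2} from {srcs} in={c_in} out={c_out}")
```

Layers 4 and 5 both read layer 3 (128 → 64 each). The Concat at layer 10 gathers layers 9, 7, 5 and 4 into 4 × 64 = 256 channels, which matches the `[256, 256, 1, 1]` printed for layer 11.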


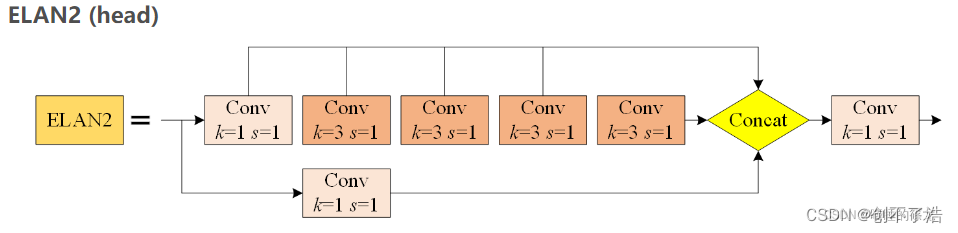

ELAN2

A block built from Conv units. In the head it halves the channel count.

```yaml
# [[-1, -2], 1, Concat, [1]],  # 55 40*40*512
# ELAN2 (note: the head's ELAN differs from the backbone's)
[-1, 1, Conv, [256, 1, 1]],
[-2, 1, Conv, [256, 1, 1]],
[-1, 1, Conv, [128, 3, 1]],
[-1, 1, Conv, [128, 3, 1]],
[-1, 1, Conv, [128, 3, 1]],
[-1, 1, Conv, [128, 3, 1]],
[[-1, -2, -3, -4, -5, -6], 1, Concat, [1]],
[-1, 1, Conv, [256, 1, 1]],  # 63 40*40*256
```
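The two ELAN variants differ in what they concatenate. ELAN-1 takes four equal-width branches (`[-1, -3, -5, -6]`). ELAN-2 takes both 1×1 branches plus all four 3×3 outputs (`[-1, -2, -3, -4, -5, -6]`). The following sketch computes the concat width for each of the four head ELAN-2 blocks. The channel numbers are read off yolov7.yaml. `elan1_concat` and `elan2_concat` are hypothetical helpers.

```python
def elan1_concat(c_b: int) -> int:
    # backbone Concat [-1, -3, -5, -6]: both 1x1 branches plus 3x3 outputs #2 and #4,
    # all of width c_b
    return 4 * c_b


def elan2_concat(c_a: int, c_b: int) -> int:
    # head Concat [-1, -2, -3, -4, -5, -6]: the two 1x1 branches (width c_a)
    # plus all four 3x3 outputs (width c_b)
    return 2 * c_a + 4 * c_b


# (first layer, block input channels, c_a, c_b, final 1x1 output), read off yolov7.yaml
head_elans = [
    (56, 512, 256, 128, 256),
    (68, 256, 128, 64, 128),
    (81, 512, 256, 128, 256),
    (94, 1024, 512, 256, 512),
]

for first, c_in, c_a, c_b, c_out in head_elans:
    print(f"ELAN-2 from layer {first}: in={c_in}, concat={elan2_concat(c_a, c_b)}, out={c_out}")

print("ELAN-1 at layer 10 (c_b=64): concat =", elan1_concat(64))
```

The concat widths (1024, 512, 1024, 2048) match the first arguments printed for layers 63, 75, 88 and 101. In every head block the final 1×1 conv outputs half of the block's input channels.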


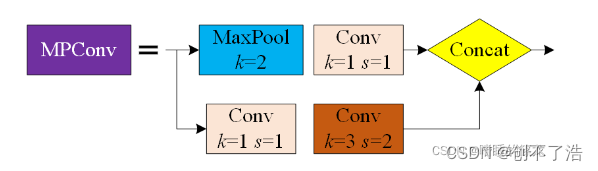

MP1&2

MP1

In `[-1, 1, Conv, [128, 3, 2]]`, 128 is the number of output channels (3×3 kernel, stride 2).

```yaml
#[-1, 1, Conv, [256, 1, 1]],  # 11 160*160*256
# MPConv-1 (backbone): downsample, channel count unchanged
[-1, 1, MP, []],
[-1, 1, Conv, [128, 1, 1]],
[-3, 1, Conv, [128, 1, 1]],
[-1, 1, Conv, [128, 3, 2]],
[[-1, -3], 1, Concat, [1]],  # 16-P3/8 80*80*256
```
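Both MP-1 branches must end at the same spatial size, or the Concat would fail. The following sketch traces both branches for layer 11's 160×160×256 output. It assumes that `MP` is a 2×2 max pool with stride 2 and that convs use padding k // 2. `pool_out` and `conv_out` are hypothetical helpers.

```python
def pool_out(size: int, k: int = 2, s: int = 2) -> int:
    # max pool without padding: floor((size - k) / s) + 1
    return (size - k) // s + 1


def conv_out(size: int, k: int, s: int) -> int:
    # conv with 'same'-style padding p = k // 2
    return (size + 2 * (k // 2) - k) // s + 1


h, c = 160, 256  # input: layer 11, 160*160*256

# branch 1: MP -> Conv[128, 1, 1]
b1 = (conv_out(pool_out(h), 1, 1), 128)
# branch 2: Conv[128, 1, 1] -> Conv[128, 3, 2]
b2 = (conv_out(conv_out(h, 1, 1), 3, 2), 128)

assert b1[0] == b2[0]  # spatial sizes must match for the Concat
out = (b1[0], b1[1] + b2[1])
print(f"MP-1: {h}*{h}*{c} -> {out[0]}*{out[0]}*{out[1]}")
```

Each branch halves the channels (256 → 128), and the Concat restores 256. So MP-1 only halves the spatial size.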


MP2

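Used in the head: it halves the spatial size and doubles the channel count. The Concat then also appends a routed layer (63 or 51).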

```yaml
# [-1, 1, Conv, [256, 1, 1]],  # 88 40*40*256
# MPConv Channel × 2
[-1, 1, MP, []],
[-1, 1, Conv, [256, 1, 1]],
[-3, 1, Conv, [256, 1, 1]],
[-1, 1, Conv, [256, 3, 2]],
[[-1, -3, 51], 1, Concat, [1]],  # 20*20*1024
```
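In the head, both MP-2 branches keep the input width. The two branches together therefore double it, and the routed layer is added on top. The following sketch does this arithmetic for the two MP-2 blocks in yolov7.yaml. `mp2_shape` is a hypothetical helper, and the layer numbers and channels are read off the yaml.

```python
def mp2_shape(size: int, c_in: int, c_route: int):
    """Return (output size, channels after MP-2, channels after concat with the routed layer)."""
    half = size // 2      # both branches downsample by 2 (max pool / stride-2 conv)
    c_mp = c_in + c_in    # MP branch keeps c_in, conv branch outputs c_in
    return half, c_mp, c_mp + c_route


# (input layer, size, channels, routed layer, routed channels), read off yolov7.yaml
for src, size, c_in, route, c_route in [(75, 80, 128, 63, 256), (88, 40, 256, 51, 512)]:
    half, c_mp, c_cat = mp2_shape(size, c_in, c_route)
    print(f"layer {src} {size}*{size}*{c_in} -> {half}*{half}*{c_mp}, "
          f"+ layer {route} -> {half}*{half}*{c_cat}")
```

The routed layers already have the downsampled size: layer 63 is 40×40 and layer 51 is 20×20. That is why they can be concatenated directly. The results (512 and 1024 channels) match the first arguments printed for layers 81 and 94.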


SPPCSPC

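Similar to SPPF in YOLOv5. The difference is that SPPCSPC applies 5×5, 9×9 and 13×13 max pooling in parallel, whereas SPPF chains a single 5×5 pool three times. The pooling also sits inside a CSP structure: a separate 1×1 branch is concatenated before the final 1×1 conv.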

```python
import torch
import torch.nn as nn

# Conv is the CBS block (Conv2d + BN + SiLU) from the CBS module section


class SPPCSPC(nn.Module):
    # CSP https://github.com/WongKinYiu/CrossStagePartialNetworks
    def __init__(self, c1, c2, n=1, shortcut=False, g=1, e=0.5, k=(5, 9, 13)):
        super(SPPCSPC, self).__init__()
        c_ = int(2 * c2 * e)  # hidden channels (512 for layer 51: c2=512, e=0.5)
        self.cv1 = Conv(c1, c_, 1, 1)
        self.cv2 = Conv(c1, c_, 1, 1)
        self.cv3 = Conv(c_, c_, 3, 1)
        self.cv4 = Conv(c_, c_, 1, 1)
        self.m = nn.ModuleList([nn.MaxPool2d(kernel_size=x, stride=1, padding=x // 2) for x in k])
        self.cv5 = Conv(4 * c_, c_, 1, 1)
        self.cv6 = Conv(c_, c_, 3, 1)
        self.cv7 = Conv(2 * c_, c2, 1, 1)

    def forward(self, x):
        x1 = self.cv4(self.cv3(self.cv1(x)))  # main branch before pooling
        # x1 concatenated with its 5x5, 9x9 and 13x13 max pools: 4 * c_ channels
        y1 = self.cv6(self.cv5(torch.cat([x1] + [m(x1) for m in self.m], 1)))
        y2 = self.cv2(x)  # CSP shortcut branch: a single 1x1 conv
        return self.cv7(torch.cat((y1, y2), dim=1))
```
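SPPF reaches the same 5/9/13 receptive fields because stride-1 max pools with `padding = k // 2` compose. Two 5-wide pools equal one 9-wide pool, and three equal one 13-wide pool. The following is a 1D sketch in plain Python. It pads with -inf, as `nn.MaxPool2d`'s implicit padding does. `max_pool_1d` is a hypothetical helper. The 2D case behaves the same way, because a square max pool is separable along rows and columns.

```python
NEG_INF = float("-inf")


def max_pool_1d(xs, k):
    """Stride-1 max pool with padding k // 2 on each side (padded with -inf)."""
    p = k // 2
    padded = [NEG_INF] * p + list(xs) + [NEG_INF] * p
    return [max(padded[i:i + k]) for i in range(len(xs))]


xs = [3, 1, 4, 1, 5, 9, 2, 6, 5, 3, 5, 8, 9, 7, 9, 3, 2, 3]

# SPP-style, as in SPPCSPC: parallel pools with k = 5, 9, 13
parallel = [max_pool_1d(xs, k) for k in (5, 9, 13)]

# SPPF-style: one k = 5 pool applied repeatedly
p5 = max_pool_1d(xs, 5)
p9 = max_pool_1d(p5, 5)
p13 = max_pool_1d(p9, 5)

assert parallel == [p5, p9, p13]
assert all(len(p) == len(xs) for p in parallel)  # stride 1 + padding keeps the size
```

Because the sizes are preserved, the pooled maps can be concatenated with `x1` along the channel dimension. This is what `cv5` consumes (4 * c_ channels).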


References

[YOLOv7_0.1] Network Structure and Source Code Analysis

https://blog.csdn.net/weixin_43799388/article/details/126164288

YOLOV7 Detailed Explanation (1): Network Architecture

https://blog.csdn.net/qq128252/article/details/126673493

Smart Object Detection 61: Building a YoloV7 Object Detection Platform with PyTorch

https://blog.csdn.net/weixin_44791964/article/details/125827160