热门标签

热门文章

- 1vue3 +ts 如何安装封装axios_vue3 ts axios

- 2起步篇-- 超强的Chatgpt桌面版来了!可以保存聊天记录以及内置128个聊天场景!

- 3AIGC重塑教育:AI大模型驱动的教育变革与实践_aigc驱动教育创新将涉及哪些方面的因素和核心要素?

- 4列举一些分析次级代谢物基因簇相关的数据库

- 5Ambari-2.7.6和HDP-3.3.1安装_hidataplus

- 6hadoop集群搭建(二)之集群配置_apache hadoop : core-site.xml 在那?

- 7算法-分治法-杭电oj1007_杭电oj分治算法

- 8多线程共同使用一个锁引发的死锁问题_两个线程都在等同一把锁

- 9Python中英文小说词频统计与情感分析_英文小说词频python

- 10基于微信开发的开源微信商城小程序源码下载_微信小程序开源代码下载

当前位置: article > 正文

【Python】科研代码学习:十四 wandb (可视化AI工具)_python wandb

作者:不正经 | 2024-06-11 20:18:08

赞

踩

python wandb

wandb 介绍

- 【wandb官网】

wandb是Weights & Biases的缩写(w and b) - 核心作用:

- 可视化重要参数

- 云端存储

- 提供各种工具

- 可以和其他工具配合使用,比如下面的

pytorch, HF transformers, tensorflow, keras等等

- 可以在里面使用

matplotlib - 貌似是

tensorboard的上位替代

注册账号

- 首先我们需要去官网注册账号,貌似不能使用vpn

注册号后,按照教程创建一个团队,然后来到这个界面

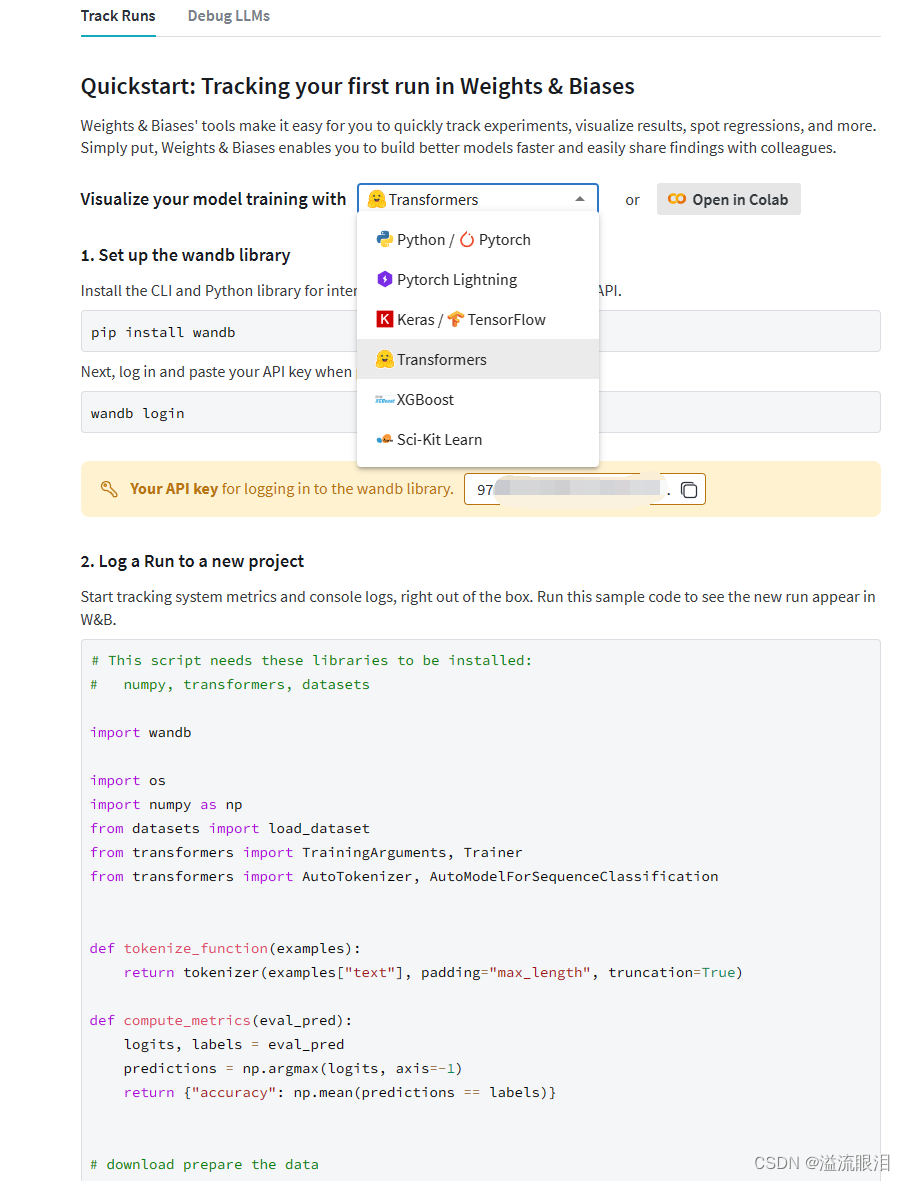

可以按照这个Quickstart的样例走一下。选择Track Runs,接下来可以选择使用哪个工具训练的模型

然后需要pip install wandb导包,以及wandb login登录

使用 HF Trainer + wandb 训练

- 我们调用官方给的样例

我们发现其实新添了这几个内容:

WANDB_PROJECT环境变量:项目名

WANDB_LOG_MODEL环境变量:是否保存中继到wandb

WANDB_WATCH环境变量 - 在

TrainingArguments中,设置了report_to="wandb"

最后调用wandb.finish(),整体变化不大

# This script needs these libraries to be installed: # numpy, transformers, datasets import wandb import os import numpy as np from datasets import load_dataset from transformers import TrainingArguments, Trainer from transformers import AutoTokenizer, AutoModelForSequenceClassification # 设置GPU编号 os.environ["CUDA_DEVICE_ORDER"] = "PCI_BUS_ID" os.environ["CUDA_VISIBLE_DEVICES"] = "1,2" def tokenize_function(examples): return tokenizer(examples["text"], padding="max_length", truncation=True) def compute_metrics(eval_pred): logits, labels = eval_pred predictions = np.argmax(logits, axis=-1) return {"accuracy": np.mean(predictions == labels)} print("Loading Dataset") # download prepare the data dataset = load_dataset("yelp_review_full") print("Loading Tokenizer") tokenizer = AutoTokenizer.from_pretrained("distilbert-base-uncased") small_train_dataset = dataset["train"].shuffle(seed=42).select(range(1000)) small_eval_dataset = dataset["test"].shuffle(seed=42).select(range(300)) small_train_dataset = small_train_dataset.map(tokenize_function, batched=True) small_eval_dataset = small_train_dataset.map(tokenize_function, batched=True) print("Loading Model") # download the model model = AutoModelForSequenceClassification.from_pretrained("distilbert-base-uncased", num_labels=5) # set the wandb project where this run will be logged os.environ["WANDB_PROJECT"]="my-awesome-project" # save your trained model checkpoint to wandb os.environ["WANDB_LOG_MODEL"]="true" # turn off watch to log faster os.environ["WANDB_WATCH"]="false" # pass "wandb" to the 'report_to' parameter to turn on wandb logging training_args = TrainingArguments( output_dir='models', report_to="wandb", logging_steps=5, per_device_train_batch_size=32, per_device_eval_batch_size=32, evaluation_strategy="steps", eval_steps=20, max_steps = 100, save_steps = 100 ) print("Loading Trainer") # define the trainer and start training trainer = Trainer( model=model, args=training_args, train_dataset=small_train_dataset, eval_dataset=small_eval_dataset, compute_metrics=compute_metrics, ) print("Training") trainer.train() # [optional] finish the wandb run, necessary in notebooks wandb.finish()

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 38

- 39

- 40

- 41

- 42

- 43

- 44

- 45

- 46

- 47

- 48

- 49

- 50

- 51

- 52

- 53

- 54

- 55

- 56

- 57

- 58

- 59

- 60

- 61

- 62

- 63

- 64

- 65

- 66

- 67

- 68

- 69

- 70

- 71

- 72

- 73

- 74

- 75

- 76

- 77

- 78

- 79

- 80

- 81

- 在 wandb 网站中

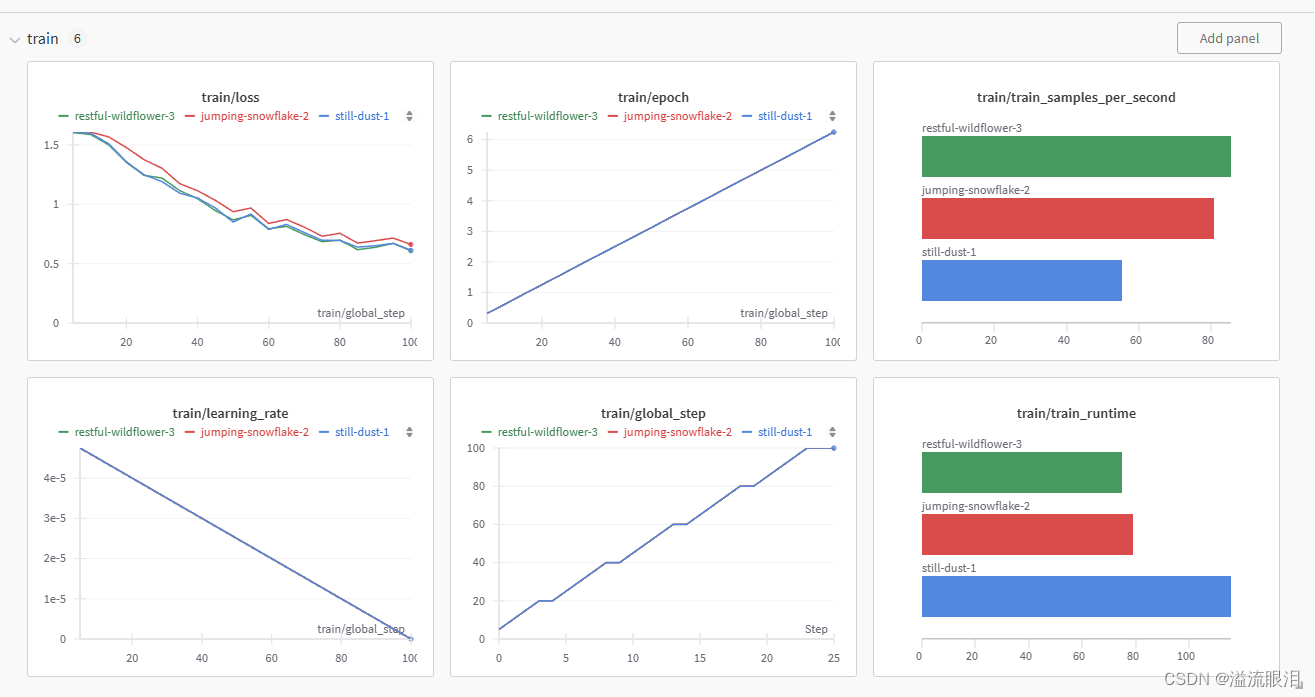

我们可以打开该 project。每一次运行相当于一次run,我这里跑了三次所以就有三条线。

这里主要是看eval验证集和train训练集的一些参数。

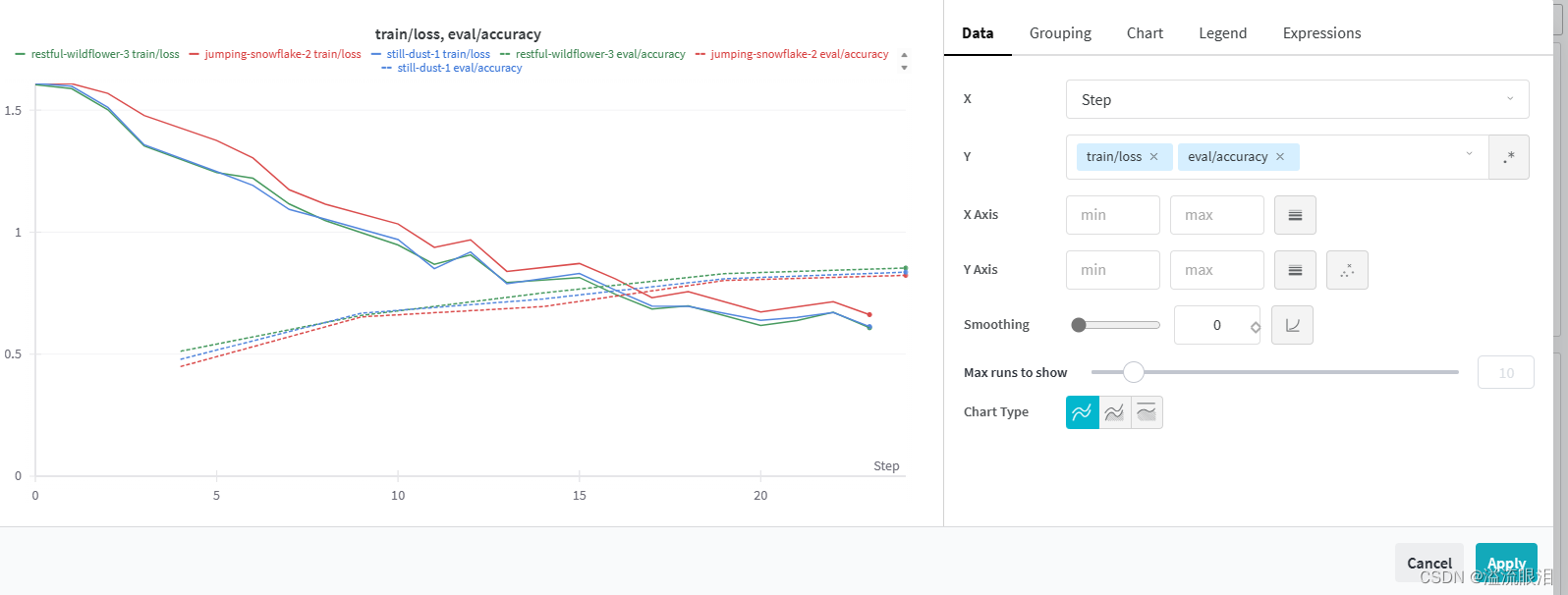

- 我们可以删掉不关心的面板,或者增添一个想看的面板

但如果两个参数的值域变化比较大的话,在一个图里面比较难看清,所以比较相关的参数才建议放在一个图里。

低级 API

- 这上面是封装比较高级的 API,一般我们也都配合

transformers库去用

如果想用比较原生的 API,一般用法如下:

首先调用wandb.init()方法

然后使用wandb.log(dict)输出你要可视化的参数即可。

# train.py import wandb import random # for demo script wandb.login() epochs = 10 lr = 0.01 run = wandb.init( # Set the project where this run will be logged project="my-awesome-project", # Track hyperparameters and run metadata config={ "learning_rate": lr, "epochs": epochs, }, ) offset = random.random() / 5 print(f"lr: {lr}") # simulating a training run for epoch in range(2, epochs): acc = 1 - 2**-epoch - random.random() / epoch - offset loss = 2**-epoch + random.random() / epoch + offset print(f"epoch={epoch}, accuracy={acc}, loss={loss}") wandb.log({"accuracy": acc, "loss": loss}) # run.log_code()

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

声明:本文内容由网友自发贡献,不代表【wpsshop博客】立场,版权归原作者所有,本站不承担相应法律责任。如您发现有侵权的内容,请联系我们。转载请注明出处:https://www.wpsshop.cn/w/不正经/article/detail/704651

推荐阅读

相关标签