- 1HarmonyOS/OpenHarmony应用开发-DevEco Studio 在MAC上启动报错

- 2图像生成式大模型领域研究_图像生成模型都很大

- 3藏头诗——_输出藏头诗 char*

- 4Matlab 归一化(normalization)/标准化 (standarization)_matlab中的standardlization

- 5ImageNet一作、李飞飞高徒邓嘉获最佳论文奖,ECCV 2020奖项全公布

- 6解决springboot项目请求出现非法字符问题 java.lang.IllegalArgumentException:Invalid character found in the request t_javaexception: java.lang.illegalargumentexception:

- 7使用ChatGPT面向岗位制作简历、扮演面试官_可以角色扮演面试官的cheatgtp

- 8HDFS集群搭建_任务一:安装部署一个具有3个节点的hdfs集群 任务二:编写一个java程序,向hdfs中上

- 9vue3 npm i 一直卡到不动_运行vue3 npm i 卡住了

- 10关于滑块验证码的问题_滑块验证码上机报告

【图像分类】实战——使用EfficientNetV2实现图像分类(Pytorch)_基于efficientnetv2的图像分类

赞

踩

目录

摘要

这几天学习了EfficientNetV2,对论文做了翻译,并复现了论文的代码。

论文翻译:【图像分类】 EfficientNetV2:更快、更小、更强——论文翻译_AI浩-CSDN博客

代码复现:【图像分类】用通俗易懂代码的复现EfficientNetV2,入门的绝佳选择(pytorch)_AI浩-CSDN博客

对EfficientNetV2想要了解的可以查看上面的文章,这篇文章着重介绍如何使用EfficientNetV2实现图像分类。Loss函数采用CrossEntropyLoss,可以通过更改最后一层的全连接方便实现二分类和多分类。数据集采用经典的猫狗大战数据集,做二分类的实现。

数据集地址:链接:https://pan.baidu.com/s/1kqhVPOqV5vklYYIFVAzAAA

提取码:3ch6

新建项目

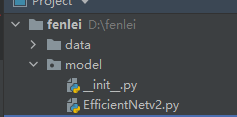

新建一个图像分类的项目,在项目的跟目录新建文件夹model,用于存放EfficientNetV2的模型代码,新建EfficientNetV2.py,将【图像分类】用通俗易懂代码的复现EfficientNetV2,入门的绝佳选择(pytorch)_AI浩-CSDN博客 复现的代码复制到里面,然后在model文件夹新建__init__.py空文件,model的目录结构如下:

在项目的根目录新建train.py,然后在里面写训练代码。

导入所需要的库

- import torch.optim as optim

- import torch

- import torch.nn as nn

- import torch.nn.parallel

- import torch.optim

- import torch.utils.data

- import torch.utils.data.distributed

- import torchvision.transforms as transforms

- import torchvision.datasets as datasets

- from torch.autograd import Variable

- from model.EfficientNetv2 import efficientnetv2_s

设置全局参数

设置BatchSize、学习率和epochs,判断是否有cuda环境,如果没有设置为cpu。

- # 设置全局参数

- modellr = 1e-4

- BATCH_SIZE = 64

- EPOCHS = 20

- DEVICE = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

图像预处理

在做图像与处理时,train数据集的transform和验证集的transform分开做,train的图像处理出了resize和归一化之外,还可以设置图像的增强,比如旋转、随机擦除等一系列的操作,验证集则不需要做图像增强,另外不要盲目的做增强,不合理的增强手段很可能会带来负作用,甚至出现Loss不收敛的情况。

- # 数据预处理

-

- transform = transforms.Compose([

- transforms.Resize((224, 224)),

- transforms.ToTensor(),

- transforms.Normalize([0.5, 0.5, 0.5], [0.5, 0.5, 0.5])

-

- ])

- transform_test = transforms.Compose([

- transforms.Resize((224, 224)),

- transforms.ToTensor(),

- transforms.Normalize([0.5, 0.5, 0.5], [0.5, 0.5, 0.5])

- ])

读取数据

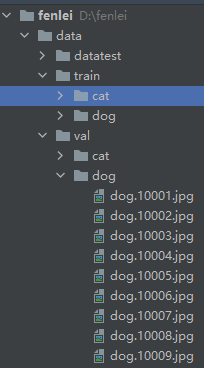

使用Pytorch的默认方式读取数据。数据的目录如下图:

训练集,取了猫狗大战数据集中,猫狗图像各一万张,剩余的放到验证集中。

- # 读取数据

- dataset_train = datasets.ImageFolder('data/train', transform)

- print(dataset_train.imgs)

- # 对应文件夹的label

- print(dataset_train.class_to_idx)

- dataset_test = datasets.ImageFolder('data/val', transform_test)

- # 对应文件夹的label

- print(dataset_test.class_to_idx)

-

- # 导入数据

- train_loader = torch.utils.data.DataLoader(dataset_train, batch_size=BATCH_SIZE, shuffle=True)

- test_loader = torch.utils.data.DataLoader(dataset_test, batch_size=BATCH_SIZE, shuffle=False)

设置模型

使用CrossEntropyLoss作为loss,模型采用efficientnetv2_s,由于没有Pytorch的预训练模型,我们只能从头开始训练。更改最后一层的全连接,将类别设置为2,然后将模型放到DEVICE。优化器选用Adam。

- # 实例化模型并且移动到GPU

- criterion = nn.CrossEntropyLoss()

- model = efficientnetv2_s()

- num_ftrs = model.classifier.in_features

- model.classifier = nn.Linear(num_ftrs, 2)

- model.to(DEVICE)

- # 选择简单暴力的Adam优化器,学习率调低

- optimizer = optim.Adam(model.parameters(), lr=modellr)

-

-

- def adjust_learning_rate(optimizer, epoch):

- """Sets the learning rate to the initial LR decayed by 10 every 30 epochs"""

- modellrnew = modellr * (0.1 ** (epoch // 50))

- print("lr:", modellrnew)

- for param_group in optimizer.param_groups:

- param_group['lr'] = modellrnew

-

-

设置训练和验证

- # 定义训练过程

-

- def train(model, device, train_loader, optimizer, epoch):

- model.train()

- sum_loss = 0

- total_num = len(train_loader.dataset)

- print(total_num, len(train_loader))

- for batch_idx, (data, target) in enumerate(train_loader):

- data, target = Variable(data).to(device), Variable(target).to(device)

- output = model(data)

- loss = criterion(output, target)

- optimizer.zero_grad()

- loss.backward()

- optimizer.step()

- print_loss = loss.data.item()

- sum_loss += print_loss

- if (batch_idx + 1) % 50 == 0:

- print('Train Epoch: {} [{}/{} ({:.0f}%)]\tLoss: {:.6f}'.format(

- epoch, (batch_idx + 1) * len(data), len(train_loader.dataset),

- 100. * (batch_idx + 1) / len(train_loader), loss.item()))

- ave_loss = sum_loss / len(train_loader)

- print('epoch:{},loss:{}'.format(epoch, ave_loss))

- #验证过程

- def val(model, device, test_loader):

- model.eval()

- test_loss = 0

- correct = 0

- total_num = len(test_loader.dataset)

- print(total_num, len(test_loader))

- with torch.no_grad():

- for data, target in test_loader:

- data, target = Variable(data).to(device), Variable(target).to(device)

- output = model(data)

- loss = criterion(output, target)

- _, pred = torch.max(output.data, 1)

- correct += torch.sum(pred == target)

- print_loss = loss.data.item()

- test_loss += print_loss

- correct = correct.data.item()

- acc = correct / total_num

- avgloss = test_loss / len(test_loader)

- print('\nVal set: Average loss: {:.4f}, Accuracy: {}/{} ({:.0f}%)\n'.format(

- avgloss, correct, len(test_loader.dataset), 100 * acc))

-

-

- # 训练

-

- for epoch in range(1, EPOCHS + 1):

- adjust_learning_rate(optimizer, epoch)

- train(model, DEVICE, train_loader, optimizer, epoch)

- val(model, DEVICE, test_loader)

- torch.save(model, 'model.pth')

完成上面的代码后就可以开始训练,点击run开始训练,如下图:

验证

测试集存放的目录如下图:

第一步 定义类别,这个类别的顺序和训练时的类别顺序对应,一定不要改变顺序!!!!我们在训练时,cat类别是0,dog类别是1,所以我定义classes为(cat,dog)。

第二步 定义transforms,transforms和验证集的transforms一样即可,别做数据增强。

第三步 加载model,并将模型放在DEVICE里,

第四步 读取图片并预测图片的类别,在这里注意,读取图片用PIL库的Image。不要用cv2,transforms不支持。

- import torch.utils.data.distributed

- import torchvision.transforms as transforms

-

- from torch.autograd import Variable

- import os

- from PIL import Image

-

- classes = ('cat', 'dog')

-

- transform_test = transforms.Compose([

- transforms.Resize((224, 224)),

- transforms.ToTensor(),

- transforms.Normalize([0.5, 0.5, 0.5], [0.5, 0.5, 0.5])

- ])

-

- DEVICE = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

- model = torch.load("model.pth")

- model.eval()

- model.to(DEVICE)

- path='data/test/'

- testList=os.listdir(path)

- for file in testList:

- img=Image.open(path+file)

- img=transform_test(img)

- img.unsqueeze_(0)

- img = Variable(img).to(DEVICE)

- out=model(img)

- # Predict

- _, pred = torch.max(out.data, 1)

- print('Image Name:{},predict:{}'.format(file,classes[pred.data.item()]))

-

运行结果:

其实在读取数据,也可以巧妙的用datasets.ImageFolder,下面我们就用datasets.ImageFolder实现对图片的预测。改一下test数据集的路径,在test文件夹外面再加一层文件件,取名为dataset,如下图所示:

然后修改读取图片的方式。代码如下:

- import torch.utils.data.distributed

- import torchvision.transforms as transforms

- import torchvision.datasets as datasets

- from torch.autograd import Variable

-

- classes = ('cat', 'dog')

- transform_test = transforms.Compose([

- transforms.Resize((224, 224)),

- transforms.ToTensor(),

- transforms.Normalize([0.5, 0.5, 0.5], [0.5, 0.5, 0.5])

- ])

-

- DEVICE = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

- model = torch.load("model.pth")

- model.eval()

- model.to(DEVICE)

-

- dataset_test = datasets.ImageFolder('data/datatest', transform_test)

- print(len(dataset_test))

- # 对应文件夹的label

-

- for index in range(len(dataset_test)):

- item = dataset_test[index]

- img, label = item

- img.unsqueeze_(0)

- data = Variable(img).to(DEVICE)

- output = model(data)

- _, pred = torch.max(output.data, 1)

- print('Image Name:{},predict:{}'.format(dataset_test.imgs[index][0], classes[pred.data.item()]))

- index += 1

-

完整代码:

train.py

- import torch.optim as optim

- import torch

- import torch.nn as nn

- import torch.nn.parallel

- import torch.optim

- import torch.utils.data

- import torch.utils.data.distributed

- import torchvision.transforms as transforms

- import torchvision.datasets as datasets

- from torch.autograd import Variable

- from model.EfficientNetv2 import efficientnetv2_s

- # 设置全局参数

- modellr = 1e-4

- BATCH_SIZE = 32

- EPOCHS = 50

- DEVICE = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

-

- # 数据预处理

-

- transform = transforms.Compose([

- transforms.Resize((224, 224)),

- transforms.ToTensor(),

- transforms.Normalize([0.5, 0.5, 0.5], [0.5, 0.5, 0.5])

-

- ])

- transform_test = transforms.Compose([

- transforms.Resize((224, 224)),

- transforms.ToTensor(),

- transforms.Normalize([0.5, 0.5, 0.5], [0.5, 0.5, 0.5])

- ])

- # 读取数据

- dataset_train = datasets.ImageFolder('data/train', transform)

- print(dataset_train.imgs)

- # 对应文件夹的label

- print(dataset_train.class_to_idx)

- dataset_test = datasets.ImageFolder('data/val', transform_test)

- # 对应文件夹的label

- print(dataset_test.class_to_idx)

-

- # 导入数据

- train_loader = torch.utils.data.DataLoader(dataset_train, batch_size=BATCH_SIZE, shuffle=True)

- test_loader = torch.utils.data.DataLoader(dataset_test, batch_size=BATCH_SIZE, shuffle=False)

-

-

- # 实例化模型并且移动到GPU

- criterion = nn.CrossEntropyLoss()

- model = efficientnetv2_s()

- num_ftrs = model.classifier.in_features

- model.classifier = nn.Linear(num_ftrs, 2)

- model.to(DEVICE)

- # 选择简单暴力的Adam优化器,学习率调低

- optimizer = optim.Adam(model.parameters(), lr=modellr)

-

-

- def adjust_learning_rate(optimizer, epoch):

- """Sets the learning rate to the initial LR decayed by 10 every 30 epochs"""

- modellrnew = modellr * (0.1 ** (epoch // 50))

- print("lr:", modellrnew)

- for param_group in optimizer.param_groups:

- param_group['lr'] = modellrnew

-

-

- # 定义训练过程

-

- def train(model, device, train_loader, optimizer, epoch):

- model.train()

- sum_loss = 0

- total_num = len(train_loader.dataset)

- print(total_num, len(train_loader))

- for batch_idx, (data, target) in enumerate(train_loader):

- data, target = Variable(data).to(device), Variable(target).to(device)

- output = model(data)

- loss = criterion(output, target)

- optimizer.zero_grad()

- loss.backward()

- optimizer.step()

- print_loss = loss.data.item()

- sum_loss += print_loss

- if (batch_idx + 1) % 50 == 0:

- print('Train Epoch: {} [{}/{} ({:.0f}%)]\tLoss: {:.6f}'.format(

- epoch, (batch_idx + 1) * len(data), len(train_loader.dataset),

- 100. * (batch_idx + 1) / len(train_loader), loss.item()))

- ave_loss = sum_loss / len(train_loader)

- print('epoch:{},loss:{}'.format(epoch, ave_loss))

- #验证过程

- def val(model, device, test_loader):

- model.eval()

- test_loss = 0

- correct = 0

- total_num = len(test_loader.dataset)

- print(total_num, len(test_loader))

- with torch.no_grad():

- for data, target in test_loader:

- data, target = Variable(data).to(device), Variable(target).to(device)

- output = model(data)

- loss = criterion(output, target)

- _, pred = torch.max(output.data, 1)

- correct += torch.sum(pred == target)

- print_loss = loss.data.item()

- test_loss += print_loss

- correct = correct.data.item()

- acc = correct / total_num

- avgloss = test_loss / len(test_loader)

- print('\nVal set: Average loss: {:.4f}, Accuracy: {}/{} ({:.0f}%)\n'.format(

- avgloss, correct, len(test_loader.dataset), 100 * acc))

-

-

- # 训练

-

- for epoch in range(1, EPOCHS + 1):

- adjust_learning_rate(optimizer, epoch)

- train(model, DEVICE, train_loader, optimizer, epoch)

- val(model, DEVICE, test_loader)

- torch.save(model, 'model.pth')

test1.py

-

- import torch.utils.data.distributed

- import torchvision.transforms as transforms

-

- from torch.autograd import Variable

- import os

- from PIL import Image

-

- classes = ('cat', 'dog')

-

- transform_test = transforms.Compose([

- transforms.Resize((224, 224)),

- transforms.ToTensor(),

- transforms.Normalize([0.5, 0.5, 0.5], [0.5, 0.5, 0.5])

- ])

-

- DEVICE = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

- model = torch.load("model.pth")

- model.eval()

- model.to(DEVICE)

- path='data/test/'

- testList=os.listdir(path)

- for file in testList:

- img=Image.open(path+file)

- img=transform_test(img)

- img.unsqueeze_(0)

- img = Variable(img).to(DEVICE)

- out=model(img)

- # Predict

- _, pred = torch.max(out.data, 1)

- print('Image Name:{},predict:{}'.format(file,classes[pred.data.item()]))

-

test2.py

- import torch.utils.data.distributed

- import torchvision.transforms as transforms

- import torchvision.datasets as datasets

- from torch.autograd import Variable

-

- classes = ('cat', 'dog')

- transform_test = transforms.Compose([

- transforms.Resize((224, 224)),

- transforms.ToTensor(),

- transforms.Normalize([0.5, 0.5, 0.5], [0.5, 0.5, 0.5])

- ])

-

- DEVICE = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

- model = torch.load("model.pth")

- model.eval()

- model.to(DEVICE)

-

- dataset_test = datasets.ImageFolder('data/datatest', transform_test)

- print(len(dataset_test))

- # 对应文件夹的label

-

- for index in range(len(dataset_test)):

- item = dataset_test[index]

- img, label = item

- img.unsqueeze_(0)

- data = Variable(img).to(DEVICE)

- output = model(data)

- _, pred = torch.max(output.data, 1)

- print('Image Name:{},predict:{}'.format(dataset_test.imgs[index][0], classes[pred.data.item()]))

- index += 1