- 1解决idea [08S01] 无法连接 sql server 数据库,报错:驱动程序无法通过使用安全套接字层(SSL)加密与 SQL Server 建立安全连接_连接不上数据库08s01

- 2是面试官放水,还是公司实在是太缺人?这都没挂,华为原来这么容易进...

- 3c语言绝对值——abs和fabs_c语言什么情况用fabs或者abs

- 4南京事业单位计算机真题,南京市事业单位考试真题及参考答案.doc

- 503-stable diffusion国风小姐姐_stable diffusion国风美女关键字

- 6软考证到底有多大个鸟用?_软考有什么用

- 7命名实体识别(NER):自动识别文本中的关键信息

- 8毕业设计:基于机器学习的时间序列预测系统_机器学习做时间序列预测的背景与意义

- 9mysql中information_MYSQL中information_schema简介

- 10使用Gitee_gitee用户名密码读取文件

Fastgpt配合chatglm+m3e或ollama+m3e搭建个人知识库_fastgpt ollama

赞

踩

概述:

人工智能大语言模型是近年来人工智能领域的一项重要技术,它的出现标志着自然语言处理领域的重大突破。这些模型利用深度学习和大规模数据训练,能够理解和生成人类语言,为各种应用场景提供了强大的文本处理能力。AI大语言模型的技术原理主要基于深度学习和自然语言处理技术,通过自监督学习和大规模文本数据的预训练来学习语言的表示。训练完成后,可以通过微调等方法,将模型应用于特定的任务和应用场景。

未来,AI大语言模型有望在更多领域发挥作用,包括自然语言理解、文本生成、对话系统、语言翻译等。它们可以用于自动摘要、文档生成、智能客服、智能问答等多种应用场景,为用户提供了更加智能和个性化的服务。

本文为学习大语言模型及FastGPT部署的学习笔记。通过直接部署**ChatGML3大语言模型**或**OLLAMA模型管理工具**配合FastGPT私有化搭建知识库。其中**one-api**、**fastgpt**是两种方法都需要部署的,其他的更建议使用ollama直接进行部署,切换模型方便快捷,易于管理。

硬件要求

以下配置仅作参考

**chatglm3-6b+m3e:**3060 12 ↑

**qwen:4b+m3e:**3060 12 ↑

**qwen:2b+m3e:**1660 6g↑

总结:模型量级越大所需显卡性能越高,过小的量级的大模型在低端cpu亦可运行,只是推理的精准度很差,更不能配合m3e向量模型进行推理,速度会非常慢。

本文档涉及到的资源

conda

https://www.anaconda.com/

Conda 是一个运行在 Windows、MacOS 和 Linux 上的开源包管理系统和环境管理系统。Conda 可以:

- 快速安装、运行和更新包及其依赖项

- 轻松地在本地计算机上创建、保存、加载和切换环境

它是为 Python 程序创建的,但它可以为任何语言打包和分发软件。

简单总结一下就是 Conda 很好、很强大,使用 Conda 会让你很省心。(人生苦短,我选 “Conda”!)

one-api

https://github.com/songquanpeng/one-api

All in one 的 OpenAI 接口 整合各种 API 访问方式 一键部署,开箱即用

chatglm3

https://github.com/THUDM/ChatGLM3

ChatGLM3 是智谱 AI 和清华大学 KEG 实验室联合发布的对话预训练模型。

m3e

https://modelscope.cn/models/Jerry0/m3e-base/summary

M3E 是 Moka Massive Mixed Embedding 的缩写

ollama

https://ollama.com/

大语言模型管理工具

fastgpt

https://github.com/labring/FastGPT

FastGPT 是一个基于 LLM 大语言模型的知识库问答系统,提供开箱即用的数据处理、模型调用等能力。同时可以通过 Flow 可视化进行工作流编排,从而实现复杂的问答场景!

魔搭社区

https://modelscope.cn/home

ModelScope旨在打造下一代开源的模型即服务共享平台,为泛AI开发者提供灵活、易用、低成本的一站式模型服务产品,让模型应用更简单!

CONDA的使用

- 安装好后更新工具

conda update -n base -c defaults conda

- 更新各种库

conda update --all

- 创建虚拟环境

conda create --name windows_chatglm3-6b python=3.11 -y

- 激活并进入虚拟环境

conda activate windows_chatglm3-6b

- 安装pytorch

cmd:nvidia-smi查看最高支持的 CUDA Version,我的是12.2

- 安装pytorch

PyTorch是一个开源的Python机器学习库,基于Torch,用于自然语言处理等应用程序。PyTorch既可以看作加入了GPU支持的numpy,同时也可以看成一个拥有自动求导功能的强大的深度神经网络 。

- 在 https://pytorch.org/get-started/locally/ 查询自己电脑需要执行的命令

- 在conda虚拟环境内执行以下命令安装pytorch

conda install pytorch torchvision torchaudio pytorch-cuda=12.1 -c pytorch -c nvidia

chatglm3环境搭建(非ollama模式)

模型及DEMO下载

一共需要下载两个模型chatglm3 及m3e

chatglm3下载

模型地址:https://modelscope.cn/models/ZhipuAI/chatglm3-6b/summary

下载方法:git

下载时间较久,耐心等待

git lfs install

git clone https://www.modelscope.cn/ZhipuAI/chatglm3-6b.git

- 1

- 2

m3e-base下载

git clone https://www.modelscope.cn/Jerry0/m3e-base.git

- 1

官方ChatGLM3 DEMO下载

地址:https://github.com/THUDM/ChatGLM3

下载方法:git

git clone https://github.com/THUDM/ChatGLM3

- 1

配置及运行

-

进入刚刚clone的 ChatGLM3/openai_api_demo文件夹

-

打开

api_server.py的python文件 -

代码拉倒最下方

-

覆盖if name == “main”:方法内的代码 如下:

其中一些地方需要修改,`tokenizer`及`model`的地址对应的是[chatglm3](#eB5m3)的下载地址,`embedding_model`的地址对应的是[m3e](#FHYRP)的下载地址,`port`可根据个人需要自行配置- 1

tokenizer = AutoTokenizer.from_pretrained("E:\Work\HaoQue\FastGPT\models\chatglm3-6B-32k-int4", trust_remote_code=True)

model = AutoModel.from_pretrained("E:\Work\HaoQue\FastGPT\models\chatglm3-6B-32k-int4", trust_remote_code=True, device_map="auto").eval()

# load Embedding

embedding_model = SentenceTransformer("E:\Work\HaoQue\FastGPT\models\m3e-base", trust_remote_code=True, device="cuda")

uvicorn.run(app, host='0.0.0.0', port=8000, workers=1)

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 回到ChatGLM3根目录 进入刚刚创建的

windows_chatglm3-6bconda 虚拟环境 - cmd运行

pip install -r requirements.txt安装依赖 - 耐心等待安装完成

- 完成后运行通过python运行

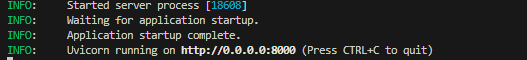

python openai_api_demo/api_server.py - 查看运行结果,以下为运行成功。

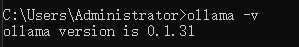

ollama环境搭建

ollama程序下载及模型安装安装

- 下载:https://ollama.com/download

- 安装直接下一步

- 安装完成后进入cmd 输入

ollama -v验证是否成功

通过ollama进行模型下载

- 模型列表:https://ollama.com/library

- 这里以qwen:1.8b为例

- cmd运行

ollama run qwen:1.8b - 耐心等待下载即可

- 下载完成模型会自动启动,无需其他操作

m3e环境搭建(ollama模式)

使用docker进行部署,docker安装在此不做介绍。

docker run -d --name m3e -p 6008:6008 --gpus all -e sk-key=123321 registry.cn-hangzhou.aliyuncs.com/fastgpt_docker/m3e-large-api

- 1

- 2

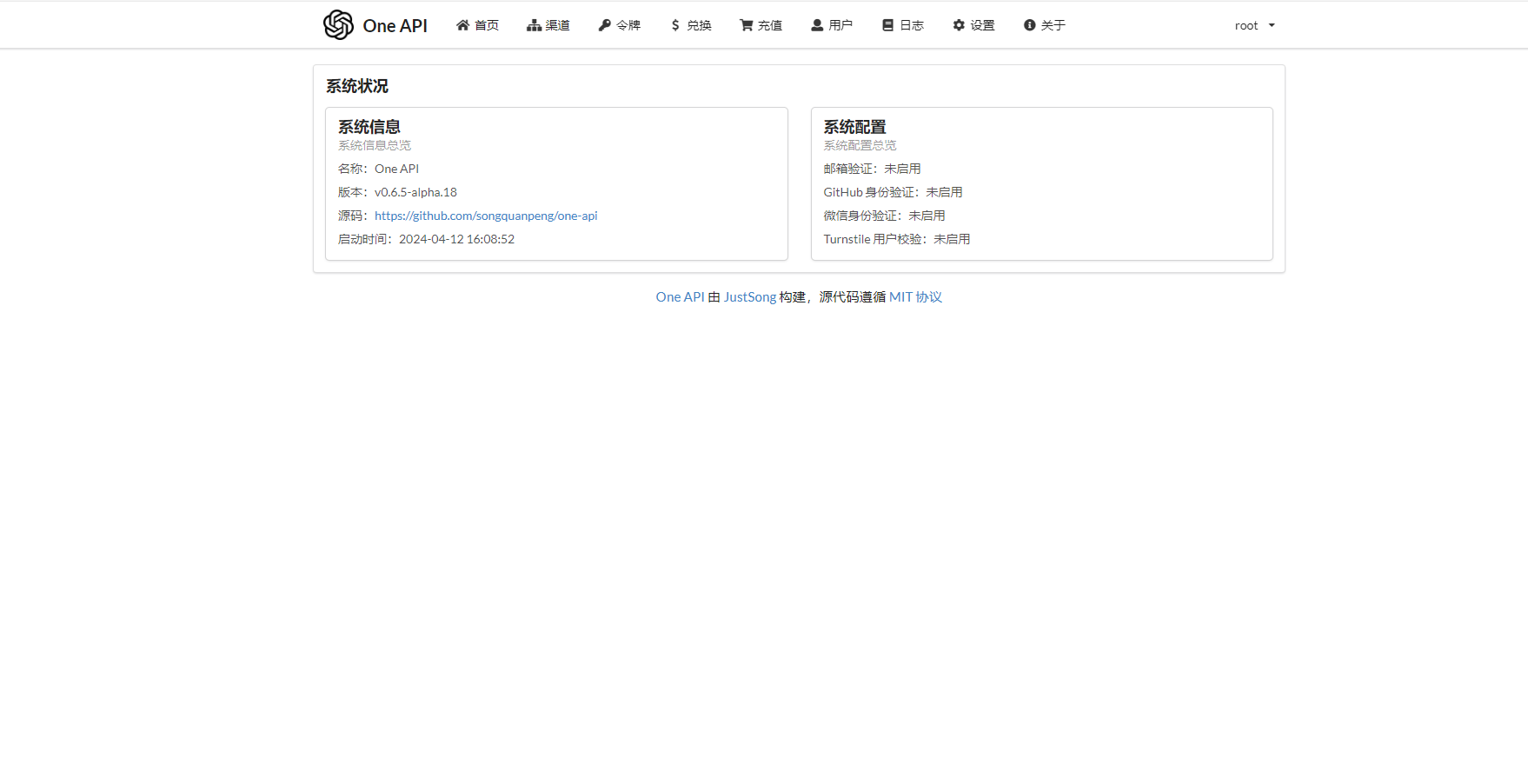

one-api环境部署及配置

- 使用docker进行部署,docker安装在此不做介绍。

docker run --name one-api -d --restart always -p 3000:3000 -e TZ=Asia/Shanghai -v /home/ubuntu/data/one-api:/data justsong/one-api

- 1

- 然后访问

[http://localhost:3000/](http://localhost:3001/)端口为docker run 时候-p的端口,

- 登陆 初始账号用户名为 root,密码为 123456。

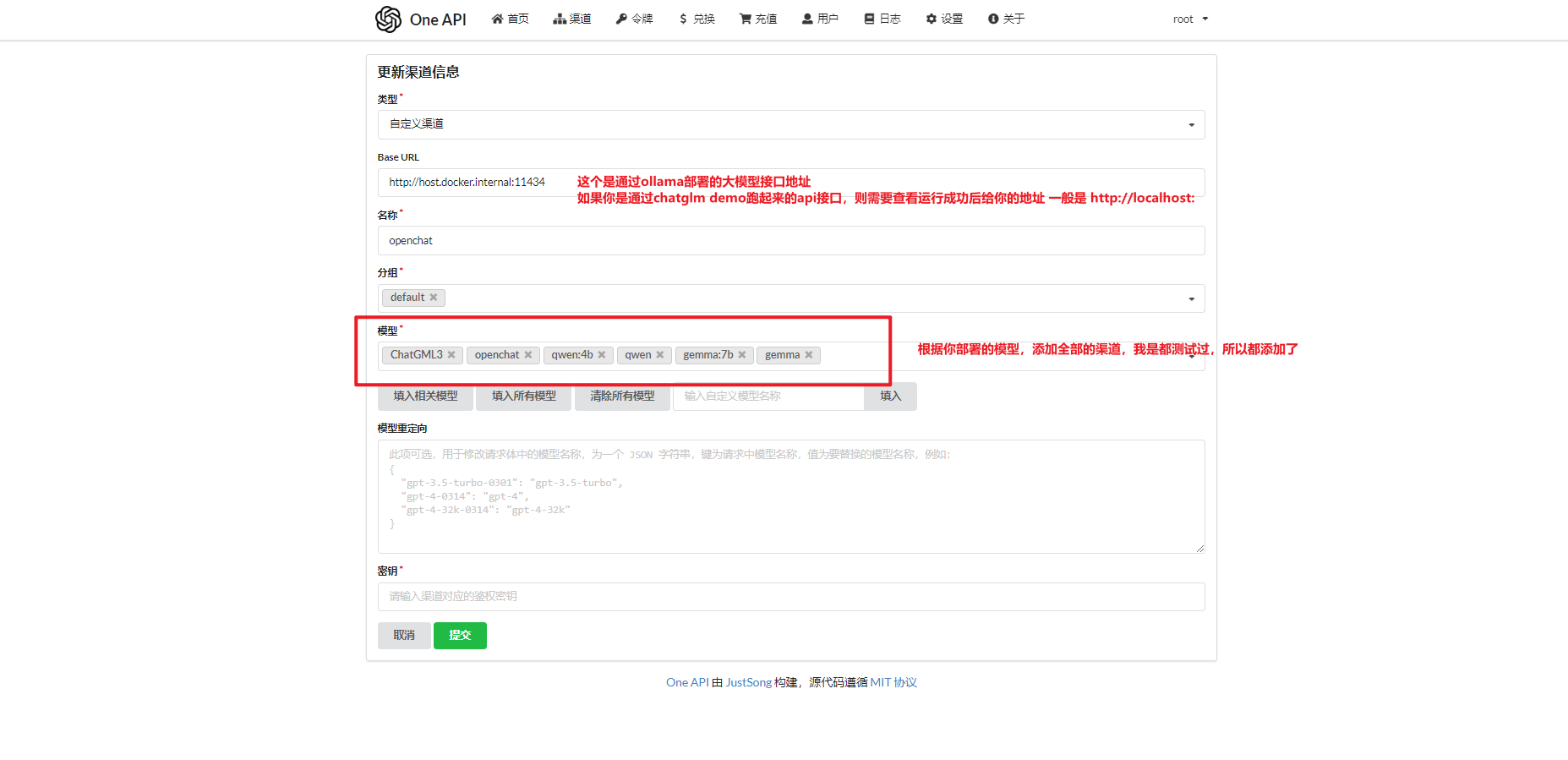

- 登陆后来到渠道页面添加渠道,此步骤添加的是大语言模型

- 如果你是通过ollama运行的大模型,则需要再次添加新渠道,本次添加m3e渠道

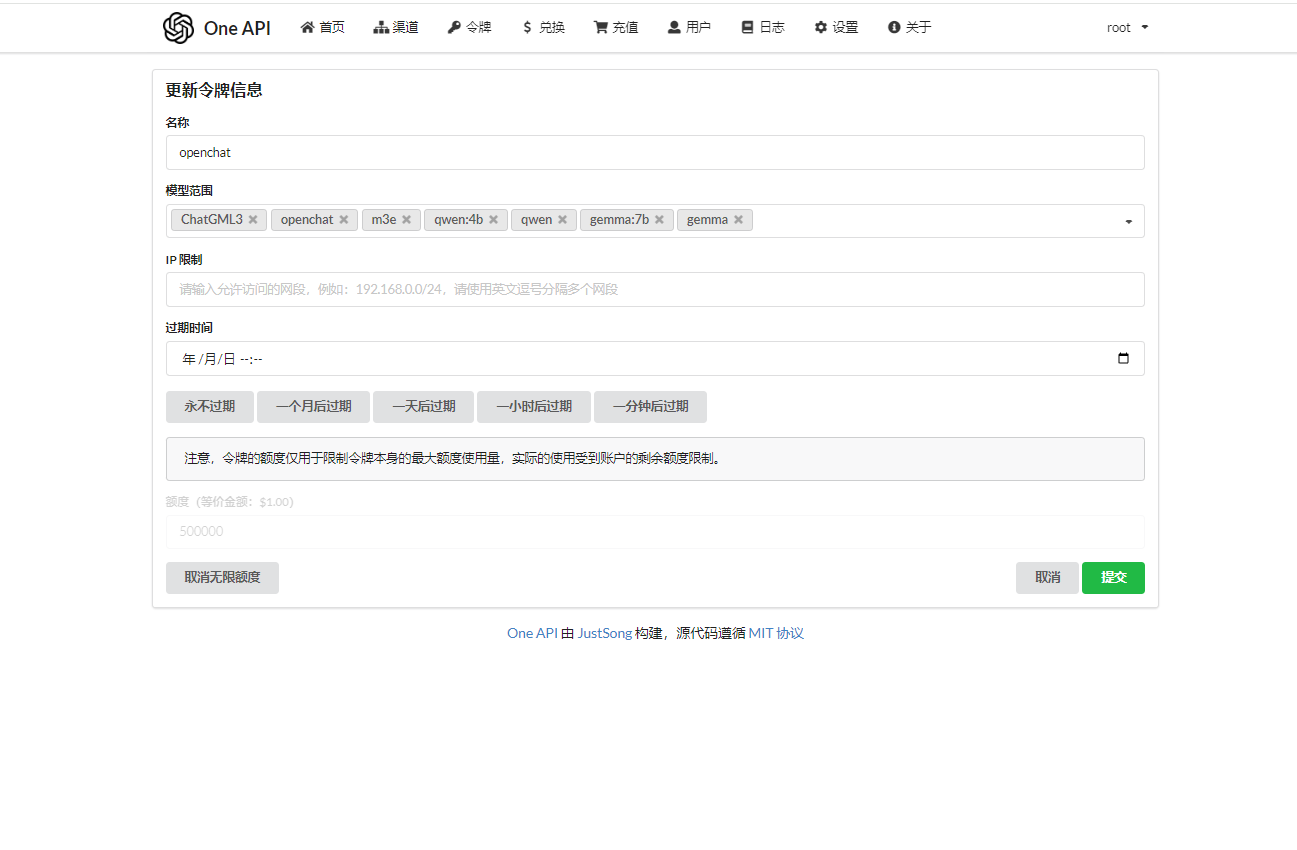

- 新建令牌

- 新建令牌后,复制令牌sdk备用

fastgpt环境搭建

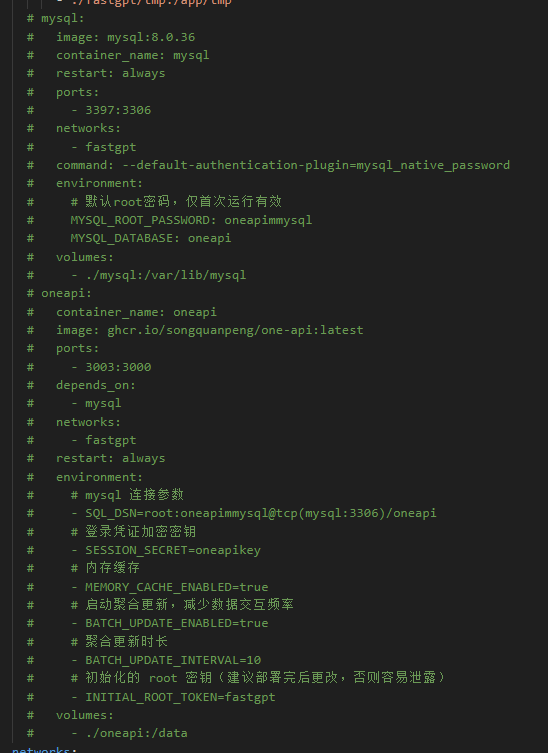

- 非 Linux 环境或无法访问外网环境,可手动创建一个目录,并下载下面2个链接的文件: docker-compose.yml,config.json

注意: docker-compose.yml 配置文件中 Mongo 为 5.x,部分服务器不支持,需手动更改其镜像版本为 4.4.24(需要自己在docker hub下载,阿里云镜像没做备份)

- config.json配置文件修改

- 打开下载的config.json

- 复制并替换

**llmModels**数组中的第一组数据,修改model和name属性为你部署的模型属性,其他可以不做修改

{ "model": "gemma:2b", "name": "gemma:2b", "maxContext": 16000, "avatar": "/imgs/model/openai.svg", "maxResponse": 4000, "quoteMaxToken": 13000, "maxTemperature": 1.2, "charsPointsPrice": 0, "censor": false, "vision": false, "datasetProcess": true, "usedInClassify": true, "usedInExtractFields": true, "usedInToolCall": true, "usedInQueryExtension": true, "toolChoice": true, "functionCall": true, "customCQPrompt": "", "customExtractPrompt": "", "defaultSystemChatPrompt": "", "defaultConfig": {} },

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

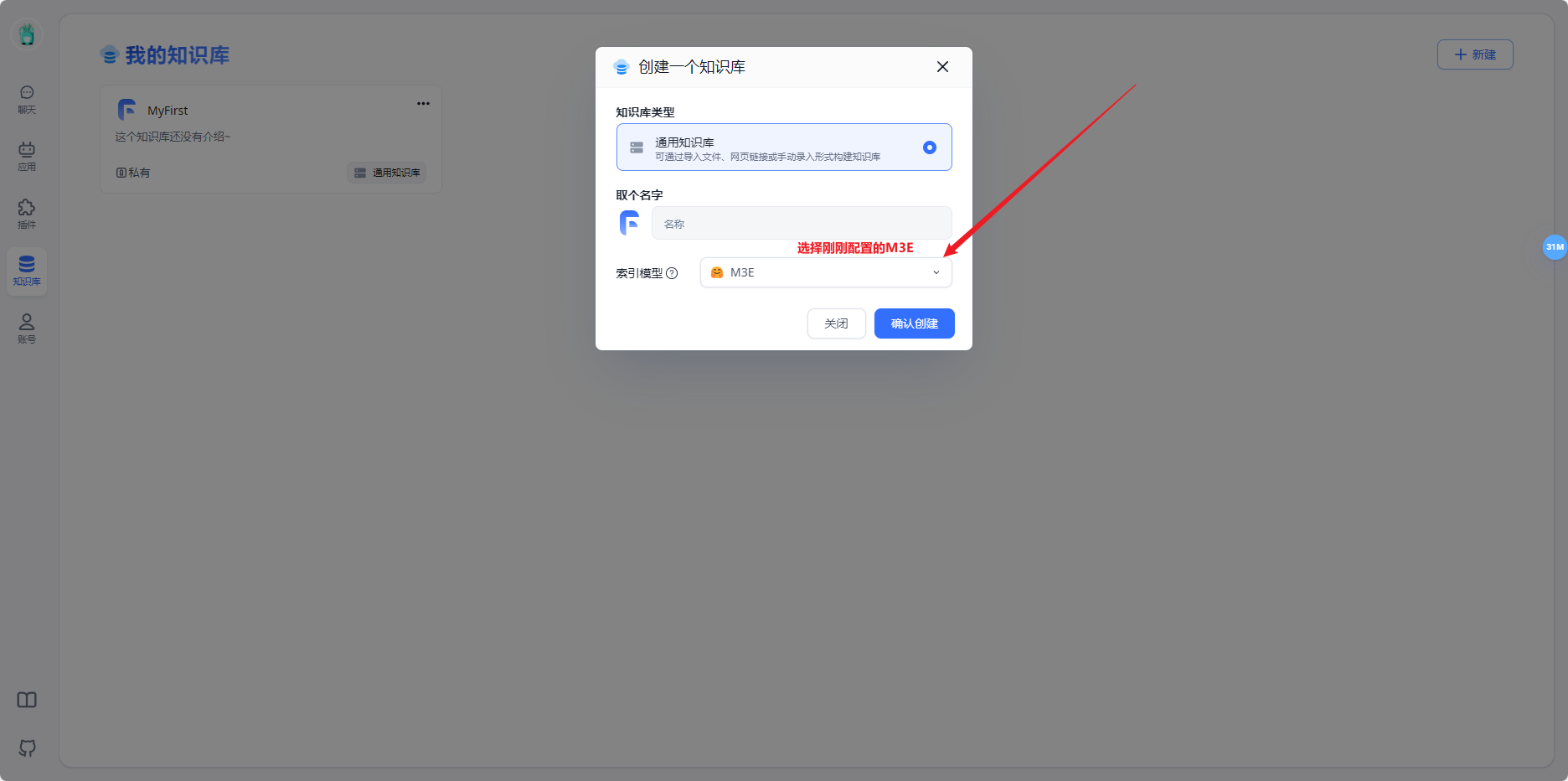

- 如果你是ollama部署的大模型

- 打开下载的config.json

- 在

**vectorModels**数组中添加以下数据

{

"model": "m3e",

"name": "M3E",

"inputPrice": 0,

"outputPrice": 0,

"defaultToken": 700,

"maxToken": 1800,

"weight": 100

}

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 打开docker-compose.yml

- 注释掉mysql 及 oneapi相关配置

- **启动容器 **

在 docker-compose.yml 同级目录下执行。请确保docker-compose版本最好在2.17以上,否则可能无法执行自动化命令。

# 启动容器

docker-compose up -d

# 等待10s,OneAPI第一次总是要重启几次才能连上Mysql

sleep 10

# 重启一次oneapi(由于OneAPI的默认Key有点问题,不重启的话会提示找不到渠道,临时手动重启一次解决,等待作者修复)

docker restart oneapi

- 1

- 2

- 3

- 4

- 5

- 6

- 访问 FastGPT

目前可以通过 ip:3000 直接访问(注意防火墙)。登录用户名为 root,密码为docker-compose.yml环境变量里设置的 DEFAULT_ROOT_PSW。

如果需要域名访问,请自行安装并配置 Nginx。

首次运行,会自动初始化 root 用户,密码为 1234

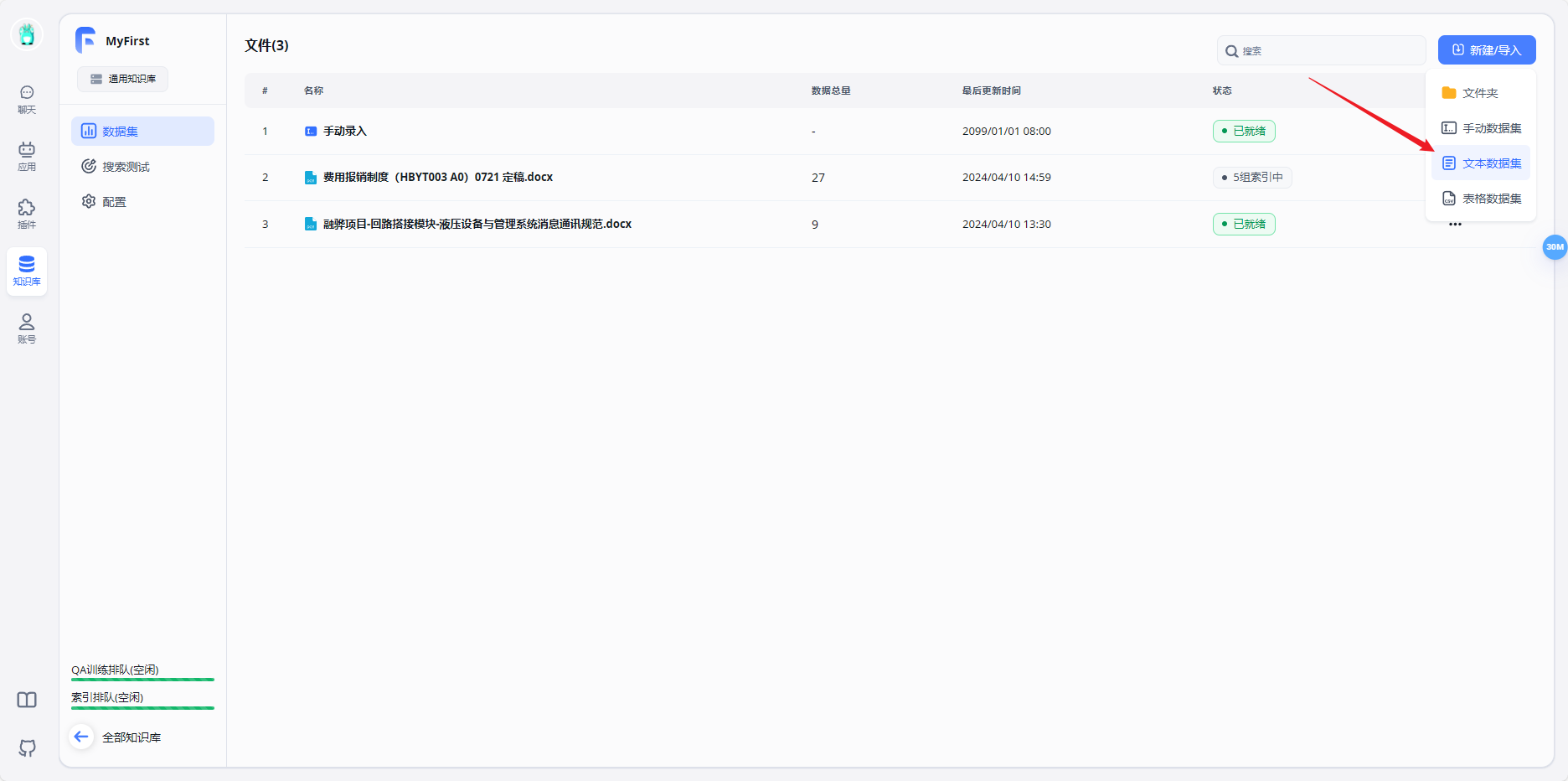

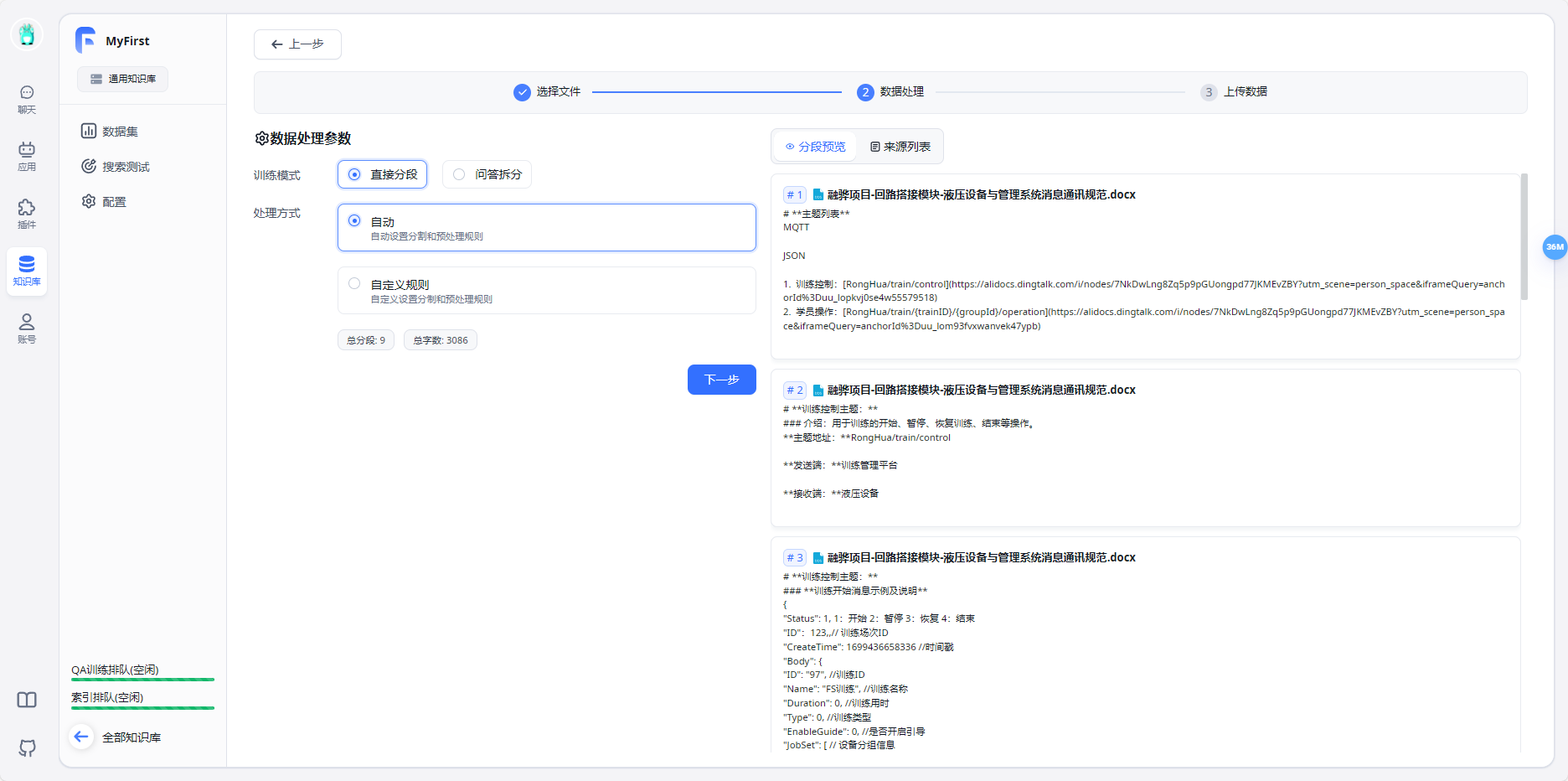

- 新建知识库

- 上传知识库文件

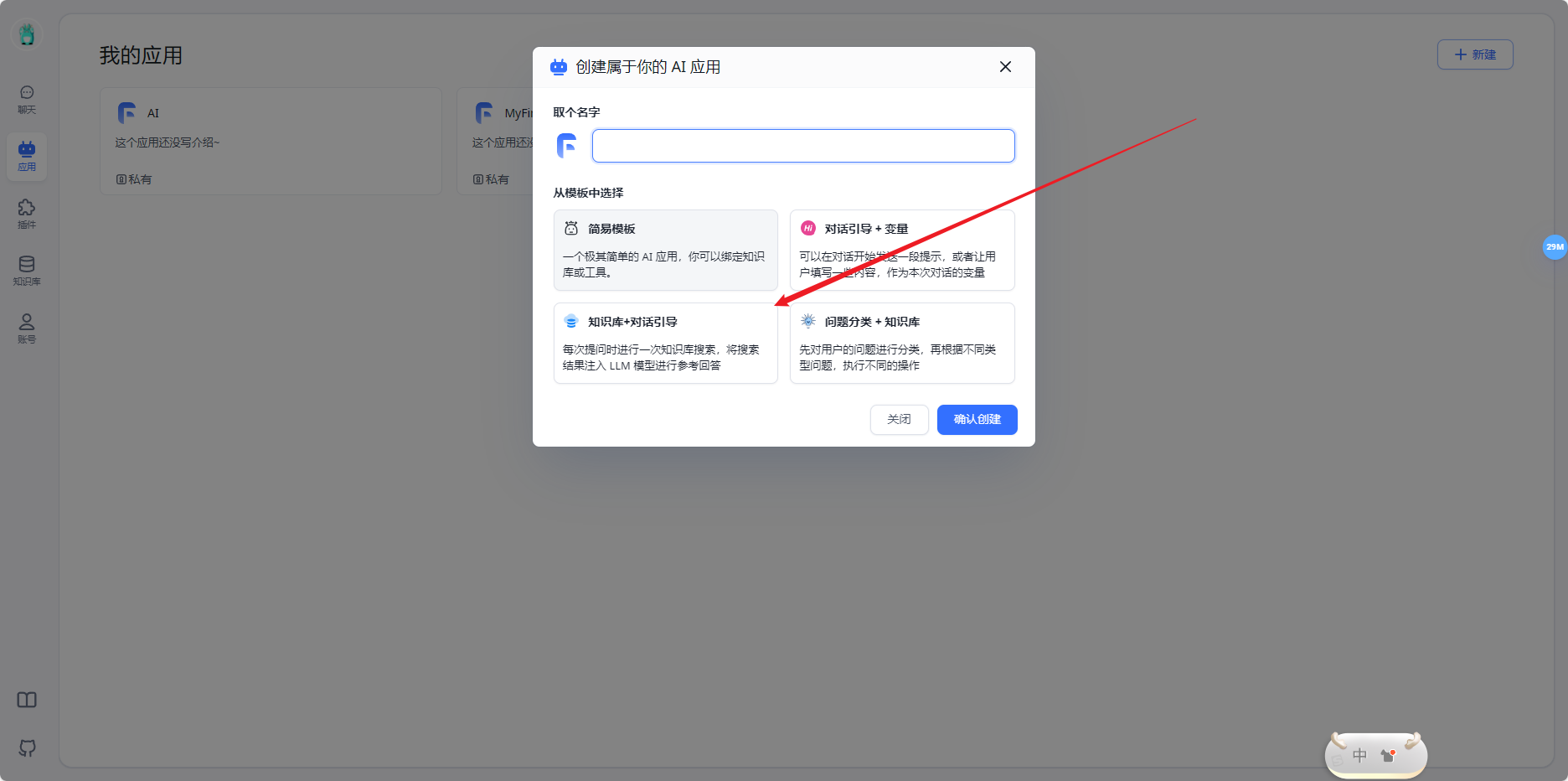

- 新建AI应用

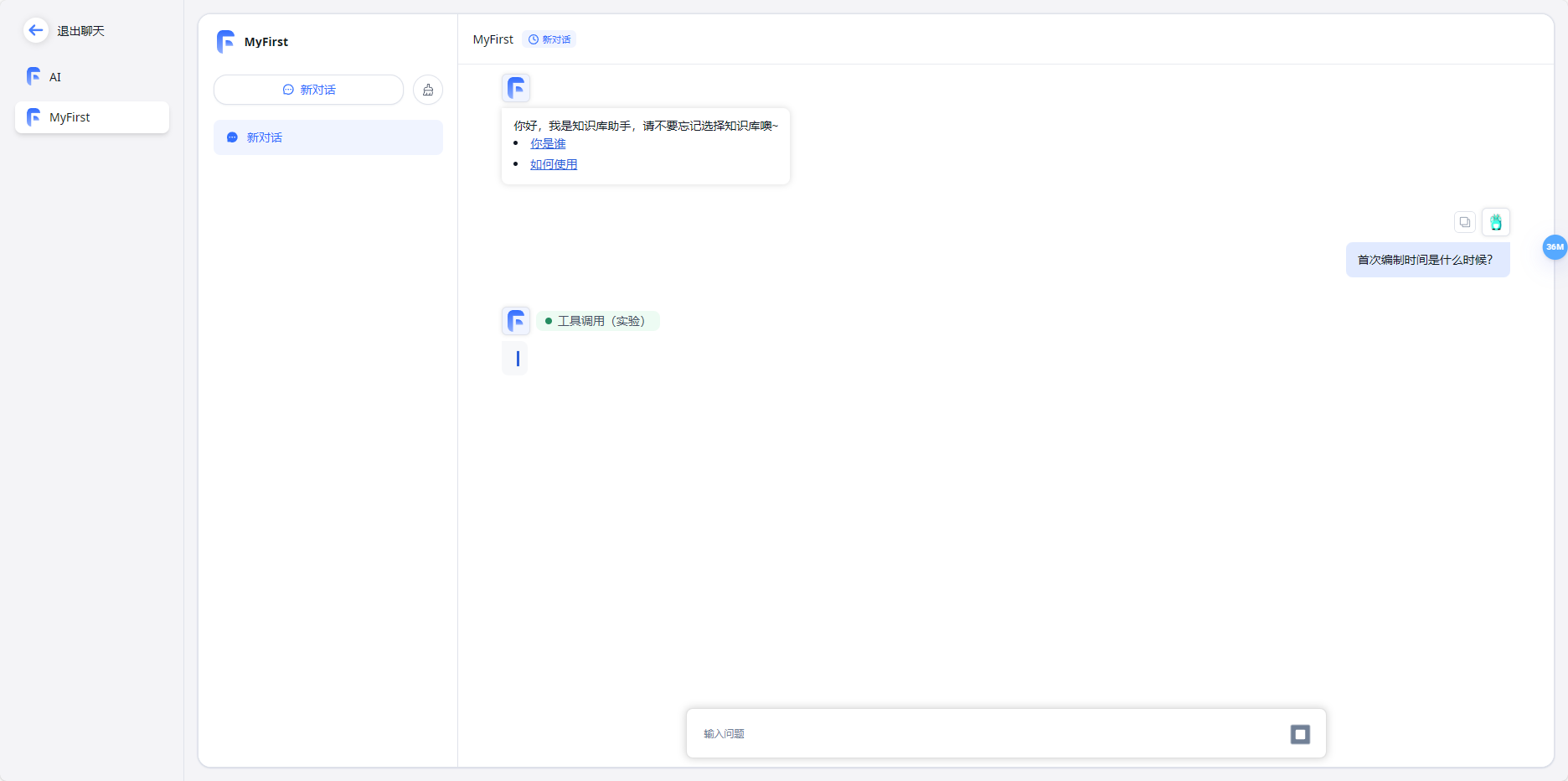

- 开始使用

运行报错

报huggingface-hub的错

pip install huggingface-hub==0.20.3

显存不足尝试设置环境变量

set PYTORCH_CUDA_ALLOC_COFF=expandable_segments:True

再次运行python api_server.py(经测试无用)

torch.cuda.OutOfMemoryError: CUDA out of memory. Tried to allocate 108.00 MiB. GPU 0 has a total capacity of 6.00 GiB of which 0 bytes is free. Of the allocated memory 12.31 GiB is allocated by PyTorch, and 1.27 MiB is reserved by PyTorch but unallocated. If reserved but unallocated memory is large try setting PYTORCH_CUDA_ALLOC_CONF=expandable_segments:True to avoid fragmentation. See documentation for Memory Management (https://pytorch.org/docs/stable/notes/cuda.html#environment-variables))

api_server.py整体

""" This script implements an API for the ChatGLM3-6B model, formatted similarly to OpenAI's API (https://platform.openai.com/docs/api-reference/chat). It's designed to be run as a web server using FastAPI and uvicorn, making the ChatGLM3-6B model accessible through OpenAI Client. Key Components and Features: - Model and Tokenizer Setup: Configures the model and tokenizer paths and loads them. - FastAPI Configuration: Sets up a FastAPI application with CORS middleware for handling cross-origin requests. - API Endpoints: - "/v1/models": Lists the available models, specifically ChatGLM3-6B. - "/v1/chat/completions": Processes chat completion requests with options for streaming and regular responses. - "/v1/embeddings": Processes Embedding request of a list of text inputs. - Token Limit Caution: In the OpenAI API, 'max_tokens' is equivalent to HuggingFace's 'max_new_tokens', not 'max_length'. For instance, setting 'max_tokens' to 8192 for a 6b model would result in an error due to the model's inability to output that many tokens after accounting for the history and prompt tokens. - Stream Handling and Custom Functions: Manages streaming responses and custom function calls within chat responses. - Pydantic Models: Defines structured models for requests and responses, enhancing API documentation and type safety. - Main Execution: Initializes the model and tokenizer, and starts the FastAPI app on the designated host and port. Note: This script doesn't include the setup for special tokens or multi-GPU support by default. Users need to configure their special tokens and can enable multi-GPU support as per the provided instructions. Embedding Models only support in One GPU. Running this script requires 14-15GB of GPU memory. 2 GB for the embedding model and 12-13 GB for the FP16 ChatGLM3 LLM. """ import os import time import tiktoken import torch import uvicorn from fastapi import FastAPI, HTTPException, Response from fastapi.middleware.cors import CORSMiddleware from contextlib import asynccontextmanager from typing import List, Literal, Optional, Union from loguru import logger from pydantic import BaseModel, Field from transformers import AutoTokenizer, AutoModel from utils import process_response, generate_chatglm3, generate_stream_chatglm3 from sentence_transformers import SentenceTransformer from sse_starlette.sse import EventSourceResponse # Set up limit request time EventSourceResponse.DEFAULT_PING_INTERVAL = 1000000 # set LLM path MODEL_PATH = os.environ.get('MODEL_PATH', 'D:\WangMing\FastGPT\models\chatglm3-6b-copy') TOKENIZER_PATH = os.environ.get("TOKENIZER_PATH", 'D:\WangMing\FastGPT\models\chatglm3-6b-copy') # set Embedding Model path EMBEDDING_PATH = os.environ.get('EMBEDDING_PATH', 'D:\WangMing\FastGPT\models\m3e-base') @asynccontextmanager async def lifespan(app: FastAPI): yield if torch.cuda.is_available(): torch.cuda.empty_cache() torch.cuda.ipc_collect() app = FastAPI(lifespan=lifespan) app.add_middleware( CORSMiddleware, allow_origins=["*"], allow_credentials=True, allow_methods=["*"], allow_headers=["*"], ) class ModelCard(BaseModel): id: str object: str = "model" created: int = Field(default_factory=lambda: int(time.time())) owned_by: str = "owner" root: Optional[str] = None parent: Optional[str] = None permission: Optional[list] = None class ModelList(BaseModel): object: str = "list" data: List[ModelCard] = [] class FunctionCallResponse(BaseModel): name: Optional[str] = None arguments: Optional[str] = None class ChatMessage(BaseModel): role: Literal["user", "assistant", "system", "function"] content: str = None name: Optional[str] = None function_call: Optional[FunctionCallResponse] = None class DeltaMessage(BaseModel): role: Optional[Literal["user", "assistant", "system"]] = None content: Optional[str] = None function_call: Optional[FunctionCallResponse] = None ## for Embedding class EmbeddingRequest(BaseModel): input: List[str] model: str class CompletionUsage(BaseModel): prompt_tokens: int completion_tokens: int total_tokens: int class EmbeddingResponse(BaseModel): data: list model: str object: str usage: CompletionUsage # for ChatCompletionRequest class UsageInfo(BaseModel): prompt_tokens: int = 0 total_tokens: int = 0 completion_tokens: Optional[int] = 0 class ChatCompletionRequest(BaseModel): model: str messages: List[ChatMessage] temperature: Optional[float] = 0.8 top_p: Optional[float] = 0.8 max_tokens: Optional[int] = None stream: Optional[bool] = False tools: Optional[Union[dict, List[dict]]] = None repetition_penalty: Optional[float] = 1.1 class ChatCompletionResponseChoice(BaseModel): index: int message: ChatMessage finish_reason: Literal["stop", "length", "function_call"] class ChatCompletionResponseStreamChoice(BaseModel): delta: DeltaMessage finish_reason: Optional[Literal["stop", "length", "function_call"]] index: int class ChatCompletionResponse(BaseModel): model: str id: str object: Literal["chat.completion", "chat.completion.chunk"] choices: List[Union[ChatCompletionResponseChoice, ChatCompletionResponseStreamChoice]] created: Optional[int] = Field(default_factory=lambda: int(time.time())) usage: Optional[UsageInfo] = None @app.get("/health") async def health() -> Response: """Health check.""" return Response(status_code=200) @app.post("/v1/embeddings", response_model=EmbeddingResponse) async def get_embeddings(request: EmbeddingRequest): embeddings = [embedding_model.encode(text) for text in request.input] embeddings = [embedding.tolist() for embedding in embeddings] def num_tokens_from_string(string: str) -> int: """ Returns the number of tokens in a text string. use cl100k_base tokenizer """ encoding = tiktoken.get_encoding('cl100k_base') num_tokens = len(encoding.encode(string)) return num_tokens response = { "data": [ { "object": "embedding", "embedding": embedding, "index": index } for index, embedding in enumerate(embeddings) ], "model": request.model, "object": "list", "usage": CompletionUsage( prompt_tokens=sum(len(text.split()) for text in request.input), completion_tokens=0, total_tokens=sum(num_tokens_from_string(text) for text in request.input), ) } return response @app.get("/v1/models", response_model=ModelList) async def list_models(): model_card = ModelCard( id="chatglm3-6b" ) return ModelList( data=[model_card] ) @app.post("/v1/chat/completions", response_model=ChatCompletionResponse) async def create_chat_completion(request: ChatCompletionRequest): global model, tokenizer if len(request.messages) < 1 or request.messages[-1].role == "assistant": raise HTTPException(status_code=400, detail="Invalid request") gen_params = dict( messages=request.messages, temperature=request.temperature, top_p=request.top_p, max_tokens=request.max_tokens or 1024, echo=False, stream=request.stream, repetition_penalty=request.repetition_penalty, tools=request.tools, ) logger.debug(f"==== request ====\n{gen_params}") if request.stream: # Use the stream mode to read the first few characters, if it is not a function call, direct stram output predict_stream_generator = predict_stream(request.model, gen_params) output = next(predict_stream_generator) if not contains_custom_function(output): return EventSourceResponse(predict_stream_generator, media_type="text/event-stream") # Obtain the result directly at one time and determine whether tools needs to be called. logger.debug(f"First result output:\n{output}") function_call = None if output and request.tools: try: function_call = process_response(output, use_tool=True) except: logger.warning("Failed to parse tool call") # CallFunction if isinstance(function_call, dict): function_call = FunctionCallResponse(**function_call) """ In this demo, we did not register any tools. You can use the tools that have been implemented in our `tools_using_demo` and implement your own streaming tool implementation here. Similar to the following method: function_args = json.loads(function_call.arguments) tool_response = dispatch_tool(tool_name: str, tool_params: dict) """ tool_response = "" if not gen_params.get("messages"): gen_params["messages"] = [] gen_params["messages"].append(ChatMessage( role="assistant", content=output, )) gen_params["messages"].append(ChatMessage( role="function", name=function_call.name, content=tool_response, )) # Streaming output of results after function calls generate = predict(request.model, gen_params) return EventSourceResponse(generate, media_type="text/event-stream") else: # Handled to avoid exceptions in the above parsing function process. generate = parse_output_text(request.model, output) return EventSourceResponse(generate, media_type="text/event-stream") # Here is the handling of stream = False response = generate_chatglm3(model, tokenizer, gen_params) # Remove the first newline character if response["text"].startswith("\n"): response["text"] = response["text"][1:] response["text"] = response["text"].strip() usage = UsageInfo() function_call, finish_reason = None, "stop" if request.tools: try: function_call = process_response(response["text"], use_tool=True) except: logger.warning("Failed to parse tool call, maybe the response is not a tool call or have been answered.") if isinstance(function_call, dict): finish_reason = "function_call" function_call = FunctionCallResponse(**function_call) message = ChatMessage( role="assistant", content=response["text"], function_call=function_call if isinstance(function_call, FunctionCallResponse) else None, ) logger.debug(f"==== message ====\n{message}") choice_data = ChatCompletionResponseChoice( index=0, message=message, finish_reason=finish_reason, ) task_usage = UsageInfo.model_validate(response["usage"]) for usage_key, usage_value in task_usage.model_dump().items(): setattr(usage, usage_key, getattr(usage, usage_key) + usage_value) return ChatCompletionResponse( model=request.model, id="", # for open_source model, id is empty choices=[choice_data], object="chat.completion", usage=usage ) async def predict(model_id: str, params: dict): global model, tokenizer choice_data = ChatCompletionResponseStreamChoice( index=0, delta=DeltaMessage(role="assistant"), finish_reason=None ) chunk = ChatCompletionResponse(model=model_id, id="", choices=[choice_data], object="chat.completion.chunk") yield "{}".format(chunk.model_dump_json(exclude_unset=True)) previous_text = "" for new_response in generate_stream_chatglm3(model, tokenizer, params): decoded_unicode = new_response["text"] delta_text = decoded_unicode[len(previous_text):] previous_text = decoded_unicode finish_reason = new_response["finish_reason"] if len(delta_text) == 0 and finish_reason != "function_call": continue function_call = None if finish_reason == "function_call": try: function_call = process_response(decoded_unicode, use_tool=True) except: logger.warning( "Failed to parse tool call, maybe the response is not a tool call or have been answered.") if isinstance(function_call, dict): function_call = FunctionCallResponse(**function_call) delta = DeltaMessage( content=delta_text, role="assistant", function_call=function_call if isinstance(function_call, FunctionCallResponse) else None, ) choice_data = ChatCompletionResponseStreamChoice( index=0, delta=delta, finish_reason=finish_reason ) chunk = ChatCompletionResponse( model=model_id, id="", choices=[choice_data], object="chat.completion.chunk" ) yield "{}".format(chunk.model_dump_json(exclude_unset=True)) choice_data = ChatCompletionResponseStreamChoice( index=0, delta=DeltaMessage(), finish_reason="stop" ) chunk = ChatCompletionResponse( model=model_id, id="", choices=[choice_data], object="chat.completion.chunk" ) yield "{}".format(chunk.model_dump_json(exclude_unset=True)) yield '[DONE]' def predict_stream(model_id, gen_params): """ The function call is compatible with stream mode output. The first seven characters are determined. If not a function call, the stream output is directly generated. Otherwise, the complete character content of the function call is returned. :param model_id: :param gen_params: :return: """ output = "" is_function_call = False has_send_first_chunk = False for new_response in generate_stream_chatglm3(model, tokenizer, gen_params): decoded_unicode = new_response["text"] delta_text = decoded_unicode[len(output):] output = decoded_unicode # When it is not a function call and the character length is> 7, # try to judge whether it is a function call according to the special function prefix if not is_function_call and len(output) > 7: # Determine whether a function is called is_function_call = contains_custom_function(output) if is_function_call: continue # Non-function call, direct stream output finish_reason = new_response["finish_reason"] # Send an empty string first to avoid truncation by subsequent next() operations. if not has_send_first_chunk: message = DeltaMessage( content="", role="assistant", function_call=None, ) choice_data = ChatCompletionResponseStreamChoice( index=0, delta=message, finish_reason=finish_reason ) chunk = ChatCompletionResponse( model=model_id, id="", choices=[choice_data], created=int(time.time()), object="chat.completion.chunk" ) yield "{}".format(chunk.model_dump_json(exclude_unset=True)) send_msg = delta_text if has_send_first_chunk else output has_send_first_chunk = True message = DeltaMessage( content=send_msg, role="assistant", function_call=None, ) choice_data = ChatCompletionResponseStreamChoice( index=0, delta=message, finish_reason=finish_reason ) chunk = ChatCompletionResponse( model=model_id, id="", choices=[choice_data], created=int(time.time()), object="chat.completion.chunk" ) yield "{}".format(chunk.model_dump_json(exclude_unset=True)) if is_function_call: yield output else: yield '[DONE]' async def parse_output_text(model_id: str, value: str): """ Directly output the text content of value :param model_id: :param value: :return: """ choice_data = ChatCompletionResponseStreamChoice( index=0, delta=DeltaMessage(role="assistant", content=value), finish_reason=None ) chunk = ChatCompletionResponse(model=model_id, id="", choices=[choice_data], object="chat.completion.chunk") yield "{}".format(chunk.model_dump_json(exclude_unset=True)) choice_data = ChatCompletionResponseStreamChoice( index=0, delta=DeltaMessage(), finish_reason="stop" ) chunk = ChatCompletionResponse(model=model_id, id="", choices=[choice_data], object="chat.completion.chunk") yield "{}".format(chunk.model_dump_json(exclude_unset=True)) yield '[DONE]' def contains_custom_function(value: str) -> bool: """ Determine whether 'function_call' according to a special function prefix. For example, the functions defined in "tools_using_demo/tool_register.py" are all "get_xxx" and start with "get_" [Note] This is not a rigorous judgment method, only for reference. :param value: :return: """ return value and 'get_' in value if __name__ == "__main__": # Load LLM tokenizer = AutoTokenizer.from_pretrained("D:\WangMing\FastGPT\models\chatglm3-6b-copy", trust_remote_code=True) model = AutoModel.from_pretrained("D:\WangMing\FastGPT\models\chatglm3-6b-copy", trust_remote_code=True, device_map="auto").quantize(4).eval() # load Embedding embedding_model = SentenceTransformer("D:\WangMing\FastGPT\models\chatglm3-6b-copy", trust_remote_code=True, device="cuda") uvicorn.run(app, host='0.0.0.0', port=8000, workers=1)

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 38

- 39

- 40

- 41

- 42

- 43

- 44

- 45

- 46

- 47

- 48

- 49

- 50

- 51

- 52

- 53

- 54

- 55

- 56

- 57

- 58

- 59

- 60

- 61

- 62

- 63

- 64

- 65

- 66

- 67

- 68

- 69

- 70

- 71

- 72

- 73

- 74

- 75

- 76

- 77

- 78

- 79

- 80

- 81

- 82

- 83

- 84

- 85

- 86

- 87

- 88

- 89

- 90

- 91

- 92

- 93

- 94

- 95

- 96

- 97

- 98

- 99

- 100

- 101

- 102

- 103

- 104

- 105

- 106

- 107

- 108

- 109

- 110

- 111

- 112

- 113

- 114

- 115

- 116

- 117

- 118

- 119

- 120

- 121

- 122

- 123

- 124

- 125

- 126

- 127

- 128

- 129

- 130

- 131

- 132

- 133

- 134

- 135

- 136

- 137

- 138

- 139

- 140

- 141

- 142

- 143

- 144

- 145

- 146

- 147

- 148

- 149

- 150

- 151

- 152

- 153

- 154

- 155

- 156

- 157

- 158

- 159

- 160

- 161

- 162

- 163

- 164

- 165

- 166

- 167

- 168

- 169

- 170

- 171

- 172

- 173

- 174

- 175

- 176

- 177

- 178

- 179

- 180

- 181

- 182

- 183

- 184

- 185

- 186

- 187

- 188

- 189

- 190

- 191

- 192

- 193

- 194

- 195

- 196

- 197

- 198

- 199

- 200

- 201

- 202

- 203

- 204

- 205

- 206

- 207

- 208

- 209

- 210

- 211

- 212

- 213

- 214

- 215

- 216

- 217

- 218

- 219

- 220

- 221

- 222

- 223

- 224

- 225

- 226

- 227

- 228

- 229

- 230

- 231

- 232

- 233

- 234

- 235

- 236

- 237

- 238

- 239

- 240

- 241

- 242

- 243

- 244

- 245

- 246

- 247

- 248

- 249

- 250

- 251

- 252

- 253

- 254

- 255

- 256

- 257

- 258

- 259

- 260

- 261

- 262

- 263

- 264

- 265

- 266

- 267

- 268

- 269

- 270

- 271

- 272

- 273

- 274

- 275

- 276

- 277

- 278

- 279

- 280

- 281

- 282

- 283

- 284

- 285

- 286

- 287

- 288

- 289

- 290

- 291

- 292

- 293

- 294

- 295

- 296

- 297

- 298

- 299

- 300

- 301

- 302

- 303

- 304

- 305

- 306

- 307

- 308

- 309

- 310

- 311

- 312

- 313

- 314

- 315

- 316

- 317

- 318

- 319

- 320

- 321

- 322

- 323

- 324

- 325

- 326

- 327

- 328

- 329

- 330

- 331

- 332

- 333

- 334

- 335

- 336

- 337

- 338

- 339

- 340

- 341

- 342

- 343

- 344

- 345

- 346

- 347

- 348

- 349

- 350

- 351

- 352

- 353

- 354

- 355

- 356

- 357

- 358

- 359

- 360

- 361

- 362

- 363

- 364

- 365

- 366

- 367

- 368

- 369

- 370

- 371

- 372

- 373

- 374

- 375

- 376

- 377

- 378

- 379

- 380

- 381

- 382

- 383

- 384

- 385

- 386

- 387

- 388

- 389

- 390

- 391

- 392

- 393

- 394

- 395

- 396

- 397

- 398

- 399

- 400

- 401

- 402

- 403

- 404

- 405

- 406

- 407

- 408

- 409

- 410

- 411

- 412

- 413

- 414

- 415

- 416

- 417

- 418

- 419

- 420

- 421

- 422

- 423

- 424

- 425

- 426

- 427

- 428

- 429

- 430

- 431

- 432

- 433

- 434

- 435

- 436

- 437

- 438

- 439

- 440

- 441

- 442

- 443

- 444

- 445

- 446

- 447

- 448

- 449

- 450

- 451

- 452

- 453

- 454

- 455

- 456

- 457

- 458

- 459

- 460

- 461

- 462

- 463

- 464

- 465

- 466

- 467

- 468

- 469

- 470

- 471

- 472

- 473

- 474

- 475

- 476

- 477

- 478

- 479

- 480

- 481

- 482

- 483

- 484

- 485

- 486

- 487

- 488

- 489

- 490

- 491

- 492

- 493

- 494

- 495

- 496

- 497

- 498

- 499

- 500

- 501

- 502

- 503

- 504

- 505

- 506

- 507

- 508

- 509

- 510

- 511

- 512

- 513

- 514

- 515

- 516

- 517

- 518

- 519

- 520

- 521

- 522

- 523

- 524

- 525

- 526

- 527

- 528

- 529

- 530

- 531

- 532

- 533

- 534