NLP LLM (Pretraining + Finetuning): HuggingFace Transformers Code

Related article: NLP LLM (Pretraining + Finetuning): Theory

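Introduction to HuggingFace

Hugging Face is best known in the NLP field, and most of the models it provides are Transformer-based. For ease of use, Hugging Face also offers the following projects:

- Transformers (GitHub, official docs): Transformers provides thousands of pretrained models for tasks across text, audio, and computer vision. It is the core HuggingFace project; learning HuggingFace largely means learning how to use this library.

- Datasets (GitHub, official docs): a lightweight dataset framework with two main features: (1) download and preprocess common public datasets in one line of code; (2) a fast, easy-to-use data preprocessing library.

- Accelerate (GitHub, official docs): helps PyTorch users run on multi-GPU/TPU/fp16 with little effort.

- Spaces: hosts many fun deep learning demo apps that are worth trying.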

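The Transformers library

1. Pipeline

A pipeline chains data preprocessing (tokenizer), the model call (model), and result postprocessing into a single flow.

How Pipeline works

pipeline(data, model, tokenizer, device) works in three stages: the tokenizer preprocesses the input, the model runs on the chosen device, and the raw output is postprocessed into a readable result.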
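The three stages can be sketched in plain Python. This is a toy illustration only: `toy_tokenizer`, `toy_model`, `toy_postprocess`, and the tiny vocabulary are hypothetical stand-ins, not transformers APIs, and the device argument is not modeled.

```python
import math

# Hypothetical toy components; none of these are transformers APIs.
TOY_VOCAB = {"[UNK]": 0, "good": 1, "bad": 2, "movie": 3}
TOY_LABELS = ["negative", "positive"]


def toy_tokenizer(text):
    """Preprocess: split on whitespace and map each word to an id."""
    return [TOY_VOCAB.get(word, TOY_VOCAB["[UNK]"]) for word in text.lower().split()]


def toy_model(input_ids):
    """Model call: return one raw score (logit) per label."""
    positive = sum(1.0 for i in input_ids if i == TOY_VOCAB["good"])
    negative = sum(1.0 for i in input_ids if i == TOY_VOCAB["bad"])
    return [negative, positive]


def toy_postprocess(logits):
    """Postprocess: softmax over the logits, then pick the best label."""
    shift = max(logits)
    exps = [math.exp(x - shift) for x in logits]
    total = sum(exps)
    probs = [e / total for e in exps]
    best = max(range(len(probs)), key=probs.__getitem__)
    return {"label": TOY_LABELS[best], "score": probs[best]}


def toy_pipeline(text):
    """Chain the three stages in order, as a pipeline does."""
    return toy_postprocess(toy_model(toy_tokenizer(text)))


result = toy_pipeline("good movie")
print(result)
```

A real pipeline swaps in a pretrained tokenizer and model for the first two stages, but the order of the stages stays the same.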

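How to use Pipeline

The most common approach is to build the model and the tokenizer separately, then pass both to pipeline together with the task type.

(For the details of each pipeline type, read the source code of the corresponding Pipeline class.)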

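2. Tokenizer

The Tokenizer simplifies the text-to-token step that used to be tedious in NLP. It helps to distinguish the Tokenizer from the Embedding:

- First, the Tokenizer maps

(batch_size, sequence_length) text words -> (batch_size, sequence_length) id tensor

The Tokenizer converts each word (i.e. token) in a text into a number (i.e. id), completing the token -> id mapping;

- Then, the Embedding maps

(batch_size, sequence_length) id tensor -> (batch_size, sequence_length, token dimension)

The Embedding step (turning a number into a vector, usually done by an embedding layer inside the model) completes the id -> feature mapping. The forward() of the embedding layer is mathematically equivalent to one-hot encoding followed by a matrix multiplication (in practice it is a row lookup, which gives the same result). embedding.weight is a learnable matrix of shape (v, h), where v is the vocabulary size and h is the hidden dimension. Input tokens of shape (b, s) become (b, s, v) after one-hot encoding; multiplying by the weight matrix, (b, s, v) @ (v, h) ==> (b, s, h), yields the final text embedding of shape (b, s, h), where b is the batch size, s is the sequence length, and h is the hidden dimension, i.e. the dimension of each token.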
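The equivalence between "one-hot + matrix multiplication" and a plain row lookup can be checked with a small sketch in pure Python. The sizes (v = 4, h = 3) and the weight values are made up for illustration; a real embedding layer holds learned weights.

```python
# Toy sizes (assumptions for illustration): v = 4 tokens, h = 3 dimensions.
weight = [
    [0.1, 0.2, 0.3],  # row for id 0
    [1.0, 1.1, 1.2],  # row for id 1
    [2.0, 2.1, 2.2],  # row for id 2
    [3.0, 3.1, 3.2],  # row for id 3
]
ids = [[2, 0, 3], [1, 1, 2]]  # shape (b, s) = (2, 3)
v, h = len(weight), len(weight[0])


def one_hot(token_id, size):
    """Return a vector of length `size` with a single 1.0 at `token_id`."""
    return [1.0 if k == token_id else 0.0 for k in range(size)]


def embed_by_matmul(batch):
    """(b, s) -> one-hot (b, s, v) -> multiply by (v, h) -> (b, s, h)."""
    out = []
    for seq in batch:
        rows = []
        for token_id in seq:
            hot = one_hot(token_id, v)
            rows.append([sum(hot[k] * weight[k][j] for k in range(v)) for j in range(h)])
        out.append(rows)
    return out


def embed_by_lookup(batch):
    """Equivalent shortcut: pick row `token_id` of the weight matrix."""
    return [[list(weight[token_id]) for token_id in seq] for seq in batch]


via_matmul = embed_by_matmul(ids)
via_lookup = embed_by_lookup(ids)
print(via_matmul == via_lookup)
```

Both functions return a nested list of shape (2, 3, 3), matching (b, s, h) in the text.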

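A tokenizer works by using the tokenizer.vocab dictionary to perform the token <=> id mapping:

- token => id:

tokenizer.encode(test_sentences) or tokenizer(test_sentences); encode() returns only the id list, while tokenizer() returns a dict whose input_ids entry holds the same ids

# Convert a string to an id sequence, also called encoding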

ids = tokenizer.encode(sen, add_special_tokens=True)

ids

# [101, 2483, 2207, 4638, 2769, 738, 3300, 1920, 3457, 2682, 106, 102]

- id => token:

tokenizer.decode(ids)

# Convert an id sequence back to a string, also called decoding

str_sen = tokenizer.decode(ids, skip_special_tokens=False)

str_sen

# '[CLS] 弱 小 的 我 也 有 大 梦 想! [SEP]'
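The vocabulary lookup in both directions can be sketched with a toy character-level tokenizer. The ids below are copied from the outputs in this article; `toy_encode` and `toy_decode` are hypothetical helpers, and the real tokenizer does more (WordPiece splitting, text normalization, cleanup of spaces when decoding).

```python
# A small slice of the vocabulary, using the ids shown in this article's outputs.
vocab = {
    "[PAD]": 0, "[UNK]": 100, "[CLS]": 101, "[SEP]": 102,
    "弱": 2483, "小": 2207, "的": 4638, "我": 2769, "也": 738,
    "有": 3300, "大": 1920, "梦": 3457, "想": 2682, "!": 106,
}
id_to_token = {token_id: token for token, token_id in vocab.items()}
SPECIAL_TOKENS = {"[PAD]", "[UNK]", "[CLS]", "[SEP]"}


def toy_encode(text, add_special_tokens=True):
    """token => id: look up every character, falling back to [UNK]."""
    ids = [vocab.get(ch, vocab["[UNK]"]) for ch in text]
    if add_special_tokens:
        ids = [vocab["[CLS]"]] + ids + [vocab["[SEP]"]]
    return ids


def toy_decode(ids, skip_special_tokens=False):
    """id => token: reverse lookup, then join the tokens with spaces."""
    tokens = [id_to_token[token_id] for token_id in ids]
    if skip_special_tokens:
        tokens = [t for t in tokens if t not in SPECIAL_TOKENS]
    return " ".join(tokens)


ids = toy_encode("弱小的我也有大梦想!")
print(ids)
print(toy_decode(ids, skip_special_tokens=True))
```

Characters missing from the vocabulary map to the [UNK] id, which is why encoding followed by decoding is not always lossless.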

2.1 Quick Tokenizer calls

tokenizer.encode() == tokenizer.tokenize() + tokenizer.convert_tokens_to_ids(), plus the special tokens [CLS] and [SEP]; tokenizer(...)["input_ids"] returns the same ids

ids = tokenizer.encode(sen, padding="max_length", max_length=15)

ids

# [101, 2483, 2207, 4638, 2769, 738, 3300, 1920, 3457, 2682, 106, 102, 0, 0, 0]

inputs = tokenizer(sen, padding="max_length", max_length=15)

inputs

"""

{'input_ids': [101, 2483, 2207, 4638, 2769, 738, 3300, 1920, 3457, 2682, 106, 102, 0, 0, 0],

'token_type_ids': [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0],

'attention_mask': [1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 0, 0, 0]}

"""

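tokenizer.encode_plus() has the same effect as tokenizer(). Compared with encode(), both also return attention_mask and token_type_ids; token_type_ids marks which sentence each token belongs to, 0 for the first sentence and 1 for the second (used in tasks such as next sentence prediction).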

inputs = tokenizer.encode_plus(sen, padding="max_length", max_length=15)

inputs

"""

{'input_ids': [101, 2483, 2207, 4638, 2769, 738, 3300, 1920, 3457, 2682, 106, 102, 0, 0, 0],

'token_type_ids': [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0],

'attention_mask': [1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 0, 0, 0]}

"""

Processing batch data (multiple sentences)

sens = ["弱小的我也有大梦想", "有梦想谁都了不起", "追逐梦想的心,比梦想本身,更可贵"]

res = tokenizer(sens)

res

"""

{'input_ids': [[101, 2483, 2207, 4638, 2769, 738, 3300, 1920, 3457, 2682, 102], [101, 3300, 3457, 2682, 6443, 6963, 749, 679, 6629, 102], [101, 6841, 6852, 3457, 2682, 4638, 2552, 8024, 3683, 3457, 2682, 3315, 6716, 8024, 3291, 1377, 6586, 102]],

'token_type_ids': [[0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0], [0, 0, 0, 0, 0, 0, 0, 0, 0, 0], [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0]],

'attention_mask': [[1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1], [1, 1, 1, 1, 1, 1, 1, 1, 1, 1], [1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1]]}

"""

2.2 Tokenizer components

Step 1: loading and saving

The Tokenizer and the Model must match, because the tokenizer's outputs are the model's inputs.

Use the same model_name in from_pretrained(model_name) for both.

from transformers import AutoTokenizer

# Load from the HuggingFace Hub: pass the model name to load the matching tokenizer

tokenizer = AutoTokenizer.from_pretrained("uer/roberta-base-finetuned-dianping-chinese")

"""

BertTokenizerFast(name_or_path='uer/roberta-base-finetuned-dianping-chinese', vocab_size=21128, model_max_length=1000000000000000019884624838656, is_fast=True, padding_side='right', truncation_side='right', special_tokens={'unk_token': '[UNK]', 'sep_token': '[SEP]', 'pad_token': '[PAD]', 'cls_token': '[CLS]', 'mask_token': '[MASK]'}, clean_up_tokenization_spaces=True)

"""

# Save the tokenizer to a local folder

tokenizer.save_pretrained("./roberta_tokenizer")

''' Files written to the folder

('./roberta_tokenizer\\tokenizer_config.json',

'./roberta_tokenizer\\special_tokens_map.json',

'./roberta_tokenizer\\vocab.txt',

'./roberta_tokenizer\\added_tokens.json',

'./roberta_tokenizer\\tokenizer.json')

'''

# Load the tokenizer from the local folder

tokenizer = AutoTokenizer.from_pretrained("./roberta_tokenizer")

"""

BertTokenizerFast(name_or_path='uer/roberta-base-finetuned-dianping-chinese', vocab_size=21128, model_max_length=1000000000000000019884624838656, is_fast=True, padding_side='right', truncation_side='right', special_tokens={'unk_token': '[UNK]', 'sep_token': '[SEP]', 'pad_token': '[PAD]', 'cls_token': '[CLS]', 'mask_token': '[MASK]'}, clean_up_tokenization_spaces=True)

"""

Step 2: tokenizing a sentence

tokenizer.tokenize() splits a sentence into tokens

sen = "弱小的我也有大梦想!"

tokens = tokenizer.tokenize(sen)

# ['弱', '小', '的', '我', '也', '有', '大', '梦', '想', '!']

Step 3: inspecting the vocabulary

The vocabulary, vocab

tokenizer.vocab

"""

{'湾': 3968,

'訴': 6260,

'##轶': 19824,

'洞': 3822,

' ̄': 8100,

'##劾': 14288,

'##care': 11014,

'asia': 8339,

'##嗑': 14679,

'##鹘': 20965,

'washington': 12262,

'##匕': 14321,

'##樟': 16619,

'癮': 4628,

'day3': 11649,

'##宵': 15213,

'##弧': 15536,

'##do': 8828,

'詭': 6279,

'3500': 9252,

'124': 9377,

'##価': 13957,

'##玄': 17428,

'##積': 18005,

'##肝': 18555,

...

'##维': 18392,

'與': 5645,

'##mark': 9882,

'偽': 984,

...}

"""

Vocabulary size, vocab_size

tokenizer.vocab_size

# 21128

Special tokens: the ids [100, 102, 0, 101, 103] correspond to ['[UNK]', '[SEP]', '[PAD]', '[CLS]', '[MASK]']

tokenizer.special_tokens_map

'''

{'unk_token': '[UNK]',

'sep_token': '[SEP]',

'pad_token': '[PAD]',

'cls_token': '[CLS]',

'mask_token': '[MASK]'}

'''

tokenizer.convert_tokens_to_ids([special for special in tokenizer.special_tokens_map.values()])

'''

[100, 102, 0, 101, 103]

'''

Step 4: converting between tokens and ids

tokenizer.convert_tokens_to_ids() maps tokens to their ids using the vocabulary. tokenizer.convert_ids_to_tokens() maps ids back to tokens.

# Convert a token sequence to an id sequence

ids = tokenizer.convert_tokens_to_ids(tokens)

ids

# [2483, 2207, 4638, 2769, 738, 3300, 1920, 3457, 2682, 106]

# Convert an id sequence to a token sequence

tokens = tokenizer.convert_ids_to_tokens(ids)

tokens

# ['弱', '小', '的', '我', '也', '有', '大', '梦', '想', '!']

# Convert a token sequence to a string

str_sen = tokenizer.convert_tokens_to_string(tokens)

str_sen

# '弱 小 的 我 也 有 大 梦 想!'

Step 5: padding and truncation

The padding and truncation arguments both work relative to max_length

# Padding

ids = tokenizer.encode(sen, padding="max_length", max_length=15)

ids

# [101, 2483, 2207, 4638, 2769, 738, 3300, 1920, 3457, 2682, 106, 102, 0, 0, 0]

# Truncation

ids = tokenizer.encode(sen, max_length=5, truncation=True)

ids

# [101, 2483, 2207, 4638, 102]

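The attention mask mirrors the padding: attention_mask = 0 means the position holds no real token, and the id at that position is padded with 0.

# Re-encode with padding so that ids contains padded positions

ids = tokenizer.encode(sen, padding="max_length", max_length=15)

attention_mask = [1 if idx != 0 else 0 for idx in ids]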

token_type_ids = [0] * len(ids)

ids, attention_mask, token_type_ids

"""

([101, 2483, 2207, 4638, 2769, 738, 3300, 1920, 3457, 2682, 106, 102, 0, 0, 0],

[1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 0, 0, 0],

[0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0])

"""
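Padding and truncation relative to max_length can be sketched in a few lines of plain Python. `toy_pad_or_truncate` is a hypothetical helper, not the library's implementation; it assumes the input already ends with [SEP] (id 102), uses 0 as the pad id as in the outputs above, and expects max_length to be at least 2.

```python
PAD_ID, SEP_ID = 0, 102  # ids taken from the special-token outputs in this article


def toy_pad_or_truncate(ids, max_length):
    """Return (ids, attention_mask), each with exactly max_length entries."""
    if len(ids) > max_length:
        # Truncation: keep the leading tokens but preserve the closing [SEP].
        ids = ids[: max_length - 1] + [SEP_ID]
    mask = [1] * len(ids)            # 1 marks a real token
    n_pad = max_length - len(ids)    # 0 when nothing needs padding
    return ids + [PAD_ID] * n_pad, mask + [0] * n_pad


ids = [101, 2483, 2207, 4638, 2769, 738, 3300, 1920, 3457, 2682, 106, 102]
padded, padded_mask = toy_pad_or_truncate(ids, 15)
cut, cut_mask = toy_pad_or_truncate(ids, 5)
print(padded, padded_mask)
print(cut, cut_mask)
```

Building the mask from the sequence length, rather than from `idx != 0`, avoids relying on the pad id never appearing as a real token.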

2.3 Fast / Slow Tokenizer

sen = "弱小的我也有大Dreaming!"

fast_tokenizer = AutoTokenizer.from_pretrained("uer/roberta-base-finetuned-dianping-chinese")

fast_tokenizer

# BertTokenizerFast(name_or_path='uer/roberta-base-finetuned-dianping-chinese', vocab_size=21128, model_max_length=1000000000000000019884624838656, is_fast=True, padding_side='right', truncation_side='right', special_tokens={'unk_token': '[UNK]', 'sep_token': '[SEP]', 'pad_token': '[PAD]', 'cls_token': '[CLS]', 'mask_token': '[MASK]'}, clean_up_tokenization_spaces=True)

inputs = fast_tokenizer(sen, return_offsets_mapping=True)

inputs

# {'input_ids': [101, 2483, 2207, 4638, 2769, 738, 3300, 1920, 10252, 8221, 106, 102], 'token_type_ids': [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0], 'attention_mask': [1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1], 'offset_mapping': [(0, 0), (0, 1), (1, 2), (2, 3), (3, 4), (4, 5), (5, 6), (6, 7), (7, 12), (12, 15), (15, 16), (0, 0)]}

inputs.word_ids()

# [None, 0, 1, 2, 3, 4, 5, 6, 7, 7, 8, None]
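The offset_mapping and word_ids values returned by the fast tokenizer can be used to map tokens back to the original string. The sketch below copies the values printed above and uses only plain Python slicing; the sentence is assumed to end with an ASCII exclamation mark.

```python
sen = "弱小的我也有大Dreaming!"

# Values copied from the fast tokenizer output shown above.
offset_mapping = [(0, 0), (0, 1), (1, 2), (2, 3), (3, 4), (4, 5), (5, 6),
                  (6, 7), (7, 12), (12, 15), (15, 16), (0, 0)]
word_ids = [None, 0, 1, 2, 3, 4, 5, 6, 7, 7, 8, None]

# Each (start, end) pair is a character span in the original sentence.
pieces = [sen[start:end] for start, end in offset_mapping]

# Tokens sharing a word id are sub-word pieces of the same word: merge their spans.
word_spans = {}
for (start, end), word_id in zip(offset_mapping, word_ids):
    if word_id is None:  # special tokens such as [CLS] and [SEP]
        continue
    first_start, _ = word_spans.get(word_id, (start, end))
    word_spans[word_id] = (first_start, end)

whole_words = [sen[start:end] for start, end in word_spans.values()]
print(pieces)
print(whole_words)
```

The two pieces "Dream" and "ing" share word id 7, so they merge back into "Dreaming". Offsets are a feature of fast tokenizers; the slow tokenizer loaded with use_fast=False does not provide them.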

slow_tokenizer = AutoTokenizer.from_pretrained("uer/roberta-base-finetuned-dianping-chinese", use_fast=False)

slow_tokenizer

# BertTokenizer(name_or_path='uer/roberta-base-finetuned-dianping-chinese', vocab_size=21128, model_max_length=1000000000000000019884624838656, is_fast=False, padding_side='right', truncation_side='right', special_tokens={'unk_token': '[UNK]', 'sep_token': '[SEP]', 'pad_token': '[PAD]', 'cls_token': '[CLS]', 'mask_token': '[MASK]'}, clean_up_tokenization_spaces=True)

3. Model

3.1 Loading and saving models

Online download: may run into HTTP connection timeouts

from transformers import AutoConfig, AutoModel, AutoTokenizer

model = AutoModel.from_pretrained("hfl/rbt3", force_download=True)

Manual download: clone the repository yourself into a local folder (a proxy may be needed to reach the site)

!git clone "https://huggingface.co/hfl/rbt3"

!git lfs clone "https://huggingface.co/hfl/rbt3" --include="*.bin"

Loading from the local folder:

model = AutoModel.from_pretrained("./rbt3")

Model configuration parameters

model = AutoModel.from_pretrained("./rbt3")

model.config

"""

BertConfig {

"_name_or_path": "rbt3",

"architectures": [

"BertForMaskedLM"

],

"attention_probs_dropout_prob": 0.1,

"classifier_dropout": null,

"directionality": "bidi",

"hidden_act": "gelu",

"hidden_dropout_prob": 0.1,

"hidden_size": 768,

"initializer_range": 0.02,

"intermediate_size": 3072,

"layer_norm_eps": 1e-12,

"max_position_embeddings": 512,

"model_type": "bert",

"num_attention_heads": 12,

"num_hidden_layers": 3,

"output_past": true,

"pad_token_id": 0,

"pooler_fc_size": 768,

"pooler_num_attention_heads": 12,

"pooler_num_fc_layers": 3,

"pooler_size_per_head": 128,

"pooler_type": "first_token_transform",

...

"transformers_version": "4.28.1",

"type_vocab_size": 2,

"use_cache": true,

"vocab_size": 21128

}

"""

config = AutoConfig.from_pretrained("./rbt3/")

config

"""

BertConfig {

"_name_or_path": "rbt3",

"architectures": [

"BertForMaskedLM"

],

"attention_probs_dropout_prob": 0.1,

"classifier_dropout": null,

"directionality": "bidi",

"hidden_act": "gelu",

"hidden_dropout_prob": 0.1,

"hidden_size": 768,

"initializer_range": 0.02,

"intermediate_size": 3072,

"layer_norm_eps": 1e-12,

"max_position_embeddings": 512,

"model_type": "bert",

"num_attention_heads": 12,

"num_hidden_layers": 3,

"output_past": true,

"pad_token_id": 0,

"pooler_fc_size": 768,

"pooler_num_attention_heads": 12,

"pooler_num_fc_layers": 3,

"pooler_size_per_head": 128,

"pooler_type": "first_token_transform",

...

"transformers_version": "4.28.1",

"type_vocab_size": 2,

"use_cache": true,

"vocab_size": 21128

}

"""

3.2 Calling the model

sen = "弱小的我也有大梦想!"

tokenizer = AutoTokenizer.from_pretrained("rbt3")

inputs = tokenizer(sen, return_tensors="pt")

inputs

"""

{'input_ids': tensor([[ 101, 2483, 2207, 4638, 2769, 738, 3300, 1920, 3457, 2682, 8013, 102]]), 'token_type_ids': tensor([[0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0]]), 'attention_mask': tensor([[1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1]])}

"""

Calling a model without a model head

model = AutoModel.from_pretrained("rbt3", output_attentions=True)

output = model(**inputs)

output

"""

BaseModelOutputWithPoolingAndCrossAttentions(last_hidden_state=tensor([[[ 0.6804, 0.6664, 0.7170, ..., -0.4102, 0.7839, -0.0262],

[-0.7378, -0.2748, 0.5034, ..., -0.1359, -0.4331, -0.5874],

[-0.0212, 0.5642, 0.1032, ..., -0.3617, 0.4646, -0.4747],

...,

[ 0.0853, 0.6679, -0.1757, ..., -0.0942, 0.4664, 0.2925],

[ 0.3336, 0.3224, -0.3355, ..., -0.3262, 0.2532, -0.2507],

[ 0.6761, 0.6688, 0.7154, ..., -0.4083, 0.7824, -0.0224]]],

grad_fn=<NativeLayerNormBackward0>), pooler_output=tensor([[-1.2646e-01, -9.8619e-01, -1.0000e+00, -9.8325e-01, 8.0238e-01,

-6.6268e-02, 6.6919e-02, 1.4784e-01, 9.9451e-01, 9.9995e-01,

-8.3051e-02, -1.0000e+00, -9.8865e-02, 9.9980e-01, -1.0000e+00,

9.9993e-01, 9.8291e-01, 9.5363e-01, -9.9948e-01, -1.3219e-01,

-9.9733e-01, -7.7934e-01, 1.0720e-01, 9.8040e-01, 9.9953e-01,

-9.9939e-01, -9.9997e-01, 1.4967e-01, -8.7627e-01, -9.9996e-01,

-9.9821e-01, -9.9999e-01, 1.9396e-01, -1.1277e-01, 9.9359e-01,

-9.9153e-01, 4.4752e-02, -9.8731e-01, -9.9942e-01, -9.9982e-01,

2.9360e-02, 9.9847e-01, -9.2014e-03, 9.9999e-01, 1.7111e-01,

4.5071e-03, 9.9998e-01, 9.9467e-01, 4.9726e-03, -9.0707e-01,

6.9056e-02, -1.8141e-01, -9.8831e-01, 9.9668e-01, 4.9800e-01,

1.2997e-01, 9.9895e-01, -1.0000e+00, -9.9990e-01, 9.9478e-01,

-9.9989e-01, 9.9906e-01, 9.9820e-01, 9.9990e-01, -6.8953e-01,

9.9990e-01, 9.9987e-01, 9.4563e-01, -3.7660e-01, -1.0000e+00,

1.3151e-01, -9.7371e-01, -9.9997e-01, -1.3228e-02, -2.9801e-01,

-9.9985e-01, 9.9662e-01, -2.0004e-01, 9.9997e-01, 3.6876e-01,

-9.9997e-01, 1.5462e-01, 1.9265e-01, 8.9871e-02, 9.9996e-01,

9.9998e-01, 1.5184e-01, -8.9714e-01, -2.1646e-01, -9.9922e-01,

...

1.7911e-02, 4.8672e-01],

[4.0732e-01, 3.8137e-02, 9.6832e-03, ..., 4.4490e-02,

2.2997e-02, 4.0793e-01],

[1.7047e-01, 3.6989e-02, 2.3646e-02, ..., 4.6833e-02,

2.5233e-01, 1.6721e-01]]]], grad_fn=<SoftmaxBackward0>)), cross_attentions=None)

"""

output.last_hidden_state.size()

# torch.Size([1, 12, 768])

len(inputs["input_ids"][0])

# 12

Calling a model with a model head

from transformers import AutoModelForSequenceClassification, BertForSequenceClassification

clz_model = AutoModelForSequenceClassification.from_pretrained("rbt3", num_labels=10)

clz_model(**inputs)

# SequenceClassifierOutput(loss=None, logits=tensor([[-0.1776, 0.2208, -0.5060, -0.3938, -0.5837, 1.0171, -0.2616, 0.0495, 0.1728, 0.3047]], grad_fn=<AddmmBackward0>), hidden_states=None, attentions=None)

clz_model.config.num_labels

# 10
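The classification head returns raw logits, one per label. A common next step, sketched here in plain Python with the ten logits copied from the output above, is to apply softmax and take the index of the largest probability. Because the head of a freshly loaded base checkpoint is newly initialized, the prediction carries no meaning until the model is fine-tuned.

```python
import math

# The ten logits printed by clz_model(**inputs) above.
logits = [-0.1776, 0.2208, -0.5060, -0.3938, -0.5837,
          1.0171, -0.2616, 0.0495, 0.1728, 0.3047]


def softmax(values):
    """Turn raw scores into probabilities that sum to 1."""
    shift = max(values)  # subtract the max for numerical stability
    exps = [math.exp(v - shift) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


probs = softmax(logits)
predicted_class = max(range(len(probs)), key=probs.__getitem__)
print(predicted_class, round(probs[predicted_class], 4))
```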

4. Dataset

The Datasets library is used to load and process datasets.

4.1 Loading datasets

Loading a dataset from the Hub

from datasets import load_dataset

datasets = load_dataset("madao33/new-title-chinese")

datasets

'''

DatasetDict({

train: Dataset({

features: ['title', 'content'],

num_rows: 5850

})

validation: Dataset({

features: ['title', 'content'],

num_rows: 1679

})

})

'''

Loading one task from a dataset collection

Some datasets look like a single dataset but are actually collections of several datasets, with each subset serving a different task.

boolq_dataset = load_dataset("super_glue", "boolq")

boolq_dataset

'''

DatasetDict({

train: Dataset({

features: ['question', 'passage', 'idx', 'label'],

num_rows: 9427

})

validation: Dataset({

features: ['question', 'passage', 'idx', 'label'],

num_rows: 3270

})

test: Dataset({

features: ['question', 'passage', 'idx', 'label'],

num_rows: 3245

})

})

'''

Loading by split

The available splits (here train and validation) are defined by the dataset itself. You can load only train, or only a slice of train.

dataset = load_dataset("madao33/new-title-chinese", split="train")

dataset

'''

Dataset({

features: ['title', 'content'],

num_rows: 5850

})

'''

dataset = load_dataset("madao33/new-title-chinese", split="train[10:100]")

dataset

'''

Dataset({

features: ['title', 'content'],

num_rows: 90

})

'''

dataset = load_dataset("madao33/new-title-chinese", split="train[:50%]")

dataset

'''

Dataset({

features: ['title', 'content'],

num_rows: 2925

})

'''

dataset = load_dataset("madao33/new-title-chinese", split=["train[:50%]", "train[50%:]"])

dataset

'''

[Dataset({

features: ['title', 'content'],

num_rows: 2925

}),

Dataset({

features: ['title', 'content'],

num_rows: 2925

})]

'''
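The row counts in the outputs above follow from simple slice arithmetic. The sketch below is a hypothetical helper, `toy_resolve_split`, that handles only the forms used in this article (`name`, `name[a:b]`, `name[:p%]`, `name[p%:]`); it uses plain rounding for percentages, and the real library's parsing and rounding rules may differ.

```python
import re


def toy_resolve_split(spec, num_rows):
    """Translate a spec such as 'train[10:100]' into (name, start, stop)."""
    match = re.fullmatch(r"(\w+)(?:\[((?:\d+%?)?):((?:\d+%?)?)\])?", spec)
    if match is None:
        raise ValueError(f"unsupported split spec: {spec!r}")
    name, low, high = match.groups()

    def to_index(text, default):
        if not text:  # bound omitted, or no brackets at all
            return default
        if text.endswith("%"):
            # Assumption: plain rounding of the percentage position.
            return round(num_rows * int(text[:-1]) / 100)
        return min(int(text), num_rows)

    return name, to_index(low, 0), to_index(high, num_rows)


TRAIN_ROWS = 5850  # size of the train split shown above
for spec in ["train", "train[10:100]", "train[:50%]", "train[50%:]"]:
    name, start, stop = toy_resolve_split(spec, TRAIN_ROWS)
    print(spec, "->", stop - start, "rows")
```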

4.2 Inspecting a dataset

datasets = load_dataset("madao33/new-title-chinese")

datasets

'''

DatasetDict({

train: Dataset({

features: ['title', 'content'],

num_rows: 5850

})

validation: Dataset({

features: ['title', 'content'],

num_rows: 1679

})

})

'''

Index the dataset directly; slicing is supported

datasets["train"][0]

datasets["train"][:2]

datasets["train"]["title"][:5]

datasets["train"].column_names

# ['title', 'content']

datasets["train"].features

# {'title': Value(dtype='string', id=None),

# 'content': Value(dtype='string', id=None)}

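4.3 Splitting a dataset

Use dataset.train_test_split() to split by ratio. stratify_by_column keeps the label distribution the same in both splits.

dataset = boolq_dataset["train"]

dataset.train_test_split(test_size=0.1, stratify_by_column="label") # classification datasets can be split while preserving label proportions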

'''

DatasetDict({

train: Dataset({

features: ['question', 'passage', 'idx', 'label'],

num_rows: 8484

})

test: Dataset({

features: ['question', 'passage', 'idx', 'label'],

num_rows: 943

})

})

'''
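What stratification means can be shown with a toy split in plain Python: each label is split separately so that its share is the same in train and test. `toy_stratified_split` and the label list are made up for illustration and do not reproduce the library's algorithm.

```python
import random
from collections import Counter, defaultdict


def toy_stratified_split(labels, test_size, seed=0):
    """Return (train_idx, test_idx) with about test_size of each label in test."""
    by_label = defaultdict(list)
    for index, label in enumerate(labels):
        by_label[label].append(index)

    rng = random.Random(seed)
    train_idx, test_idx = [], []
    for indices in by_label.values():
        rng.shuffle(indices)                      # shuffle within one label
        n_test = round(len(indices) * test_size)  # this label's share of test
        test_idx.extend(indices[:n_test])
        train_idx.extend(indices[n_test:])
    return sorted(train_idx), sorted(test_idx)


labels = [0] * 40 + [1] * 60  # made-up labels: 40% class 0, 60% class 1
train_idx, test_idx = toy_stratified_split(labels, test_size=0.1)
test_counts = Counter(labels[i] for i in test_idx)
print(len(train_idx), len(test_idx), dict(test_counts))
```

The test split keeps the 40/60 label ratio of the full data, which a purely random split does not guarantee.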

4.4 Selecting and filtering data

Pick specific rows by a list of indices

# Select

datasets["train"].select([0, 1])

'''

Dataset({

features: ['title', 'content'],

num_rows: 2

})

'''

Keep the rows that satisfy the lambda condition

# Filter

filter_dataset = datasets["train"].filter(lambda example: "中国" in example["title"])

filter_dataset["title"][:5]

'''

['聚焦两会,世界探寻中国成功秘诀',

'望海楼中国经济的信心来自哪里',

'“中国奇迹”助力世界减贫跑出加速度',

'和音瞩目历史交汇点上的中国',

'中国风采感染世界']

'''

4.5 Mapping over data

map applies a function to every example to process the data

def add_prefix(example):

    example["title"] = 'Prefix: ' + example["title"]

    return example

prefix_dataset = datasets.map(add_prefix)

prefix_dataset["train"][:10]["title"]

from transformers import AutoTokenizer

tokenizer = AutoTokenizer.from_pretrained("bert-base-chinese")

def preprocess_function(example, tokenizer=tokenizer):

    model_inputs = tokenizer(example["content"], max_length=512, truncation=True)

    labels = tokenizer(example["title"], max_length=32, truncation=True)

    # The labels are the encoded title

    model_inputs["labels"] = labels["input_ids"]

    return model_inputs

processed_datasets = datasets.map(preprocess_function)

processed_datasets

'''

DatasetDict({

train: Dataset({

features: ['title', 'content', 'input_ids', 'token_type_ids', 'attention_mask', 'labels'],

num_rows: 5850

})

validation: Dataset({

features: ['title', 'content', 'input_ids', 'token_type_ids', 'attention_mask', 'labels'],

num_rows: 1679

})

})

'''

processed_datasets = datasets.map(preprocess_function, num_proc=4) # use multiple processes for speed

processed_datasets

'''

DatasetDict({

train: Dataset({

features: ['title', 'content', 'input_ids', 'token_type_ids', 'attention_mask', 'labels'],

num_rows: 5850

})

validation: Dataset({

features: ['title', 'content', 'input_ids', 'token_type_ids', 'attention_mask', 'labels'],

num_rows: 1679

})

})

'''

processed_datasets = datasets.map(preprocess_function, batched=True) # process in batches for speed

processed_datasets

'''

DatasetDict({

train: Dataset({

features: ['title', 'content', 'input_ids', 'token_type_ids', 'attention_mask', 'labels'],

num_rows: 5850

})

validation: Dataset({

features: ['title', 'content', 'input_ids', 'token_type_ids', 'attention_mask', 'labels'],

num_rows: 1679

})

})

'''

processed_datasets = datasets.map(preprocess_function, batched=True, remove_columns=datasets["train"].column_names) # remove_columns drops the listed columns

processed_datasets

'''

DatasetDict({

train: Dataset({

features: ['input_ids', 'token_type_ids', 'attention_mask', 'labels'],

num_rows: 5850

})

validation: Dataset({

features: ['input_ids', 'token_type_ids', 'attention_mask', 'labels'],

num_rows: 1679

})

})

'''
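The difference between per-example and batched mapping lies in what the function receives: one row (a dict of values) or a batch (a dict of lists). The sketch below mimics this with a hypothetical `toy_map` in plain Python; it is not the library's implementation.

```python
def toy_map(rows, function, batched=False, batch_size=2):
    """Apply function per row, or per batch (a dict of lists) when batched."""
    if not batched:
        return [function(dict(row)) for row in rows]
    out = []
    for start in range(0, len(rows), batch_size):
        chunk = rows[start:start + batch_size]
        batch = {key: [row[key] for row in chunk] for key in chunk[0]}
        result = function(batch)
        # Turn the returned dict of lists back into one dict per row.
        n = len(next(iter(result.values())))
        out.extend({key: values[i] for key, values in result.items()} for i in range(n))
    return out


rows = [{"title": "a"}, {"title": "b"}, {"title": "c"}]


def add_prefix(example):  # expects one row: example["title"] is a str
    example["title"] = "Prefix: " + example["title"]
    return example


def add_prefix_batched(batch):  # expects a batch: batch["title"] is a list
    batch["title"] = ["Prefix: " + title for title in batch["title"]]
    return batch


per_row = toy_map(rows, add_prefix)
per_batch = toy_map(rows, add_prefix_batched, batched=True)
print(per_row == per_batch)
```

A per-row function such as add_prefix fails in batched mode because it tries to concatenate a string with a list. preprocess_function works in both modes because the tokenizer accepts either a single string or a list of strings.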

Coding

https://github.com/mlabonne/llm-course

Bert

https://www.bilibili.com/video/BV1xs4y1M72q/?spm_id_from=333.999.0.0

GPT

https://www.bilibili.com/video/BV1Gh411w7HC/?spm_id_from=333.999.0.0

ChatGLM

https://www.bilibili.com/video/BV1ju411T74Y/?spm_id_from=333.999.0.0

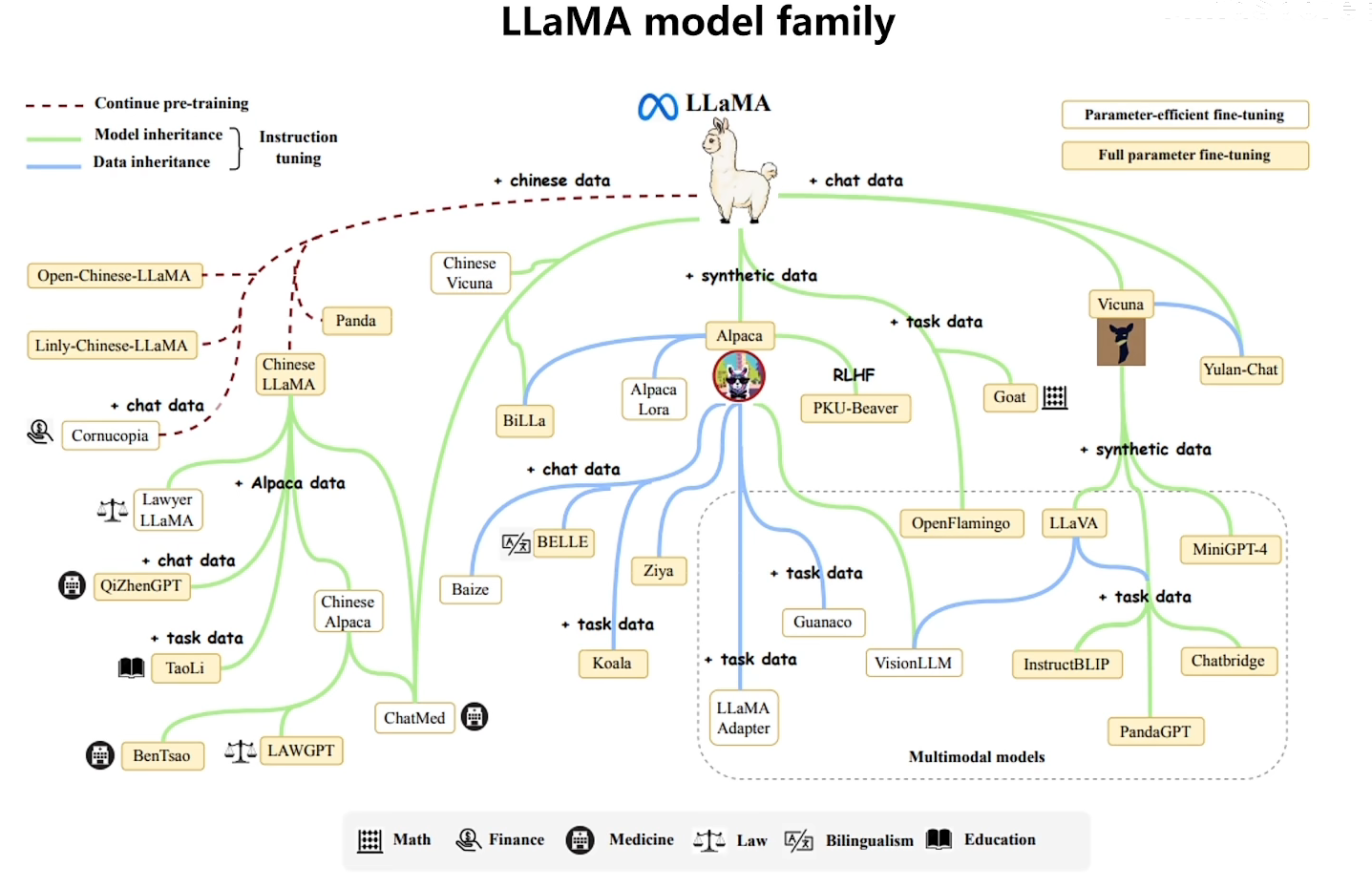

LLaMa

https://www.bilibili.com/video/BV1nN41157a9/?spm_id_from=333.999.0.0&vd_source=b2549fdee562c700f2b1f3f49065201b