- 1【threejs教程9】threejs加载360全景图(VR)的两种方法_threejs 全景图

- 2鸿蒙HarmonyOS项目实战开发:分布式画板_鸿蒙系统项目_harmonyos开发板

- 3使用Python进行音视频剪辑:半自动追踪人脸并添加马赛克_python自动剪辑

- 4数据科学、数据科学的应用、以及数据科学所涉及的相关基础知识 Towards Data Science

- 5YOLOv8目标跟踪环境配置笔记(完整记录一次成功)_yolov8适配python 3.8.0吗

- 6报错TypeError: Cannot assign to read only property ‘exports‘ of object ‘#<Object>‘ at Module.eval_typeerror: cannot assign to read only property 'ex

- 7Python爱心光波_python简单表白代码弹窗

- 8初级程序员对的简历撰写_如何撰写有效的简历

- 9python 写一个基于flask的下载服务器_基于flask的下载器

- 10大数据面试之MapReduce常见题目_mapreduce题目

微博数据采集,微博爬虫,微博网页解析,完整代码(主体内容+评论内容)

赞

踩

2024年3月27号更新版 修复问题

参加新闻比赛,需要获取大众对某一方面的态度信息,因此选择微博作为信息收集的一部分

完整代码

微博主体内容

import requests

import os

from bs4 import BeautifulSoup

import pandas as pd

import json

from urllib import parse

# 设置为自己的cookies

cookies={

"SINAGLOBAL": "9465800464984.312.1711442432301",

"ALF": "1714034452",

"SUB": "_2A25LBvpEDeRhGeFJ4lIT9CzNyj6IHXVoenOMrDV8PUJbkNB-LWrikW1NfsmQ4XEegA2_AZQBGwZ_XDiJMNLvShSt",

"SUBP": "0033WrSXqPxfM725Ws9jqgMF55529P9D9W5Oj.LmOvr7_7fS8d6lYxiZ5JpX5KzhUgL.FoMN1K5EShzpeKz2dJLoIp7LxKML1KBLBKnLxKqL1hnLBoMNS0.7eoBEeK2E",

"_s_tentry": "www.weibo.com",

"Apache": "3202684512315.561.1711517716506",

"ULV": "1711517716586:2:2:2:3202684512315.561.1711517716506:1711442432303",

"PC_TOKEN": "79098b7d85",

"webim_unReadCount": "%7B%22time%22%3A1711517739025%2C%22dm_pub_total%22%3A0%2C%22chat_group_client%22%3A0%2C%22chat_group_notice%22%3A0%2C%22allcountNum%22%3A32%2C%22msgbox%22%3A0%7D"

}

def get_the_list_response(q='话题', the_type='综合', p='页码', timescope="2024-03-01-0:2024-03-27-16"):

"""

q表示的是话题,type表示的是类别,有:综合,实时,热门,高级,p表示的页码,timescope表示高级的时间,不用高级无需带入

"""

type_params_url = {

'综合': [{"q": q,"Refer": "weibo_weibo","page": p,},'https://s.weibo.com/weibo'],

'实时': [{"q": q,"rd": "realtime","tw": "realtime","Refer": "realtime_realtime", "page": p,}, 'https://s.weibo.com/realtime'],

'热门': [{"q": q,"xsort": "hot","suball": "1","tw": "hotweibo","Refer": "realtime_hot","page": p}, 'https://s.weibo.com/hot'],

# 高级中的xsort删除后就是普通的排序

'高级': [{"q": q,"xsort": "hot","suball": "1","timescope": f"custom:{timescope}","Refer": "g" ,"page": p}, 'https://s.weibo.com/weibo']

}

params, url = type_params_url[the_type]

headers = {

'authority': 's.weibo.com',

'accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.7',

'accept-language': 'zh-CN,zh;q=0.9,en;q=0.8,en-GB;q=0.7,en-US;q=0.6',

'referer': url + "?" + parse.urlencode(params).replace(f'&page={params["page"]}', f'&page={int(params["page"]) - 1}'),

'sec-ch-ua': '"Chromium";v="116", "Not)A;Brand";v="24", "Microsoft Edge";v="116"',

'sec-ch-ua-mobile': '?0',

'sec-ch-ua-platform': '"Windows"',

'sec-fetch-dest': 'document',

'sec-fetch-mode': 'navigate',

'sec-fetch-site': 'same-origin',

'sec-fetch-user': '?1',

'upgrade-insecure-requests': '1',

'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/116.0.0.0 Safari/537.36 Edg/116.0.1938.69',

}

response = requests.get(url, params=params, cookies=cookies, headers=headers)

return response

def parse_the_list(text):

"""该函数就是解析网页主题内容的"""

soup = BeautifulSoup(text)

divs = soup.select('div[action-type="feed_list_item"]')

lst = []

for div in divs:

mid = div.get('mid')

uid = div.select('div.card-feed > div.avator > a')

if uid:

uid = uid[0].get('href').replace('.com/', '?').split('?')[1]

else:

uid = None

time = div.select('div.card-feed > div.content > div.from > a:first-of-type')

if time:

time = time[0].string.strip()

else:

time = None

p = div.select('div.card-feed > div.content > p:last-of-type')

if p:

p = p[0].strings

content = '\n'.join([para.replace('\u200b', '').strip() for para in list(p)]).replace('收起\nd', '').strip()

else:

content = None

star = div.select('div.card-act > ul > li:nth-child(3) > a > button > span.woo-like-count')

if star:

star = list(star[0].strings)[0]

else:

star = None

comments = div.select('div.card-act > ul > li:nth-child(2) > a')

if comments:

comments = list(comments[0].strings)[0]

else:

comments = None

retweets = div.select('div.card-act > ul > li:nth-child(1) > a')

if retweets:

retweets = list(retweets[0].strings)[1]

else:

retweets = None

lst.append((mid, uid, content, retweets, comments, star, time))

df = pd.DataFrame(lst, columns=['mid', 'uid', 'content', 'retweets', 'comments', 'star', 'time'])

return df

def get_the_list(q,the_type,p):

df_list = []

for i in range(1, p+1):

response = get_the_list_response(q=q, the_type=the_type, p=i)

if response.status_code == 200:

df = parse_the_list(response.text)

df_list.append(df)

print(f'第{i}页解析成功!', flush=True)

return df_list

if __name__ == '__main__':

# 先设置cookie,换成自己的;

# the_type 有 综合,实时,热门,高级 具体介绍看get_the_list_response函数

the_type='热门'

q = '#地磁暴全责#'

p = 2

df_list = get_the_list(q,the_type,p)

df = pd.concat(df_list)

df.to_csv(f'{q}.csv', index=False)

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 38

- 39

- 40

- 41

- 42

- 43

- 44

- 45

- 46

- 47

- 48

- 49

- 50

- 51

- 52

- 53

- 54

- 55

- 56

- 57

- 58

- 59

- 60

- 61

- 62

- 63

- 64

- 65

- 66

- 67

- 68

- 69

- 70

- 71

- 72

- 73

- 74

- 75

- 76

- 77

- 78

- 79

- 80

- 81

- 82

- 83

- 84

- 85

- 86

- 87

- 88

- 89

- 90

- 91

- 92

- 93

- 94

- 95

- 96

- 97

- 98

- 99

- 100

- 101

- 102

- 103

- 104

- 105

- 106

- 107

- 108

- 109

- 110

- 111

- 112

- 113

- 114

- 115

- 116

微博评论内容

一级评论内容

import requests

import os

from bs4 import BeautifulSoup

import pandas as pd

import json

# 设置为自己的cookies

cookies = {

'SINAGLOBAL': '1278126679099.0298.1694199077980',

'SCF': 'ApDYB6ZQHU_wHU8ItPHSso29Xu0ZRSkOOiFTBeXETNm7k7YlpnahLGVhB90-mk0xFNznyCVsjyu9-7-Hk0jRULM.',

'SUB': '_2A25IaC_CDeRhGeFO61AY8i_NwzyIHXVrBC0KrDV8PUNbmtAGLVLckW9NQYCXlpjzhYwtC8sDM7giaMcMNIlWSlP6',

'SUBP': '0033WrSXqPxfM725Ws9jqgMF55529P9D9W5mzQcPEhHvorRG-l7.BSsy5JpX5KzhUgL.FoM7ehz4eo2p1h52dJLoI0qLxK-LBKBLBKMLxKnL1--L1heLxKnL1-qLBo.LxK-L1KeL1KzLxK-L1KeL1KzLxK-L1KeL1Kzt',

'ALF': '1733137172',

'_s_tentry': 'weibo.com',

'Apache': '435019984104.0236.1701606621998',

'ULV': '1701606622040:13:2:2:435019984104.0236.1701606621998:1701601199048',

}

# 开始页码,不用修改

page_num = 0

def get_content_1(uid, mid, the_first=True, max_id=None):

headers = {

'authority': 'weibo.com',

'accept': 'application/json, text/plain, */*',

'accept-language': 'zh-CN,zh;q=0.9,en;q=0.8,en-GB;q=0.7,en-US;q=0.6',

'client-version': 'v2.43.30',

'referer': 'https://weibo.com/1762257041/NiSAxfmbZ',

'sec-ch-ua': '"Chromium";v="116", "Not)A;Brand";v="24", "Microsoft Edge";v="116"',

'sec-ch-ua-mobile': '?0',

'sec-ch-ua-platform': '"Windows"',

'sec-fetch-dest': 'empty',

'sec-fetch-mode': 'cors',

'sec-fetch-site': 'same-origin',

'server-version': 'v2023.09.08.4',

'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/116.0.0.0 Safari/537.36 Edg/116.0.1938.69',

'x-requested-with': 'XMLHttpRequest',

'x-xsrf-token': 'F2EEQZrINBfzB2HPPxqTMQJ_',

}

params = {

'is_reload': '1',

'id': f'{mid}',

'is_show_bulletin': '2',

'is_mix': '0',

'count': '20',

'uid': f'{uid}',

'fetch_level': '0',

'locale': 'zh-CN',

}

if not the_first:

params['flow'] = 0

params['max_id'] = max_id

else:

pass

response = requests.get('https://weibo.com/ajax/statuses/buildComments', params=params, cookies=cookies, headers=headers)

return response

def get_content_2(get_content_1_url):

headers = {

'authority': 'weibo.com',

'accept': '*/*',

'accept-language': 'zh-CN,zh;q=0.9,en;q=0.8,en-GB;q=0.7,en-US;q=0.6',

'content-type': 'multipart/form-data; boundary=----WebKitFormBoundaryNs1Toe4Mbr8n1qXm',

'origin': 'https://weibo.com',

'referer': 'https://weibo.com/1762257041/NiSAxfmbZ',

'sec-ch-ua': '"Chromium";v="116", "Not)A;Brand";v="24", "Microsoft Edge";v="116"',

'sec-ch-ua-mobile': '?0',

'sec-ch-ua-platform': '"Windows"',

'sec-fetch-dest': 'empty',

'sec-fetch-mode': 'cors',

'sec-fetch-site': 'same-origin',

'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/116.0.0.0 Safari/537.36 Edg/116.0.1938.69',

'x-xsrf-token': 'F2EEQZrINBfzB2HPPxqTMQJ_',

}

s = '{"name":"https://weibo.com/ajax/statuses/buildComments?flow=0&is_reload=1&id=4944997453660231&is_show_bulletin=2&is_mix=0&max_id=139282732792325&count=20&uid=1762257041&fetch_level=0&locale=zh-CN","entryType":"resource","startTime":20639.80000001192,"duration":563,"initiatorType":"xmlhttprequest","nextHopProtocol":"h2","renderBlockingStatus":"non-blocking","workerStart":0,"redirectStart":0,"redirectEnd":0,"fetchStart":20639.80000001192,"domainLookupStart":20639.80000001192,"domainLookupEnd":20639.80000001192,"connectStart":20639.80000001192,"secureConnectionStart":20639.80000001192,"connectEnd":20639.80000001192,"requestStart":20641.600000023842,"responseStart":21198.600000023842,"firstInterimResponseStart":0,"responseEnd":21202.80000001192,"transferSize":7374,"encodedBodySize":7074,"decodedBodySize":42581,"responseStatus":200,"serverTiming":[],"dns":0,"tcp":0,"ttfb":557,"pathname":"https://weibo.com/ajax/statuses/buildComments","speed":0}'

s = json.loads(s)

s['name'] = get_content_1_url

s = json.dumps(s)

data = f'------WebKitFormBoundaryNs1Toe4Mbr8n1qXm\r\nContent-Disposition: form-data; name="entry"\r\n\r\n{s}\r\n------WebKitFormBoundaryNs1Toe4Mbr8n1qXm\r\nContent-Disposition: form-data; name="request_id"\r\n\r\n\r\n------WebKitFormBoundaryNs1Toe4Mbr8n1qXm--\r\n'

response = requests.post('https://weibo.com/ajax/log/rum', cookies=cookies, headers=headers, data=data)

return response.text

def get_once_data(uid, mid, the_first=True, max_id=None):

respones_1 = get_content_1(uid, mid, the_first, max_id)

url = respones_1.url

response_2 = get_content_2(url)

df = pd.DataFrame(respones_1.json()['data'])

max_id = respones_1.json()['max_id']

return max_id, df

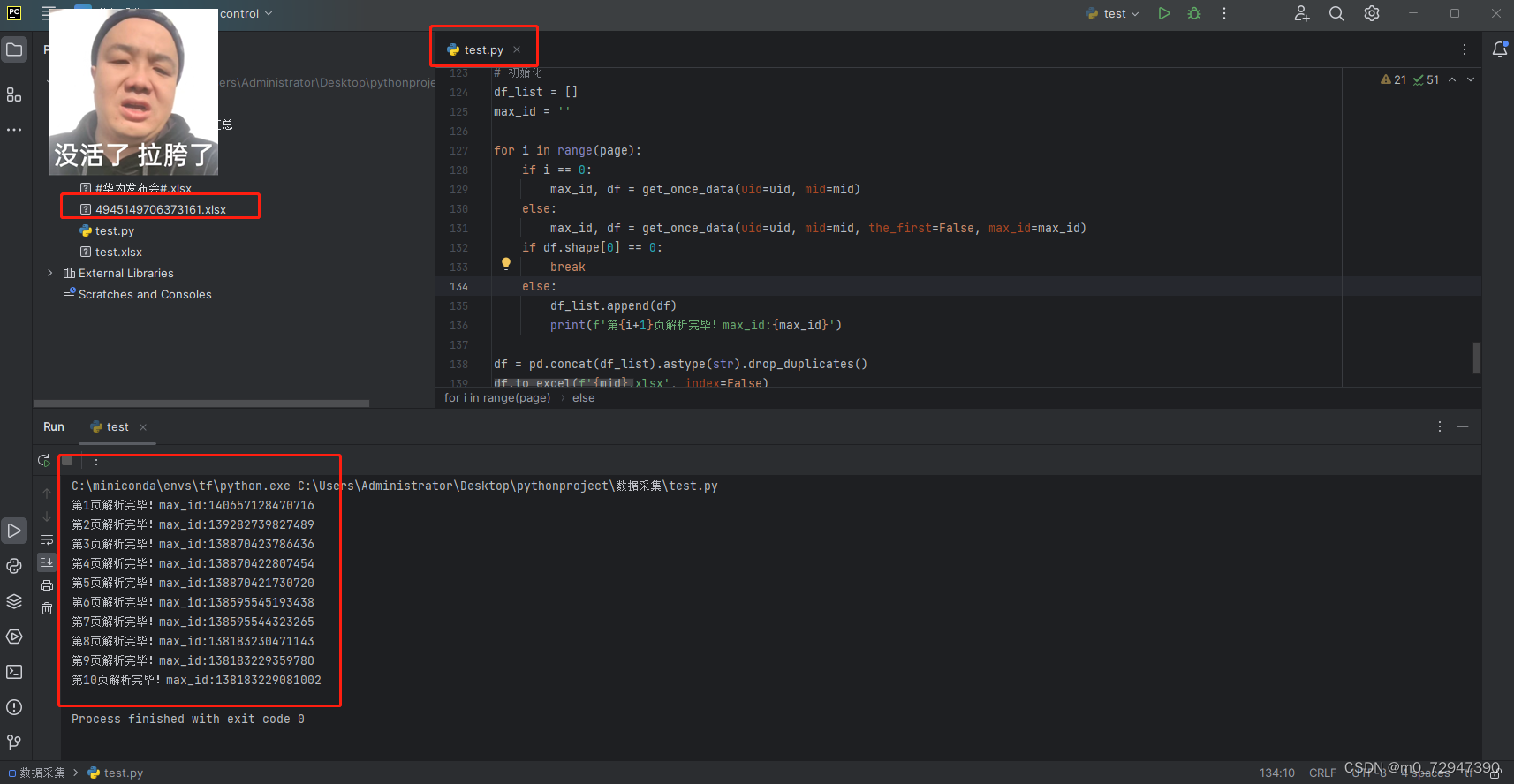

def get_all_data(mid,uid,name='',page=20):

if name == '':

name = mid

try:

df_list = []

max_id = ''

for i in range(page):

if i == 0:

max_id, df = get_once_data(uid=uid, mid=mid)

else:

max_id, df = get_once_data(uid=uid, mid=mid, the_first=False, max_id=max_id)

if df.shape[0] == 0 or max_id == 0:

break

else:

df_list.append(df)

print(f'第{i}页解析完毕!max_id:{max_id}')

df = pd.concat(df_list).astype(str).drop_duplicates()

df.to_csv(f'./cache/{name}.csv', index=False)

except Exception as e:

print(f'{ix}失败, {mid}无评论, {str(e)}')

if __name__ == '__main__':

# 先在上面设置cookies

# 设置好了再进行操作

# 自定义

name = '#邹振东诚邀张雪峰来厦门请你吃沙茶面#'

uid = '2610806555'

mid = '4914095331742409'

page = 100

get_all_data(mid, uid, name, page)

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 38

- 39

- 40

- 41

- 42

- 43

- 44

- 45

- 46

- 47

- 48

- 49

- 50

- 51

- 52

- 53

- 54

- 55

- 56

- 57

- 58

- 59

- 60

- 61

- 62

- 63

- 64

- 65

- 66

- 67

- 68

- 69

- 70

- 71

- 72

- 73

- 74

- 75

- 76

- 77

- 78

- 79

- 80

- 81

- 82

- 83

- 84

- 85

- 86

- 87

- 88

- 89

- 90

- 91

- 92

- 93

- 94

- 95

- 96

- 97

- 98

- 99

- 100

- 101

- 102

- 103

- 104

- 105

- 106

- 107

- 108

- 109

- 110

- 111

- 112

- 113

- 114

- 115

- 116

- 117

- 118

- 119

- 120

- 121

- 122

- 123

- 124

- 125

- 126

- 127

- 128

二级评论内容

import requests

import os

from bs4 import BeautifulSoup

import pandas as pd

import json

page_num = 0

cookies = {

'SINAGLOBAL': '1278126679099.0298.1694199077980',

'SUBP': '0033WrSXqPxfM725Ws9jqgMF55529P9D9W5mzQcPEhHvorRG-l7.BSsy5JpX5KMhUgL.FoM7ehz4eo2p1h52dJLoI0qLxK-LBKBLBKMLxKnL1--L1heLxKnL1-qLBo.LxK-L1KeL1KzLxK-L1KeL1KzLxK-L1KeL1Kzt',

'XSRF-TOKEN': '47NC7wE7TMhcqfh1K-4bacK-',

'ALF': '1697384140',

'SSOLoginState': '1694792141',

'SCF': 'ApDYB6ZQHU_wHU8ItPHSso29Xu0ZRSkOOiFTBeXETNm7IJXuI95RLbWORIsozuK4Ohxs_boeOIedEcczDT3uSAI.',

'SUB': '_2A25IAAmdDeRhGeFO61AY8i_NwzyIHXVrdHxVrDV8PUNbmtAGLU74kW9NQYCXlmPtQ1DG4kl_wLzqQqkPl_Do1sZu',

'_s_tentry': 'weibo.com',

'Apache': '3760261250067.669.1694792155706',

'ULV': '1694792155740:8:8:4:3760261250067.669.1694792155706:1694767801057',

'WBPSESS': 'X5DJqu8gKpwqYSp80b4XokKvi4u4_oikBqVmvlBCHvGwXMxtKAFxIPg-LIF7foS715Sa4NttSYqzj5x2Ms5ynKVOM5I_Fsy9GECAYh38R4DQ-gq7M5XOe4y1gOUqvm1hOK60dUKvrA5hLuONCL2ing==',

}

def get_content_1(uid, mid, the_first=True, max_id=None):

headers = {

'authority': 'weibo.com',

'accept': 'application/json, text/plain, */*',

'accept-language': 'zh-CN,zh;q=0.9,en;q=0.8,en-GB;q=0.7,en-US;q=0.6',

'client-version': 'v2.43.32',

'referer': 'https://weibo.com/1887344341/NhAosFSL4',

'sec-ch-ua': '"Chromium";v="116", "Not)A;Brand";v="24", "Microsoft Edge";v="116"',

'sec-ch-ua-mobile': '?0',

'sec-ch-ua-platform': '"Windows"',

'sec-fetch-dest': 'empty',

'sec-fetch-mode': 'cors',

'sec-fetch-site': 'same-origin',

'server-version': 'v2023.09.14.1',

'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/116.0.0.0 Safari/537.36 Edg/116.0.1938.69',

'x-requested-with': 'XMLHttpRequest',

'x-xsrf-token': '-UX-uyKz0jmzbTnlkyDEMvSO',

}

params = {

'is_reload': '1',

'id': f'{mid}',

'is_show_bulletin': '2',

'is_mix': '1',

'fetch_level': '1',

'max_id': '0',

'count': '20',

'uid': f'{uid}',

'locale': 'zh-CN',

}

if not the_first:

params['flow'] = 0

params['max_id'] = max_id

else:

pass

response = requests.get('https://weibo.com/ajax/statuses/buildComments', params=params, cookies=cookies, headers=headers)

return response

def get_content_2(get_content_1_url):

headers = {

'authority': 'weibo.com',

'accept': '*/*',

'accept-language': 'zh-CN,zh;q=0.9,en;q=0.8,en-GB;q=0.7,en-US;q=0.6',

'content-type': 'multipart/form-data; boundary=----WebKitFormBoundaryNs1Toe4Mbr8n1qXm',

'origin': 'https://weibo.com',

'referer': 'https://weibo.com/1762257041/NiSAxfmbZ',

'sec-ch-ua': '"Chromium";v="116", "Not)A;Brand";v="24", "Microsoft Edge";v="116"',

'sec-ch-ua-mobile': '?0',

'sec-ch-ua-platform': '"Windows"',

'sec-fetch-dest': 'empty',

'sec-fetch-mode': 'cors',

'sec-fetch-site': 'same-origin',

'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/116.0.0.0 Safari/537.36 Edg/116.0.1938.69',

'x-xsrf-token': 'F2EEQZrINBfzB2HPPxqTMQJ_',

}

s = '{"name":"https://weibo.com/ajax/statuses/buildComments?flow=0&is_reload=1&id=4944997453660231&is_show_bulletin=2&is_mix=0&max_id=139282732792325&count=20&uid=1762257041&fetch_level=0&locale=zh-CN","entryType":"resource","startTime":20639.80000001192,"duration":563,"initiatorType":"xmlhttprequest","nextHopProtocol":"h2","renderBlockingStatus":"non-blocking","workerStart":0,"redirectStart":0,"redirectEnd":0,"fetchStart":20639.80000001192,"domainLookupStart":20639.80000001192,"domainLookupEnd":20639.80000001192,"connectStart":20639.80000001192,"secureConnectionStart":20639.80000001192,"connectEnd":20639.80000001192,"requestStart":20641.600000023842,"responseStart":21198.600000023842,"firstInterimResponseStart":0,"responseEnd":21202.80000001192,"transferSize":7374,"encodedBodySize":7074,"decodedBodySize":42581,"responseStatus":200,"serverTiming":[],"dns":0,"tcp":0,"ttfb":557,"pathname":"https://weibo.com/ajax/statuses/buildComments","speed":0}'

s = json.loads(s)

s['name'] = get_content_1_url

s = json.dumps(s)

data = f'------WebKitFormBoundaryNs1Toe4Mbr8n1qXm\r\nContent-Disposition: form-data; name="entry"\r\n\r\n{s}\r\n------WebKitFormBoundaryNs1Toe4Mbr8n1qXm\r\nContent-Disposition: form-data; name="request_id"\r\n\r\n\r\n------WebKitFormBoundaryNs1Toe4Mbr8n1qXm--\r\n'

response = requests.post('https://weibo.com/ajax/log/rum', cookies=cookies, headers=headers, data=data)

return response.text

def get_once_data(uid, mid, the_first=True, max_id=None):

respones_1 = get_content_1(uid, mid, the_first, max_id)

url = respones_1.url

response_2 = get_content_2(url)

df = pd.DataFrame(respones_1.json()['data'])

max_id = respones_1.json()['max_id']

return max_id, df

if __name__ == '__main__':

# 更新cookies

# 得到的一级评论信息

df = pd.read_csv('#邹振东诚邀张雪峰来厦门请你吃沙茶面#.csv')

# 过滤没有二级评论的一级评论

df = df[df['floor_number']>0]

os.makedirs('./二级评论数据/', exist_ok=True)

for i in range(df.shape[0]):

uid = df.iloc[i]['analysis_extra'].replace('|mid:',':').split(':')[1]

mid = df.iloc[i]['mid']

page = 100

if not os.path.exists(f'./二级评论数据/{mid}-{uid}.csv'):

print(f'不存在 ./二级评论数据/{mid}-{uid}.csv')

df_list = []

max_id_set = set()

max_id = ''

for j in range(page):

if max_id in max_id_set:

break

else:

max_id_set.add(max_id)

if j == 0:

max_id, df_ = get_once_data(uid=uid, mid=mid)

else:

max_id, df_ = get_once_data(uid=uid, mid=mid, the_first=False, max_id=max_id)

if df_.shape[0] == 0 or max_id == 0:

break

else:

df_list.append(df_)

print(f'{mid}第{j}页解析完毕!max_id:{max_id}')

if df_list:

outdf = pd.concat(df_list).astype(str).drop_duplicates()

print(f'文件长度为{outdf.shape[0]},文件保存为 ./二级评论数据/{mid}-{uid}.csv')

outdf.to_csv(f'./二级评论数据/{mid}-{uid}.csv', index=False)

else:

pass

else:

print(f'存在 ./二级评论数据/{mid}-{uid}.csv')

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 38

- 39

- 40

- 41

- 42

- 43

- 44

- 45

- 46

- 47

- 48

- 49

- 50

- 51

- 52

- 53

- 54

- 55

- 56

- 57

- 58

- 59

- 60

- 61

- 62

- 63

- 64

- 65

- 66

- 67

- 68

- 69

- 70

- 71

- 72

- 73

- 74

- 75

- 76

- 77

- 78

- 79

- 80

- 81

- 82

- 83

- 84

- 85

- 86

- 87

- 88

- 89

- 90

- 91

- 92

- 93

- 94

- 95

- 96

- 97

- 98

- 99

- 100

- 101

- 102

- 103

- 104

- 105

- 106

- 107

- 108

- 109

- 110

- 111

- 112

- 113

- 114

- 115

- 116

- 117

- 118

- 119

- 120

- 121

- 122

- 123

- 124

- 125

- 126

- 127

- 128

- 129

- 130

- 131

- 132

- 133

- 134

- 135

- 136

- 137

- 138

- 139

- 140

- 141

- 142

- 143

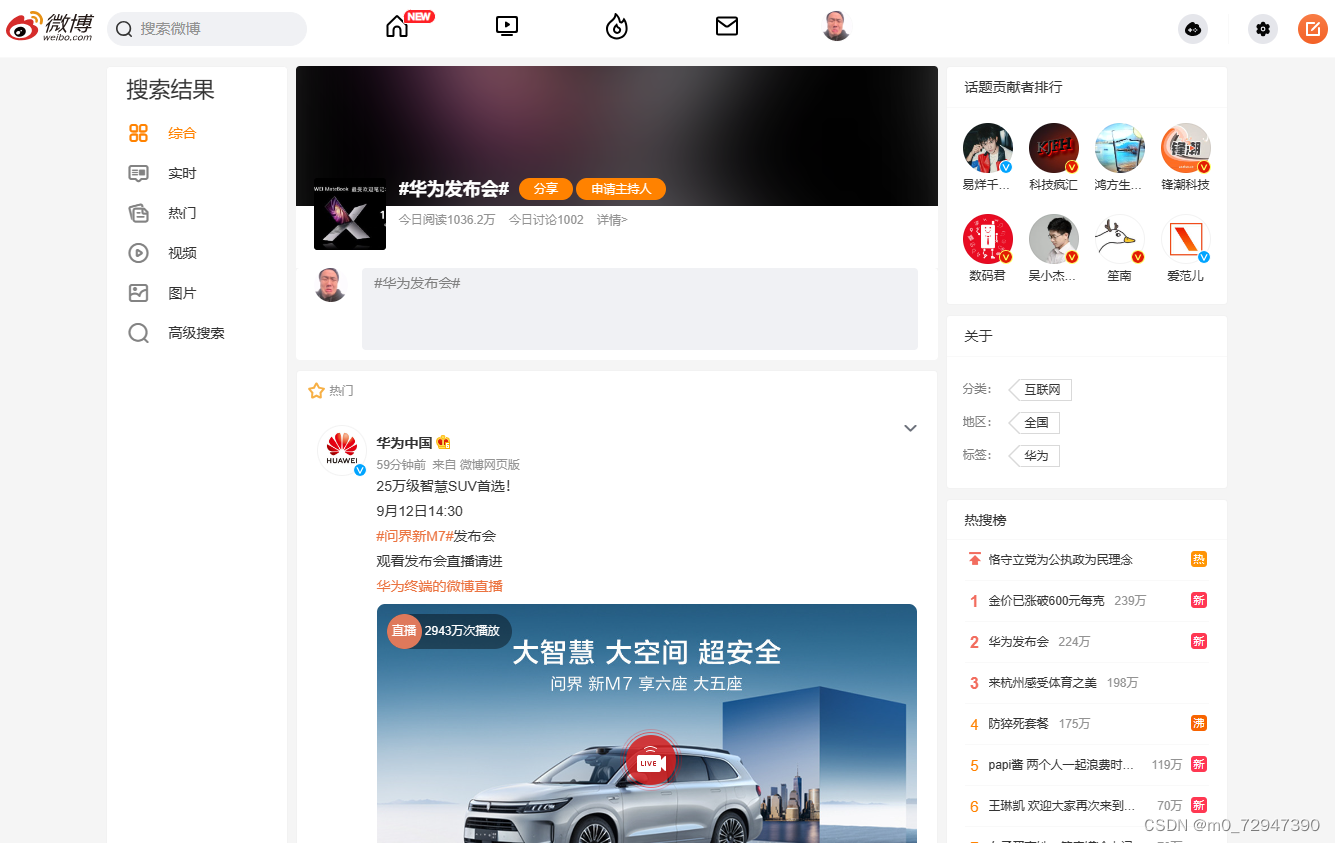

微博主体内容获取流程

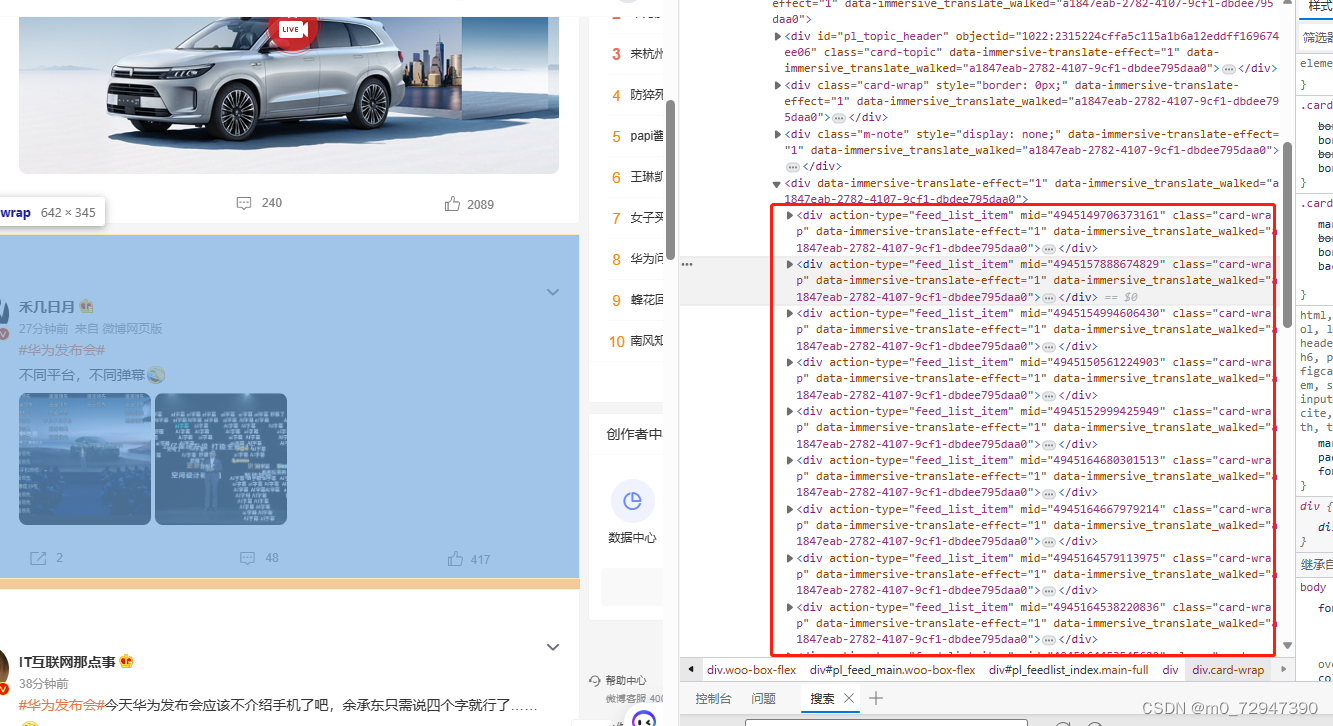

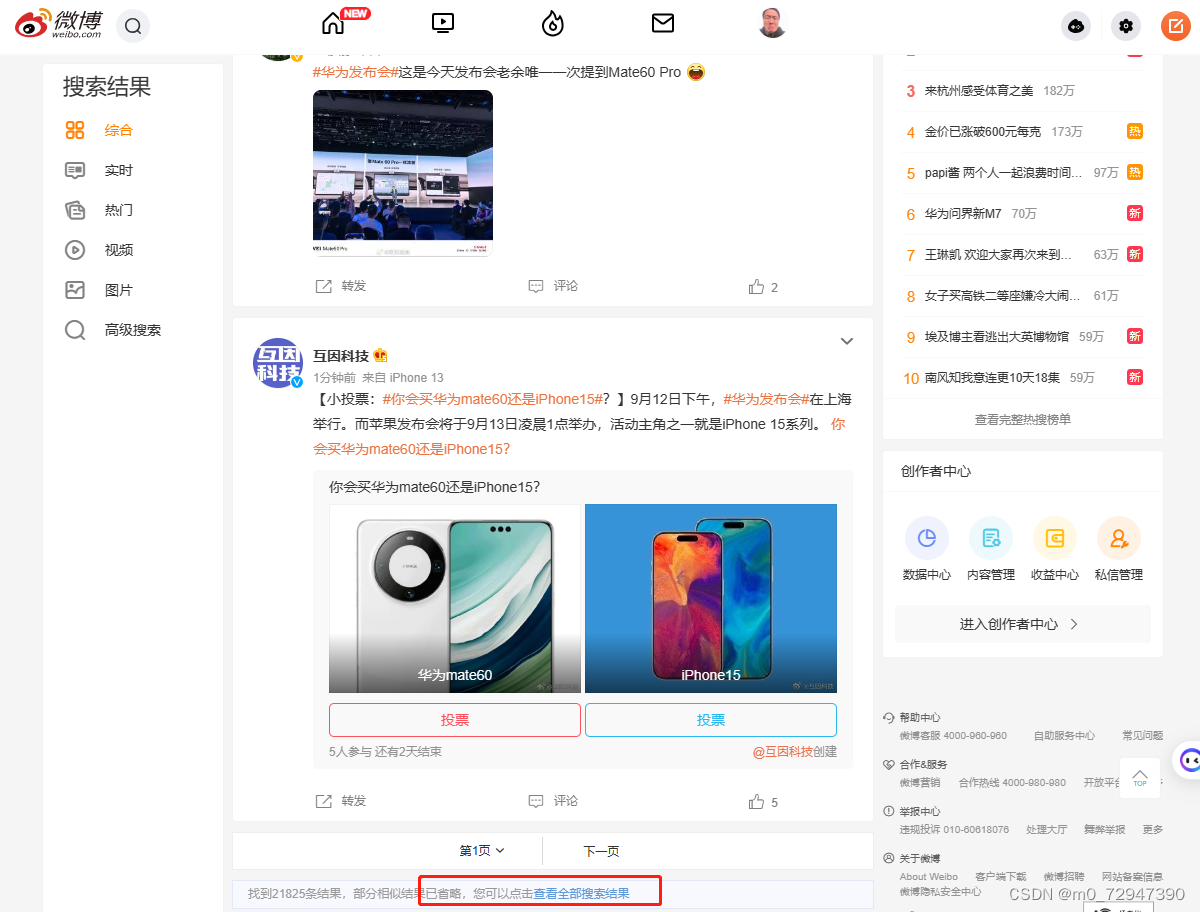

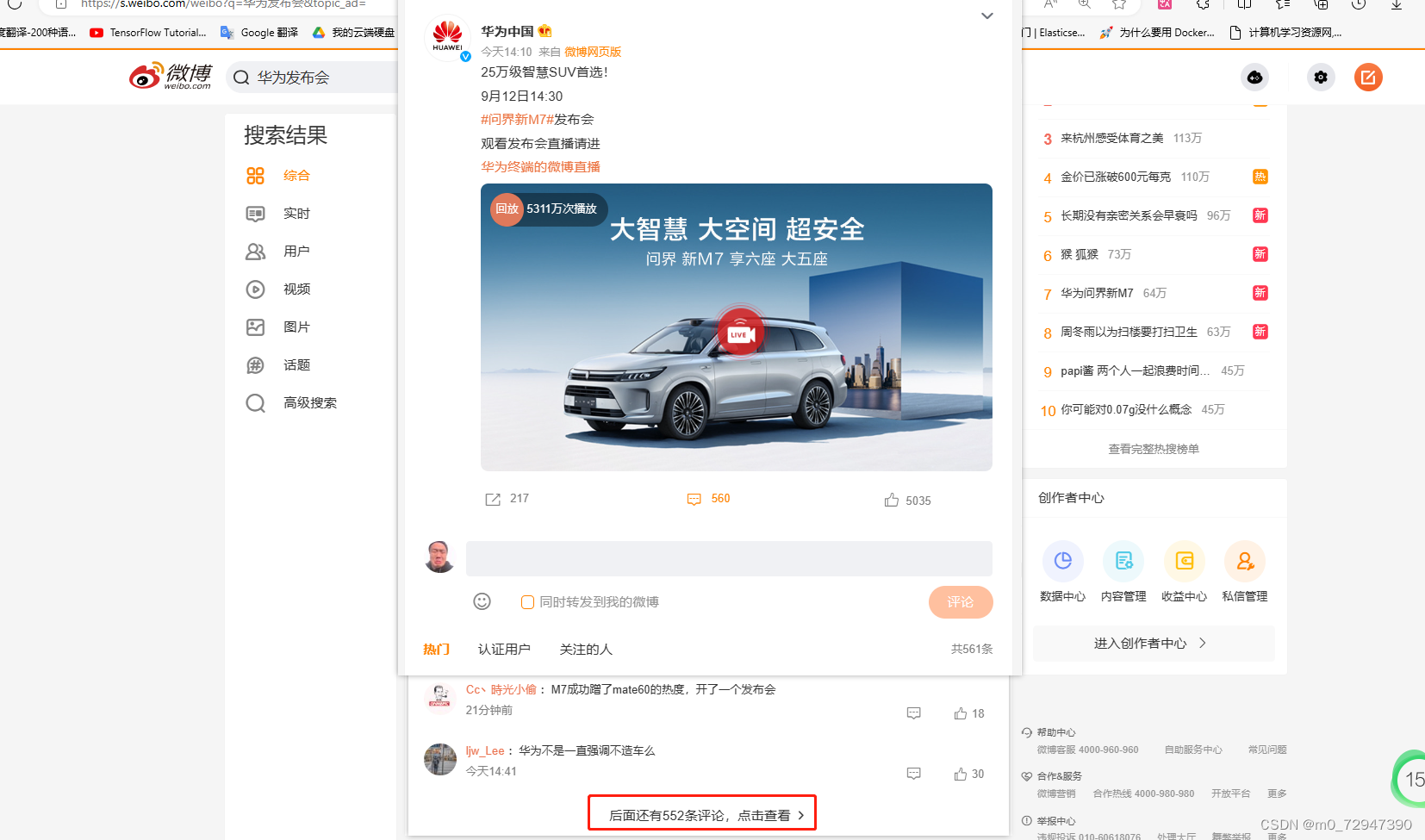

以华为发布会这一热搜为例子,我们可以通过开发者模式得到信息基本都包含在下面的 div tag中

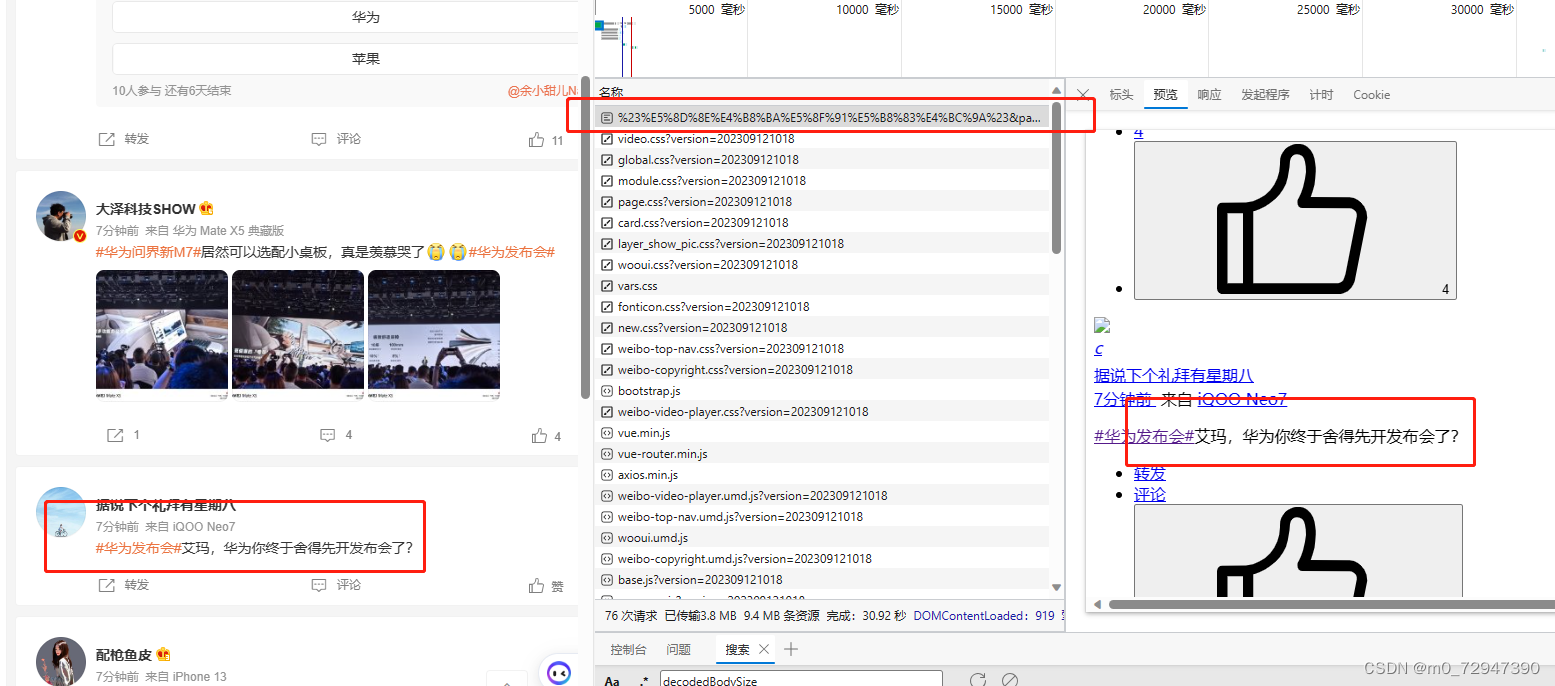

我们通过网络这一模块进行解析,发现信息基本都存储在 %23 开头的请求之中,接下来分析一下响应内容

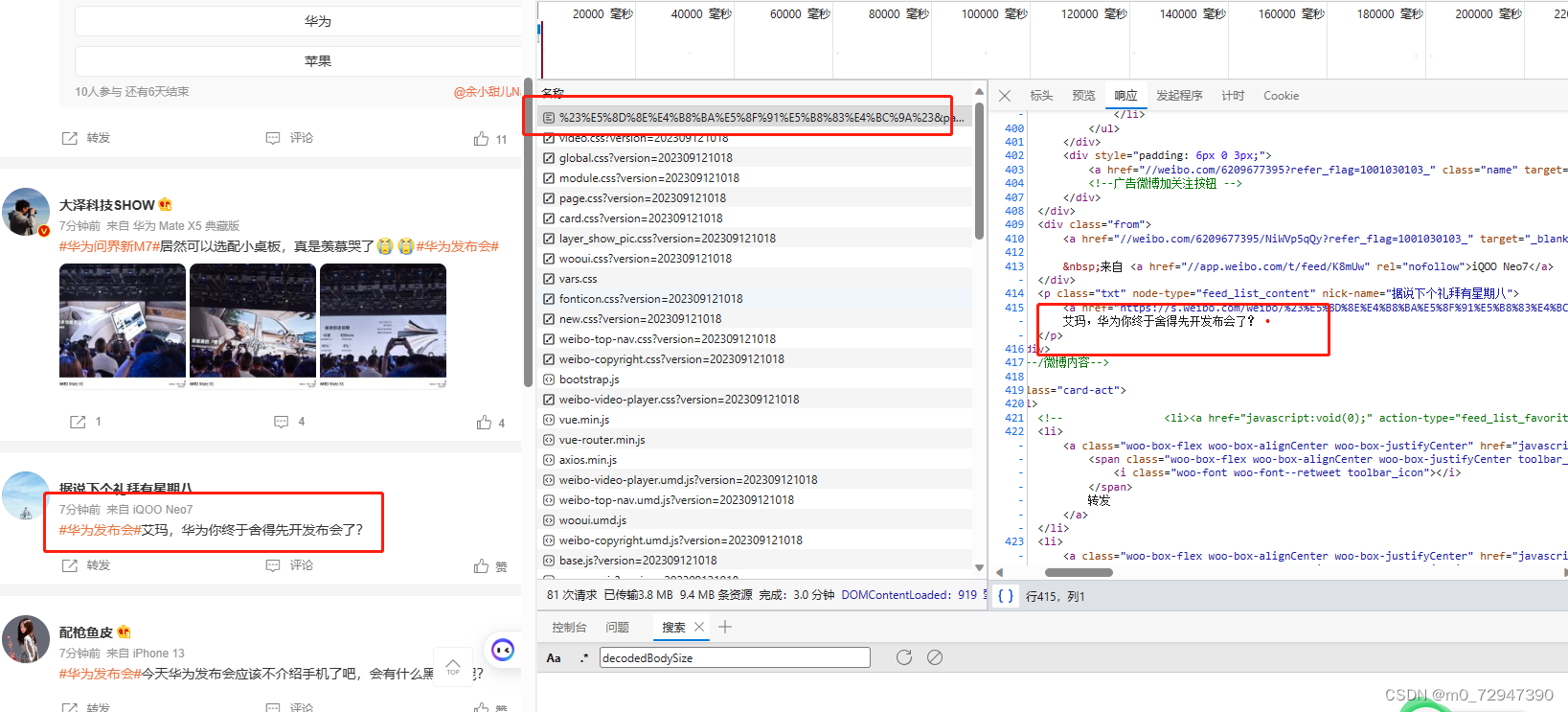

这里可以看出响应内容为 html 格式,因此我们可以用xpath或者css来进行解析,这里我们使用BeautifulSoup来解析,解析代码如下:

soup = BeautifulSoup(response.text, 'lxml')

divs = soup.select('div[action-type="feed_list_item"]')

lst = []

for div in divs:

mid = div.get('mid')

uid = div.select('div.card-feed > div.avator > a')

if uid:

uid = uid[0].get('href').replace('.com/', '?').split('?')[1]

else:

uid = None

time = div.select('div.card-feed > div.content > div.from > a:first-of-type')

if time:

time = time[0].string.strip()

else:

time = None

p = div.select('div.card-feed > div.content > p:last-of-type')

if p:

p = p[0].strings

content = '\n'.join([para.replace('\u200b', '').strip() for para in list(p)]).strip()

else:

content = None

star = div.select('ul > li > a > button > span.woo-like-count')

if star:

star = list(star[0].strings)[0]

else:

star = None

lst.append((mid, uid, content, star, time))

pd.DataFrame(lst, columns=['mid', 'uid', 'content', 'star', 'time'])

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

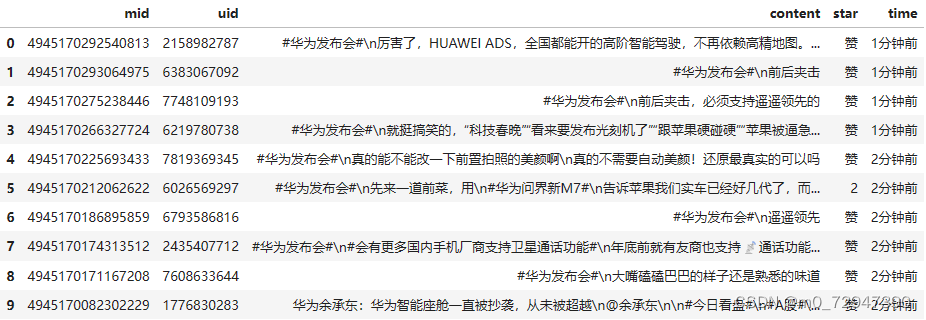

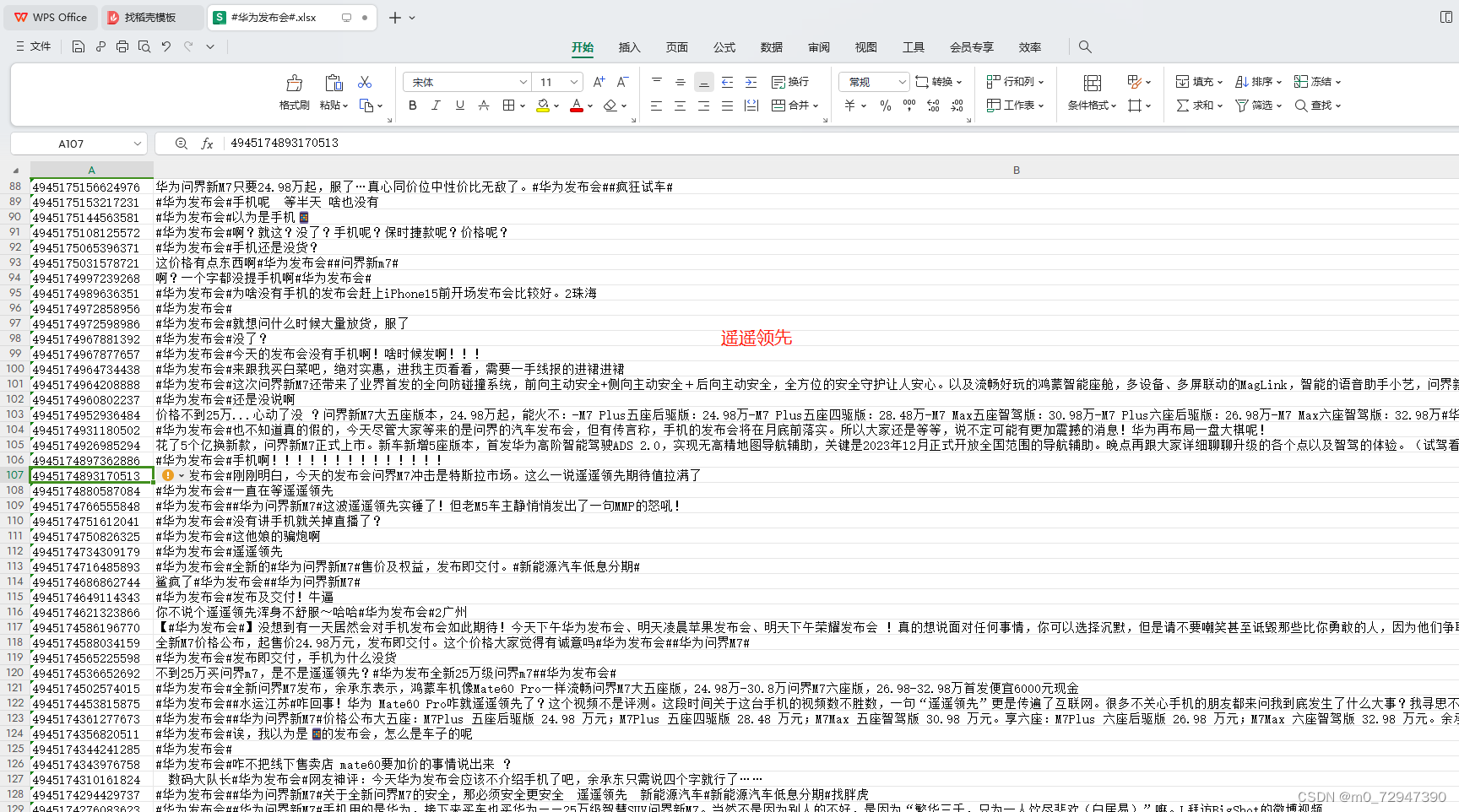

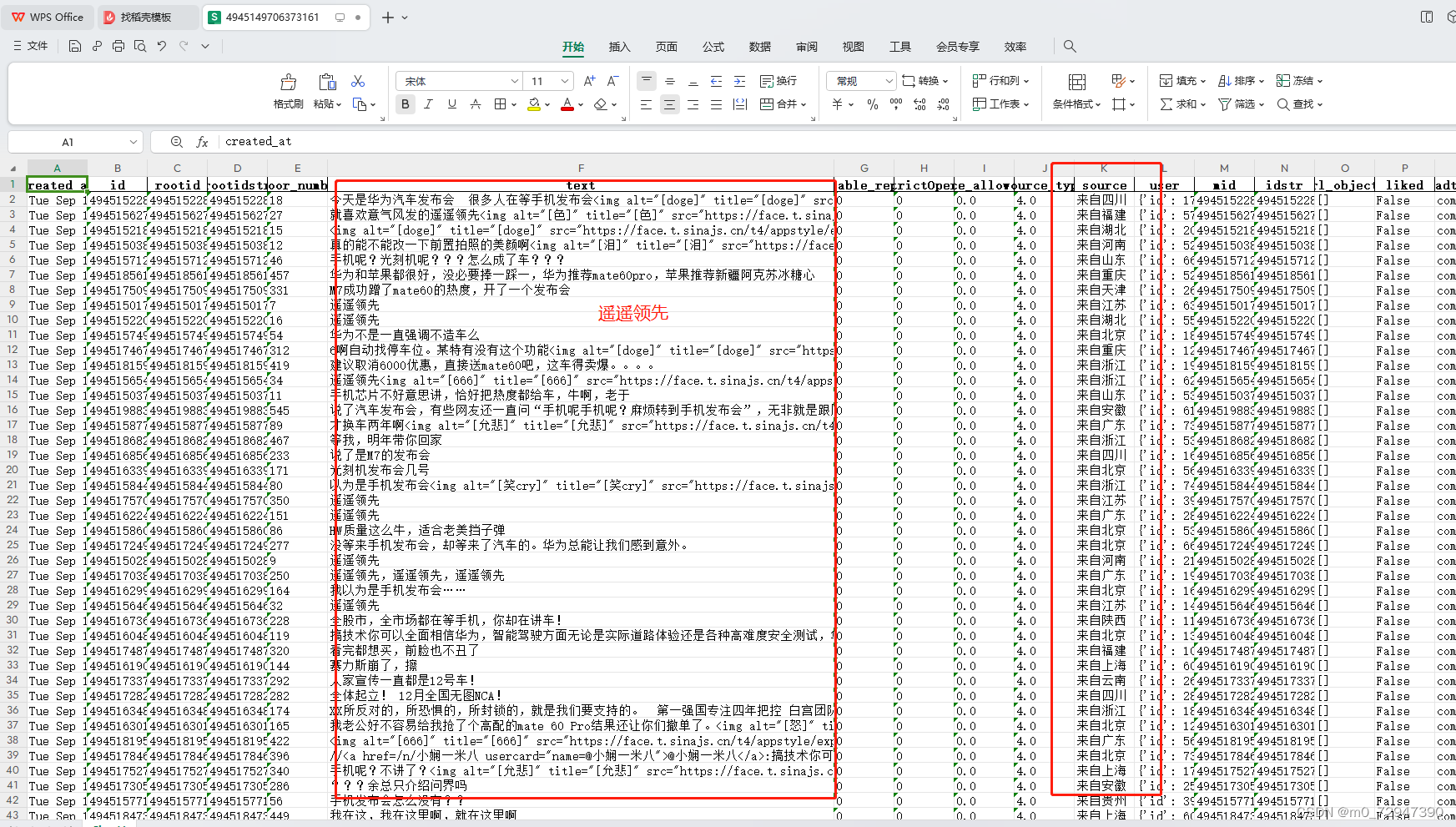

我们可以获得如下结果:

这里的 mid , uid 两个参数是为了下一节获取微博评论内容需要用到的参数,这里不多解释,如果不需要删除就好,接下来我们看一下请求内容。在开始之前,为了对请求解析方便,在这里我们点击一下 查看全部搜索结果

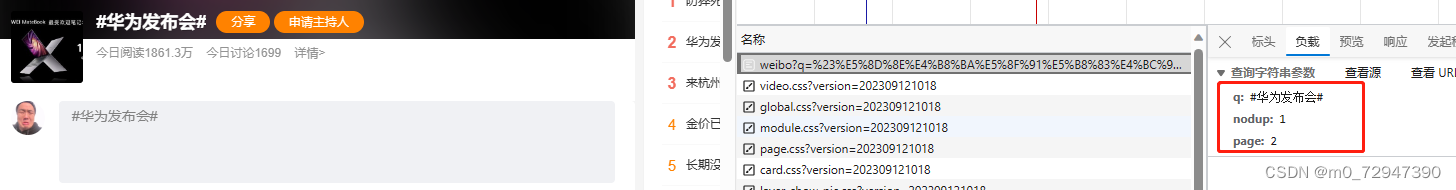

可以发现一个以 weibo 开头的新的请求,和 %23 开头的请求内容类似,但是带了参数 q 和nodup ,再翻页之后我们可以得到 page 这一个参数

我的解析如下:

1. q:话题

2. nudup:是否展示完整内容

3. page:页码

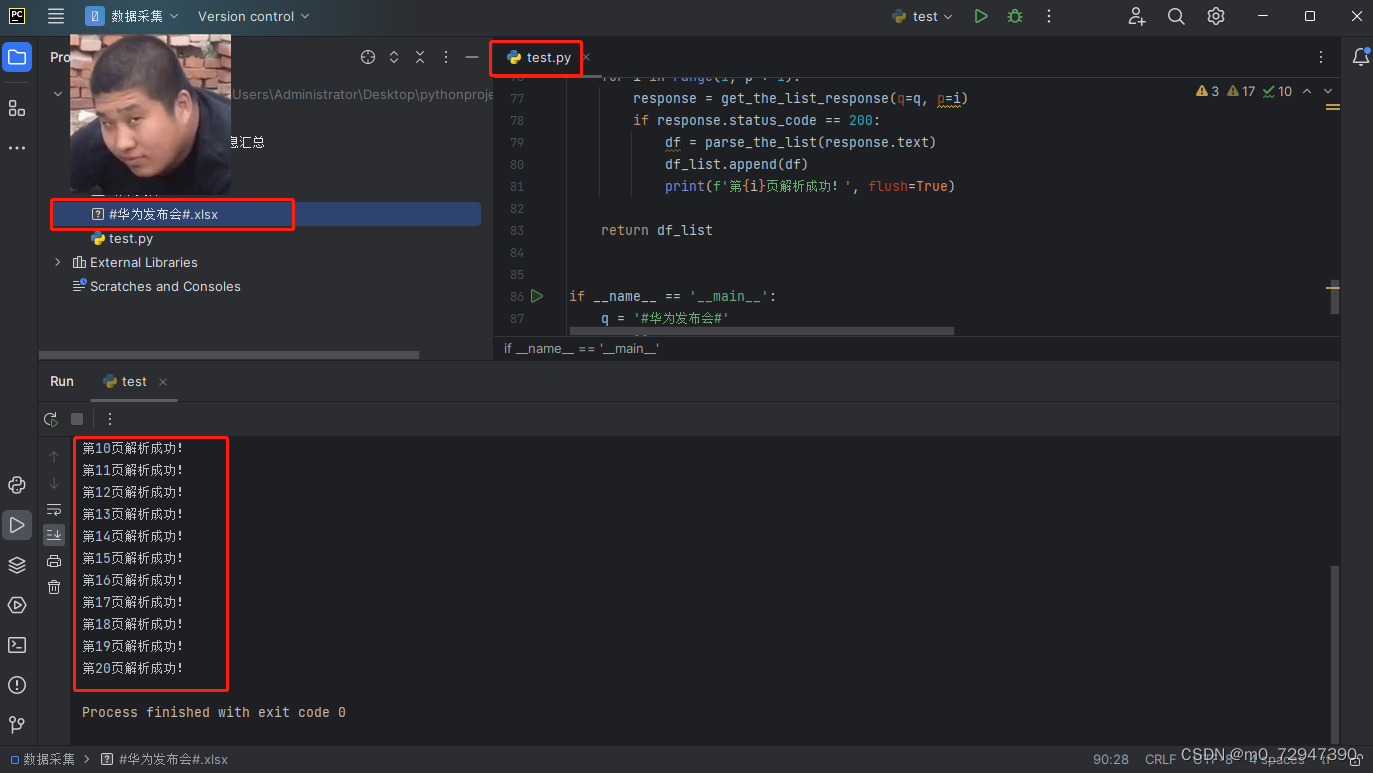

然后可以对这个请求进行模拟,写入 python 代码中,结合之前的解析,发现内容获取 成功!

完整代码如下:

import requests

import os

from bs4 import BeautifulSoup

import pandas as pd

import json

# 设置为自己的cookies

cookies = {

'SINAGLOBAL': '1278126679099.0298.1694199077980',

'SCF': 'ApDYB6ZQHU_wHU8ItPHSso29Xu0ZRSkOOiFTBeXETNm7k7YlpnahLGVhB90-mk0xFNznyCVsjyu9-7-Hk0jRULM.',

'SUB': '_2A25IaC_CDeRhGeFO61AY8i_NwzyIHXVrBC0KrDV8PUNbmtAGLVLckW9NQYCXlpjzhYwtC8sDM7giaMcMNIlWSlP6',

'SUBP': '0033WrSXqPxfM725Ws9jqgMF55529P9D9W5mzQcPEhHvorRG-l7.BSsy5JpX5KzhUgL.FoM7ehz4eo2p1h52dJLoI0qLxK-LBKBLBKMLxKnL1--L1heLxKnL1-qLBo.LxK-L1KeL1KzLxK-L1KeL1KzLxK-L1KeL1Kzt',

'ALF': '1733137172',

'_s_tentry': 'weibo.com',

'Apache': '435019984104.0236.1701606621998',

'ULV': '1701606622040:13:2:2:435019984104.0236.1701606621998:1701601199048',

}

def get_the_list_response(q='话题', n='1', p='页码'):

headers = {

'authority': 's.weibo.com',

'accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.7',

'accept-language': 'zh-CN,zh;q=0.9,en;q=0.8,en-GB;q=0.7,en-US;q=0.6',

'referer': 'https://s.weibo.com/weibo?q=%23%E6%96%B0%E9%97%BB%E5%AD%A6%E6%95%99%E6%8E%88%E6%80%92%E6%80%BC%E5%BC%A0%E9%9B%AA%E5%B3%B0%23&nodup=1',

'sec-ch-ua': '"Chromium";v="116", "Not)A;Brand";v="24", "Microsoft Edge";v="116"',

'sec-ch-ua-mobile': '?0',

'sec-ch-ua-platform': '"Windows"',

'sec-fetch-dest': 'document',

'sec-fetch-mode': 'navigate',

'sec-fetch-site': 'same-origin',

'sec-fetch-user': '?1',

'upgrade-insecure-requests': '1',

'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/116.0.0.0 Safari/537.36 Edg/116.0.1938.69',

}

params = {

'q': q,

'nodup': n,

'page': p,

}

response = requests.get('https://s.weibo.com/weibo', params=params, cookies=cookies, headers=headers)

return response

def parse_the_list(text):

soup = BeautifulSoup(text)

divs = soup.select('div[action-type="feed_list_item"]')

lst = []

for div in divs:

mid = div.get('mid')

time = div.select('div.card-feed > div.content > div.from > a:first-of-type')

if time:

time = time[0].string.strip()

else:

time = None

p = div.select('div.card-feed > div.content > p:last-of-type')

if p:

p = p[0].strings

content = '\n'.join([para.replace('\u200b', '').strip() for para in list(p)]).strip()

else:

content = None

star = div.select('ul > li > a > button > span.woo-like-count')

if star:

star = list(star[0].strings)[0]

else:

star = None

lst.append((mid, content, star, time))

df = pd.DataFrame(lst, columns=['mid', 'content', 'star', 'time'])

return df

def get_the_list(q, p):

df_list = []

for i in range(1, p+1):

response = get_the_list_response(q=q, p=i)

if response.status_code == 200:

df = parse_the_list(response.text)

df_list.append(df)

print(f'第{i}页解析成功!', flush=True)

return df_list

if __name__ == '__main__':

# 先设置cookie,换成自己的;

q = '#华为发布会#'

p = 20

df_list = get_the_list(q, p)

df = pd.concat(df_list)

df.to_csv(f'{q}.csv', index=False)

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 38

- 39

- 40

- 41

- 42

- 43

- 44

- 45

- 46

- 47

- 48

- 49

- 50

- 51

- 52

- 53

- 54

- 55

- 56

- 57

- 58

- 59

- 60

- 61

- 62

- 63

- 64

- 65

- 66

- 67

- 68

- 69

- 70

- 71

- 72

- 73

- 74

- 75

- 76

- 77

- 78

- 79

- 80

- 81

- 82

- 83

- 84

- 85

- 86

- 87

- 88

- 89

- 90

微博评论内容获取流程

一级评论内容

上一节内容获取了微博主题内容,可以发现并没有什么难点,本来我以为都结束了,队长偏要评论内容,无奈我只好继续解析评论内容,接下来我们来获取微博评论内容,有一点点绕。

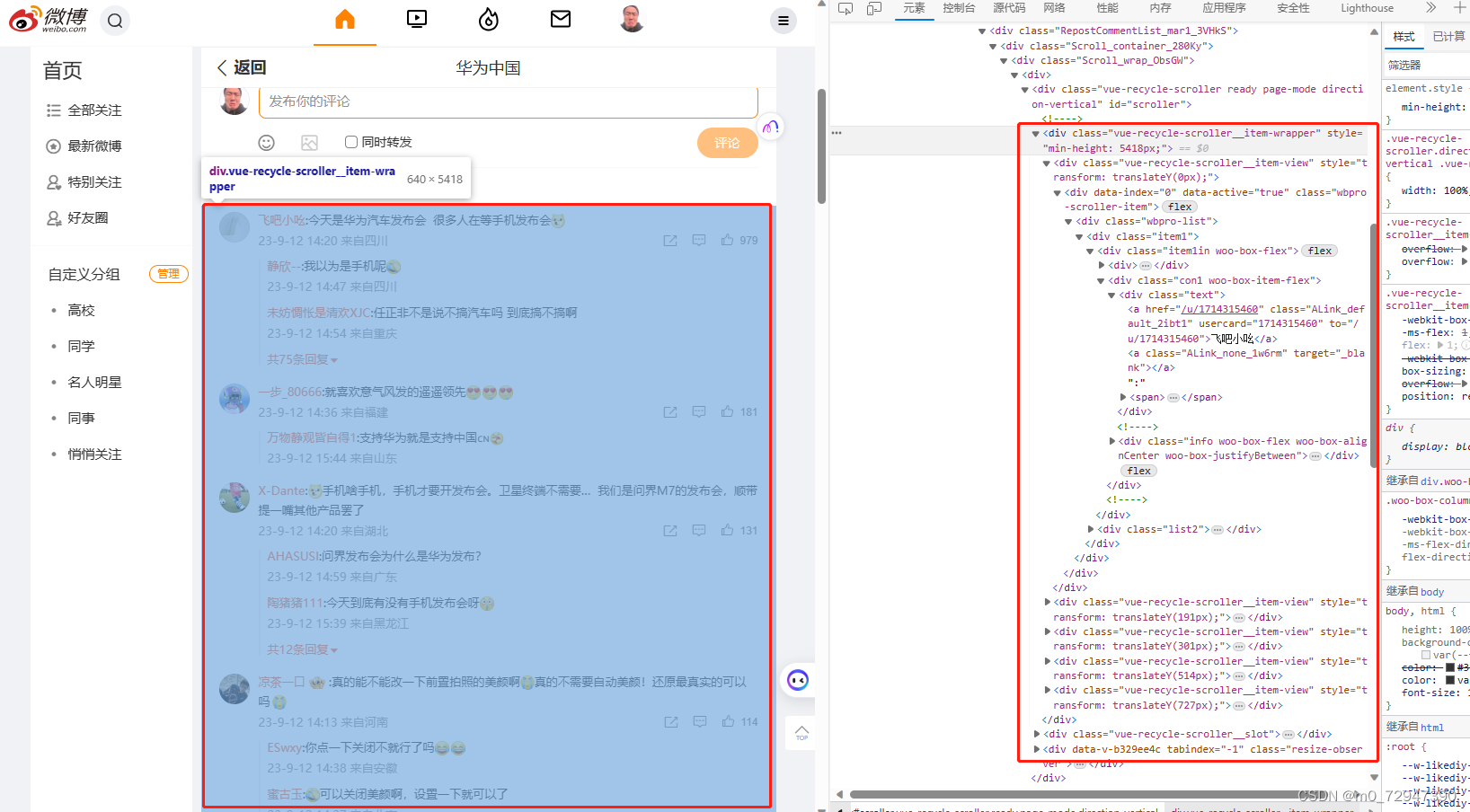

首先我们点开评论数较多的微博, 然后点击 后面还有552条评论,点击查看

看到 < div class=“vue-recycle-scroller__item-wrapper” > 这个内容是我们想要的

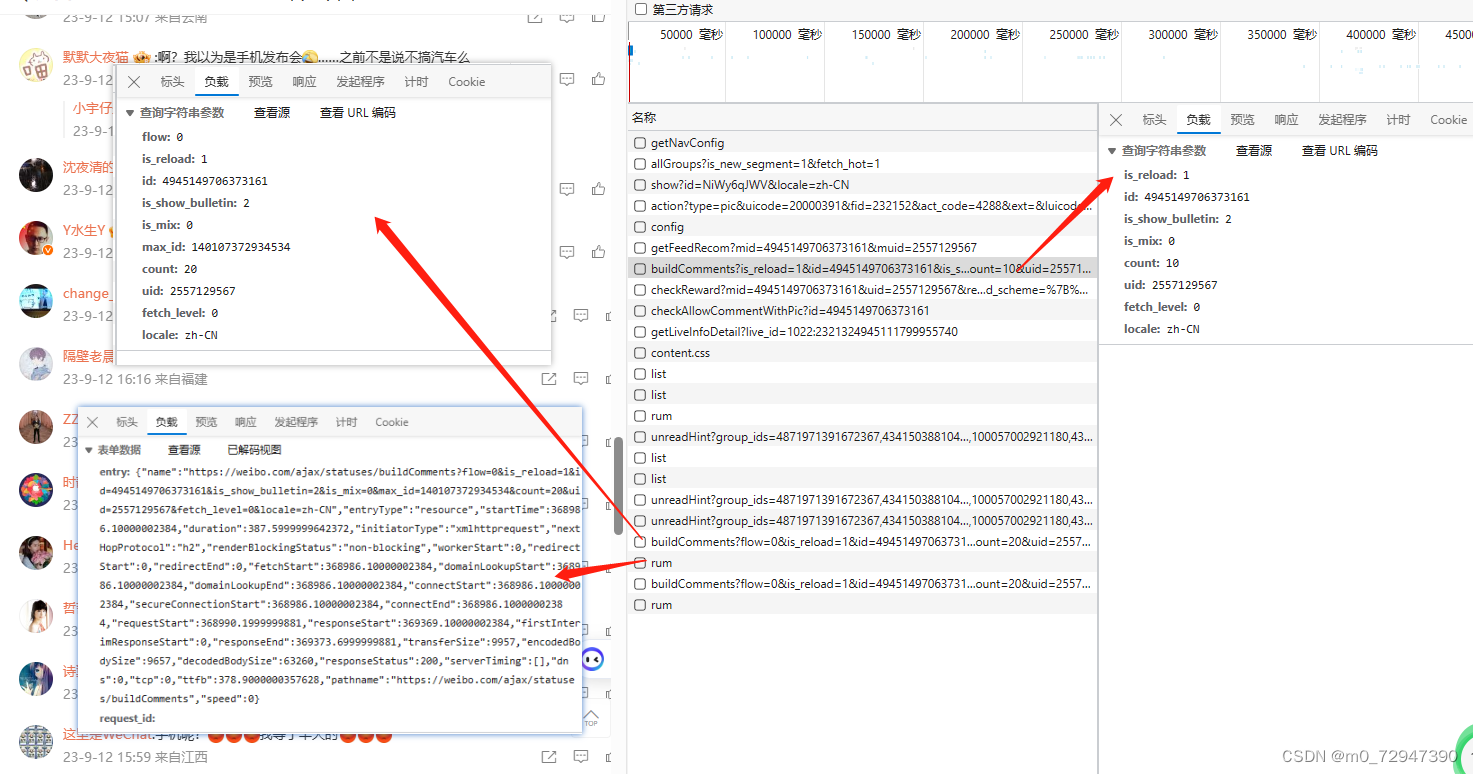

和上一节一样来查找请求, 发现 buildComments?is_reload=1&id= 这个请求包含了我们想要的信息,而且预览内容为 json 格式,省去了解析 html 的步骤,接下来只需要解析请求就ok了。

话不多说,往下滑动,多获得几个请求,对得到的请求,分析如下:

每次往下滑动都会出现两个请求,一个是 buildComments?flow=0&is_reload=1&id=49451497063731… ,一个是 rum 。同时 buildComments?flow=0&is_reload=1&id=49451497063731… 请求的参数发生了变化,第一次请求里面没有 flow 和 max_id 这两个参数,经过我一下午分析可以得到以下结果:

1. flow:判断是否第一次请求,第一次请求不能加

2. id:微博主体内容的id 上一节获取的mid

3. count:评论数

4. uid:微博主体内容的用户id 上一节获取的uid

5. max_id:上一次请求后最后一个评论的mid,第一次请求不能加

6. 其他参数保持不变

7. rum在buildComments之后验证请求是否人为发出,反爬机制

8. rum的参数围绕buildComments展开

9. rum构造完全凑巧,部分参数对结果无效,能用就行!

完整代码如下:

import requests

import os

from bs4 import BeautifulSoup

import pandas as pd

import json

# 设置为自己的cookies

cookies = {

'SINAGLOBAL': '1278126679099.0298.1694199077980',

'SCF': 'ApDYB6ZQHU_wHU8ItPHSso29Xu0ZRSkOOiFTBeXETNm7k7YlpnahLGVhB90-mk0xFNznyCVsjyu9-7-Hk0jRULM.',

'SUB': '_2A25IaC_CDeRhGeFO61AY8i_NwzyIHXVrBC0KrDV8PUNbmtAGLVLckW9NQYCXlpjzhYwtC8sDM7giaMcMNIlWSlP6',

'SUBP': '0033WrSXqPxfM725Ws9jqgMF55529P9D9W5mzQcPEhHvorRG-l7.BSsy5JpX5KzhUgL.FoM7ehz4eo2p1h52dJLoI0qLxK-LBKBLBKMLxKnL1--L1heLxKnL1-qLBo.LxK-L1KeL1KzLxK-L1KeL1KzLxK-L1KeL1Kzt',

'ALF': '1733137172',

'_s_tentry': 'weibo.com',

'Apache': '435019984104.0236.1701606621998',

'ULV': '1701606622040:13:2:2:435019984104.0236.1701606621998:1701601199048',

}

# 开始页码,不用修改

page_num = 0

def get_content_1(uid, mid, the_first=True, max_id=None):

headers = {

'authority': 'weibo.com',

'accept': 'application/json, text/plain, */*',

'accept-language': 'zh-CN,zh;q=0.9,en;q=0.8,en-GB;q=0.7,en-US;q=0.6',

'client-version': 'v2.43.30',

'referer': 'https://weibo.com/1762257041/NiSAxfmbZ',

'sec-ch-ua': '"Chromium";v="116", "Not)A;Brand";v="24", "Microsoft Edge";v="116"',

'sec-ch-ua-mobile': '?0',

'sec-ch-ua-platform': '"Windows"',

'sec-fetch-dest': 'empty',

'sec-fetch-mode': 'cors',

'sec-fetch-site': 'same-origin',

'server-version': 'v2023.09.08.4',

'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/116.0.0.0 Safari/537.36 Edg/116.0.1938.69',

'x-requested-with': 'XMLHttpRequest',

'x-xsrf-token': 'F2EEQZrINBfzB2HPPxqTMQJ_',

}

params = {

'is_reload': '1',

'id': f'{mid}',

'is_show_bulletin': '2',

'is_mix': '0',

'count': '20',

'uid': f'{uid}',

'fetch_level': '0',

'locale': 'zh-CN',

}

if not the_first:

params['flow'] = 0

params['max_id'] = max_id

else:

pass

response = requests.get('https://weibo.com/ajax/statuses/buildComments', params=params, cookies=cookies, headers=headers)

return response

def get_content_2(get_content_1_url):

headers = {

'authority': 'weibo.com',

'accept': '*/*',

'accept-language': 'zh-CN,zh;q=0.9,en;q=0.8,en-GB;q=0.7,en-US;q=0.6',

'content-type': 'multipart/form-data; boundary=----WebKitFormBoundaryNs1Toe4Mbr8n1qXm',

'origin': 'https://weibo.com',

'referer': 'https://weibo.com/1762257041/NiSAxfmbZ',

'sec-ch-ua': '"Chromium";v="116", "Not)A;Brand";v="24", "Microsoft Edge";v="116"',

'sec-ch-ua-mobile': '?0',

'sec-ch-ua-platform': '"Windows"',

'sec-fetch-dest': 'empty',

'sec-fetch-mode': 'cors',

'sec-fetch-site': 'same-origin',

'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/116.0.0.0 Safari/537.36 Edg/116.0.1938.69',

'x-xsrf-token': 'F2EEQZrINBfzB2HPPxqTMQJ_',

}

s = '{"name":"https://weibo.com/ajax/statuses/buildComments?flow=0&is_reload=1&id=4944997453660231&is_show_bulletin=2&is_mix=0&max_id=139282732792325&count=20&uid=1762257041&fetch_level=0&locale=zh-CN","entryType":"resource","startTime":20639.80000001192,"duration":563,"initiatorType":"xmlhttprequest","nextHopProtocol":"h2","renderBlockingStatus":"non-blocking","workerStart":0,"redirectStart":0,"redirectEnd":0,"fetchStart":20639.80000001192,"domainLookupStart":20639.80000001192,"domainLookupEnd":20639.80000001192,"connectStart":20639.80000001192,"secureConnectionStart":20639.80000001192,"connectEnd":20639.80000001192,"requestStart":20641.600000023842,"responseStart":21198.600000023842,"firstInterimResponseStart":0,"responseEnd":21202.80000001192,"transferSize":7374,"encodedBodySize":7074,"decodedBodySize":42581,"responseStatus":200,"serverTiming":[],"dns":0,"tcp":0,"ttfb":557,"pathname":"https://weibo.com/ajax/statuses/buildComments","speed":0}'

s = json.loads(s)

s['name'] = get_content_1_url

s = json.dumps(s)

data = f'------WebKitFormBoundaryNs1Toe4Mbr8n1qXm\r\nContent-Disposition: form-data; name="entry"\r\n\r\n{s}\r\n------WebKitFormBoundaryNs1Toe4Mbr8n1qXm\r\nContent-Disposition: form-data; name="request_id"\r\n\r\n\r\n------WebKitFormBoundaryNs1Toe4Mbr8n1qXm--\r\n'

response = requests.post('https://weibo.com/ajax/log/rum', cookies=cookies, headers=headers, data=data)

return response.text

def get_once_data(uid, mid, the_first=True, max_id=None):

respones_1 = get_content_1(uid, mid, the_first, max_id)

url = respones_1.url

response_2 = get_content_2(url)

df = pd.DataFrame(respones_1.json()['data'])

max_id = respones_1.json()['max_id']

return max_id, df

if __name__ == '__main__':

# 先在上面设置cookies

# 设置好了再进行操作

# 自定义

name = '#邹振东诚邀张雪峰来厦门请你吃沙茶面#'

uid = '2610806555'

mid = '4914095331742409'

page = 100

# 初始化

df_list = []

max_id = ''

for i in range(page):

if i == 0:

max_id, df = get_once_data(uid=uid, mid=mid)

else:

max_id, df = get_once_data(uid=uid, mid=mid, the_first=False, max_id=max_id)

if df.shape[0] == 0 or max_id == 0:

break

else:

df_list.append(df)

print(f'第{i}页解析完毕!max_id:{max_id}')

df = pd.concat(df_list).astype(str).drop_duplicates()

df.to_csv(f'{name}.csv', index=False)

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 38

- 39

- 40

- 41

- 42

- 43

- 44

- 45

- 46

- 47

- 48

- 49

- 50

- 51

- 52

- 53

- 54

- 55

- 56

- 57

- 58

- 59

- 60

- 61

- 62

- 63

- 64

- 65

- 66

- 67

- 68

- 69

- 70

- 71

- 72

- 73

- 74

- 75

- 76

- 77

- 78

- 79

- 80

- 81

- 82

- 83

- 84

- 85

- 86

- 87

- 88

- 89

- 90

- 91

- 92

- 93

- 94

- 95

- 96

- 97

- 98

- 99

- 100

- 101

- 102

- 103

- 104

- 105

- 106

- 107

- 108

- 109

- 110

- 111

- 112

- 113

- 114

- 115

- 116

- 117

- 118

- 119

- 120

- 121

- 122

- 123

- 124

结束!

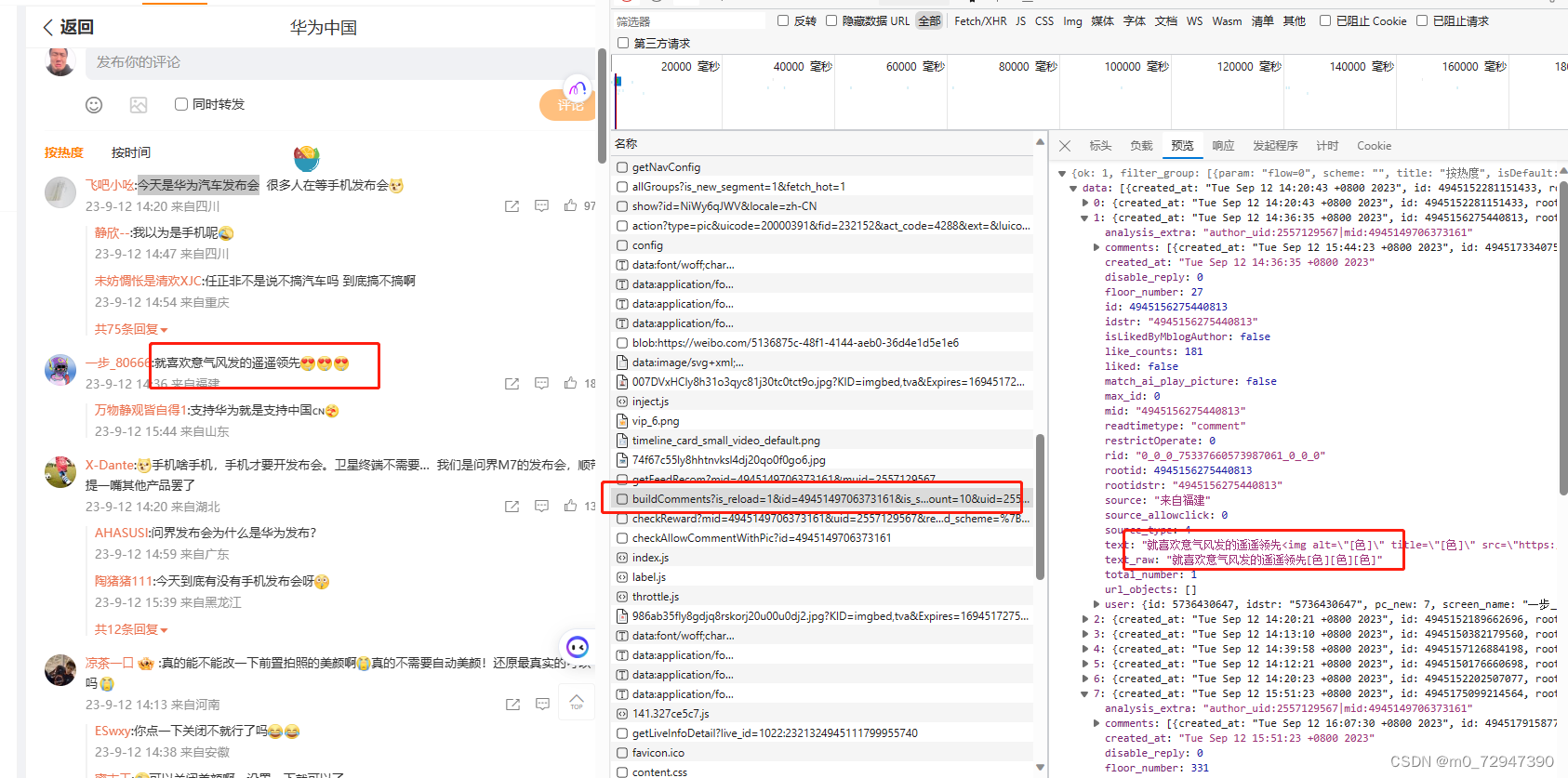

二级评论内容

二级评论的流程和一级评论一样,不同的是参数

一级评论的参数

params = {

'is_reload': '1',

'id': f'{mid}',

'is_show_bulletin': '2',

'is_mix': '0',

'count': '20',

'uid': f'{uid}',

'fetch_level': '0',

'locale': 'zh-CN',

}

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

二级评论的参数

params = {

'is_reload': '1',

'id': f'{mid}',

'is_show_bulletin': '2',

'is_mix': '1',

'fetch_level': '1',

'max_id': '0',

'count': '20',

'uid': f'{uid}',

'locale': 'zh-CN',

}

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

二级评论参数的uid指的是微博主体内容的作者uid,而mid指的是评论者的mid

完整代码如下:

import requests

import os

from bs4 import BeautifulSoup

import pandas as pd

import json

page_num = 0

cookies = {

'SINAGLOBAL': '1278126679099.0298.1694199077980',

'SUBP': '0033WrSXqPxfM725Ws9jqgMF55529P9D9W5mzQcPEhHvorRG-l7.BSsy5JpX5KMhUgL.FoM7ehz4eo2p1h52dJLoI0qLxK-LBKBLBKMLxKnL1--L1heLxKnL1-qLBo.LxK-L1KeL1KzLxK-L1KeL1KzLxK-L1KeL1Kzt',

'XSRF-TOKEN': '47NC7wE7TMhcqfh1K-4bacK-',

'ALF': '1697384140',

'SSOLoginState': '1694792141',

'SCF': 'ApDYB6ZQHU_wHU8ItPHSso29Xu0ZRSkOOiFTBeXETNm7IJXuI95RLbWORIsozuK4Ohxs_boeOIedEcczDT3uSAI.',

'SUB': '_2A25IAAmdDeRhGeFO61AY8i_NwzyIHXVrdHxVrDV8PUNbmtAGLU74kW9NQYCXlmPtQ1DG4kl_wLzqQqkPl_Do1sZu',

'_s_tentry': 'weibo.com',

'Apache': '3760261250067.669.1694792155706',

'ULV': '1694792155740:8:8:4:3760261250067.669.1694792155706:1694767801057',

'WBPSESS': 'X5DJqu8gKpwqYSp80b4XokKvi4u4_oikBqVmvlBCHvGwXMxtKAFxIPg-LIF7foS715Sa4NttSYqzj5x2Ms5ynKVOM5I_Fsy9GECAYh38R4DQ-gq7M5XOe4y1gOUqvm1hOK60dUKvrA5hLuONCL2ing==',

}

def get_content_1(uid, mid, the_first=True, max_id=None):

headers = {

'authority': 'weibo.com',

'accept': 'application/json, text/plain, */*',

'accept-language': 'zh-CN,zh;q=0.9,en;q=0.8,en-GB;q=0.7,en-US;q=0.6',

'client-version': 'v2.43.32',

'referer': 'https://weibo.com/1887344341/NhAosFSL4',

'sec-ch-ua': '"Chromium";v="116", "Not)A;Brand";v="24", "Microsoft Edge";v="116"',

'sec-ch-ua-mobile': '?0',

'sec-ch-ua-platform': '"Windows"',

'sec-fetch-dest': 'empty',

'sec-fetch-mode': 'cors',

'sec-fetch-site': 'same-origin',

'server-version': 'v2023.09.14.1',

'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/116.0.0.0 Safari/537.36 Edg/116.0.1938.69',

'x-requested-with': 'XMLHttpRequest',

'x-xsrf-token': '-UX-uyKz0jmzbTnlkyDEMvSO',

}

params = {

'is_reload': '1',

'id': f'{mid}',

'is_show_bulletin': '2',

'is_mix': '1',

'fetch_level': '1',

'max_id': '0',

'count': '20',

'uid': f'{uid}',

'locale': 'zh-CN',

}

if not the_first:

params['flow'] = 0

params['max_id'] = max_id

else:

pass

response = requests.get('https://weibo.com/ajax/statuses/buildComments', params=params, cookies=cookies, headers=headers)

return response

def get_content_2(get_content_1_url):

headers = {

'authority': 'weibo.com',

'accept': '*/*',

'accept-language': 'zh-CN,zh;q=0.9,en;q=0.8,en-GB;q=0.7,en-US;q=0.6',

'content-type': 'multipart/form-data; boundary=----WebKitFormBoundaryNs1Toe4Mbr8n1qXm',

'origin': 'https://weibo.com',

'referer': 'https://weibo.com/1762257041/NiSAxfmbZ',

'sec-ch-ua': '"Chromium";v="116", "Not)A;Brand";v="24", "Microsoft Edge";v="116"',

'sec-ch-ua-mobile': '?0',

'sec-ch-ua-platform': '"Windows"',

'sec-fetch-dest': 'empty',

'sec-fetch-mode': 'cors',

'sec-fetch-site': 'same-origin',

'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/116.0.0.0 Safari/537.36 Edg/116.0.1938.69',

'x-xsrf-token': 'F2EEQZrINBfzB2HPPxqTMQJ_',

}

s = '{"name":"https://weibo.com/ajax/statuses/buildComments?flow=0&is_reload=1&id=4944997453660231&is_show_bulletin=2&is_mix=0&max_id=139282732792325&count=20&uid=1762257041&fetch_level=0&locale=zh-CN","entryType":"resource","startTime":20639.80000001192,"duration":563,"initiatorType":"xmlhttprequest","nextHopProtocol":"h2","renderBlockingStatus":"non-blocking","workerStart":0,"redirectStart":0,"redirectEnd":0,"fetchStart":20639.80000001192,"domainLookupStart":20639.80000001192,"domainLookupEnd":20639.80000001192,"connectStart":20639.80000001192,"secureConnectionStart":20639.80000001192,"connectEnd":20639.80000001192,"requestStart":20641.600000023842,"responseStart":21198.600000023842,"firstInterimResponseStart":0,"responseEnd":21202.80000001192,"transferSize":7374,"encodedBodySize":7074,"decodedBodySize":42581,"responseStatus":200,"serverTiming":[],"dns":0,"tcp":0,"ttfb":557,"pathname":"https://weibo.com/ajax/statuses/buildComments","speed":0}'

s = json.loads(s)

s['name'] = get_content_1_url

s = json.dumps(s)

data = f'------WebKitFormBoundaryNs1Toe4Mbr8n1qXm\r\nContent-Disposition: form-data; name="entry"\r\n\r\n{s}\r\n------WebKitFormBoundaryNs1Toe4Mbr8n1qXm\r\nContent-Disposition: form-data; name="request_id"\r\n\r\n\r\n------WebKitFormBoundaryNs1Toe4Mbr8n1qXm--\r\n'

response = requests.post('https://weibo.com/ajax/log/rum', cookies=cookies, headers=headers, data=data)

return response.text

def get_once_data(uid, mid, the_first=True, max_id=None):

respones_1 = get_content_1(uid, mid, the_first, max_id)

url = respones_1.url

response_2 = get_content_2(url)

df = pd.DataFrame(respones_1.json()['data'])

max_id = respones_1.json()['max_id']

return max_id, df

if __name__ == '__main__':

# 更新cookies

# 得到的一级评论信息

df = pd.read_csv('#邹振东诚邀张雪峰来厦门请你吃沙茶面#.csv')

# 过滤没有二级评论的一级评论

df = df[df['floor_number']>0]

os.makedirs('./二级评论数据/', exist_ok=True)

for i in range(df.shape[0]):

uid = df.iloc[i]['analysis_extra'].replace('|mid:',':').split(':')[1]

mid = df.iloc[i]['mid']

page = 100

if not os.path.exists(f'./二级评论数据/{mid}-{uid}.csv'):

print(f'不存在 ./二级评论数据/{mid}-{uid}.csv')

df_list = []

max_id_set = set()

max_id = ''

for j in range(page):

if max_id in max_id_set:

break

else:

max_id_set.add(max_id)

if j == 0:

max_id, df_ = get_once_data(uid=uid, mid=mid)

else:

max_id, df_ = get_once_data(uid=uid, mid=mid, the_first=False, max_id=max_id)

if df_.shape[0] == 0 or max_id == 0:

break

else:

df_list.append(df_)

print(f'{mid}第{j}页解析完毕!max_id:{max_id}')

if df_list:

outdf = pd.concat(df_list).astype(str).drop_duplicates()

print(f'文件长度为{outdf.shape[0]},文件保存为 ./二级评论数据/{mid}-{uid}.csv')

outdf.to_csv(f'./二级评论数据/{mid}-{uid}.csv', index=False)

else:

pass

else:

print(f'存在 ./二级评论数据/{mid}-{uid}.csv')

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 38

- 39

- 40

- 41

- 42

- 43

- 44

- 45

- 46

- 47

- 48

- 49

- 50

- 51

- 52

- 53

- 54

- 55

- 56

- 57

- 58

- 59

- 60

- 61

- 62

- 63

- 64

- 65

- 66

- 67

- 68

- 69

- 70

- 71

- 72

- 73

- 74

- 75

- 76

- 77

- 78

- 79

- 80

- 81

- 82

- 83

- 84

- 85

- 86

- 87

- 88

- 89

- 90

- 91

- 92

- 93

- 94

- 95

- 96

- 97

- 98

- 99

- 100

- 101

- 102

- 103

- 104

- 105

- 106

- 107

- 108

- 109

- 110

- 111

- 112

- 113

- 114

- 115

- 116

- 117

- 118

- 119

- 120

- 121

- 122

- 123

- 124

- 125

- 126

- 127

- 128

- 129

- 130

- 131

- 132

- 133

- 134

- 135

- 136

- 137

- 138

- 139

- 140

- 141

- 142

- 143

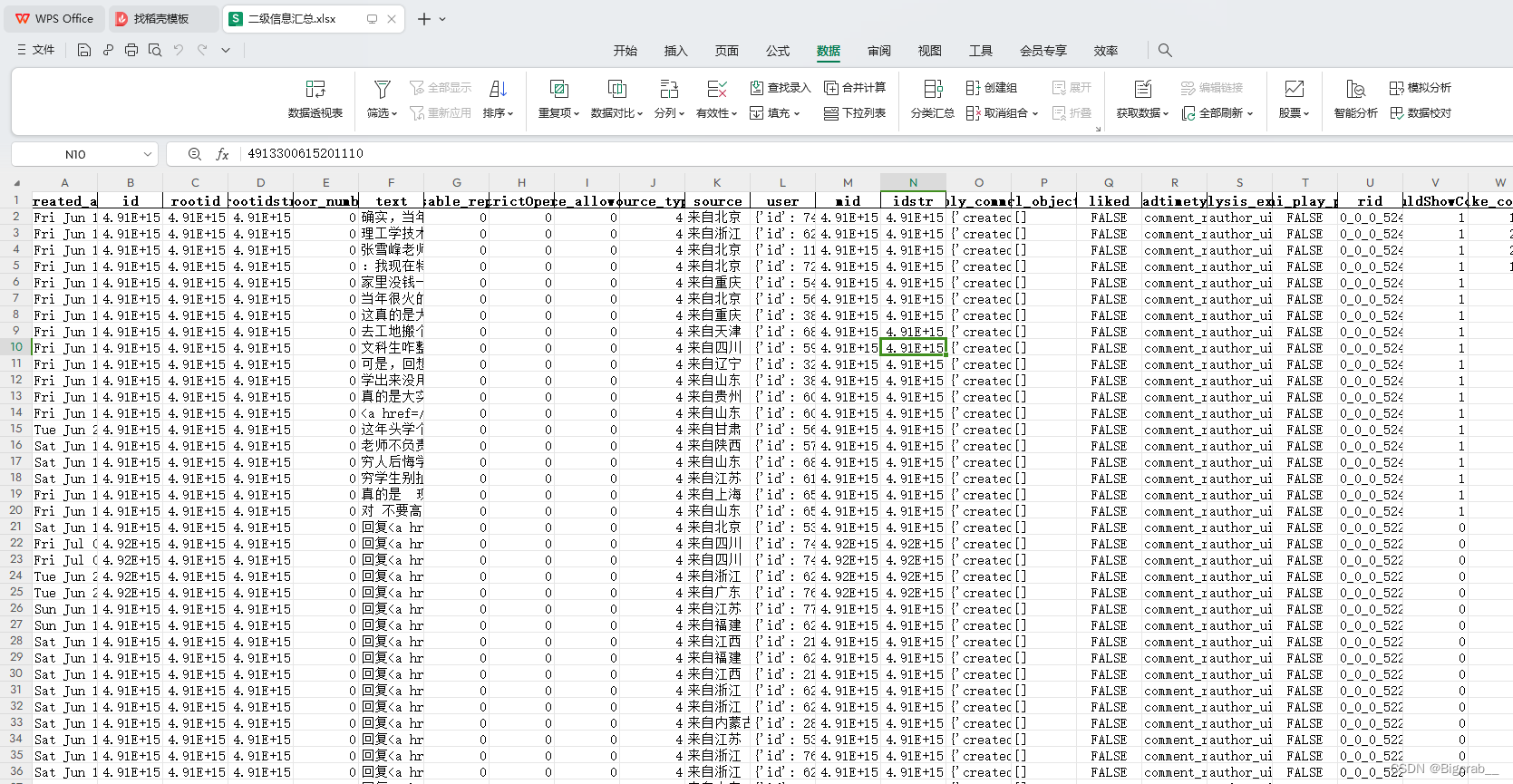

代码运行结果

完成!

问题汇总

csv文件乱码

把 df.to_csv(...) 改为 df.to_csv(..., encoding='utf_8_sig')