
A note on Hive-on-Spark INSERT and UPDATE statements failing with "class not found" because Spark was not the "pure" build

Author: 花生_TL007 | 2024-04-20 16:34:28


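[Background]

Hive started normally and creating tables worked. I created a `student` table without any problem, but running the following INSERT statement produced this error: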

- hive (default)> insert into table student values(1,'abc');

- Query ID = atguigu_20240417184003_f9d459d7-1993-487f-8d5f-eafdb48d94c1

- Total jobs = 1

- Launching Job 1 out of 1

- In order to change the average load for a reducer (in bytes):

- set hive.exec.reducers.bytes.per.reducer=<number>

- In order to limit the maximum number of reducers:

- set hive.exec.reducers.max=<number>

- In order to set a constant number of reducers:

- set mapreduce.job.reduces=<number>

- Failed to monitor Job[-1] with exception 'java.lang.IllegalStateException(Connection to remote Spark driver was lost)' Last known state = SENT

- Failed to execute spark task, with exception 'java.lang.IllegalStateException(RPC channel is closed.)'

- FAILED: Execution Error, return code 1 from org.apache.hadoop.hive.ql.exec.spark.SparkTask. RPC channel is closed.

Because the job runs on YARN, I went to YARN to look at the error log.

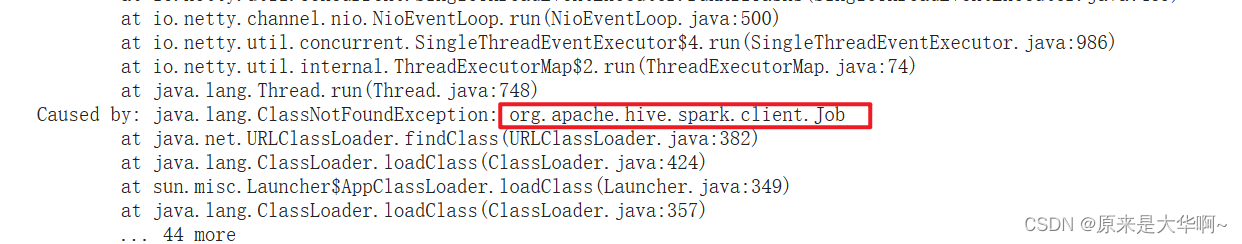

The YARN error log is as follows:

It says a class cannot be found?!


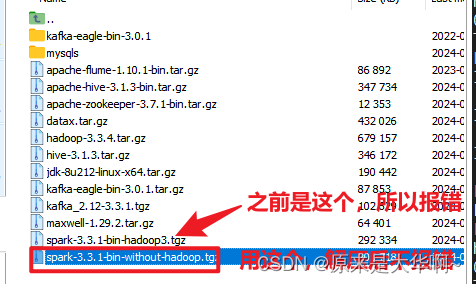
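The same log can also be pulled from the command line and scanned for missing classes, which is handy when the web UI output is long. The following is a minimal sketch, not the author's procedure: it assumes the `yarn` CLI is on the `PATH`, and the helper names `fetch_yarn_logs` and `find_missing_classes` are made up for illustration. The application ID has to be taken from your own failed job.

```python
import re
import subprocess
import sys

# Matches e.g. "java.lang.ClassNotFoundException: com.example.Foo"
# and "java.lang.NoClassDefFoundError: com/example/Foo".
_MISSING = re.compile(
    r"java\.lang\.(?:ClassNotFoundException|NoClassDefFoundError):\s*([\w.$/]+)"
)


def find_missing_classes(log_text):
    """Return distinct class names reported as missing, in order of first appearance."""
    seen = []
    for match in _MISSING.finditer(log_text):
        # NoClassDefFoundError prints slashes; normalise to dotted names.
        name = match.group(1).replace("/", ".")
        if name not in seen:
            seen.append(name)
    return seen


def fetch_yarn_logs(application_id):
    """Run `yarn logs -applicationId <id>` and return its stdout (needs the yarn CLI)."""
    result = subprocess.run(
        ["yarn", "logs", "-applicationId", application_id],
        capture_output=True,
        text=True,
        check=True,
    )
    return result.stdout


if __name__ == "__main__":
    # Usage (hypothetical script name): python scan_yarn_log.py <applicationId>
    print(find_missing_classes(fetch_yarn_logs(sys.argv[1])))
```

If the list comes back non-empty, the reported class names are a good starting point for checking which jar is missing or conflicting on the Spark side.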

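[Cause and fix]: I had simply used the wrong Spark package. Hive on Spark should use the "pure" Spark build. I extracted the pure package into the module directory again; every other step is the same as for the original Spark installation.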
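One rough way to tell which kind of package is installed is to look at the jar names under the Spark home. The sketch below is only a heuristic under stated assumptions: it assumes that "pure" means a Spark distribution that does not bundle its own Hive or Hadoop jars, that jars live in `<spark_home>/jars`, and that bundled dependencies have names starting with `hive-` or `hadoop-`. The function name and the prefix list are illustrative, and the example path is hypothetical.

```python
from pathlib import Path

# Assumption: jar-name prefixes that suggest bundled Hive/Hadoop dependencies.
SUSPECT_PREFIXES = ("hive-", "hadoop-")


def bundled_dependency_jars(spark_home):
    """List jars under <spark_home>/jars whose names start with a suspect prefix."""
    jars_dir = Path(spark_home) / "jars"
    return sorted(
        jar.name
        for jar in jars_dir.glob("*.jar")
        if jar.name.startswith(SUSPECT_PREFIXES)
    )


if __name__ == "__main__":
    # Hypothetical install location; replace with your own Spark home.
    found = bundled_dependency_jars("/opt/module/spark")
    if found:
        print("Possibly not a pure build; bundled jars found:")
        for name in found:
            print("  " + name)
    else:
        print("No hive-/hadoop- jars found under jars/.")
```

An empty result does not prove the package is correct; it only means none of the assumed prefixes matched.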

