
LangChain for LLMs (Part 4) | Improvements in LangChain 0.1: Observability, Composability, Streaming, Tools, RAG, and Agents


LangChain is a very popular framework for building LLM applications. The recently released LangChain 0.1 brings notable improvements in the following areas:

- Observability

- Composability

- Streaming

- Tool Usage

- RAG

- Agents

In this article, we walk through each of these improvements with code examples.

I. Overview of LangChain 0.1

Before releasing 0.1, the LangChain team spoke in depth with 100 developers, whose feedback shaped the upgrade.

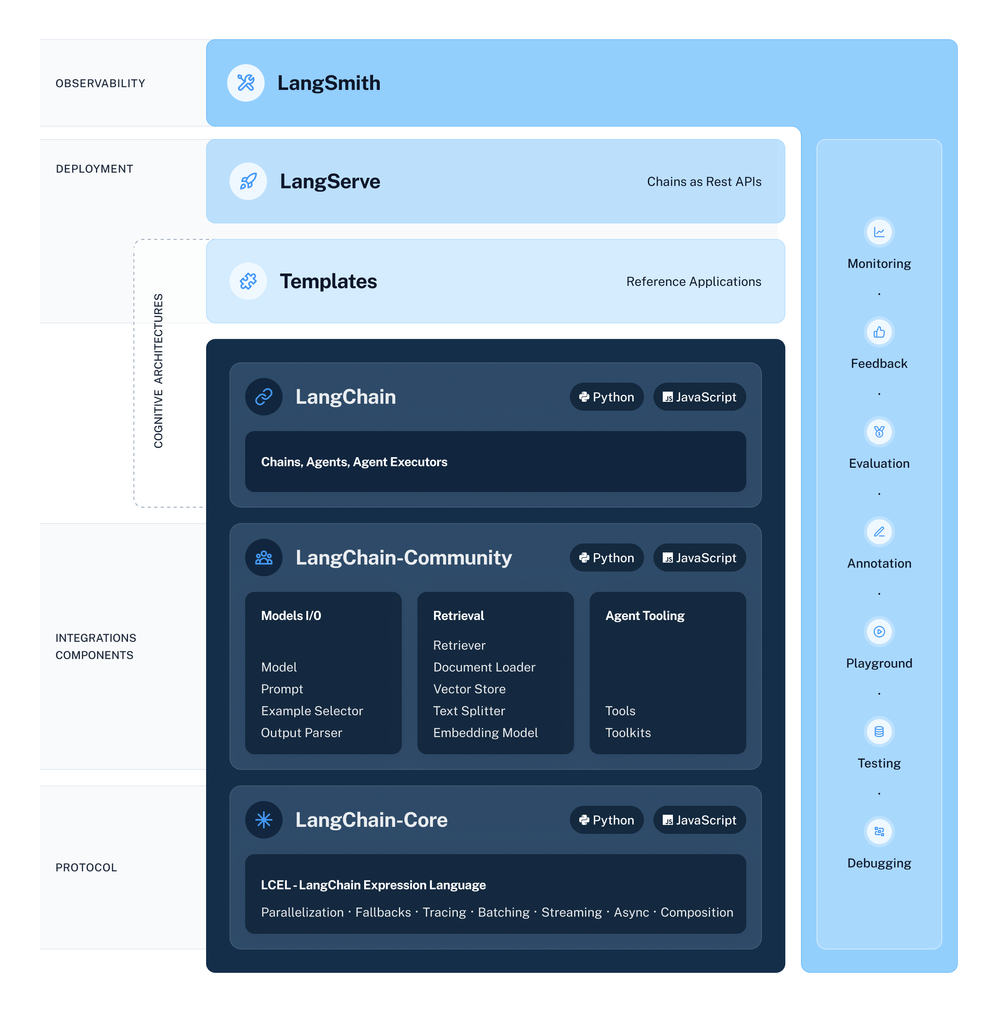

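To strengthen the foundations, the team split the framework into three packages:

langchain-core: the backbone, containing the main abstractions, interfaces, and core functionality, including the LangChain Expression Language (LCEL).

langchain-community: holds the third-party integrations. Separating them is meant to improve dependency management, which in turn improves LangChain's robustness, scalability, and the overall developer experience.

langchain: focuses on higher-level applications, such as use-case-specific chains, agents, and retrieval algorithms.

LangChain 0.1 also introduces a clear versioning policy to maintain backward compatibility:

- A minor version bump (the second digit) signals a breaking change to the public API;

- A patch version bump (the third digit) signals bug fixes or new features.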
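The policy above can be turned into a small check when deciding whether an upgrade needs review. The following is a minimal sketch in plain Python; the function name `classify_bump` and its return labels are hypothetical and are not part of LangChain.

```python
def classify_bump(old: str, new: str) -> str:
    """Classify a version change under the policy described above.

    Hypothetical helper: assumes versions of the form MAJOR.MINOR.PATCH.
    """
    old_parts = tuple(int(part) for part in old.split("."))
    new_parts = tuple(int(part) for part in new.split("."))
    if len(old_parts) != 3 or len(new_parts) != 3:
        raise ValueError("expected versions of the form MAJOR.MINOR.PATCH")
    if new_parts[:2] != old_parts[:2]:
        # The first or second digit changed: the public API may have broken.
        return "may-break"
    if new_parts[2] != old_parts[2]:
        # Only the third digit changed: bug fixes or new features.
        return "fixes-or-features"
    return "unchanged"


print(classify_bump("0.1.0", "0.1.4"))  # fixes-or-features
print(classify_bump("0.1.4", "0.2.0"))  # may-break
```

In practice the same idea is usually expressed as a dependency pin, for example `langchain>=0.1.0,<0.2.0` in a requirements file, so that only patch releases are picked up automatically.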

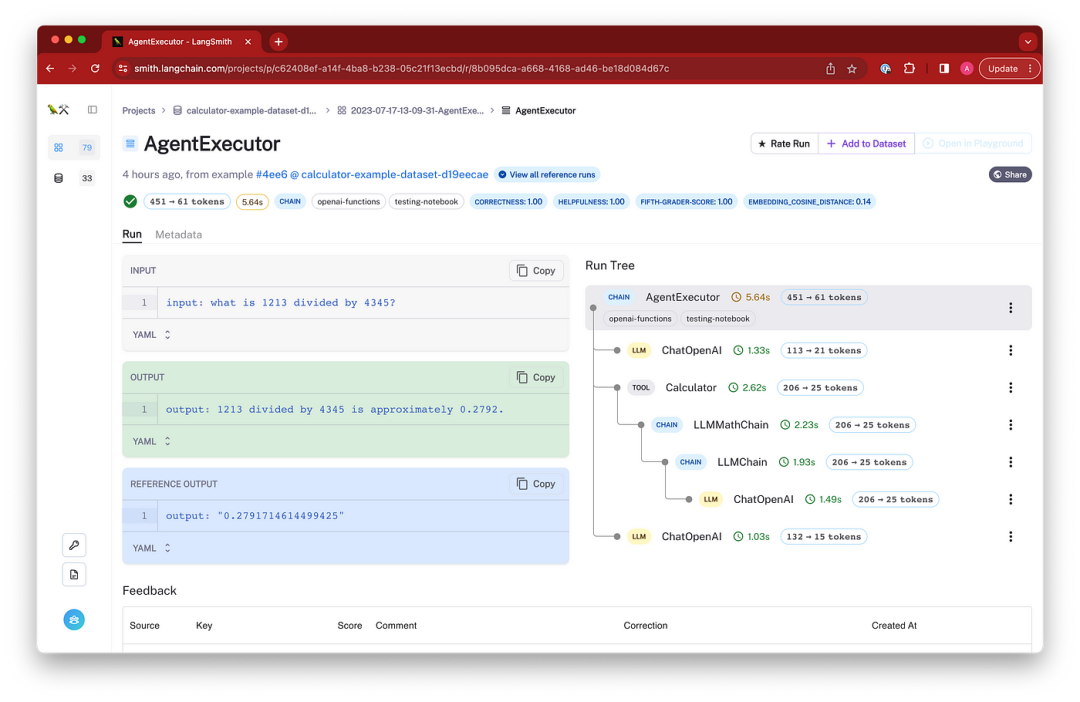

II. Observability

To see what a LangChain application is doing internally, use set_verbose and set_debug to print the details of each execution step.

```python
from langchain import hub
from langchain.agents import AgentExecutor, create_openai_functions_agent
from langchain.globals import set_debug, set_verbose
from langchain_community.tools.tavily_search import TavilySearchResults
from langchain_openai import ChatOpenAI

# Turn off the lighter "verbose" logging and enable full debug tracing.
set_verbose(False)
set_debug(True)

prompt = hub.pull("hwchase17/openai-functions-agent")
llm = ChatOpenAI(model="gpt-3.5-turbo", temperature=0)
search = TavilySearchResults()
tools = [search]
agent = create_openai_functions_agent(llm, tools, prompt)
agent_executor = AgentExecutor(agent=agent, tools=tools)

agent_executor.invoke({"input": "what is the weather in sf?"})
```

```
[chain/start] [1:chain:AgentExecutor] Entering Chain run with input:
{
  "input": "what is the weather in sf?"
}
[chain/start] [1:chain:AgentExecutor > 2:chain:RunnableSequence] Entering Chain run with input:
{
  "input": "what is the weather in sf?",
  "intermediate_steps": []
}
[chain/start] [1:chain:AgentExecutor > 2:chain:RunnableSequence > 3:chain:RunnableAssign<agent_scratchpad>] Entering Chain run with input:
{
  "input": "what is the weather in sf?",
  "intermediate_steps": []
......
[chain/end] [1:chain:AgentExecutor > 2:chain:RunnableSequence > 6:prompt:ChatPromptTemplate] [0ms] Exiting Prompt run with output:
{
  "lc": 1,
  "type": "constructor",
  "id": [
    "langchain",
    "prompts",
    "chat",
    "ChatPromptValue"
  ],
  "kwargs": {
    "messages": [
      {
        "lc": 1,
        "type": "constructor",
        "id": [
          "langchain",
          "schema",
...
```

The LangChain team has also built LangSmith, which aims to provide a first-class debugging experience for LLM applications. At the time of writing, LangSmith is in private beta, with a public beta hoped for within a few months.

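```python
import getpass
import os

from langchain.globals import set_debug

# Send traces to LangSmith; the API key is read interactively, not hard-coded.
os.environ["LANGCHAIN_TRACING_V2"] = "true"
os.environ["LANGCHAIN_ENDPOINT"] = "https://api.smith.langchain.com"
os.environ["LANGCHAIN_API_KEY"] = getpass.getpass()

# Console debug output is switched off; the run is traced in LangSmith instead.
set_debug(False)

# `agent_executor` is the executor built in the previous example.
agent_executor.invoke({"input": "what is the weather in sf?"})
# {'input': 'what is the weather in sf?',
#  'output': "I'm sorry, but I couldn't find the current weather in San Francisco. However, you can check the weather forecast for San Francisco on websites like Weather.com or AccuWeather.com."}
```

If you are not in the LangSmith private beta, you can use Weights & Biases (WandB) to trace LLM applications instead.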

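III. Composability

In recent months the team has invested heavily in the LangChain Expression Language (LCEL) to enable better orchestration, since everyone needs a simple, declarative way to compose chains.

LCEL offers a number of advantages:

- Streaming support

- Async support

- Optimized parallel execution

- Retries and fallbacks

- Access to intermediate results

- Validation of input and output schemas

Let's look at a simple example: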

```python
from langchain_community.retrievers.tavily_search_api import TavilySearchAPIRetriever
from langchain_core.output_parsers import StrOutputParser
from langchain_core.prompts import ChatPromptTemplate
from langchain_core.runnables import RunnablePassthrough
from langchain_openai import ChatOpenAI

retriever = TavilySearchAPIRetriever()
model = ChatOpenAI()
output_parser = StrOutputParser()

prompt = ChatPromptTemplate.from_template(
    """Answer the question based only on the context provided:

Context: {context}

Question: {question}"""
)

# Compose prompt, model, and parser into one chain with the `|` operator.
chain = prompt | model | output_parser

question = "what is langsmith"
context = "langsmith is a testing and observability platform built by the langchain team"
chain.invoke({"question": question, "context": context})
# 'Langsmith is a testing and observability platform developed by the Langchain team.'

# Fill in `context` from the retriever instead of passing it by hand.
retrieval_chain = RunnablePassthrough.assign(
    context=(lambda x: x["question"]) | retriever
) | chain

retrieval_chain.invoke({"question": "what is langsmith"})
# 'LangSmith is a platform that helps trace and evaluate language model applications and intelligent agents. It allows users to debug, test, evaluate, and monitor chains and intelligent agents built on any Language Model (LLM) framework. LangSmith seamlessly integrates with LangChain, an open source framework for building with LLMs.'
```

Note: the LCEL components live in langchain-core, and they will gradually replace the pre-existing (now "Legacy") chains.
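To make the `|` composition less magical, here is a toy sketch in plain Python of how a pipe operator can chain steps together. It is not LangChain's implementation: the `Step` class and the three stand-in steps are hypothetical, and the "model" simply upper-cases its input.

```python
from typing import Any, Callable


class Step:
    """Hypothetical stand-in for a runnable: wraps a one-argument function."""

    def __init__(self, func: Callable[[Any], Any]) -> None:
        self.func = func

    def invoke(self, value: Any) -> Any:
        return self.func(value)

    def __or__(self, other: "Step") -> "Step":
        # `a | b` builds a new step that runs `a`, then feeds its result to `b`.
        return Step(lambda value: other.invoke(self.invoke(value)))


# Toy stand-ins for a prompt template, a model, and an output parser.
toy_prompt = Step(lambda d: f"Context: {d['context']} Question: {d['question']}")
toy_model = Step(lambda text: {"content": text.upper()})  # fake "LLM"
toy_parser = Step(lambda message: message["content"])

toy_chain = toy_prompt | toy_model | toy_parser
toy_result = toy_chain.invoke({"context": "a", "question": "b"})
print(toy_result)  # CONTEXT: A QUESTION: B
```

Because every composed object exposes the same `invoke` method, a whole chain can itself be used as a step in a larger chain, which is the property the retrieval example relies on.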

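IV. Streaming

All chains built with LCEL expose the standard stream and astream methods, as well as a standard astream_log method that streams every step of an LCEL chain. These steps can be filtered to easily obtain the intermediate steps taken and other information.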

```python
from langchain_core.output_parsers import StrOutputParser
from langchain_core.prompts import ChatPromptTemplate
from langchain_openai import ChatOpenAI

prompt = ChatPromptTemplate.from_template("Tell me a joke about {topic}")
model = ChatOpenAI()
output_parser = StrOutputParser()

chain = prompt | model | output_parser

# Print each chunk as soon as the model produces it.
for s in chain.stream({"topic": "bears"}):
    print(s)
```

```
Why don't bears wear shoes?
Because they have bear feet!
```

V. Tool Usage

Making sure the LLM returns structured information that downstream applications can consume is essential.

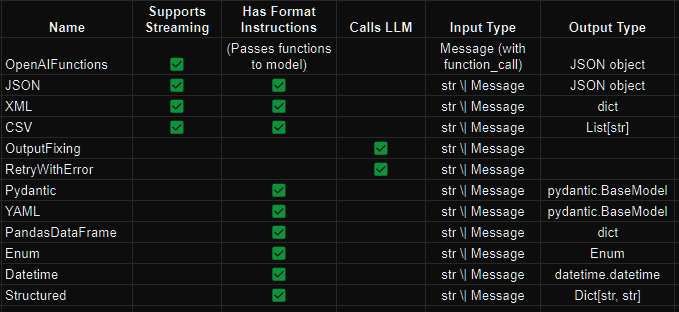

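LangChain provides many output parsers for converting LLM output into a more suitable format. Many of them support streaming partial results for structured formats such as JSON, XML, and CSV.

You can specify the output format (using Pydantic, JSON schema, or even a function), which makes the response easy to work with.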

```python
from langchain.output_parsers.openai_functions import JsonOutputFunctionsParser
from langchain.utils.openai_functions import convert_pydantic_to_openai_function
from langchain_core.prompts import ChatPromptTemplate
from langchain_core.pydantic_v1 import BaseModel, Field


class Joke(BaseModel):
    """Joke to tell user."""

    setup: str = Field(description="question to set up a joke")
    punchline: str = Field(description="answer to resolve the joke")


# Describe the Joke schema as an OpenAI function definition.
openai_functions = [convert_pydantic_to_openai_function(Joke)]
parser = JsonOutputFunctionsParser()

# `prompt` and `model` are reused from the streaming example.
chain = prompt | model.bind(functions=openai_functions) | parser

chain.invoke({"topic": "bears"})
# {'setup': "Why don't bears wear shoes?",
#  'punchline': 'Because they have bear feet!'}
```

Every output parser also has a built-in get_format_instructions method, which returns instructions that tell the LLM how to respond.

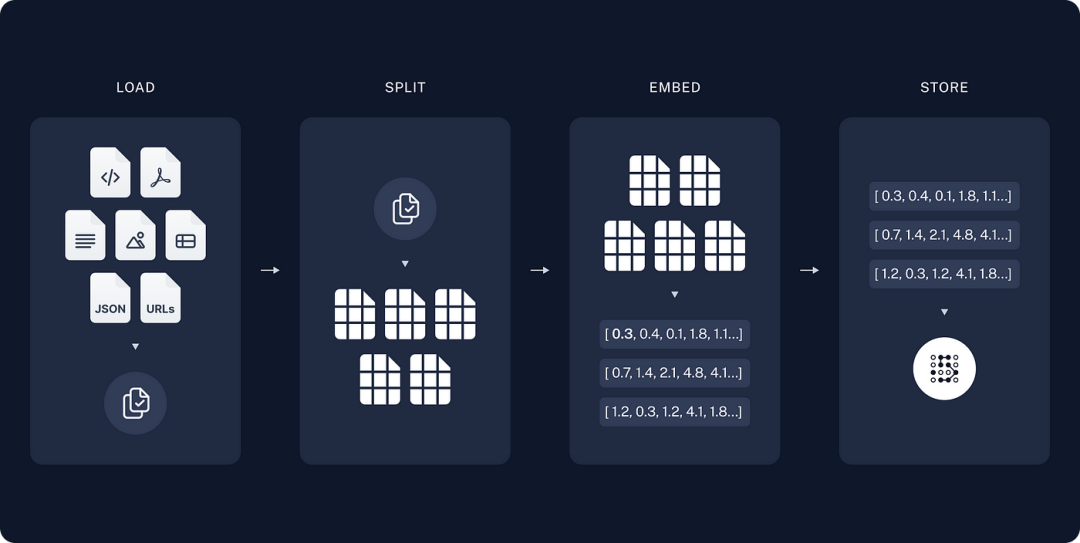

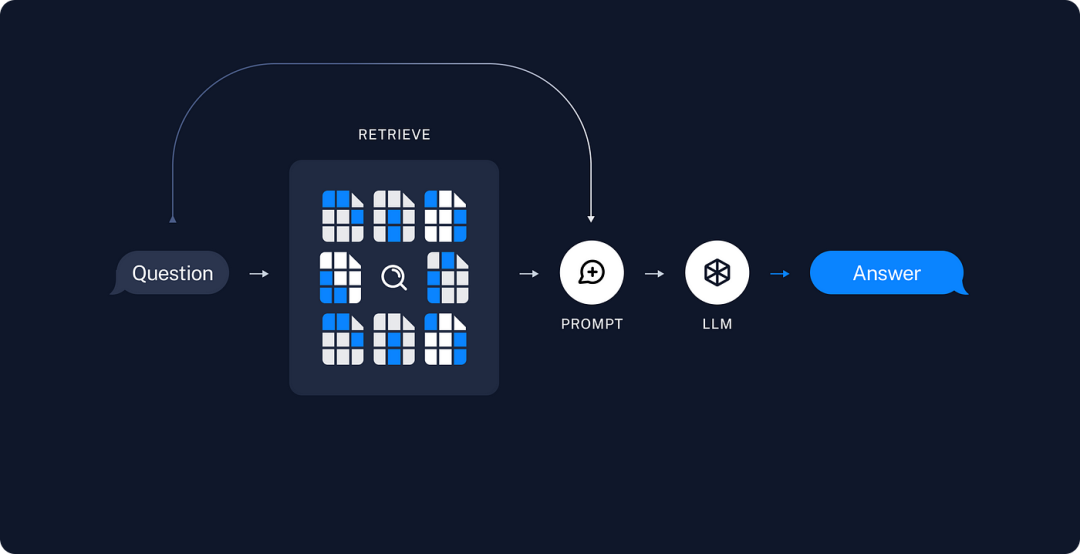
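The idea behind format instructions and output parsing can be illustrated without any library. The sketch below is a simplified stand-in, not LangChain's parser: the helper names `format_instructions` and `parse_reply` are hypothetical, and the model reply is a hard-coded string rather than a real LLM response.

```python
import json
from dataclasses import dataclass, fields


@dataclass
class Joke:
    setup: str
    punchline: str


def format_instructions(schema: type) -> str:
    """Build a prompt fragment telling the model which JSON keys to return."""
    names = ", ".join(f'"{field.name}"' for field in fields(schema))
    return f"Respond with a JSON object containing exactly these string keys: {names}."


def parse_reply(schema: type, raw: str):
    """Parse a JSON reply and check that it matches the schema's field names."""
    data = json.loads(raw)
    expected = {field.name for field in fields(schema)}
    if not isinstance(data, dict) or set(data) != expected:
        raise ValueError(f"expected a JSON object with keys {sorted(expected)}")
    return schema(**data)


# Hard-coded stand-in for a model reply (assumption: the model followed the instructions).
raw_reply = '{"setup": "Why don\'t bears wear shoes?", "punchline": "Because they have bear feet!"}'

instructions = format_instructions(Joke)
joke = parse_reply(Joke, raw_reply)
print(instructions)
print(joke.punchline)
```

The instructions are appended to the prompt, and the parser turns the raw text back into a typed object, so downstream code never has to handle free-form strings.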

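VI. Retrieval-Augmented Generation (RAG)

Now let's turn to RAG. Being able to easily combine a user's private data with an LLM is an extremely important part of LangChain.

For data loading, LangChain provides 15 different text splitters, with optimizations for specific document types such as HTML and Markdown.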
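As a rough illustration of what a text splitter does, the following plain-Python sketch cuts text into fixed-size chunks with a configurable overlap. It is deliberately naive: the function `split_text` is hypothetical, and LangChain's own splitters are more structure-aware than this character-based version.

```python
from typing import List


def split_text(text: str, chunk_size: int, overlap: int) -> List[str]:
    """Split text into chunks of at most `chunk_size` characters.

    Consecutive chunks share `overlap` characters so that context
    at a chunk boundary is not lost entirely.
    """
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("need chunk_size > 0 and 0 <= overlap < chunk_size")
    chunks = []
    start = 0
    while start < len(text):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the current chunk already reaches the end of the text
        start += chunk_size - overlap
    return chunks


print(split_text("abcdefghij", chunk_size=4, overlap=1))  # ['abcd', 'defg', 'ghij']
```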

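For large-scale text workloads, LangChain provides an indexing API that lets users re-ingest content while skipping the parts that have not changed, saving time and cost on high-volume workloads.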
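One common way to skip unchanged content is to store a hash of each document and compare it on the next run. The sketch below shows that idea in plain Python; it is an assumption about the general technique, not a description of how LangChain's indexing API is implemented, and `sync_index` is a hypothetical name.

```python
import hashlib
from typing import Dict


def content_hash(text: str) -> str:
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def sync_index(index: Dict[str, str], documents: Dict[str, str]) -> Dict[str, int]:
    """Update `index` (doc id -> content hash) and report what happened.

    Only added or updated documents would need to be re-embedded and
    written to a vector store; skipped ones cost nothing.
    """
    stats = {"added": 0, "updated": 0, "skipped": 0}
    for doc_id, text in documents.items():
        digest = content_hash(text)
        if doc_id not in index:
            stats["added"] += 1
        elif index[doc_id] != digest:
            stats["updated"] += 1
        else:
            stats["skipped"] += 1
            continue  # unchanged: no re-embedding needed
        index[doc_id] = digest
    return stats


index: Dict[str, str] = {}
first_run = sync_index(index, {"a": "hello", "b": "world"})
second_run = sync_index(index, {"a": "hello", "b": "world!"})
print(first_run)   # {'added': 2, 'updated': 0, 'skipped': 0}
print(second_run)  # {'added': 0, 'updated': 1, 'skipped': 1}
```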

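For retrieval, LangChain supports advanced strategies from academia such as FLARE and HyDE, techniques developed by LangChain itself such as Parent Document and Self Query, and solutions from elsewhere in the industry such as Multi-Query.

It also addresses practical production concerns, such as per-user retrieval, which is essential for any application that stores documents from multiple users together.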
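Per-user retrieval boils down to filtering by owner before ranking, so that one user's query can never surface another user's documents. The sketch below shows this with a toy keyword-overlap score in plain Python; the `Doc` class and `retrieve_for_user` function are hypothetical, and a real system would apply the same filter to a vector store query.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Doc:
    text: str
    owner: str


def retrieve_for_user(docs: List[Doc], user_id: str, query: str, k: int = 2) -> List[Doc]:
    """Return the top-k documents owned by `user_id`, ranked by word overlap."""
    # Filter first: documents from other users are never scored at all.
    candidates = [doc for doc in docs if doc.owner == user_id]
    query_terms = set(query.lower().split())
    ranked = sorted(
        candidates,
        key=lambda doc: len(query_terms & set(doc.text.lower().split())),
        reverse=True,
    )
    return ranked[:k]


docs = [
    Doc("alice invoice march", "alice"),
    Doc("bob invoice march", "bob"),
    Doc("alice holiday notes", "alice"),
]
hits = retrieve_for_user(docs, "alice", "invoice march", k=1)
print([doc.text for doc in hits])  # ['alice invoice march']
```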

Further retrieval approaches are also available, such as EmbedChain and GPTResearcher.

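VII. Agents

2024 is shaping up to be the year of agents. LangChain supports the following for building them:

- Integrations with third-party tools

- Ways to structure LLM responses

- Flexible ways of invoking tools (LCEL)

- Prompting strategies such as ReAct

- Function and tool calling

Here is a simple example showing how easy it is to get an agent running with LangChain: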

```python
from langchain import hub
from langchain.agents import AgentExecutor, create_openai_functions_agent
from langchain_community.tools.tavily_search import TavilySearchResults
from langchain_openai import ChatOpenAI

# Prompt
# Get the prompt to use - you can modify this!
prompt = hub.pull("hwchase17/openai-functions-agent")

# LLM
llm = ChatOpenAI(model="gpt-3.5-turbo", temperature=0)

# Tools
search = TavilySearchResults()
tools = [search]

# Agent
agent = create_openai_functions_agent(llm, tools, prompt)
result = agent.invoke({"input": "what's the weather in SF?", "intermediate_steps": []})

result.tool
# 'tavily_search_results_json'

result.tool_input
# {'query': 'weather in San Francisco'}

result
# AgentActionMessageLog(tool='tavily_search_results_json', tool_input={'query': 'weather in San Francisco'}, log="\nInvoking: `tavily_search_results_json` with `{'query': 'weather in San Francisco'}`\n\n\n", message_log=[AIMessage(content='', additional_kwargs={'function_call': {'arguments': '{\n "query": "weather in San Francisco"\n}', 'name': 'tavily_search_results_json'}})])

# Agent Executor
agent_executor = AgentExecutor(agent=agent, tools=tools)
agent_executor.invoke({"input": "what is the weather in sf?"})
# {'input': 'what is the weather in sf?',
#  'output': 'The weather in San Francisco is currently not available.
#  However, you can check the weather in San Francisco in January 2024 [here](https://www.whereandwhen.net/when/north-america/california/san-francisco-ca/january/).'}

# Streaming
for step in agent_executor.stream({"input": "what is the weather in sf?"}):
    print(step)
# {'actions': [AgentActionMessageLog(tool='tavily_search_results_json', tool_input={'query': 'weather in San Francisco'}, log="\nInvoking: `tavily_search_results_json` with `{'query': 'weather in San Francisco'}`\n\n\n", message_log=[AIMessage(content='', additional_kwargs={'function_call': {'arguments': '{\n "query": "weather in San Francisco"\n}', 'name': 'tavily_search_results_json'}})])], 'messages': [AIMe...
```

Also worth noting is the langgraph library, which lets you build language agents as graphs.

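langgraph lets users customize the agent's loop, for example by adding:

- An explicit planning step

- An explicit response step

Alternatively, the loop can be hard-coded to call a specific tool first. This lets users build more complex and more efficient language agents.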

```python
from langgraph.graph import END, Graph

# `agent`, `execute_tools`, and `should_continue` are assumed to be defined elsewhere.
workflow = Graph()

# Add the agent node, we give it name `agent` which we will use later
workflow.add_node("agent", agent)
# Add the tools node, we give it name `tools` which we will use later
workflow.add_node("tools", execute_tools)

# Set the entrypoint as `agent`
# This means that this node is the first one called
workflow.set_entry_point("agent")

# We now add a conditional edge
workflow.add_conditional_edges(
    # First, we define the start node. We use `agent`.
    # This means these are the edges taken after the `agent` node is called.
    "agent",
    # Next, we pass in the function that will determine which node is called next.
    should_continue,
    # Finally we pass in a mapping.
    # The keys are strings, and the values are other nodes.
    # END is a special node marking that the graph should finish.
    # What will happen is we will call `should_continue`, and then the output of that
    # will be matched against the keys in this mapping.
    # Based on which one it matches, that node will then be called.
    {
        # If `tools`, then we call the tool node.
        "continue": "tools",
        # Otherwise we finish.
        "exit": END,
    },
)

# We now add a normal edge from `tools` to `agent`.
# This means that after `tools` is called, `agent` node is called next.
workflow.add_edge("tools", "agent")

# Finally, we compile it!
# This compiles it into a LangChain Runnable,
# meaning you can use it as you would any other runnable
chain = workflow.compile()
```
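To see what the conditional edge and the `tools` to `agent` edge amount to at run time, here is a toy executor in plain Python. It only mimics the control flow described above and is not how langgraph is implemented; `run_graph`, `TOY_END`, and the three toy node functions are all hypothetical.

```python
from typing import Any, Callable, Dict, Tuple

TOY_END = "__end__"  # hypothetical sentinel playing the role of END

State = Dict[str, Any]


def run_graph(
    nodes: Dict[str, Callable[[State], State]],
    edges: Dict[str, str],
    conditional: Dict[str, Tuple[Callable[[State], str], Dict[str, str]]],
    entry: str,
    state: State,
    max_steps: int = 10,
) -> State:
    """Run nodes in sequence, following conditional edges where defined."""
    current = entry
    for _ in range(max_steps):
        state = nodes[current](state)
        if current in conditional:
            # Call the router, then look its answer up in the mapping.
            router, mapping = conditional[current]
            next_node = mapping[router(state)]
        else:
            next_node = edges[current]
        if next_node == TOY_END:
            return state
        current = next_node
    raise RuntimeError("graph did not finish within max_steps")


def toy_agent(state: State) -> State:
    # Pretend the model asks for a tool until it has one observation.
    return {**state, "wants_tool": len(state["observations"]) < 1}


def toy_execute_tools(state: State) -> State:
    return {**state, "observations": state["observations"] + ["sunny"]}


def toy_should_continue(state: State) -> str:
    return "continue" if state["wants_tool"] else "exit"


final_state = run_graph(
    nodes={"agent": toy_agent, "tools": toy_execute_tools},
    edges={"tools": "agent"},
    conditional={"agent": (toy_should_continue, {"continue": "tools", "exit": TOY_END})},
    entry="agent",
    state={"observations": []},
)
print(final_state)  # {'observations': ['sunny'], 'wants_tool': False}
```

The run visits `agent`, then `tools`, then `agent` again before exiting, which is the cycle that a plain linear chain cannot express.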

langgraph also powers OpenGPTs.
