The Road to AI Agent Development, Engineering Series (2): One-Click Deployment of the Dify Agent Development Platform

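Blog guide:

AI: Engineering Series

The Road to AI Agent Development, Engineering Series (1): Speeding Up AI Agent Development with Docker

The Road to AI Agent Development, Engineering Series (2): One-Click Deployment of the Dify Agent Development Platform

The Road to AI Agent Development, Engineering Series (3): One-Click Deployment of the Ollama LLM Inference Framework

The Road to AI Agent Development, Engineering Series (4): One-Click Deployment of the Xinference LLM Inference Framework

The Road to AI Agent Development, Engineering Series (5): One-Click Deployment of the LocalAI LLM Inference Framework

AI: Model Series

The Road to AI Agent Development, Model Series (1): Installing, Deploying, and Using the LLaMA-Factory Training Framework on Networks in Mainland China

The Road to AI Agent Development, Model Series (2): DeepSeek-V2-Chat Training and Inference in Practice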

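1. Introduction

I have only just started writing on CSDN. After work I was looking into how CSDN works and saw that the activity section had launched a topic called "The Future of Agent AI". Most of my recent work has been in exactly this area, so I am using the topic as an occasion to write down some of my own views.

To sum up my current work: it is AI agent development. Our team built up some experience with langchain and put together an internal AI agent development platform that supports knowledge bases, tool calling, and configuration of multiple large models. Then ByteDance released coze and Baidu released Qianfan, and it became clear how fast AI agent development platforms are moving. Compared with coze, with its rich plugin tools, stable models, and mature workflows, our team simply cannot keep up given our headcount: everyone has to deliver their OKRs in full and build their own AI agents, and only whatever energy is left goes into improving the platform.

Before the May Day holiday, my manager dropped into the group chat an introduction to a startup building AI applications on Dify. I looked into Dify with a learner's mindset: it can fairly be called an open-source coze. It supports one-click integration of multiple large models and plugin tools, automatic chunking and indexing for knowledge bases, and workflow development. These are exactly the features our internal platform needs to add, so I started deploying and debugging it right away.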

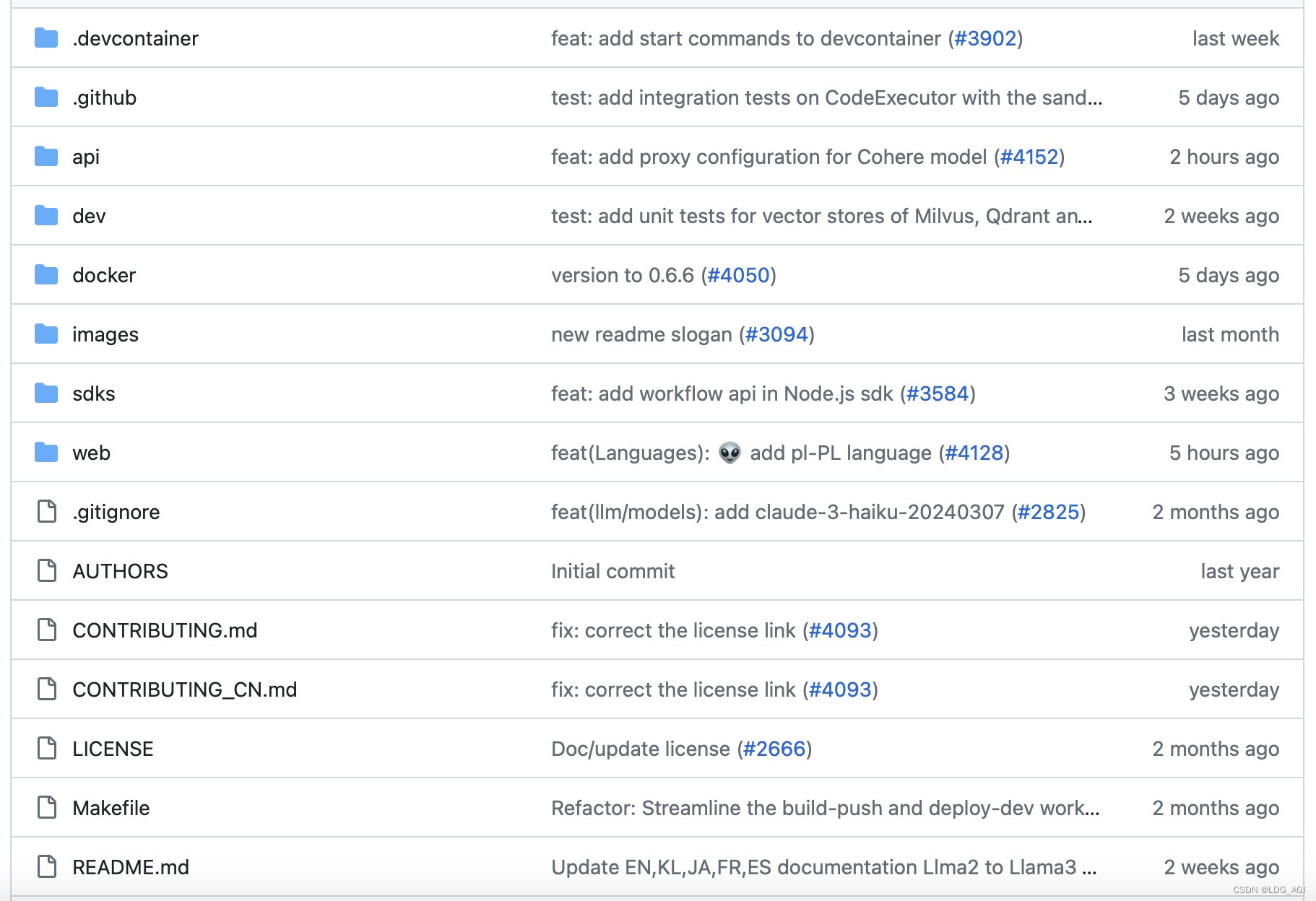

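First, the Dify project on GitHub: https://github.com/langgenius/dify

"The project was created by former members of the Tencent Cloud DevOps team. We found that developing GPT applications on top of the OpenAI API was somewhat cumbersome. With our years of R&D experience in developer productivity tools, we hope to enable more people to build interesting applications using natural language."

Dify is maintained by a full-time team of 10+ and 100+ community contributors, and it iterates very quickly. When I downloaded it the version was 0.6.3; it is now 0.6.6, with roughly one release a week. A word of advice: when you use such a fast-moving open-source project inside a company, hold off on forking and customizing it. Otherwise you finish one change just as the community ships an upgrade, the logic conflicts, and the two cannot be merged. I once heard of a team that built an "in-house" deep learning training framework on tensorflow 1.x; years later the community has moved to 2.x and that team is still struggling along on 1.x. But I digress.

Back to this article. Its title, "The Future of Agent AI: An Introduction to the Dify Project", is a bit of an exaggeration. Still, I believe that understanding Dify and the upstream and downstream technologies and architecture it depends on, and using Dify to quickly build AI agent demo prototypes, genuinely helps move AI agent development forward. Today I will briefly walk through deploying Dify; later posts will share hands-on experience building AI agents on Dify and the underlying technologies that AI agent development relies on.

2. Deploying Dify with a single docker compose command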

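First, download the Dify project to the server:

    git clone https://github.com/langgenius/dify.git

The project consists of two main parts, api (backend) and web (frontend); I will analyze the code in a later post. Enter the docker directory:

    cd dify/docker

The directory contains several files. docker-compose.yaml starts all services and their dependencies directly through docker compose. docker-compose.middleware.yaml starts only the dependencies first, such as the relational database and the vector database, so that api and web can then be started separately. The separation is very well done.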
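The two deployment paths can be sketched as a small Python helper that only builds the command lines. The file names come from the docker directory described above; the function name and the `mode` labels are my own for illustration, and `-f` and `up -d` are standard Docker Compose options.

```python
import shlex
from typing import List

# File names as they appear in dify/docker; the mode names are illustrative labels.
COMPOSE_FILES = {
    "full": "docker-compose.yaml",                    # all services plus dependencies
    "middleware": "docker-compose.middleware.yaml",   # dependencies only
}


def compose_up_command(mode: str = "full") -> List[str]:
    """Build the `docker compose` invocation for the chosen deployment path."""
    if mode not in COMPOSE_FILES:
        raise ValueError(f"unknown mode: {mode!r}")
    return ["docker", "compose", "-f", COMPOSE_FILES[mode], "up", "-d"]


if __name__ == "__main__":
    # Print the commands instead of running them, so the sketch has no side effects.
    for mode in COMPOSE_FILES:
        print(shlex.join(compose_up_command(mode)))
```

Passing the returned list to `subprocess.run(..., check=True)` from inside the docker directory would actually start the containers. With the middleware file, api and web still have to be started separately afterwards.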

Let's look at the source of docker-compose.yaml first:

    version: '3'
    services:
      # API service
      api:
        image: langgenius/dify-api:0.6.6
        restart: always
        environment:
          # Startup mode, 'api' starts the API server.
          MODE: api
          # The log level for the application. Supported values are `DEBUG`, `INFO`, `WARNING`, `ERROR`, `CRITICAL`
          LOG_LEVEL: INFO
          # A secret key that is used for securely signing the session cookie and encrypting sensitive information on the database. You can generate a strong key using `openssl rand -base64 42`.
          SECRET_KEY: sk-9f73s3ljTXVcMT3Blb3ljTqtsKiGHXVcMT3BlbkFJLK7U
          # The base URL of console application web frontend, refers to the Console base URL of WEB service if console domain is
          # different from api or web app domain.
          # example: http://cloud.dify.ai
          CONSOLE_WEB_URL: ''
          # Password for admin user initialization.
          # If left unset, admin user will not be prompted for a password when creating the initial admin account.
          INIT_PASSWORD: ''
          # The base URL of console application api server, refers to the Console base URL of WEB service if console domain is
          # different from api or web app domain.
          # example: http://cloud.dify.ai
          CONSOLE_API_URL: ''
          # The URL prefix for Service API endpoints, refers to the base URL of the current API service if api domain is
          # different from console domain.
          # example: http://api.dify.ai
          SERVICE_API_URL: ''
          # The URL prefix for Web APP frontend, refers to the Web App base URL of WEB service if web app domain is different from
          # console or api domain.
          # example: http://udify.app
          APP_WEB_URL: ''
          # File preview or download Url prefix.
          # used to display File preview or download Url to the front-end or as Multi-model inputs;
          # Url is signed and has expiration time.
          FILES_URL: ''
          # When enabled, migrations will be executed prior to application startup and the application will start after the migrations have completed.
          MIGRATION_ENABLED: 'true'
          # The configurations of postgres database connection.
          # It is consistent with the configuration in the 'db' service below.
          DB_USERNAME: postgres
          DB_PASSWORD: difyai123456
          DB_HOST: db
          DB_PORT: 5432
          DB_DATABASE: dify
          # The configurations of redis connection.
          # It is consistent with the configuration in the 'redis' service below.
          REDIS_HOST: redis
          REDIS_PORT: 6379
          REDIS_USERNAME: ''
          REDIS_PASSWORD: difyai123456
          REDIS_USE_SSL: 'false'
          # use redis db 0 for redis cache
          REDIS_DB: 0
          # The configurations of celery broker.
          # Use redis as the broker, and redis db 1 for celery broker.
          CELERY_BROKER_URL: redis://:difyai123456@redis:6379/1
          # Specifies the allowed origins for cross-origin requests to the Web API, e.g. https://dify.app or * for all origins.
          WEB_API_CORS_ALLOW_ORIGINS: '*'
          # Specifies the allowed origins for cross-origin requests to the console API, e.g. https://cloud.dify.ai or * for all origins.
          CONSOLE_CORS_ALLOW_ORIGINS: '*'
          # CSRF Cookie settings
          # Controls whether a cookie is sent with cross-site requests,
          # providing some protection against cross-site request forgery attacks
          #
          # Default: `SameSite=Lax, Secure=false, HttpOnly=true`
          # This default configuration supports same-origin requests using either HTTP or HTTPS,
          # but does not support cross-origin requests. It is suitable for local debugging purposes.
          #
          # If you want to enable cross-origin support,
          # you must use the HTTPS protocol and set the configuration to `SameSite=None, Secure=true, HttpOnly=true`.
          #
          # The type of storage to use for storing user files. Supported values are `local` and `s3` and `azure-blob` and `google-storage`, Default: `local`
          STORAGE_TYPE: local
          # The path to the local storage directory, the directory relative the root path of API service codes or absolute path. Default: `storage` or `/home/john/storage`.
          # only available when STORAGE_TYPE is `local`.
          STORAGE_LOCAL_PATH: storage
          # The S3 storage configurations, only available when STORAGE_TYPE is `s3`.
          S3_ENDPOINT: 'https://xxx.r2.cloudflarestorage.com'
          S3_BUCKET_NAME: 'difyai'
          S3_ACCESS_KEY: 'ak-difyai'
          S3_SECRET_KEY: 'sk-difyai'
          S3_REGION: 'us-east-1'
          # The Azure Blob storage configurations, only available when STORAGE_TYPE is `azure-blob`.
          AZURE_BLOB_ACCOUNT_NAME: 'difyai'
          AZURE_BLOB_ACCOUNT_KEY: 'difyai'
          AZURE_BLOB_CONTAINER_NAME: 'difyai-container'
          AZURE_BLOB_ACCOUNT_URL: 'https://<your_account_name>.blob.core.windows.net'
          # The Google storage configurations, only available when STORAGE_TYPE is `google-storage`.
          GOOGLE_STORAGE_BUCKET_NAME: 'yout-bucket-name'
          GOOGLE_STORAGE_SERVICE_ACCOUNT_JSON_BASE64: 'your-google-service-account-json-base64-string'
          # The type of vector store to use. Supported values are `weaviate`, `qdrant`, `milvus`, `relyt`.
          VECTOR_STORE: weaviate
          # The Weaviate endpoint URL. Only available when VECTOR_STORE is `weaviate`.
          WEAVIATE_ENDPOINT: http://weaviate:8080
          # The Weaviate API key.
          WEAVIATE_API_KEY: WVF5YThaHlkYwhGUSmCRgsX3tD5ngdN8pkih
          # The Qdrant endpoint URL. Only available when VECTOR_STORE is `qdrant`.
          QDRANT_URL: http://qdrant:6333
          # The Qdrant API key.
          QDRANT_API_KEY: difyai123456
          # The Qdrant client timeout setting.
          QDRANT_CLIENT_TIMEOUT: 20
          # The Qdrant client enable gRPC mode.
          QDRANT_GRPC_ENABLED: 'false'
          # The Qdrant server gRPC mode PORT.
          QDRANT_GRPC_PORT: 6334
          # Milvus configuration Only available when VECTOR_STORE is `milvus`.
          # The milvus host.
          MILVUS_HOST: 127.0.0.1
          # The milvus port.
          MILVUS_PORT: 19530
          # The milvus username.
          MILVUS_USER: root
          # The milvus password.
          MILVUS_PASSWORD: Milvus
          # The milvus tls switch.
          MILVUS_SECURE: 'false'
          # relyt configurations
          RELYT_HOST: db
          RELYT_PORT: 5432
          RELYT_USER: postgres
          RELYT_PASSWORD: difyai123456
          RELYT_DATABASE: postgres
          # Mail configuration, support: resend, smtp
          MAIL_TYPE: ''
          # default send from email address, if not specified
          MAIL_DEFAULT_SEND_FROM: 'YOUR EMAIL FROM (eg: no-reply <no-reply@dify.ai>)'
          SMTP_SERVER: ''
          SMTP_PORT: 587
          SMTP_USERNAME: ''
          SMTP_PASSWORD: ''
          SMTP_USE_TLS: 'true'
          # the api-key for resend (https://resend.com)
          RESEND_API_KEY: ''
          RESEND_API_URL: https://api.resend.com
          # The DSN for Sentry error reporting. If not set, Sentry error reporting will be disabled.
          SENTRY_DSN: ''
          # The sample rate for Sentry events. Default: `1.0`
          SENTRY_TRACES_SAMPLE_RATE: 1.0
          # The sample rate for Sentry profiles. Default: `1.0`
          SENTRY_PROFILES_SAMPLE_RATE: 1.0
          # Notion import configuration, support public and internal
          NOTION_INTEGRATION_TYPE: public
          NOTION_CLIENT_SECRET: you-client-secret
          NOTION_CLIENT_ID: you-client-id
          NOTION_INTERNAL_SECRET: you-internal-secret
          # The sandbox service endpoint.
          CODE_EXECUTION_ENDPOINT: "http://sandbox:8194"
          CODE_EXECUTION_API_KEY: dify-sandbox
          CODE_MAX_NUMBER: 9223372036854775807
          CODE_MIN_NUMBER: -9223372036854775808
          CODE_MAX_STRING_LENGTH: 80000
          TEMPLATE_TRANSFORM_MAX_LENGTH: 80000
          CODE_MAX_STRING_ARRAY_LENGTH: 30
          CODE_MAX_OBJECT_ARRAY_LENGTH: 30
          CODE_MAX_NUMBER_ARRAY_LENGTH: 1000
        depends_on:
          - db
          - redis
        volumes:
          # Mount the storage directory to the container, for storing user files.
          - ./volumes/app/storage:/app/api/storage
        # uncomment to expose dify-api port to host
        # ports:
        #   - "5001:5001"

      # worker service
      # The Celery worker for processing the queue.
      worker:
        image: langgenius/dify-api:0.6.6
        restart: always
        environment:
          # Startup mode, 'worker' starts the Celery worker for processing the queue.
          MODE: worker

          # --- All the configurations below are the same as those in the 'api' service. ---

          # The log level for the application. Supported values are `DEBUG`, `INFO`, `WARNING`, `ERROR`, `CRITICAL`
          LOG_LEVEL: INFO
          # A secret key that is used for securely signing the session cookie and encrypting sensitive information on the database. You can generate a strong key using `openssl rand -base64 42`.
          # same as the API service
          SECRET_KEY: sk-9f73s3ljTXVcMT3Blb3ljTqtsKiGHXVcMT3BlbkFJLK7U
          # The configurations of postgres database connection.
          # It is consistent with the configuration in the 'db' service below.
          DB_USERNAME: postgres
          DB_PASSWORD: difyai123456
          DB_HOST: db
          DB_PORT: 5432
          DB_DATABASE: dify
          # The configurations of redis cache connection.
          REDIS_HOST: redis
          REDIS_PORT: 6379
          REDIS_USERNAME: ''
          REDIS_PASSWORD: difyai123456
          REDIS_DB: 0
          REDIS_USE_SSL: 'false'
          # The configurations of celery broker.
          CELERY_BROKER_URL: redis://:difyai123456@redis:6379/1
          # The type of storage to use for storing user files. Supported values are `local` and `s3` and `azure-blob`, Default: `local`
          STORAGE_TYPE: local
          STORAGE_LOCAL_PATH: storage
          # The S3 storage configurations, only available when STORAGE_TYPE is `s3`.
          S3_ENDPOINT: 'https://xxx.r2.cloudflarestorage.com'
          S3_BUCKET_NAME: 'difyai'
          S3_ACCESS_KEY: 'ak-difyai'
          S3_SECRET_KEY: 'sk-difyai'
          S3_REGION: 'us-east-1'
          # The Azure Blob storage configurations, only available when STORAGE_TYPE is `azure-blob`.
          AZURE_BLOB_ACCOUNT_NAME: 'difyai'
          AZURE_BLOB_ACCOUNT_KEY: 'difyai'
          AZURE_BLOB_CONTAINER_NAME: 'difyai-container'
          AZURE_BLOB_ACCOUNT_URL: 'https://<your_account_name>.blob.core.windows.net'
          # The type of vector store to use. Supported values are `weaviate`, `qdrant`, `milvus`, `relyt`.
          VECTOR_STORE: weaviate
          # The Weaviate endpoint URL. Only available when VECTOR_STORE is `weaviate`.
          WEAVIATE_ENDPOINT: http://weaviate:8080
          # The Weaviate API key.
          WEAVIATE_API_KEY: WVF5YThaHlkYwhGUSmCRgsX3tD5ngdN8pkih
          # The Qdrant endpoint URL. Only available when VECTOR_STORE is `qdrant`.
          QDRANT_URL: http://qdrant:6333
          # The Qdrant API key.
          QDRANT_API_KEY: difyai123456
          # The Qdrant client timeout setting.
          QDRANT_CLIENT_TIMEOUT: 20
          # The Qdrant client enable gRPC mode.
          QDRANT_GRPC_ENABLED: 'false'
          # The Qdrant server gRPC mode PORT.
          QDRANT_GRPC_PORT: 6334
          # Milvus configuration Only available when VECTOR_STORE is `milvus`.
          # The milvus host.
          MILVUS_HOST: 127.0.0.1
          # The milvus port.
          MILVUS_PORT: 19530
          # The milvus username.
          MILVUS_USER: root
          # The milvus password.
          MILVUS_PASSWORD: Milvus
          # The milvus tls switch.
          MILVUS_SECURE: 'false'
          # Mail configuration, support: resend
          MAIL_TYPE: ''
          # default send from email address, if not specified
          MAIL_DEFAULT_SEND_FROM: 'YOUR EMAIL FROM (eg: no-reply <no-reply@dify.ai>)'
          # the api-key for resend (https://resend.com)
          RESEND_API_KEY: ''
          RESEND_API_URL: https://api.resend.com
          # relyt configurations
          RELYT_HOST: db
          RELYT_PORT: 5432
          RELYT_USER: postgres
          RELYT_PASSWORD: difyai123456
          RELYT_DATABASE: postgres
          # Notion import configuration, support public and internal
          NOTION_INTEGRATION_TYPE: public
          NOTION_CLIENT_SECRET: you-client-secret
          NOTION_CLIENT_ID: you-client-id
          NOTION_INTERNAL_SECRET: you-internal-secret
        depends_on:
          - db
          - redis
        volumes:
          # Mount the storage directory to the container, for storing user files.
          - ./volumes/app/storage:/app/api/storage

      # Frontend web application.
      web:
        image: langgenius/dify-web:0.6.6
        restart: always
        environment:
          # The base URL of console application api server, refers to the Console base URL of WEB service if console domain is
          # different from api or web app domain.
          # example: http://cloud.dify.ai
          CONSOLE_API_URL: ''
          # The URL for Web APP api server, refers to the Web App base URL of WEB service if web app domain is different from
          # console or api domain.
          # example: http://udify.app
          APP_API_URL: ''
          # The DSN for Sentry error reporting. If not set, Sentry error reporting will be disabled.
          SENTRY_DSN: ''
        # uncomment to expose dify-web port to host
        # ports:
        #   - "3000:3000"

      # The postgres database.
      db:
        image: postgres:15-alpine
        restart: always
        environment:
          PGUSER: postgres
          # The password for the default postgres user.
          POSTGRES_PASSWORD: difyai123456
          # The name of the default postgres database.
          POSTGRES_DB: dify
          # postgres data directory
          PGDATA: /var/lib/postgresql/data/pgdata
        volumes:
          - ./volumes/db/data:/var/lib/postgresql/data
        # uncomment to expose db(postgresql) port to host
        # ports:
        #   - "5432:5432"
        healthcheck:
          test: [ "CMD", "pg_isready" ]
          interval: 1s
          timeout: 3s
          retries: 30

      # The redis cache.
      redis:
        image: redis:6-alpine
        restart: always
        volumes:
          # Mount the redis data directory to the container.
          - ./volumes/redis/data:/data
        # Set the redis password when startup redis server.
        command: redis-server --requirepass difyai123456
        healthcheck:
          test: [ "CMD", "redis-cli", "ping" ]
        # uncomment to expose redis port to host
        # ports:
        #   - "6379:6379"

      # The Weaviate vector store.
      weaviate:
        image: semitechnologies/weaviate:1.19.0
        restart: always
        volumes:
          # Mount the Weaviate data directory to the container.
          - ./volumes/weaviate:/var/lib/weaviate
        environment:
          # The Weaviate configurations
          # You can refer to the [Weaviate](https://weaviate.io/developers/weaviate/config-refs/env-vars) documentation for more information.
          QUERY_DEFAULTS_LIMIT: 25
          AUTHENTICATION_ANONYMOUS_ACCESS_ENABLED: 'false'
          PERSISTENCE_DATA_PATH: '/var/lib/weaviate'
          DEFAULT_VECTORIZER_MODULE: 'none'
          CLUSTER_HOSTNAME: 'node1'
          AUTHENTICATION_APIKEY_ENABLED: 'true'
          AUTHENTICATION_APIKEY_ALLOWED_KEYS: 'WVF5YThaHlkYwhGUSmCRgsX3tD5ngdN8pkih'
          AUTHENTICATION_APIKEY_USERS: 'hello@dify.ai'
          AUTHORIZATION_ADMINLIST_ENABLED: 'true'
          AUTHORIZATION_ADMINLIST_USERS: 'hello@dify.ai'
        # uncomment to expose weaviate port to host
        # ports:
        #   - "8080:8080"

      # The DifySandbox
      sandbox:
        image: langgenius/dify-sandbox:0.1.0
        restart: always
        cap_add:
          # Why is sys_admin permission needed?
          # https://docs.dify.ai/getting-started/install-self-hosted/install-faq#id-16.-why-is-sys_admin-permission-needed
          - SYS_ADMIN
        environment:
          # The DifySandbox configurations
          API_KEY: dify-sandbox
          GIN_MODE: release
          WORKER_TIMEOUT: 15

      # Qdrant vector store.
      # uncomment to use qdrant as vector store.
      # (if uncommented, you need to comment out the weaviate service above,
      # and set VECTOR_STORE to qdrant in the api & worker service.)
      # qdrant:
      #   image: langgenius/qdrant:v1.7.3
      #   restart: always
      #   volumes:
      #     - ./volumes/qdrant:/qdrant/storage
      #   environment:
      #     QDRANT_API_KEY: 'difyai123456'
      #   # uncomment to expose qdrant port to host
      #   # ports:
      #   #   - "6333:6333"
      #   #   - "6334:6334"

      # The nginx reverse proxy.
      # used for reverse proxying the API service and Web service.
      nginx:
        image: nginx:latest
        restart: always
        volumes:
          - ./nginx/nginx.conf:/etc/nginx/nginx.conf
          - ./nginx/proxy.conf:/etc/nginx/proxy.conf
          - ./nginx/conf.d:/etc/nginx/conf.d
          #- ./nginx/ssl:/etc/ssl
        depends_on:
          - api
          - web
        ports:
          - "80:80"
          #- "443:443"

The stack consists of the following services and docker images:

- api: langgenius/dify-api:0.6.6

- worker: langgenius/dify-api:0.6.6

- web: langgenius/dify-web:0.6.6

- db: postgres:15-alpine

- redis: redis:6-alpine

- weaviate: semitechnologies/weaviate:1.19.0

- sandbox: langgenius/dify-sandbox:0.1.0

- nginx: nginx:latest
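The compose file ships with sample secrets (`SECRET_KEY`, `difyai123456`), and its comments note that the api and worker values must stay consistent with the `db` and `redis` services. Below is a minimal sketch of generating replacement values that stay consistent with each other. The function names are hypothetical, and the base64 step mirrors the `openssl rand -base64 42` hint in the file's comments; it is not something Dify itself provides.

```python
import base64
import secrets
from urllib.parse import urlsplit


def rotated_credentials() -> dict:
    """Return fresh values for the settings that share a secret in the compose file."""
    # Same shape as `openssl rand -base64 42`: 42 random bytes, base64-encoded.
    secret_key = base64.b64encode(secrets.token_bytes(42)).decode("ascii")
    # URL-safe alphabet, so the password can sit in the broker URL without escaping.
    redis_password = secrets.token_urlsafe(24)
    db_password = secrets.token_urlsafe(24)
    return {
        "SECRET_KEY": secret_key,               # identical in api and worker
        "DB_PASSWORD": db_password,             # api and worker
        "POSTGRES_PASSWORD": db_password,       # db service, must match DB_PASSWORD
        "REDIS_PASSWORD": redis_password,       # also goes into `--requirepass`
        "CELERY_BROKER_URL": f"redis://:{redis_password}@redis:6379/1",
    }


def broker_matches_redis(env: dict) -> bool:
    """Check that the Celery broker URL carries the same password as REDIS_PASSWORD."""
    return urlsplit(env["CELERY_BROKER_URL"]).password == env["REDIS_PASSWORD"]


if __name__ == "__main__":
    env = rotated_credentials()
    assert broker_matches_redis(env)
    for name, value in env.items():
        print(f"{name}: {value}")
```

The values still have to be copied into every place the old ones appear, including the `redis-server --requirepass` command. Changing `POSTGRES_PASSWORD` after the database volume has been initialized does not change the password stored in the existing data directory, so this is easiest to do before the first start.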

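One-click deployment with docker compose:

    docker compose up -d

It pulls the dependent images from Docker Hub one by one, which is rather satisfying to watch.

Once the images are downloaded and deployed, open the web UI at 123.123.123.123:80. Port 80 is used by default; you can change the nginx port in the docker compose file.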

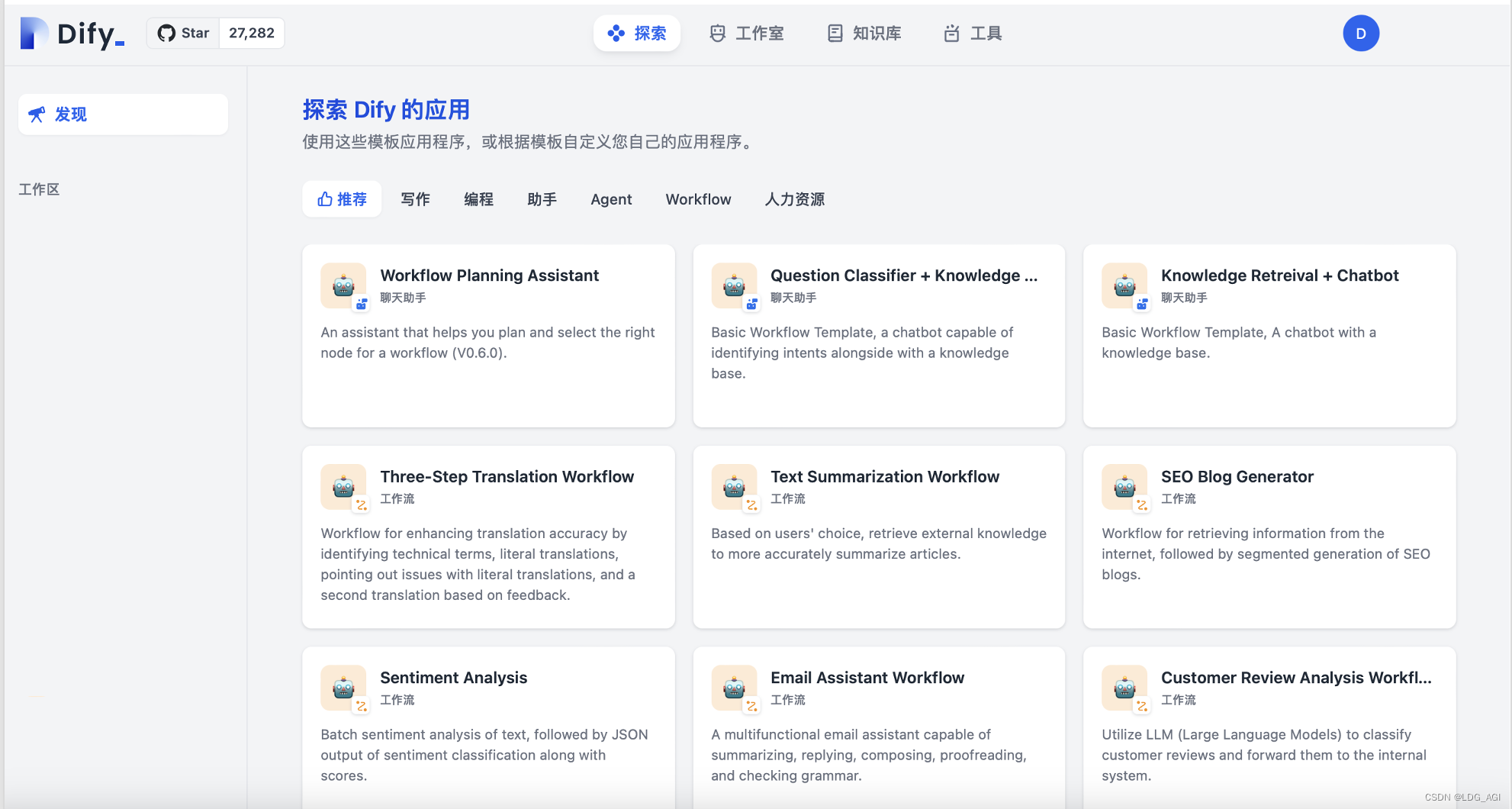

After account initialization and a few similar steps, you arrive at the Dify workspace. It really is that smooth.
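Right after `docker compose up -d` returns, the containers may still be starting, so the web UI can take a moment to respond. Here is a minimal polling sketch using only the standard library. The helper names, attempt count, and delay are assumptions chosen for illustration, and the address is the placeholder used above.

```python
import time
import urllib.error
import urllib.request
from typing import Callable


def http_probe(url: str, timeout: float = 3.0) -> bool:
    """Return True if the URL answers with any HTTP response at all."""
    try:
        with urllib.request.urlopen(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        return True    # the server answered, even if with an error status
    except OSError:
        return False   # connection refused, timeout, DNS failure, ...


def wait_until_ready(probe: Callable[[], bool], attempts: int = 30, delay: float = 2.0) -> bool:
    """Call `probe` until it succeeds or the attempts run out."""
    for attempt in range(attempts):
        if probe():
            return True
        if attempt < attempts - 1:
            time.sleep(delay)   # no need to sleep after the final attempt
    return False
```

A call such as `wait_until_ready(lambda: http_probe("http://123.123.123.123:80"))` returns `True` as soon as nginx starts answering, and `False` if nothing responds within the configured attempts.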

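3. Main features of Dify

3.1 Integration of multiple large language models

Compared with the fastGPT + oneApi approach, Dify is more tightly integrated:

- Models from LLM vendors: you only need to register an account with the vendor and apply for an API key to quickly try out and compare the vendors' models. The project also thoughtfully includes a sign-up link for each vendor, and most vendors grant a few million tokens for testing.

- Locally deployed models: models served by inference frameworks such as Xinference, Ollama, OpenLLM, and LocalAI can be connected with one click.

- HuggingFace open-source models: only an API key and a model name are needed, although the server must be able to reach HuggingFace from behind the firewall in mainland China.

- OpenAI-API-compatible: connects any API that follows the OpenAI specification. Many inference frameworks, such as Xinference and OpenLLM, support the OpenAI API specification directly, whereas LLM vendors generally design their own, perhaps to build an ecosystem or simply to be different. One more remark: small and mid-sized internet companies in China that do not build their own large models may need to trial or purchase models from several vendors and offer them to several business units, which calls for a proxy platform dedicated to cost accounting. That platform should ideally expose an OpenAI-compatible API to the business side; if it does not, you need to wrap it in an OpenAI-compatible layer when connecting it to Dify.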
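To make "OpenAI-compatible" concrete, here is a minimal sketch of the request a client sends to such an endpoint: a POST to `/chat/completions` with a bearer token and a `messages` list. The helper names, base URL, key, and model name are placeholders of my own; only the endpoint path and payload shape follow the OpenAI chat completions convention.

```python
import json
import urllib.request


def build_chat_request(base_url: str, api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build a POST request for an OpenAI-style /chat/completions endpoint."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def extract_reply(response_json: dict) -> str:
    """Pull the assistant text out of an OpenAI-style chat completion response."""
    return response_json["choices"][0]["message"]["content"]


if __name__ == "__main__":
    # Hypothetical local endpoint and model name; nothing is sent over the network here.
    request = build_chat_request("http://localhost:8000/v1", "sk-placeholder", "my-model", "Hello")
    print(request.get_method(), request.full_url)
```

An internal proxy that accepts this request shape and returns this response shape can be registered in Dify as an OpenAI-API-compatible provider, which is the wrapping layer described in the last bullet.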

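3.2 Rich built-in tools plus custom tool support

Dify ships with built-in tools including search engines, weather forecasts, Wikipedia, and Stable Diffusion (SD), and custom tools can be added through configuration. Once one team member integrates a tool, the whole team can reuse it.

3.3 Workflow

Just by connecting nodes you can build an AI agent within minutes, and the logic stays very clear.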
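Conceptually, a node-based workflow is a directed graph in which each node runs once its upstream nodes have finished. The toy sketch below illustrates that idea with the standard-library `graphlib` (Python 3.9+). It is not Dify's implementation, and the node names and the shared-state convention are invented for the example.

```python
from graphlib import TopologicalSorter
from typing import Callable, Dict, List

Node = Callable[[dict], dict]


def run_workflow(nodes: Dict[str, Node], depends_on: Dict[str, List[str]], state: dict) -> dict:
    """Run every node after its upstream nodes; each node returns updates to the shared state."""
    # TopologicalSorter expects a mapping of node -> nodes it depends on.
    graph = {name: depends_on.get(name, []) for name in nodes}
    for name in TopologicalSorter(graph).static_order():
        state = {**state, **nodes[name](state)}
    return state


if __name__ == "__main__":
    # Stand-in nodes: a real workflow would call a retriever and a model here.
    nodes = {
        "start": lambda s: {"question": s["input"].strip()},
        "retrieve": lambda s: {"context": f"notes about {s['question']}"},
        "llm": lambda s: {"answer": f"Answer to '{s['question']}' based on {s['context']}"},
    }
    depends_on = {"retrieve": ["start"], "llm": ["retrieve"]}
    print(run_workflow(nodes, depends_on, {"input": "  Dify  "})["answer"])
```

Because each node only reads from and writes to the shared state, the order of execution follows entirely from the connections, which is what keeps a visual workflow easy to read.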

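3.4 Agent orchestration

Write the prompt, import a knowledge base, add tools, choose a model, run tests, and publish as an API: the whole process in one place.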
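Once an agent is published as an API, other services can call it over HTTP. The sketch below builds such a call under the assumption that the app exposes Dify's `chat-messages` endpoint with `inputs`, `query`, `response_mode`, and `user` fields. The function name, address, and key are placeholders, and the exact request format should be checked against the API page of your own app.

```python
import json
import urllib.request


def build_agent_request(api_base: str, app_key: str, query: str, user: str) -> urllib.request.Request:
    """Build a blocking chat request for a published app (assumed endpoint shape)."""
    body = {
        "inputs": {},                   # values for variables defined in the app, if any
        "query": query,                 # the end user's message
        "response_mode": "blocking",    # wait for the full answer instead of streaming
        "user": user,                   # caller-chosen identifier for the end user
    }
    return urllib.request.Request(
        url=api_base.rstrip("/") + "/chat-messages",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {app_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    # Placeholder address and key; the request is only printed, not sent.
    request = build_agent_request("http://123.123.123.123/v1", "app-placeholder", "Hello", "user-1")
    print(request.get_method(), request.full_url)
```

Sending the request with `urllib.request.urlopen(request)` and decoding the JSON body should then yield the agent's reply, assuming the endpoint behaves as described.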

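Dify has many more features that I won't go through here; the official feature table lists the rest.

4. Summary

It is almost time to leave work. I only meant to take part in a topic activity, yet ended up writing a few thousand words, perhaps because I put in too many personal reflections. If you found this interesting, feel free to follow, like, bookmark, and comment; your encouragement is what keeps me writing.

This article first shared some views on Agent AI based on my own work, then walked through the quick deployment of Dify, and finally described Dify's main features. I believe Dify's progress will make AI agent development more efficient and lead to more interesting and valuable AI applications.

Finally, my view on where AI agents are headed, in terms of traffic and users. From 2000 to 2004, portals such as Sina, Sohu, and NetEase were the gateway to traffic. From 2004 to 2014, it was search engines, led by Baidu. From 2014 to 2024, it was mobile-internet recommendation systems such as Douyin, Kuaishou, Weibo, and Xiaohongshu. Whoever holds the traffic gateway holds the users, and whoever holds the users holds monetization. From 2024 onward, it is quite likely that an AI-based platform company will emerge that captures traffic through AI agents. For example, when you ask where to travel, the agent slips hotel and flight ads into the plan it makes for you; when you ask what to eat, it slips in restaurant ads.

In AI, nothing is settled yet, and any of us could be the dark horse.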
