Mobile ALOHA paper translation: Learning Bimanual Mobile Manipulation with Low-Cost Whole-Body Teleoperation

Learning Bimanual Mobile Manipulation with Low-Cost Whole-Body Teleoperation

学习使用低成本的全身远程操作进行双手移动操作

Translation of the predecessor to Mobile ALOHA: Learning Fine-Grained Bimanual Manipulation with Low-Cost Hardware

Table of Contents

- Learning Bimanual Mobile Manipulation with Low-Cost Whole-Body Teleoperation

- 学习使用低成本的全身远程操作进行双手移动操作

- Abstract

- 摘要

- 1.Introduction

- 1.引言

- 2.Related Work

- 2.相关工作。

- 3.Mobile ALOHA Hardware

- 3.Mobile ALOHA 硬件

- 4.Co-training with Static ALOHA Data

- 4. 使用静态ALOHA数据进行联合训练

- 5.Tasks

- 5.任务

- 6.Experiments

- 6.实验

- 7.Ablation Studies

- 7.消融实验

- 8.User Studies

- 8.用户研究

- 9.Conclusion, Limitations and Future Directions

- 9.结论、局限性和未来方向

- Acknowledgments

- A.Appendix

- A. 附录

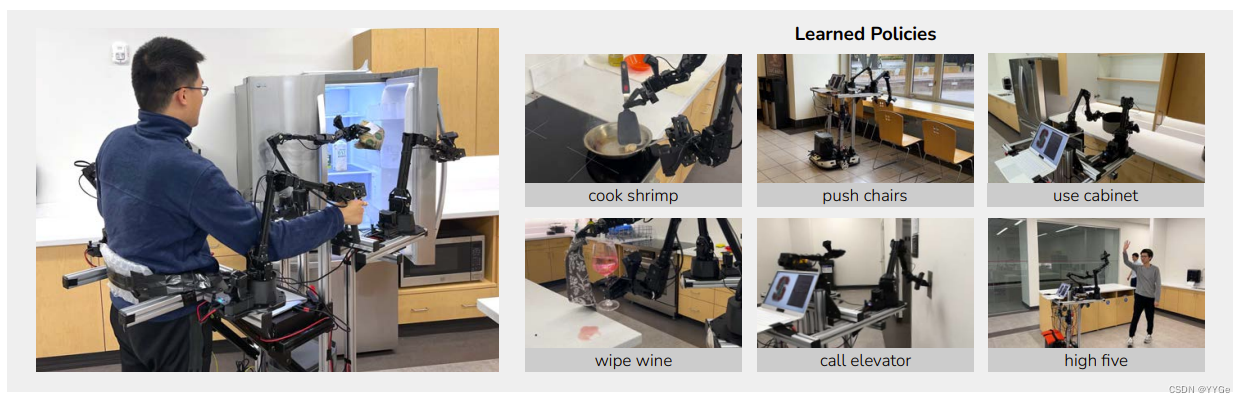

Figure 1: Mobile ALOHA. We introduce a low-cost mobile manipulation system that is bimanual and supports whole-body teleoperation. The system costs $32k including onboard power and compute. Left: A user teleoperates to obtain food from the fridge. Right: Mobile ALOHA can perform complex long-horizon tasks with imitation learning.

图1:移动ALOHA。 我们介绍了一种低成本的移动操作系统,它是双手操作的,支持全身远程操作。该系统成本为32,000美元,包括机载电源和计算。左图:用户通过远程操作从冰箱中取食物。右图:移动ALOHA可以通过模仿学习执行复杂的长程任务。

Abstract

摘要

Imitation learning from human demonstrations has shown impressive performance in robotics. However, most results focus on table-top manipulation, lacking the mobility and dexterity necessary for generally useful tasks. In this work, we develop a system for imitating mobile manipulation tasks that are bimanual and require whole-body control. We first present Mobile ALOHA, a low-cost and whole-body teleoperation system for data collection. It augments the ALOHA system [104] with a mobile base, and a whole-body teleoperation interface. Using data collected with Mobile ALOHA, we then perform supervised behavior cloning and find that co-training with existing static ALOHA datasets boosts performance on mobile manipulation tasks. With 50 demonstrations for each task, co-training can increase success rates by up to 90%, allowing Mobile ALOHA to autonomously complete complex mobile manipulation tasks such as sauteing and serving a piece of shrimp, opening a two-door wall cabinet to store heavy cooking pots, calling and entering an elevator, and lightly rinsing a used pan using a kitchen faucet.

人类演示的模仿学习在机器人领域展现出了令人印象深刻的性能,但大多数研究集中在桌面操纵上,缺乏进行一般有用任务所需的机动性和灵活性。在这项工作中,我们开发了一个系统,用于模仿双手进行整体身体控制的移动操作任务。首先,我们介绍了Mobile ALOHA,这是一个用于数据收集的低成本整体身体远程操作系统。它通过增加移动底座和整体身体远程操作界面来扩展ALOHA系统[104]。利用使用Mobile ALOHA收集的数据,我们进行了监督行为克隆,并发现与现有静态ALOHA数据集一起训练可以提高移动操作任务的性能。对于每个任务的50个演示,联合训练可以将成功率提高多达90%,使Mobile ALOHA能够自主完成复杂的移动操作任务,如炒菜并上菜、打开两扇门的壁柜以存放重的炊具、呼叫并进入电梯,以及用厨房水龙头轻轻冲洗使用过的平底锅。

1.Introduction

1.引言

Imitation learning from human-provided demonstrations is a promising tool for developing generalist robots, as it allows people to teach arbitrary skills to robots. Indeed, direct behavior cloning can enable robots to learn a variety of primitive robot skills ranging from lane-following in mobile robots [67], to simple pick-and-place manipulation skills [12, 20], to more delicate manipulation skills like spreading pizza sauce or slotting in a battery [18, 104]. However, many tasks in realistic, everyday environments require whole-body coordination of both mobility and dexterous manipulation, rather than just individual mobility or manipulation behaviors. For example, consider the relatively basic task of putting away a heavy pot into a cabinet in Figure 1. The robot needs to first navigate to the cabinet, necessitating the mobility of the robot base. To open the cabinet, the robot needs to back up while simultaneously maintaining a firm grasp of the two door handles, motivating whole-body control. Subsequently, both arms need to grasp the pot handles and together move the pot into the cabinet, emphasizing the importance of bimanual coordination. In a similar vein, cooking, cleaning, housekeeping, and even simply navigating an office using an elevator all require mobile manipulation and are often made easier with the added flexibility of two arms. In this paper, we study the feasibility of extending imitation learning to tasks that require whole-body control of bimanual mobile robots.

从人类提供的演示中进行模仿学习是发展通用型机器人的一种有前途的工具,因为它使人们能够向机器人教授任意技能。事实上,直接行为克隆可以使机器人学会各种基本机器人技能,从移动机器人的车道跟随[67],到简单的拾取和放置操纵技能[12, 20],再到更精细的操作技能,比如涂抹比萨酱或插入电池[18, 104]。然而,在现实的日常环境中,许多任务需要对机动性和灵巧操纵进行整体身体协调,而不仅仅是个体的机动性或操纵行为。例如,考虑图1中把一个重锅放入柜子的相对基本的任务。机器人首先需要导航到柜子,需要机器人底座的机动性。为了打开柜子,机器人需要在同时保持紧握两个门把手的情况下后退,需要整体身体的控制。随后,两只手都需要抓住锅的把手,并一起将锅放入柜子,强调了双手协调的重要性。类似地,烹饪、清洁、家务和甚至只是使用电梯在办公室移动都需要移动操作,并且通常通过使用两只手臂的额外灵活性来更容易完成。在本文中,我们研究将模仿学习扩展到需要整体身体控制双手移动机器人的任务的可行性。

Two main factors hinder the wide adoption of imitation learning for bimanual mobile manipulation. (1) We lack accessible, plug-and-play hardware for whole-body teleoperation. Bimanual mobile manipulators can be costly if purchased off-the-shelf. Robots like the PR2 and the TIAGo can cost more than $200k USD, making them unaffordable for typical research labs. Additional hardware and calibration are also necessary to enable teleoperation on these platforms. For example, the PR1 uses two haptic devices for bimanual teleoperation and foot pedals to control the base [93]. Prior work [5] uses a motion capture system to retarget human motion to a TIAGo robot, which only controls a single arm and needs careful calibration. Gaming controllers and keyboards are also used for teleoperating the Hello Robot Stretch [2] and the Fetch robot [1], but do not support bimanual or whole-body teleoperation. (2) Prior robot learning works have not demonstrated high-performance bimanual mobile manipulation for complex tasks. While many recent works demonstrate that highly expressive policy classes such as diffusion models and transformers can perform well on fine-grained, multi-modal manipulation tasks, it is largely unclear whether the same recipe will hold for mobile manipulation: with additional degrees of freedom added, the interaction between the arms and base actions can be complex, and a small deviation in base pose can lead to large drifts in the arm’s end-effector pose. Overall, prior works have not delivered a practical and convincing solution for bimanual mobile manipulation, both from a hardware and a learning standpoint.

两个主要因素阻碍了双手移动操作的模仿学习广泛应用。 (1) 我们缺乏易于获取、即插即用的整体身体远程操作硬件。如果直接购买,双手移动操作器可能成本高昂。像PR2和TIAGo这样的机器人可能超过20万美元,对于典型的研究实验室来说是无法承受的。还需要额外的硬件和校准来在这些平台上实现远程操作。例如,PR1使用两个触觉设备进行双手远程操作,并使用脚踏板控制底座[93]。之前的工作[5]使用运动捕捉系统将人类动作重定向到TIAGo机器人,它只能控制单臂并需要仔细校准。游戏控制器和键盘也用于远程操作Hello Robot Stretch [2]和Fetch机器人 [1],但不支持双手或整体身体远程操作。 (2) 先前的机器人学习工作未展示出针对复杂任务的高性能双手移动操作。尽管许多最近的研究表明,诸如扩散模型和transformers 等高度表达的策略类别在细粒度、多模态操纵任务上表现良好,但目前还不清楚是否相同的方法适用于移动操作:随着添加附加自由度,手臂和底座动作之间的相互作用可能会变得复杂,而底座姿态的小偏差可能导致手臂末端执行器姿态的大漂移。总体而言,先前的工作在硬件和学习的角度都未提供实际且令人信服的双手移动操作解决方案。

We seek to tackle the challenges of applying imitation learning to bimanual mobile manipulation in this paper. On the hardware front, we present Mobile ALOHA, a low-cost and whole-body teleoperation system for collecting bimanual mobile manipulation data. Mobile ALOHA extends the capabilities of the original ALOHA, the low-cost and dexterous bimanual puppeteering setup [104], by mounting it on a wheeled base. The user is then physically tethered to the system and backdrives the wheels to enable base movement. This allows for independent movement of the base while the user has both hands controlling ALOHA. We record the base velocity data and the arm puppeteering data at the same time, forming a whole-body teleoperation system.

在本文中,我们致力于解决将模仿学习应用于双手移动操作的挑战。在硬件方面,我们提出了Mobile ALOHA,这是一个用于收集双手移动操作数据的低成本整体身体远程操作系统。Mobile ALOHA通过将其安装在轮式底座上,扩展了原始ALOHA的能力,即低成本且灵巧的双手操纵设置[104]。用户随后通过物理连接到系统,并通过反向驱动车轮以启用底座移动。这允许用户在双手控制ALOHA的同时独立移动底座。我们同时记录底座速度数据和手臂操纵数据,形成了一个整体身体远程操作系统。

On the imitation learning front, we observe that simply concatenating the base and arm actions and then training via direct imitation learning can yield strong performance. Specifically, we concatenate the 14-DoF joint positions of ALOHA with the linear and angular velocity of the mobile base, forming a 16-dimensional action vector. This formulation allows Mobile ALOHA to benefit directly from previous deep imitation learning algorithms, requiring almost no change in implementation. To further improve the imitation learning performance, we are inspired by the recent success of pre-training and co-training on diverse robot datasets, while noticing that there are few to no accessible bimanual mobile manipulation datasets. We thus turn to leveraging data from static bimanual datasets, which are more abundant and easier to collect, specifically the static ALOHA datasets from [81, 104] through the RT-X release [20]. It contains 825 episodes with tasks disjoint from the Mobile ALOHA tasks, and has different mounting positions of the two arms. Despite the differences in tasks and morphology, we observe positive transfer in nearly all mobile manipulation tasks, attaining equivalent or better performance and data efficiency than policies trained using only Mobile ALOHA data. This observation is also consistent across different classes of state-of-the-art imitation learning methods, including ACT [104] and Diffusion Policy [18].

在模仿学习方面,我们观察到简单地连接底座和手臂动作,然后通过直接模仿学习进行训练可以产生强大的性能。具体而言,我们将ALOHA的14个自由度关节位置与移动底座的线性和角速度连接起来,形成一个16维动作向量。这种表达方式使Mobile ALOHA能够直接受益于先前的深度模仿学习算法,几乎不需要改变实现方式。为了进一步提高模仿学习性能,我们受到了在多样化机器人数据集上进行预训练和联合训练的最近成功经验的启发,同时注意到几乎没有可访问的双手移动操作数据集。因此,我们转而利用来自静态双手数据集的数据,这些数据更为丰富且更容易收集,具体来说是来自[81, 104]的静态ALOHA数据集,通过RT-X发布[20]。它包含825个任务与Mobile ALOHA任务不同的剧集,并具有两只手臂的不同安装位置。尽管任务和形态存在差异,我们观察到在几乎所有移动操作任务中都有积极的迁移,达到了与仅使用Mobile ALOHA数据训练的策略相当或更好的性能和数据效率。这一观察结果在不同类别的最新模仿学习方法中也是一致的,包括ACT [104]和Diffusion Policy [18]。
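The action formulation described above can be sketched in a few lines. This is a minimal illustration and not the authors' code: the function names `make_action` and `split_action` are hypothetical, and the ordering (arm joints first, then linear and angular base velocity) is an assumption based on the order in which the text lists them.

```python
from typing import List, Sequence, Tuple

ARM_DOF = 14   # 14-DoF joint positions of ALOHA (from the text)
BASE_DOF = 2   # linear and angular velocity of the mobile base (from the text)


def make_action(arm_joint_positions: Sequence[float],
                base_linear_velocity: float,
                base_angular_velocity: float) -> List[float]:
    """Concatenate arm and base actions into one 16-dimensional vector.

    The ordering (arms first, then base) is an assumption for illustration.
    """
    if len(arm_joint_positions) != ARM_DOF:
        raise ValueError(f"expected {ARM_DOF} joint positions")
    return list(arm_joint_positions) + [base_linear_velocity,
                                        base_angular_velocity]


def split_action(action: Sequence[float]) -> Tuple[List[float], float, float]:
    """Inverse of make_action: recover arm targets and base velocities."""
    if len(action) != ARM_DOF + BASE_DOF:
        raise ValueError(f"expected {ARM_DOF + BASE_DOF} action dimensions")
    return list(action[:ARM_DOF]), action[ARM_DOF], action[ARM_DOF + 1]


if __name__ == "__main__":
    action = make_action([0.0] * ARM_DOF, 0.3, -0.1)
    print(len(action))  # 16
```

Because the policy only ever sees a flat vector, an imitation learning method written for the 14-dimensional static setup needs little more than a wider output layer, which is the "almost no change in implementation" the paragraph refers to.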

The main contribution of this paper is a system for learning complex mobile bimanual manipulation tasks. Core to this system is both (1) Mobile ALOHA, a low-cost whole-body teleoperation system, and (2) the finding that a simple co-training recipe enables data-efficient learning of complex mobile manipulation tasks. Our teleoperation system is capable of multiple hours of consecutive usage, such as cooking a 3-course meal, cleaning a public bathroom, and doing laundry. Our imitation learning result also holds across a wide range of complex tasks such as opening a two-door wall cabinet to store heavy cooking pots, calling an elevator, pushing in chairs, and cleaning up spilled wine. With co-training, we are able to achieve over 80% success on these tasks with only 50 human demonstrations per task, with an average of 34% absolute improvement compared to no co-training.

本文的主要贡献是一个用于学习复杂的移动双手操纵任务的系统。该系统的核心包括 (1) Mobile ALOHA,一个低成本的整体身体远程操作系统,以及 (2) 发现一个简单的联合训练方法能够实现对复杂移动操纵任务的高效学习。我们的远程操作系统能够连续使用多个小时,比如烹饪一顿三道菜的餐食、清理公共浴室和洗衣。我们的模仿学习结果在各种复杂任务上也保持一致,比如打开一个两扇门的壁柜以存放重的炊具、呼叫电梯、推椅子、清理溢出的葡萄酒等。通过联合训练,我们能够在每个任务只有50个人类演示的情况下,在这些任务上取得超过80%的成功率,相较于没有联合训练,平均提高了34%。

2.Related Work

2.相关工作。

Mobile Manipulation. Many current mobile manipulation systems utilize model-based control, which involves integrating human expertise and insights into the system’s design and architecture [9, 17, 33, 52, 93]. A notable example of model-based control in mobile manipulation is the DARPA Robotics Challenge [56]. Nonetheless, these systems can be challenging to develop and maintain, often requiring substantial team efforts, and even minor errors in perception modeling can result in significant control failures [6, 51]. Recently, learning-based approaches have been applied to mobile manipulation, alleviating much of the heavy engineering. In order to tackle the exploration problem in high-dimensional state and action spaces of mobile manipulation tasks, prior works use predefined skill primitives [86, 91, 92], reinforcement learning with decomposed action spaces [38, 48, 58, 94, 101], or whole-body control objectives [36, 42, 99]. Unlike these prior works that use action primitives, state estimators, depth images or object bounding boxes, imitation learning allows mobile manipulators to learn end-to-end by directly mapping raw RGB observations to whole-body actions, showing promising results through large-scale training using real-world data [4, 12, 78] in indoor environments [39, 78]. Prior works use expert demonstrations collected by using a VR interface [76], kinesthetic teaching [100], trained RL policies [43], a smartphone interface [90], motion capture systems [5], or from humans [8]. Prior works also develop humanoid teleoperation by using human motion capture suits [19, 22, 23, 26], exoskeletons [32, 45, 72, 75], VR headsets for visual feedback [15, 53, 65, 87], and haptic feedback devices [14, 66]. Purushottam et al. develop an exoskeleton suit attached to a force plate for whole-body teleoperation of a wheeled humanoid. However, there is no low-cost solution to collecting whole-body expert demonstrations for bimanual mobile manipulation. We present Mobile ALOHA for this problem. It is suitable for hour-long teleoperation, and does not require an FPV goggle for streaming back videos from the robot’s egocentric camera or haptic devices.

移动操纵。 许多当前的移动操纵系统采用基于模型的控制,涉及将人类的专业知识和见解整合到系统的设计和架构中[9, 17, 33, 52, 93]。模型驱动控制在移动操纵中的一个显著例子是DARPA机器人挑战[56]。然而,这些系统开发和维护通常较为困难,往往需要大量团队合作,而即使是感知模型中的细小错误也可能导致严重的控制故障[6, 51]。最近,学习为基础的方法已被应用于移动操纵,减轻了大量的工程任务。为了解决在移动操纵任务的高维状态和动作空间中的探索问题,先前的研究使用预定义的技能原语[86, 91, 92]、分解动作空间的强化学习[38, 48, 58, 94, 101],或整体身体控制目标[36, 42, 99]。与使用动作原语、状态估计器、深度图像或物体边界框的先前工作不同,模仿学习允许移动操作器通过将原始RGB观察直接映射到整体身体动作来进行端到端的学习,通过在室内环境中使用真实世界数据进行大规模训练[4, 12, 78],显示出令人期待的结果。 先前的工作使用了通过VR界面[76]、动作教学[100]、训练过的强化学习策略[43]、智能手机界面[90]、运动捕捉系统[5]或人类[8]收集的专家演示。 先前的工作还通过使用人类运动捕捉服[19, 22, 23, 26]、外骨骼[32, 45, 72, 75]、用于视觉反馈的VR头显[15, 53, 65, 87]和触觉反馈设备[14, 66]来开发人形远程操作。 Purushottam等人开发了一个与力板连接的外骨骼套装,用于轮式人形的整体身体远程操作,然而,目前还没有用于收集双手移动操作的整体身体专家演示的低成本解决方案。我们为这个问题提出了Mobile ALOHA。它适用于长时间的远程操作,并且不需要FPV护目镜来从机器人的主观相机实时传送视频或触觉设备。

Imitation Learning for Robotics. Imitation learning enables robots to learn from expert demonstrations [67]. Behavioral cloning (BC) is a simple version, mapping observations to actions. Enhancements to BC include incorporating history with various architectures [12, 47, 59, 77], new training objectives [10, 18, 35, 63, 104], regularization [71], motor primitives [7, 44, 55, 62, 64, 97], and data preprocessing [81]. Prior works also focus on multi-task or few-shot imitation learning [25, 27, 30, 34, 46, 50, 88, 102], language-conditioned imitation learning [12, 47, 82, 83], imitation from play data [21, 57, 74, 89], using human videos [16, 24, 29, 60, 69, 80, 84, 96], and using task-specific structures [49, 83, 103]. Scaling up these algorithms has led to systems adept at generalizing to new objects, instructions, or scenes [12, 13, 28, 47, 54]. Recently, co-training on diverse real-world datasets collected from different but similar types of robots has shown promising results on single-arm manipulation [11, 20, 31, 61, 98], and on navigation [79]. In this work, we use a co-training pipeline for bimanual mobile manipulation by leveraging the existing static bimanual manipulation datasets, and show that our co-training pipeline improves the performance and data efficiency of mobile manipulation policies across all tasks and several imitation learning methods. To our knowledge, we are the first to find that co-training with static manipulation datasets improves the performance and data efficiency of mobile manipulation policies.

机器人的模仿学习。 模仿学习使机器人能够从专家演示中学习[67]。行为克隆(BC)是一个简单的版本,将观察映射到动作。对BC的增强包括使用各种体系结构整合历史信息[12, 47, 59, 77],新的训练目标[10, 18, 35, 63, 104],正则化[71],运动原语[7, 44, 55, 62, 64, 97]和数据预处理[81]。先前的工作还侧重于多任务或少样本模仿学习[25, 27, 30, 34, 46, 50, 88, 102],以及语言条件的模仿学习[12, 47, 82, 83],来自玩耍数据的模仿学习[21, 57, 74, 89],使用人类视频[16, 24, 29, 60, 69, 80, 84, 96],以及使用任务特定结构[49, 83, 103]。这些算法的扩展使得系统能够很好地推广到新的物体、指令或场景[12, 13, 28, 47, 54]。最近,对来自不同但相似类型的机器人收集的多样化真实世界数据集进行联合训练在单臂操纵[11, 20, 31, 61, 98]和导航[79]方面显示出有希望的结果。在这项工作中,我们通过利用现有的静态双手操纵数据集,使用联合训练流水线进行双手移动操作,并展示了我们的联合训练流水线在所有任务和多种模仿学习方法中提高了移动操作策略的性能和数据效率。据我们所知,我们是第一个发现使用静态操纵数据集进行联合训练可以提高移动操作策略的性能和数据效率的研究。

3.Mobile ALOHA Hardware

3.Mobile ALOHA 硬件

We develop Mobile ALOHA, a low-cost mobile manipulator that can perform a broad range of household tasks. Mobile ALOHA inherits the benefits of the original ALOHA system [104], i.e. the low-cost, dexterous, and repairable bimanual teleoperation setup, while extending its capabilities beyond table-top manipulation. Specifically, we incorporate four key design considerations:

1. Mobile: The system can move at a speed comparable to human walking, around 1.42m/s.

2. Stable: It is stable when manipulating heavy household objects, such as pots and cabinets.

3. Whole-body teleoperation: All degrees of freedom can be teleoperated simultaneously, including both arms and the mobile base.

4. Untethered: Onboard power and compute.

我们开发了Mobile ALOHA,这是一款低成本的移动操纵器,可以执行各种家庭任务。Mobile ALOHA继承了原始ALOHA系统[104]的优势,即低成本、灵巧和可修复的双手远程操作设置,同时将其能力扩展到桌面操纵以外。具体而言,我们融入了四个关键的设计考虑:

移动性:系统可以以接近人类行走速度的速度移动,约为1.42m/s。

稳定性:在操作重的家庭物品,如锅具和橱柜时,系统保持稳定。

整体身体远程操作:所有自由度都可以同时进行远程操作,包括双臂和移动底座。

无线连接:内置电源和计算。

We choose AgileX Tracer AGV (“Tracer”) as the mobile base following considerations 1 and 2. Tracer is a low-profile, differential drive mobile base designed for warehouse logistics. It can move up to 1.6m/s similar to average human walking speed. With a maximum payload of 100kg and 17mm height, we can add a balancing weight low to the ground to achieve the desired tip-over stability. We found Tracer to possess sufficient traversability in accessible buildings: it can traverse obstacles as tall as 10mm and slopes as steep as 8 degrees with load, with a minimum ground clearance of 30mm. In practice, we found it capable of more challenging terrains such as traversing the gap between the floor and the elevator. Tracer costs $7,000 in the United States, more than 5x cheaper than AGVs from e.g. Clearpath with similar speed and payload.

考虑到第1和第2点,我们选择了AgileX Tracer AGV(“Tracer”)作为移动底座。Tracer是一款专为仓库物流设计的低姿态差动驱动移动底座。它的移动速度可达1.6m/s,类似于普通人行走的速度。最大负载为100kg,高度为17mm,我们可以在地面附近增加平衡重量以实现所需的防翻稳定性。我们发现Tracer在可达性建筑物中具有足够的可通行性:它可以穿越高达10mm的障碍物和带载的斜坡,最小离地间隙为30mm。在实践中,我们发现它能够处理更具挑战性的地形,比如穿越楼层和电梯之间的间隙。Tracer在美国售价为7,000美元,比Clearpath等公司的类似速度和负载的AGV便宜5倍以上。
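Since the Tracer is a differential-drive base, a commanded linear and angular velocity maps to left and right wheel speeds through standard differential-drive kinematics. The sketch below illustrates that mapping only. It does not use AgileX's software interface, and the `track_width_m` value in the example is a hypothetical placeholder rather than a Tracer specification; only the 1.6m/s speed limit comes from the text.

```python
from typing import Tuple

MAX_LINEAR_SPEED = 1.6  # m/s, maximum Tracer speed quoted in the text


def clamp_linear_velocity(linear: float,
                          limit: float = MAX_LINEAR_SPEED) -> float:
    """Clamp a commanded linear velocity to the base's speed limit."""
    return max(-limit, min(limit, linear))


def wheel_speeds(linear: float, angular: float,
                 track_width_m: float) -> Tuple[float, float]:
    """Standard differential-drive kinematics.

    linear        : forward velocity of the base centre, in m/s
    angular       : yaw rate in rad/s, positive = counterclockwise
    track_width_m : distance between the two drive wheels
                    (hypothetical value; not taken from the source)
    Returns (left, right) wheel linear speeds in m/s.
    """
    half = track_width_m / 2.0
    return linear - angular * half, linear + angular * half


if __name__ == "__main__":
    # Driving straight: both wheels match the base speed.
    print(wheel_speeds(1.0, 0.0, track_width_m=0.5))
    # Turning in place: wheels spin in opposite directions.
    print(wheel_speeds(0.0, 2.0, track_width_m=0.5))
```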

We then seek to design a whole-body teleoperation system on top of the Tracer mobile base and ALOHA arms, i.e. a teleoperation system that allows simultaneous control of both the base and the two arms (consideration 3). This design choice is particularly important in household settings as it expands the available workspace of the robot. Consider the task of opening a two-door cabinet. Even for humans, we naturally step back while opening the doors to avoid collision and awkward joint configurations. Our teleoperation system shall not constrain such coordinated human motion, nor introduce unnecessary artifacts in the collected dataset. However, designing a whole-body teleoperation system can be challenging, as both hands are already occupied by the ALOHA leader arms. We found the design of tethering the operator’s waist to the mobile base to be the simplest and most direct solution, as shown in Figure 2 (left). The human can backdrive the wheels, which have very low friction when torqued off. We measure the rolling resistance to be around 13N on vinyl floor, acceptable to most humans. Connecting the operator to the mobile manipulator directly also enables coarse haptic feedback when the robot collides with objects. To improve the ergonomics, the height of the tethering point and the positions of the leader arms can all be independently adjusted up to 30cm. During autonomous execution, the tethering structure can also be detached by loosening 4 screws, together with the two leader arms. This reduces the footprint and weight of the mobile manipulator as shown in Figure 2 (middle). To improve the ergonomics and expand workspace, we also mount the four ALOHA arms all facing forward, different from the original ALOHA which has arms facing inward.

我们随后寻求在Tracer移动底座和ALOHA双臂的基础上设计一个整体身体远程操作系统,即一个允许同时控制底座和两只手臂的远程操作系统(考虑到第3点)。这种设计选择在家庭环境中尤为重要,因为它扩展了机器人的可用工作空间。考虑打开一个两扇门的橱柜的任务。即使对于人类来说,在打开门的同时自然而然地后退以避免碰撞和尴尬的关节配置。我们的远程操作系统不应限制这种协调的人类动作,也不应在收集的数据集中引入不必要的人工成分。然而,设计一个整体身体远程操作系统可能具有挑战性,因为ALOHA领导手臂已经占用了双手。我们发现将操作员的腰部连接到移动底座是最简单和直接的解决方案,如图2(左)所示。人类可以驱动具有非常低摩擦力的车轮。我们测量在乙烯地板上的滚动阻力约为13N,对大多数人来说是可以接受的。直接将操作员连接到移动操纵器还可以在机器人与物体碰撞时提供粗略的触觉反馈。为了改善人体工程学,连接点的高度和领导手臂的位置都可以独立调整,最多可调整30cm。在自主执行期间,通过松开4颗螺钉,连接结构还可以与两个领导手臂一起拆卸。这样可以减小移动操纵器的占地面积和重量,如图2(中)所示。为了改善人体工程学并扩展工作空间,我们还安装了四只ALOHA手臂,它们都朝向前方,不同于原始的ALOHA手臂朝向内部。

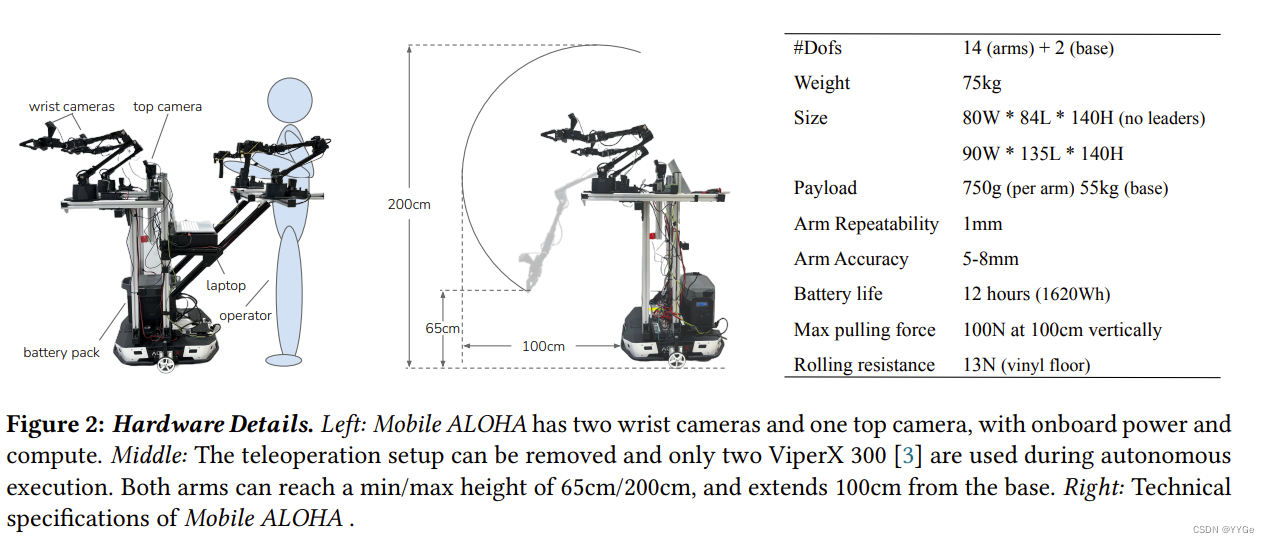

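Figure 2: Hardware details. Left: Mobile ALOHA has two wrist cameras and one top camera, with onboard power and compute. Middle: The teleoperation setup can be removed, and only two ViperX 300 [3] arms are used during autonomous execution. Both arms can reach a minimum/maximum height of 65cm/200cm and extend 100cm from the base. Right: Technical specifications of Mobile ALOHA.

图2:硬件细节。左:Mobile ALOHA具有两个手腕摄像头和一个顶部摄像头,带有内置电源和计算。中:远程操作设置可以移除,只有在自主执行期间使用两个ViperX 300 [3]。两只手臂的高度范围是65cm/200cm,从底座延伸100cm。右:Mobile ALOHA的技术规格。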

To make our mobile manipulator untethered (consideration 4), we place a 1.26kWh battery that weighs 14kg at the base. It also serves as a balancing weight to avoid tipping over. All compute during data collection and inference is conducted on a consumer-grade laptop with Nvidia 3070 Ti GPU (8GB VRAM) and Intel i7-12800H. It accepts streaming from three Logitech C922x RGB webcams, at 480x640 resolution and 50Hz. Two cameras are mounted to the wrists of the follower robots, and the third faces forward. The laptop also accepts proprioception streaming from all 4 arms through USB serial ports, and from the Tracer mobile base through CAN bus. We record the linear and angular velocities of the mobile base to be used as actions of the learned policy. We also record the joint positions of all 4 robot arms to be used as policy observations and actions. We refer readers to the original ALOHA paper [104] for more details about the arms.

为了使我们的移动操纵器无线连接(考虑第4点),我们在底座上放置了一个重14kg、容量为1.26kWh的电池。它还作为平衡重量,以避免翻倒。在数据收集和推理期间,所有计算都在一台搭载Nvidia 3070 Ti GPU(8GB VRAM)和Intel i7-12800H的消费级笔记本电脑上进行。它接收来自三个Logitech C922x RGB网络摄像头的视频流,分辨率为480x640,帧率为50Hz。两个摄像头安装在跟随机器人的手腕上,第三个摄像头面向前方。笔记本电脑还通过USB串口接受来自所有4只手臂的自感知信息,以及通过CAN总线接受来自Tracer移动底座的自感知信息。我们记录了移动底座的线性和角速度,用作学习策略的动作。我们还记录了所有4只机器人手臂的关节位置,用作策略的观察和动作。关于手臂的更多细节,请参阅原ALOHA论文[104]。
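To get a feel for the data volume implied by three 480x640 RGB streams at 50Hz, the short calculation below estimates the raw pixel throughput. It assumes uncompressed 8-bit RGB frames (3 bytes per pixel), which is an assumption for illustration: the source does not state the pixel format, and webcams commonly deliver compressed streams, so the actual bandwidth over USB may be much lower. The function name is hypothetical.

```python
def raw_rgb_bytes_per_second(num_cameras: int, height: int, width: int,
                             fps: int, channels: int = 3,
                             bytes_per_channel: int = 1) -> int:
    """Uncompressed image throughput in bytes per second.

    Assumes 8-bit RGB by default; the source does not specify the format.
    """
    bytes_per_frame = height * width * channels * bytes_per_channel
    return num_cameras * bytes_per_frame * fps


if __name__ == "__main__":
    # Three cameras, 480x640 resolution, 50Hz (figures from the text).
    rate = raw_rgb_bytes_per_second(num_cameras=3, height=480,
                                    width=640, fps=50)
    print(f"{rate / 1e6:.2f} MB/s")  # 138.24 MB/s
```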

With the design considerations above, we build Mobile ALOHA with a $32k budget, comparable to a single industrial cobot such as the Franka Emika Panda. As illustrated in Figure 2 (middle), the mobile manipulator can reach between 65cm and 200cm vertically relative to the ground, can extend 100cm beyond its base, can lift objects that weigh 1.5kg, and can exert a pulling force of 100N at a height of 1.5m. Some example tasks that Mobile ALOHA is capable of include:

• Housekeeping: Water plants, use a vacuum, load and unload a dishwasher, obtain drinks from the fridge, open doors, use washing machine, fling and spread a quilt, stuff a pillow, zip and hang a jacket, fold trousers, turn on/off a lamp, and self-charge.

• Cooking: Crack eggs, mince garlic, unpackage vegetables, pour liquid, sear and flip chicken thigh, blanch vegetables, stir fry, and serve food in a dish.

• Human-robot interactions: Greet and shake “hands” with a human, open and hand a beer to a human, help a human shave and make the bed.

考虑到上述的设计要点,我们以3.2万美元的预算建造了Mobile ALOHA,与Franka Emika Panda等单个工业协作机器人的成本相当。如图2(中)所示,移动操纵器在垂直于地面的范围内可达65cm至200cm,可延伸100cm超出其底座,可举起重达1.5kg的物体,并在1.5m的高度施加100N的拉力。Mobile ALOHA能够完成的一些示例任务包括:

• 家务:浇水给植物,使用吸尘器,装载和卸载洗碗机,从冰箱里拿饮料,打开门,使用洗衣机,甩开和铺展被子,填充枕头,拉链和挂上夹克,折叠裤子,打开/关闭灯,并自动充电。

• 烹饪:打蛋,剁蒜,解包蔬菜,倒液体,煎和翻转鸡腿,焯水煮蔬菜,炒菜,以及将食物端到盘子里。

• 人机交互:与人打招呼并握手,打开并递给人一瓶啤酒,帮助人刮胡子和整理床铺。

We include more technical specifications of Mobile ALOHA in Figure 2 (right). Beyond the off-the-shelf robots, we open-source all of the software and hardware parts with a detailed tutorial covering 3D printing, assembly, and software installation. The tutorial is on the project website.

我们在图2(右)中包含了Mobile ALOHA的更多技术规格。除了现成的机器人外,我们将所有软件和硬件部分开源,并提供详细的教程,涵盖3D打印、装配和软件安装。该教程可以在项目网站上找到。

4.Co-training with Static ALOHA Data

4. 使用静态ALOHA数据进行联合训练

The typical approach for using imitation learning to solve real-world robotics tasks relies on using the datasets that are collected on a specific robot hardware platform for a targeted task. This straightforward approach, however, suffers from lengthy data collection processes where human operators collect demonstration data from scratch for every task on a specific robot hardware platform. The policies trained on these specialized datasets are often not robust to perceptual perturbations (e.g. distractors and lighting changes) due to the limited visual diversity in these datasets [95]. Recently, co-training on diverse real-world datasets collected from different but similar types of robots has shown promising results on single-arm manipulation [11, 20, 31, 61], and on navigation [79].

利用模仿学习解决实际机器人任务的典型方法是,使用在特定机器人硬件平台上为特定任务收集的数据集。然而,这种直接的方法在数据收集过程中存在问题,因为人类操作员需要在特定机器人硬件平台上为每个任务从零开始收集演示数据,这通常是一个耗时的过程。在这些专门的数据集上训练的策略通常对感知干扰(例如干扰物和光照变化)不具有鲁棒性,这是由于这些数据集中视觉多样性有限[95]。最近,在从不同但相似类型的机器人收集的多样化真实世界数据集上进行联合训练在单臂操纵[11, 20, 31, 61]和导航[79]方面显示出有希望的结果。

In this work, we use a co-training pipeline that leverages the existing static ALOHA datasets to improve the performance of imitation learning for mobile manipulation, specifically for the bimanual arm actions. The static ALOHA datasets [81, 104] have 825 demonstrations in total for tasks including Ziploc sealing, picking up a fork, candy wrapping, tearing a paper towel, opening a plastic portion cup with a lid, playing with a ping pong ball, tape dispensing, using a coffee machine, pencil hand-overs, fastening a velcro cable, slotting a battery, and handing over a screwdriver. Notice that the static ALOHA data is all collected on a black table-top with the two arms fixed to face towards each other. This setup is different from Mobile ALOHA, where the background changes with the moving base and the two arms are placed in parallel facing the front. We do not use any special data processing techniques on either the RGB observations or the bimanual actions of the static ALOHA data for our co-training.

在这项工作中,我们使用一个联合训练的流程,利用现有的静态ALOHA数据集来提高模仿学习在移动操纵中的性能,特别是针对双手臂动作。静态ALOHA数据集[81, 104]总共包含825个演示,涵盖的任务包括密封Ziploc袋、拿叉子、包装糖果、撕纸巾、打开带盖的塑料杯、玩乒乓球、提取胶带、使用咖啡机、递铅笔、扣上维尔克罗电缆、插入电池和递螺丝刀等。请注意,静态ALOHA数据集都是在一个黑色桌面上收集的,两只手臂固定朝向彼此。这个设置与Mobile ALOHA不同,后者的背景随着移动底座的移动而变化,并且两只手臂被放置在面向前方的平行位置。在我们的联合训练中,我们对静态ALOHA数据的RGB观察或双手臂动作都没有使用任何特殊的数据处理技术。

Denote the aggregated static ALOHA data as $D_{\text{static}}$, and the Mobile ALOHA dataset for a task $m$ as $D^m_{\text{mobile}}$. The bimanual actions are formulated as target joint positions $a_{\text{arms}} \in \mathbb{R}^{14}$, which include two continuous gripper actions, and the base actions are formulated as target base linear and angular velocities $a_{\text{base}} \in \mathbb{R}^{2}$. The training objective for a mobile manipulation policy $\pi^m$ for a task $m$ is

$$
\mathbb{E}_{(o^i,\, a^i_{\text{arms}},\, a^i_{\text{base}}) \sim D^m_{\text{mobile}}} \left[ L\left(a^i_{\text{arms}},\, a^i_{\text{base}},\, \pi^m(o^i)\right) \right] + \mathbb{E}_{(o^i,\, a^i_{\text{arms}}) \sim D_{\text{static}}} \left[ L\left(a^i_{\text{arms}},\, [0, 0],\, \pi^m(o^i)\right) \right],
$$

where $o^i$ is the observation consisting of two wrist camera RGB observations, one egocentric top camera RGB observation mounted between the arms, and joint positions of the arms, and $L$ is the imitation loss function. We sample with equal probability from the static ALOHA data $D_{\text{static}}$ and the Mobile ALOHA data $D^m_{\text{mobile}}$. We set the batch size to be 16. Since static ALOHA datapoints have no mobile base actions, we zero-pad the action labels so actions from both datasets have the same dimension. We also ignore the front camera in the static ALOHA data so that both datasets have 3 cameras. We normalize every action based on the statistics of the Mobile ALOHA dataset $D^m_{\text{mobile}}$ alone. In our experiments, we combine this co-training recipe with multiple base imitation learning approaches, including ACT [104], Diffusion Policy [18], and VINN [63].

将聚合的静态ALOHA数据表示为$D_{\text{static}}$,将任务$m$的Mobile ALOHA数据集表示为$D^m_{\text{mobile}}$。双手臂动作被构建为目标关节位置$a_{\text{arms}} \in \mathbb{R}^{14}$,其中包括两个连续的夹爪动作,底座动作被构建为目标底座线性和角速度$a_{\text{base}} \in \mathbb{R}^{2}$。任务$m$的移动操纵策略$\pi^m$的训练目标是

![E(o i ,ai arms,ai base)∼Dm mobile L(a i arms, ai base, πm(o i )) + E(o i ,ai arms)∼Dstatic L(a i arms, [0, 0], πm(o i )),](https://img-blog.csdnimg.cn/direct/9ef4e74941d64d3b934c489ff412e61e.png)

其中$o^i$是由两个手腕摄像头RGB观察、一个安装在手臂之间的主观顶部摄像头RGB观察和手臂的关节位置组成的观察,$L$是模仿损失函数。我们以相等的概率从静态ALOHA数据$D_{\text{static}}$和Mobile ALOHA数据$D^m_{\text{mobile}}$中进行采样。我们将批次大小设置为16。由于静态ALOHA数据点没有底座动作,我们对动作标签进行零填充,以使两个数据集的动作具有相同的维度。我们还忽略静态ALOHA数据中的前置摄像头,以便两个数据集都有3个摄像头。我们仅基于Mobile ALOHA数据集$D^m_{\text{mobile}}$的统计信息对每个动作进行归一化。在实验中,我们将这种联合训练的方法与多种基础模仿学习方法结合使用,包括ACT [104]、Diffusion Policy [18]和VINN [63]。
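The data-handling side of this recipe (zero-padding static actions, normalizing with Mobile ALOHA statistics only, and sampling both datasets with equal probability at batch size 16) can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation. All function names are hypothetical, observations are omitted for brevity, the equal-probability sampling is modelled as an independent coin flip per batch element, and the `eps` floor on the standard deviation is an added safeguard that the source does not mention.

```python
import random
import statistics
from typing import List, Optional, Sequence, Tuple

Action = List[float]


def zero_pad_static_action(arm_action: Sequence[float]) -> Action:
    """Append [0, 0] base velocities to a 14-dim static ALOHA arm action."""
    return list(arm_action) + [0.0, 0.0]


def mobile_action_stats(mobile_actions: Sequence[Sequence[float]],
                        eps: float = 1e-6) -> Tuple[Action, Action]:
    """Per-dimension mean and std from the Mobile ALOHA actions alone.

    eps is a hypothetical floor that avoids division by zero.
    """
    columns = list(zip(*mobile_actions))
    mean = [statistics.fmean(col) for col in columns]
    std = [max(statistics.pstdev(col), eps) for col in columns]
    return mean, std


def normalize(action: Sequence[float], mean: Sequence[float],
              std: Sequence[float]) -> Action:
    """Standardize one action with the given per-dimension statistics."""
    return [(a - m) / s for a, m, s in zip(action, mean, std)]


def sample_batch(mobile_actions: Sequence[Sequence[float]],
                 static_arm_actions: Sequence[Sequence[float]],
                 mean: Sequence[float], std: Sequence[float],
                 batch_size: int = 16,
                 rng: Optional[random.Random] = None) -> List[Action]:
    """Draw each batch element from either dataset with probability 0.5."""
    rng = rng or random.Random()
    batch = []
    for _ in range(batch_size):
        if rng.random() < 0.5:
            action = list(rng.choice(mobile_actions))  # 16-dim label
        else:
            action = zero_pad_static_action(rng.choice(static_arm_actions))
        batch.append(normalize(action, mean, std))
    return batch
```

Normalizing the static labels with the mobile statistics keeps the target scale identical for both terms of the objective, so the padded `[0, 0]` base entries are expressed in the same units the policy is trained to output for the mobile data.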

5.Tasks

5.任务

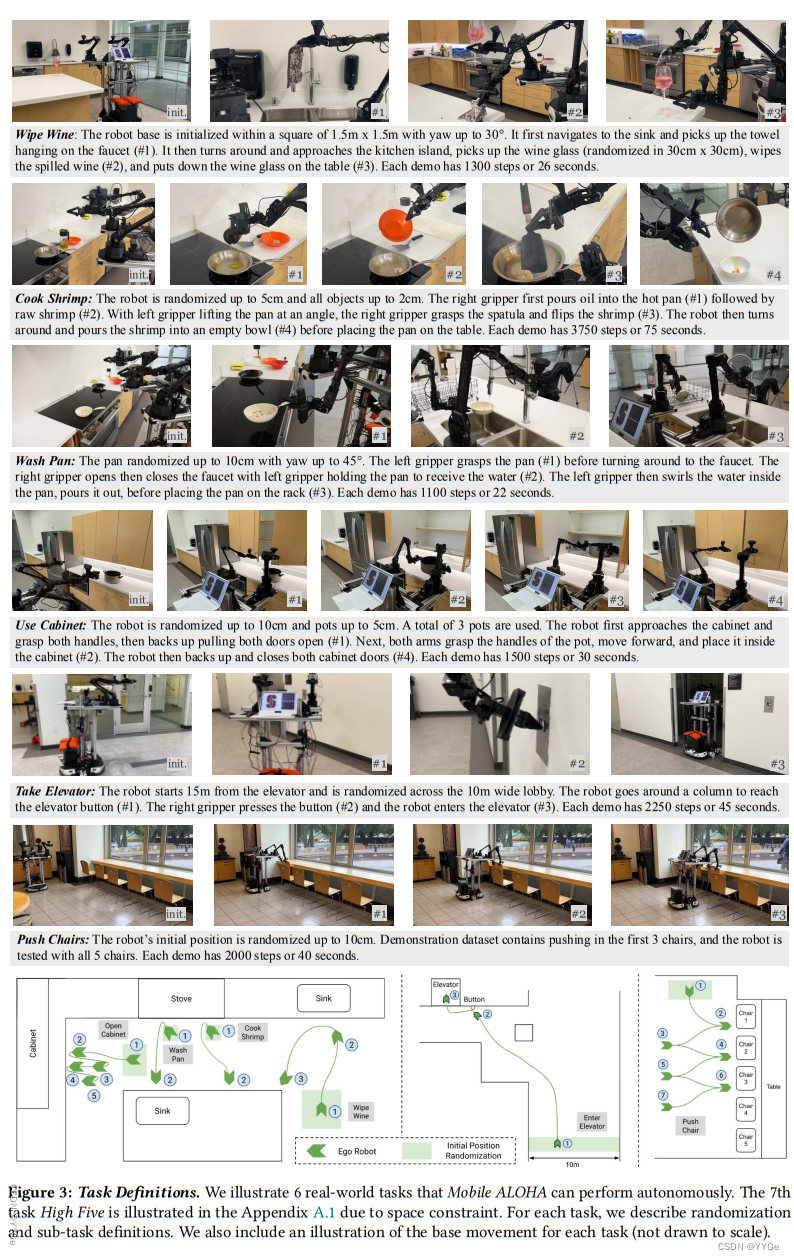

We select 7 tasks that cover a wide range of capabilities, objects, and interactions that may appear in realistic applications. We illustrate them in Figure 3. For Wipe Wine, the robot needs to clean up spilled wine on the table. This task requires both mobility and bimanual dexterity. Specifically, the robot needs to first navigate to the faucet and pick up the towel, then navigate back to the table. With one arm lifting the wine glass, the other arm needs to wipe the table as well as the bottom of the glass with the towel. This task is not possible with static ALOHA, and would take more time for a single-armed mobile robot to accomplish.

我们选择了7个任务,涵盖了在实际应用中可能出现的各种能力、物体和交互。我们在图3中进行了说明。对于"Wipe Wine"任务,机器人需要清理桌子上溢出的葡萄酒。这个任务需要机器人具备机动性和双手灵活性。具体而言,机器人需要首先导航到水龙头并拿起毛巾,然后返回到桌子。一只手提起酒杯,另一只手需要用毛巾擦拭桌子以及杯子底部。这个任务对于静态ALOHA来说是不可能完成的,对于单臂移动机器人来说则需要更多时间来完成。

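Figure 3: Task definitions. We illustrate 6 real-world tasks that Mobile ALOHA can perform autonomously. Due to space constraints, the 7th task, High Five, is illustrated in Appendix A.1. For each task, we describe the randomization and sub-task definitions. We also include an illustration of the base movement for each task (not to scale).

图3:任务定义。我们说明了Mobile ALOHA可以自主执行的6个真实世界任务。由于空间限制,第7个任务High Five在附录A.1中进行了说明。对于每个任务,我们描述了随机化和子任务定义。我们还包含了每个任务的底座移动的示意图(未按比例绘制)。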

For Cook Shrimp, the robot sautes one piece of raw shrimp on both sides before serving it in a bowl. Mobility and bimanual dexterity are also necessary for this task: the robot needs to move from the stove to the kitchen island, and flip the shrimp with a spatula while the other arm tilts the pan. This task requires more precision than wiping wine due to the complex dynamics of flipping a half-cooked shrimp. Since the shrimp may slightly stick to the pan, it is difficult for the robot to reach under the shrimp with the spatula and precisely flip it over.

对于Cook Shrimp任务,机器人需要在将其放入碗中之前煎炸一片生虾的两面。这个任务也需要机动性和双手灵活性:机器人需要从炉子移动到厨房岛,同时用翻铲翻转虾,而另一只手则倾斜平底锅。由于翻转半煮熟的虾的复杂动力学,这个任务比擦拭葡萄酒需要更高的精度。由于虾可能稍微粘在锅上,机器人很难用翻铲达到虾的下面并精确地将其翻转过来。

For Rinse Pan, the robot picks up a dirty pan and rinses it under the faucet before placing it on the drying rack. In addition to the challenges in the previous two tasks, turning on the faucet poses a hard perception challenge. The knob is made from shiny stainless steel and is small in size: roughly 4cm in length and 0.7cm in diameter. Due to the stochasticity introduced by the base motion, the arm needs to actively compensate for the errors by “visually servoing” to the shiny knob. A centimeter-level error could result in task failure.

对于Rinse Pan任务,机器人拿起一个脏锅,在水龙头下冲洗它,然后将其放在晾碗架上。除了前两个任务中的挑战外,打开水龙头提出了一个难度很大的感知问题。旋钮是由闪亮的不锈钢制成,尺寸较小:大约4厘米长,直径0.7厘米。由于底座运动引入的随机性,手臂需要通过“视觉伺服”到发亮的旋钮来主动补偿错误。厘米级的误差可能导致任务失败。

For Use Cabinet, the robot picks up a heavy pot and places it inside a two-door cabinet. While seemingly a task that requires no base movement, the robot actually needs to move back and forth four times to accomplish this task. For example, when opening the cabinet door, both arms need to grasp the handle while the base is moving backward. This is necessary to avoid collision with the door and to keep both arms within their workspace. Maneuvers like this also stress the importance of whole-body teleoperation and control: if the arms and base control are separate, the robot will not be able to open both doors quickly and fluidly. Notably, the heaviest pot in our experiments weighs 1.4kg, exceeding the single arm’s payload limit of 750g while remaining within the combined payload of two arms.

对于Use Cabinet任务,机器人拿起一个重锅并将其放入一个双门橱柜中。尽管看似不需要底座运动的任务,但机器人实际上需要四次前后移动才能完成此任务。例如,在打开橱柜门时,当底座向后移动时,两只手都需要抓住把手。这是为了避免与门碰撞并使两只手都处于它们的工作空间内。类似的操作也强调了整体身体的远程操作和控制的重要性:如果手臂和底座控制是分开的,机器人将无法迅速而流畅地打开两个门。值得注意的是,我们实验中最重的锅重1.4kg,超过了单臂750g的有效载荷限制,但在两只手臂的联合有效载荷范围内。

For Call Elevator, the robot needs to enter the elevator by pressing the button. We emphasize long navigation, large randomization, and precise whole-body control in this task. The robot starts around 15m from the elevator and its starting position is randomized across the 10m wide lobby. To press the elevator button, the robot needs to go around a column and stop precisely next to the button. Pressing the button, which measures 2cm×2cm, requires precision, as pressing the periphery or pressing too lightly will not activate the elevator. The robot also needs to turn sharply and precisely to enter the elevator door: there is only 30cm of clearance between the robot’s widest part and the door.

对于Call Elevator任务,机器人需要通过按下按钮进入电梯。我们在这个任务中强调了长距离导航、大幅度随机化和精确的整体身体控制。机器人从电梯约15米的地方开始,并在10米宽的大厅内进行随机排列。为了按下电梯按钮,机器人需要绕过一根柱子并精确停在按钮旁边。按下按钮,按钮的尺寸为2cm×2cm,需要精确按压,因为按压边缘或按压过轻都无法激活电梯。机器人还需要迅速而精确地转身进入电梯门:机器人的最宽部分与门之间只有30厘米的空隙。

For Push Chairs, the robot needs to push in 5 chairs in front of a long desk. This task emphasizes the strength of the mobile manipulator: it needs to overcome the friction between the 5kg chair and the ground with coordinated arm and base movement. To make this task more challenging, we only collect data for the first 3 chairs, and stress test the robot by asking it to extrapolate to the 4th and 5th chairs.

对于Push Chairs任务,机器人需要将长桌前的5把椅子推入。这个任务强调了移动机械手的力量:它需要通过协调的手臂和底座运动来克服5kg椅子与地面之间的摩擦。为了使这个任务更具挑战性,我们只为前3把椅子收集数据,并对机器人进行压力测试以推断第4和第5把椅子。

For High Five, we include illustrations in Appendix A.1. The robot needs to go around the kitchen island, and whenever a human approaches it from the front, stop moving and high five with the human. After the high five, the robot should continue moving only when the human moves out of its path. We collect data wearing different clothes and evaluate the trained policy on unseen persons and unseen attire. While this task does not require a lot of precision, it highlights Mobile ALOHA’s potential for studying human-robot interactions.

对于High Five任务,我们在附录A.1中包含了插图。机器人需要绕过厨房岛,每当有人从前面靠近时,停下来与人击掌。击掌后,只有当人移出其路径时,机器人才应继续移动。我们在不同的服装下收集数据,并在未见过的人和未见过的服装上评估训练有素的策略。虽然这个任务不需要很高的精度,但它突显了Mobile ALOHA在研究人机交互方面的潜力。

We want to highlight that for all tasks mentioned above, open-loop replaying of a demonstration with objects restored to the same configurations achieves zero whole-task success. Successfully completing the task requires the learned policy to react in closed loop and correct for the resulting errors. We believe the source of errors during open-loop replaying is the mobile base’s velocity control. As an example, we observe >10cm error on average when replaying the base actions for a 180 degree turn with 1m radius. We include more details about this experiment in Appendix A.4.

我们要强调的是,对于上述所有任务,通过在将物体恢复到相同配置的情况下对演示进行开环重播,发现没有任务成功。成功完成任务要求学到的策略能够闭环地反应并纠正这些错误。我们认为在开环重播过程中出现错误的根本原因是移动底座的速度控制。例如,当重播具有1米半径的180度转弯的基本动作时,我们观察到平均误差超过10厘米。我们在附录A.4中提供了有关这个实验的更多细节。

6.Experiments

6.实验

We aim to answer two central questions in our experiments. (1) Can Mobile ALOHA acquire complex mobile manipulation skills with co-training and a small amount of mobile manipulation data? (2) Can Mobile ALOHA work with different types of imitation learning methods, including ACT [104], Diffusion Policy [18], and retrieval-based VINN [63]? We conduct extensive experiments in the real world to examine these questions.

我们在实验中旨在回答两个核心问题:(1) Mobile ALOHA是否能够通过联合训练和少量移动操作数据获得复杂的移动操作技能?(2) Mobile ALOHA是否能够与不同类型的模仿学习方法一起工作,包括ACT [104]、Diffusion Policy [18]和检索型VINN [63]?我们在现实世界中进行了大量实验证明这些问题。

As a preliminary, all methods we examine employ “action chunking” [104], where a policy predicts a sequence of future actions instead of one action at each timestep. It is already part of ACT and Diffusion Policy, and is simple to add to VINN. We found action chunking to be crucial for manipulation, improving the coherence of generated trajectories and reducing the latency from per-step policy inference. Action chunking also provides a unique advantage for Mobile ALOHA: handling the delay of different parts of the hardware more flexibly. We observe a delay between the target and actual velocities of our mobile base, while the delay for the position-controlled arms is much smaller. To account for a delay of d steps of the mobile base, our robot executes the first k − d arm actions and the last k − d base actions of an action chunk of length k.

作为初步工作,我们将研究的所有方法都采用了“动作分块”[104]的方法,其中策略预测未来一系列动作,而不是每个时间步预测一个动作。这已经是ACT和Diffusion Policy方法的一部分,而对于VINN方法来说,添加这一特性也很简单。我们发现动作分块对于操作是至关重要的,它提高了生成轨迹的连贯性并减少了每步策略推理的延迟。动作分块还为Mobile ALOHA提供了独特的优势:更灵活地处理硬件不同部分的延迟。我们观察到我们的移动底座的目标速度和实际速度之间存在延迟,而对于位置控制的手臂,延迟要小得多。为了考虑移动底座的d步延迟,我们的机器人执行长度为k的动作块的前k−d个手臂动作和后k−d个底座动作。
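The delay handling described above can be sketched in a few lines. This is a minimal illustration under one reading of the text, not the authors' implementation: it assumes that at execution step i the robot sends arm action i together with base action i + d, so that the delayed base motion lines up with the arm motion. The function name `align_chunk` and the string placeholders for actions are hypothetical.

```python
from typing import List, Sequence, Tuple


def align_chunk(arm_actions: Sequence, base_actions: Sequence, d: int) -> List[Tuple]:
    """Pair arm and base actions from one chunk of length k for a base delay of d steps.

    Keeps the first k - d arm actions and the last k - d base actions, so arm
    action i is sent together with base action i + d (an assumed pairing).
    """
    k = len(arm_actions)
    if len(base_actions) != k:
        raise ValueError("arm and base chunks must have the same length")
    if not 0 <= d < k:
        raise ValueError("delay d must satisfy 0 <= d < k")
    arm = list(arm_actions[: k - d])   # first k - d arm actions
    base = list(base_actions[d:])      # last k - d base actions
    return list(zip(arm, base))


# Hypothetical chunk of length k = 5 with a base delay of d = 2 steps.
pairs = align_chunk(["a0", "a1", "a2", "a3", "a4"],
                    ["b0", "b1", "b2", "b3", "b4"], d=2)
```

With k = 5 and d = 2, only 3 paired steps are executed from the chunk: the base commands are shifted earlier in time relative to the arm commands by the delay.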

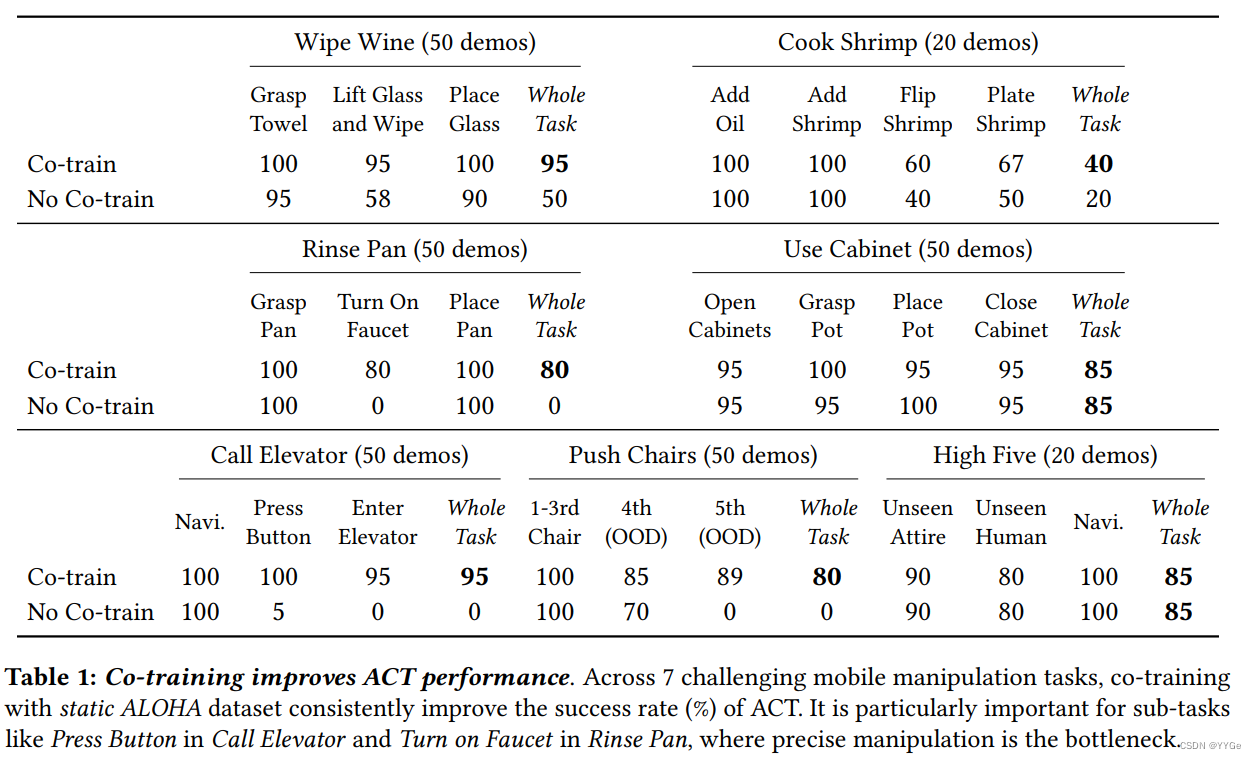

6.1.Co-training Improves Performance

6.1.联合训练提高性能

We start with ACT [104], the method introduced with ALOHA, and train it on all 7 tasks with and without co-training. We then evaluate each policy in the real world, with randomization of robot and object configurations as described in Figure 3. To calculate the success rate for a sub-task, we divide #Success by #Attempts. For example, in the case of the Lift Glass and Wipe sub-task, #Attempts equals the number of successes from the previous sub-task, Grasp Towel, as the robot could fail and stop at any sub-task. This also means the final success rate equals the product of all sub-task success rates. We report all success rates in Table 1. Each success rate is computed from 20 trials of evaluation, except Cook Shrimp which has 5.

我们首先使用ALOHA引入的方法ACT [104]开始,对所有7个任务进行训练,分别使用联合训练和不使用联合训练的方式。然后,在现实世界中评估每个策略,随机设置机器人和物体的配置,如图3所述。为了计算子任务的成功率,我们将#成功除以#尝试次数。例如,在举杯和擦玻璃子任务中,#尝试次数等于前一个子任务“拿毛巾”的成功次数,因为机器人在任何子任务都可能失败并停止。这也意味着最终成功率等于所有子任务成功率的乘积。我们在表1中报告了所有成功率。每个成功率是通过20次评估的平均计算得出的,Cook Shrimp任务除外,它只有5次评估。
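The bookkeeping behind the sub-task success rates can be made concrete with a small sketch. The counts below are hypothetical and are not taken from Table 1; the function names are also illustrative. The point is only that when #Attempts for each sub-task equals #Success of the previous one, the product of sub-task rates collapses to final successes divided by total trials.

```python
from fractions import Fraction
from typing import List


def subtask_success_rates(successes: List[int], trials: int) -> List[Fraction]:
    """Success rate per sub-task, where #Attempts is the previous sub-task's #Success."""
    rates = []
    attempts = trials
    for count in successes:
        if count > attempts:
            raise ValueError("a sub-task cannot succeed more often than it is attempted")
        # If nothing reached this sub-task, report a rate of 0.
        rates.append(Fraction(count, attempts) if attempts else Fraction(0))
        attempts = count
    return rates


def whole_task_rate(rates: List[Fraction]) -> Fraction:
    """Final success rate as the product of all sub-task success rates."""
    total = Fraction(1)
    for rate in rates:
        total *= rate
    return total


# Hypothetical example: 20 trials, three sequential sub-tasks.
rates = subtask_success_rates([18, 15, 12], trials=20)
final = whole_task_rate(rates)
```

Here the sub-task rates are 18/20, 15/18 and 12/15, and their product is 12/20, the fraction of trials that completed the whole task.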

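Table 1 shows that co-training clearly improves the performance of ACT across the 7 challenging mobile manipulation tasks. The gain is most visible in sub-tasks that involve precise manipulation, such as Press Button in Call Elevator and Turn On Faucet in Rinse Pan.

在表1中,可以看到联合训练明显提高了ACT(模仿学习)在7个具有挑战性的移动操控任务中的性能。这在涉及到精确操纵的子任务中尤为明显,例如Call Elevator任务中的按按钮和Rinse Pan任务中的打开水龙头。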

With the help of co-training, the robot obtains 95% success for Wipe Wine, 95% success for Call Elevator, 85% success for Use Cabinet, 85% success for High Five, 80% success for Rinse Pan, and 80% success for Push Chairs. Each of these tasks only requires 50 in-domain demonstrations, or 20 in the case of High Five. The only task that falls below 80% success is Cook Shrimp (40%), which is a 75-second long-horizon task for which we only collected 20 demonstrations. We found the policy to struggle with flipping the shrimp with the spatula and pouring the shrimp inside the white bowl, which has low contrast with the white table. We hypothesize that the lower success is likely due to the limited demonstration data. Co-training improves the whole-task success rate in 5 out of the 7 tasks, with a boost of 45%, 20%, 80%, 95% and 80% respectively. For the remaining two tasks, the success rate is comparable between co-training and no co-training. We find co-training to be more helpful for sub-tasks where precise manipulation is the bottleneck, for example Press Button, Flip Shrimp, and Turn On Faucet. In all of these cases, compounding errors appear to be the main source of failure, either from the stochasticity of robot base velocity control or from rich contacts such as grasping the spatula and making contact with the pan during Flip Shrimp. We hypothesize that the “motion prior” of grasping and approaching objects in the static ALOHA dataset still benefits Mobile ALOHA, especially given the invariances introduced by the wrist camera [41]. We also find the co-trained policy to generalize better in the case of Push Chairs and Wipe Wine. For Push Chairs, both co-training and no co-training achieve perfect success for the first 3 chairs, which are seen in the demonstrations. However, co-training performs much better when extrapolating to the 4th and 5th chairs, by 15% and 89% respectively. For Wipe Wine, we observe that the co-trained policy performs better at the boundary of the wine glass randomization region. We thus hypothesize that co-training can also help prevent overfitting, given the low-data regime of 20-50 demonstrations and the expressive transformer-based policy used.

在联合训练的帮助下,机器人在Wipe Wine任务中获得95%的成功率,在Call Elevator任务中获得95%的成功率,在Use Cabinet任务中获得85%的成功率,在High Five任务中获得85%的成功率,在Rinse Pan任务中获得80%的成功率,在Push Chairs任务中获得80%的成功率。这些任务每个只需要50个领域内演示,或者在High Five任务中只需要20个。唯一一个成功率低于80%的任务是Cook Shrimp任务(40%),这是一个时长为75秒的长时任务,我们只收集了20个演示。我们发现策略在用铲子翻转虾以及将虾倒入白碗时表现较差,白碗与白色桌面的对比度很低。我们推测较低的成功率可能是由于演示数据有限。联合训练在7项任务中的5项上提高了整体任务成功率,分别提升了45%、20%、80%、95%和80%。对于另外两项任务,联合训练和非联合训练的成功率相当。我们发现联合训练对于以精确操纵为瓶颈的子任务更有帮助,例如按按钮、翻虾和打开水龙头。在所有这些情况下,累积误差似乎是失败的主要来源,它们或来自机器人底座速度控制的随机性,或来自翻虾过程中抓取铲子、与平底锅接触等丰富的接触。我们假设在静态ALOHA数据集中抓取和接近对象的“运动先验”仍然有益于Mobile ALOHA,尤其是考虑到手腕相机引入的不变性 [41]。我们还发现联合训练的策略在Push Chairs和Wipe Wine上的泛化效果更好。对于Push Chairs,联合训练和非联合训练在前3把椅子上都能够完美成功,这些椅子都在演示中出现过。然而,在外推到第4和第5把椅子时,联合训练的性能要好得多,分别提高了15%和89%。对于Wipe Wine,我们观察到联合训练的策略在酒杯随机化区域的边缘表现更好。因此,我们推测联合训练还可以帮助防止过拟合,尤其考虑到20-50次演示的低数据情境和所使用的表达能力强的基于Transformer的策略。

6.2.Compatibility with ACT, Diffusion Policy, and VINN

6.2.与ACT、Diffusion Policy和VINN的兼容性

We train two recent imitation learning methods, Diffusion Policy [18] and VINN [63], with Mobile ALOHA in addition to ACT. Diffusion Policy trains a neural network to gradually refine the action prediction. We use the DDIM scheduler [85] to improve inference speed, and apply data augmentation to image observations to prevent overfitting. The co-training data pipeline is the same as for ACT, and we include more training details in Appendix A.3. VINN trains a visual representation model, BYOL [37], and uses it to retrieve actions from the demonstration dataset with nearest neighbors. We augment VINN retrieval with proprioception features and tune the relative weight to balance the importance of visual and proprioception features. We also retrieve an action chunk instead of a single action and find a significant performance improvement, similar to Zhao et al. For co-training, we simply co-train the BYOL encoder with the combined mobile and static data.

我们使用Mobile ALOHA训练两种最近的模仿学习方法,Diffusion Policy [18] 和VINN [63],以及ACT。Diffusion Policy训练一个神经网络逐渐优化动作预测。我们使用DDIM调度器 [85] 提高推理速度,并对图像观察进行数据增强以防止过拟合。联合训练数据管道与ACT相同,关于更多的训练细节请参见附录A.3。VINN训练一个视觉表示模型,BYOL [37] 并使用它从最近邻的演示数据集中检索动作。我们使用 proprioception 特征增强VINN检索,并调整相对权重以平衡视觉和 proprioception 特征的重要性。我们还检索一个动作块而不是一个单独的动作,并发现与Zhao等人类似的显著性能改进。对于联合训练,我们简单地与综合的移动和静态数据一起联合训练BYOL编码器。
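To make the retrieval step more concrete, here is a minimal sketch of nearest-neighbor retrieval of an action chunk with a tunable proprioception weight. It is an illustration under stated assumptions rather than the configuration used in the experiments: the squared-Euclidean distance, the softmax-style weighting over neighbors, and the names `retrieve_chunk` and `proprio_weight` are all assumptions, and the embeddings are plain lists standing in for BYOL features.

```python
import math
from typing import List, Sequence, Tuple

Chunk = List[List[float]]  # chunk_length x action_dim
Entry = Tuple[Sequence[float], Sequence[float], Chunk]  # (visual, proprio, chunk)


def sq_dist(a: Sequence[float], b: Sequence[float]) -> float:
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def retrieve_chunk(query_visual: Sequence[float], query_proprio: Sequence[float],
                   dataset: List[Entry], proprio_weight: float = 1.0,
                   num_neighbors: int = 1) -> Chunk:
    """Return a distance-weighted average of the nearest neighbors' action chunks."""
    if not dataset:
        raise ValueError("dataset must not be empty")
    # Combined distance: visual term plus weighted proprioception term.
    scored = sorted(
        ((sq_dist(query_visual, v) + proprio_weight * sq_dist(query_proprio, p), chunk)
         for v, p, chunk in dataset),
        key=lambda item: item[0],
    )[:num_neighbors]
    # Softmax over negative distances (shifted by the minimum for stability).
    min_d = scored[0][0]
    weights = [math.exp(-(d - min_d)) for d, _ in scored]
    total = sum(weights)
    length, dim = len(scored[0][1]), len(scored[0][1][0])
    out = [[0.0] * dim for _ in range(length)]
    for w, (_, chunk) in zip(weights, scored):
        for t in range(length):
            for j in range(dim):
                out[t][j] += (w / total) * chunk[t][j]
    return out


# Two hypothetical demonstrations with 2-step, 1-dimensional action chunks.
demo_data = [([0.0, 0.0], [1.0], [[1.0], [1.0]]),
             ([1.0, 0.0], [0.0], [[2.0], [2.0]])]
visual_only = retrieve_chunk([0.1, 0.0], [0.0], demo_data, proprio_weight=0.0)
proprio_heavy = retrieve_chunk([0.1, 0.0], [0.0], demo_data, proprio_weight=10.0)
```

In this toy example the query looks like the first demonstration but has the joint state of the second, so raising `proprio_weight` switches which chunk is retrieved. This is the trade-off the text describes between visual and proprioception feature importance.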

In Table 2, we report co-training and no co-training success rates on 2 real-world tasks: Wipe Wine and Push Chairs. Overall, Diffusion Policy performs similarly to ACT on Push Chairs, both obtaining 100% with co-training. For Wipe Wine, we observe worse performance with Diffusion Policy, at 65% success. Diffusion Policy is less precise when approaching the kitchen island and grasping the wine glass. We hypothesize that 50 demonstrations are not enough for Diffusion Policy given its expressiveness: previous works that utilize Diffusion Policy tend to train on upwards of 250 demonstrations. For VINN + Chunking, the policy performs worse than ACT or Diffusion Policy across the board, while still reaching reasonable success rates, with 60% on Push Chairs and 15% on Wipe Wine. The main failure modes are imprecise grasping on Lift Glass and Wipe, as well as jerky motion when switching between chunks. We find that increasing the weight on proprioception when retrieving can improve smoothness, at the cost of paying less attention to visual inputs. We find co-training to improve Diffusion Policy’s performance, by 30% and 20% on Wipe Wine and Push Chairs respectively. This is expected, as co-training helps address overfitting. Unlike ACT and Diffusion Policy, we observe mixed results for VINN, where co-training hurts Wipe Wine by 5% while improving Push Chairs by 20%. Only the representations of VINN are co-trained, while the action prediction mechanism of VINN does not have a way to leverage the out-of-domain static ALOHA data, perhaps explaining these mixed results.

在表2中,我们报告了在两个真实世界任务Wipe Wine和Push Chairs上进行联合训练和非联合训练的成功率。总体而言,Diffusion Policy在Push Chairs上的表现与ACT相似,都在联合训练时达到了100%。对于Wipe Wine,我们观察到扩散在65%的成功率下表现较差。Diffusion Policy在靠近厨房岛和抓取酒杯时不够精确。我们假设50个演示对于Diffusion来说不够,因为它的表达力较强:先前利用Diffusion Policy的工作通常在250次以上的演示上进行训练。对于VINN + Chunking,该策略在整体上表现不及ACT或Diffusion,但在Push Chairs上仍然达到了合理的成功率,为60%,在Wipe Wine上为15%。主要的失败模式是在Lift Glass和Wipe时抓取不准确,以及在切换块时的抖动运动。我们发现在检索时增加 proprioception 权重可以提高平稳性,但代价是减少对视觉输入的关注。我们发现联合训练可以提高Diffusion Policy的性能,在Wipe Wine和Push Chairs上分别提高了30%和20%。这是预期的,因为联合训练有助于解决过拟合问题。与ACT和Diffusion Policy不同,我们观察到对于VINN,联合训练的效果参差不齐,联合训练对Wipe Wine的影响为-5%,而对Push Chairs的影响为20%。只有VINN的表示被联合训练,而VINN的动作预测机制没有办法利用领域外的静态ALOHA数据,这可能解释了这些混合的结果。

7.Ablation Studies

7.消融实验

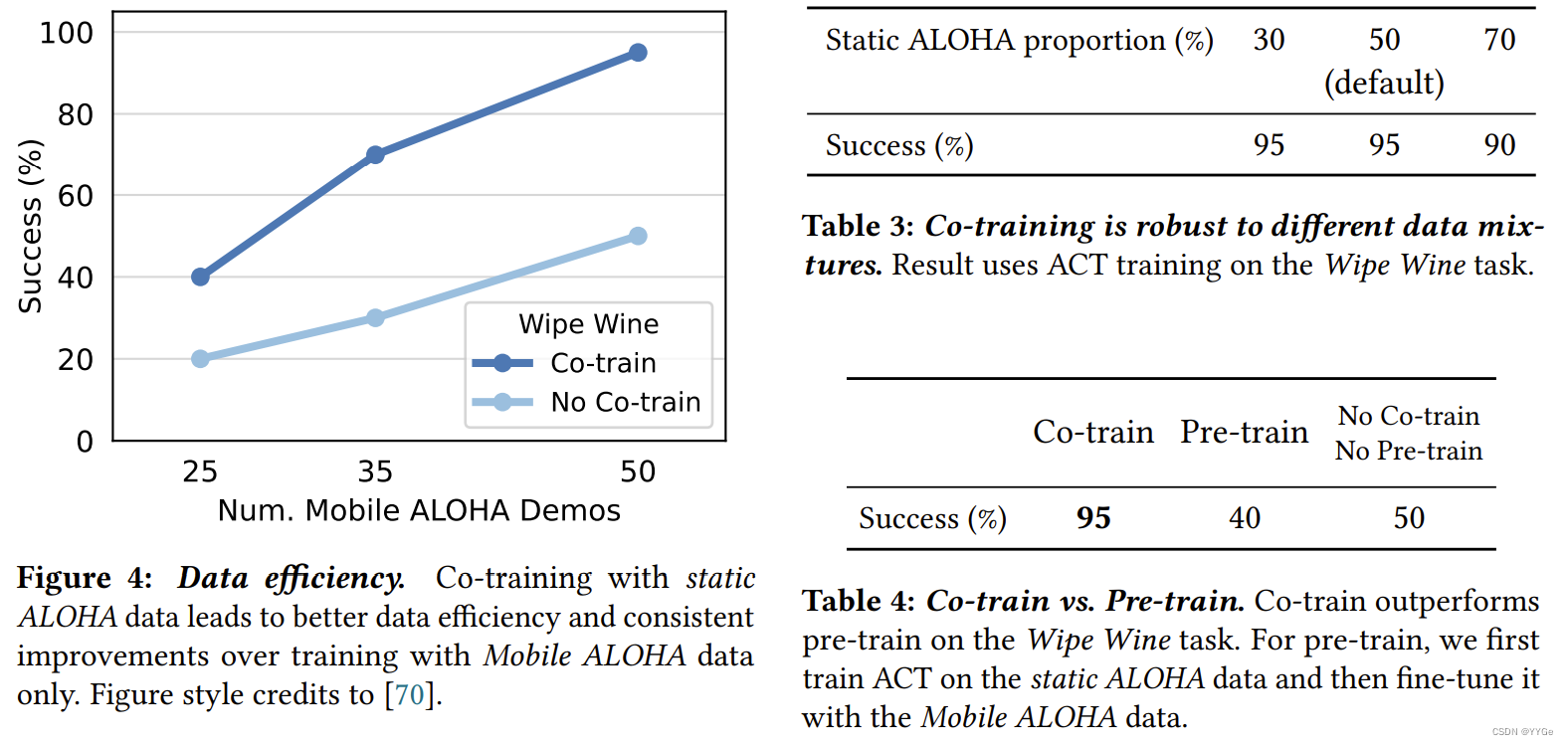

Data Efficiency. In Figure 4, we ablate the number of mobile manipulation demonstrations for both co-training and no co-training, using ACT on the Wipe Wine task. We consider 25, 35, and 50 Mobile ALOHA demonstrations and evaluate for 20 trials each. We observe that co-training leads to better data efficiency and consistent improvements over training using only Mobile ALOHA data. With co-training, the policy trained with 35 in-domain demonstrations can outperform the no co-training policy trained with 50 in-domain demonstrations, by 20% (70% vs. 50%).

数据效率。 在图4中,我们对使用ACT进行Wipe Wine任务的联合训练和非联合训练的移动操作演示数量进行了消融。我们考虑了25、35和50个Mobile ALOHA演示,并进行了每个20次的评估。我们观察到,联合训练提高了数据效率,并在仅使用Mobile ALOHA数据进行训练的情况下持续改进。通过联合训练,使用35个领域内演示训练的策略可以胜过不使用联合训练的使用50个领域内演示训练的策略,提高了20%(70% vs. 50%)。

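Figure 4: Data efficiency. Co-training with static ALOHA data improves data efficiency and gives consistent improvements over training with Mobile ALOHA data only. Figure style credits to [70].

图4:数据效率。使用静态ALOHA数据进行联合训练可以提高数据效率,并在仅使用Mobile ALOHA数据进行训练的基础上稳定改进。图形风格由[70]提供。

Table 3: Co-training is robust to different data mixtures. Results use ACT trained on the Wipe Wine task.

表3:联合训练对不同数据混合方式具有鲁棒性。结果使用ACT在Wipe Wine任务上进行训练。

Table 4: Co-training vs. pre-training. Co-training outperforms pre-training on the Wipe Wine task. For pre-training, we first train ACT on static ALOHA data and then fine-tune with Mobile ALOHA data.

表4:联合训练 vs 预训练。在Wipe Wine任务中,联合训练优于预训练。对于预训练,我们首先在静态ALOHA数据上训练ACT,然后用Mobile ALOHA数据进行微调。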

Co-training Is Robust To Different Data Mixtures. In our co-training experiments so far, we sample with equal probability from the static ALOHA datasets and the Mobile ALOHA task dataset to form a training mini-batch, giving a co-training data sampling rate of roughly 50%. In Table 3, we study how different sampling strategies affect performance on the Wipe Wine task. We train ACT with 30% and 70% co-training data sampling rates in addition to 50%, then evaluate 20 trials each. We see similar performance across the board, with 95%, 95% and 90% success respectively. This experiment suggests that co-training performance is not sensitive to different data mixtures, reducing the manual tuning necessary when incorporating co-training on a new task.

联合训练对不同数据混合的鲁棒性。 在迄今为止的联合训练实验中,我们从静态ALOHA数据集和Mobile ALOHA任务数据集中等概率抽样以形成训练小批量,从而得到联合训练数据采样率约为50%。在表3中,我们研究了不同抽样策略如何影响Wipe Wine任务的性能。我们使用30%和70%的联合训练数据采样率以及50%进行ACT训练,然后进行每个20次的评估。我们看到整体上性能相似,分别为95%,95%和90%的成功率。该实验表明,联合训练的性能对于不同的数据混合并不敏感,减少了在将联合训练纳入新任务时需要手动调整的工作。
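The mini-batch construction described above can be sketched as a per-sample coin flip between the two data sources. This is a simplified illustration, not the training code: the name `sample_cotraining_batch` is hypothetical, `static_rate` is assumed to mean the probability of drawing from the static ALOHA data, and the datasets are stand-in lists of labels.

```python
import random
from typing import List, Optional, Sequence


def sample_cotraining_batch(static_data: Sequence, mobile_data: Sequence,
                            batch_size: int, static_rate: float = 0.5,
                            rng: Optional[random.Random] = None) -> List:
    """Draw a mini-batch, picking each sample's source independently.

    With probability `static_rate` a sample comes from the static data,
    otherwise from the mobile task data.
    """
    if not 0.0 <= static_rate <= 1.0:
        raise ValueError("static_rate must be in [0, 1]")
    rng = rng or random.Random()
    batch = []
    for _ in range(batch_size):
        source = static_data if rng.random() < static_rate else mobile_data
        batch.append(rng.choice(source))
    return batch


# Stand-in datasets: labels instead of real (observation, action) samples.
static_demo = ["static"] * 100
mobile_demo = ["mobile"] * 50
batch = sample_cotraining_batch(static_demo, mobile_demo, batch_size=10_000,
                                static_rate=0.5, rng=random.Random(0))
static_fraction = batch.count("static") / len(batch)
```

Changing `static_rate` to 0.3 or 0.7 would correspond to the other mixtures studied in Table 3 under this reading. Because the source is chosen per sample, the mixture does not depend on the relative sizes of the two datasets.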

Co-training Outperforms Pre-training. In Table 4, we compare co-training and pre-training on the static ALOHA data. For pre-training, we first train ACT on the static ALOHA data for 10K steps and then continue training with in-domain task data. We experiment with the Wipe Wine task and observe that pre-training provides no improvements over training solely on Wipe Wine data. We hypothesize that the network forgets its experience on the static ALOHA data during the fine-tuning phase.

联合训练优于预训练。 在表4中,我们比较了对静态ALOHA数据进行联合训练和预训练的效果。对于预训练,我们首先在静态ALOHA数据上对ACT进行1万步的预训练,然后继续使用领域内任务数据进行微调。我们进行了Wipe Wine任务的实验,并观察到预训练在仅使用Wipe Wine数据进行训练时没有改进。我们假设网络在微调阶段遗忘了在静态ALOHA数据上的经验。

8.User Studies

8.用户研究

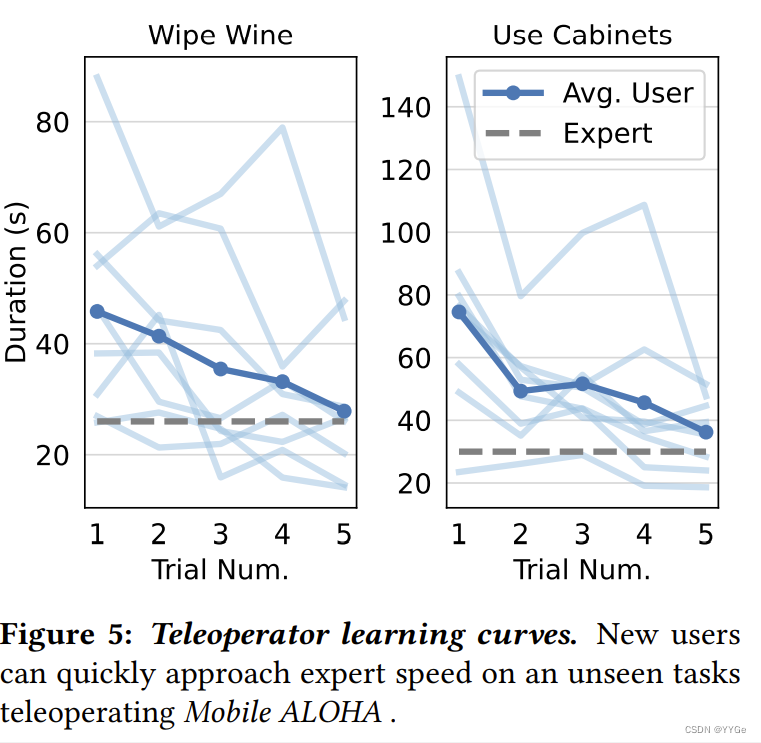

We conduct a user study to evaluate the effectiveness of Mobile ALOHA teleoperation. Specifically, we measure how fast participants are able to learn to teleoperate an unseen task. We recruit 8 participants among computer science graduate students, with 5 females and 3 males aged 21-26. Four participants have no prior teleoperation experience, and the remaining 4 have varying levels of expertise. None of them has used Mobile ALOHA before. We start by allowing each participant to freely interact with objects in the scene for 3 minutes. We hold out all objects that will be used for the unseen tasks during this process. Next, we give each participant two tasks: Wipe Wine and Use Cabinet. An expert operator first demonstrates the task, followed by 5 consecutive trials from the participant. We record the completion time for each trial, and plot them in Figure 5. We notice a steep decline in completion time: on average, the time it took to perform the task went from 46s to 28s for Wipe Wine (down 39%), and from 75s to 36s for Use Cabinet (down 52%). The average participant can also approach the speed of expert demonstrations after 5 trials, demonstrating the ease of use and learning of Mobile ALOHA teleoperation.

我们进行了一项用户研究,以评估Mobile ALOHA远程操作的有效性。具体而言,我们测量参与者学习远程操作未见过任务的速度。我们在计算机科学研究生中招募了8名参与者,其中有5名女性和3名男性,年龄在21-26岁之间。其中4名参与者没有远程操作经验,另外4名有不同水平的专业知识。他们中没有人在此之前使用过Mobile ALOHA。我们首先允许每个参与者在场景中自由与物体互动3分钟。在此过程中,我们保留了将在未见过任务中使用的所有物体。接下来,我们为每个参与者提供两个任务:擦拭葡萄酒和使用橱柜。专业操作员将首先演示任务,然后是参与者的5次连续尝试。我们记录了每次尝试的完成时间,并在图5中绘制了它们。我们注意到完成时间呈急剧下降的趋势:平均而言,执行任务所需的时间从46秒减少到28秒(减少39%)的擦拭葡萄酒任务,从75秒减少到36秒(减少52%)的使用橱柜任务。在5次尝试后,平均参与者也能够达到专业演示的速度,展示了Mobile ALOHA远程操作的易用性和学习性。
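The reported reductions follow directly from the average completion times. A short check, with a hypothetical helper name, makes the arithmetic explicit:

```python
def relative_reduction(before: float, after: float) -> float:
    """Fractional decrease from `before` to `after`."""
    if before <= 0:
        raise ValueError("before must be positive")
    return (before - after) / before


wipe_wine = relative_reduction(46, 28)    # average time fell from 46s to 28s
use_cabinet = relative_reduction(75, 36)  # average time fell from 75s to 36s
```

Rounded to whole percentages, these evaluate to 39% and 52%, matching the figures quoted in the text.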

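Figure 5: Operator learning curves. New users can quickly approach expert speed on unseen tasks when teleoperating Mobile ALOHA.

图5:操作员学习曲线。新用户可以迅速在未见过的任务上接近专家的速度,远程操作Mobile ALOHA。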

9.Conclusion, Limitations and Future Directions

9.结论、局限性和未来方向

In summary, our paper tackles both the hardware and the software aspects of bimanual mobile manipulation. Augmenting the ALOHA system with a mobile base and whole-body teleoperation allows us to collect high-quality demonstrations on complex mobile manipulation tasks. Then, through imitation learning co-trained with static ALOHA data, Mobile ALOHA can learn to perform these tasks with only 20 to 50 demonstrations. We are also able to keep the system accessible, with a budget under $32k including onboard power and compute, and with both software and hardware open-sourced.

总体而言,我们的论文涉及双臂移动操作的硬件和软件方面。通过在ALOHA系统中增加移动底座和全身远程操作,我们能够收集复杂移动操作任务的高质量演示。然后,通过与静态ALOHA数据联合训练的模仿学习,Mobile ALOHA可以学会执行这些任务,仅需20到50次演示。我们还能够保持系统的可访问性,预算不超过32,000美元,包括板载电源和计算,并在软硬件上进行开源。

Despite Mobile ALOHA’s simplicity and performance, there are still limitations that we hope to address in future work. On the hardware front, we will seek to reduce the occupied area of Mobile ALOHA. The current footprint of 90cm x 135cm could be too large for certain narrow paths. In addition, the fixed height of the two follower arms makes lower cabinets, ovens and dishwashers challenging to reach. We are planning to add more degrees of freedom to the arms’ elevation to address this issue. On the software front, we limit our policy learning results to single-task imitation learning. The robot cannot yet improve itself autonomously or explore to acquire new knowledge. In addition, the Mobile ALOHA demonstrations are collected by two expert operators. We leave imitation learning from highly suboptimal, heterogeneous datasets to future work.

尽管Mobile ALOHA在简单性和性能方面表现出色,但仍存在一些局限性,我们希望在未来的工作中加以解决。在硬件方面,我们将寻求减小Mobile ALOHA的占用面积。目前的占地面积为90cm x 135cm,在某些路径可能太窄。此外,两个随从机械臂的固定高度使得难以到达较低的橱柜、烤箱和洗碗机。我们计划为机械臂的升降添加更多的自由度以解决这个问题。在软件方面,我们将我们的策略学习结果限制在单任务模仿学习中。机器人尚不能自主改进自己或探索以获取新知识。此外,Mobile ALOHA的演示由两名专业操作员收集。我们将未来的工作留给处理来自高度次优、异构数据集的模仿学习。

Acknowledgments

We thank the Stanford Robotics Center and Steve Cousins for providing facility support for our experiments. We also thank members of Stanford IRIS Lab: Lucy X. Shi and Tian Gao, and members of Stanford REAL Lab: Cheng Chi, Zhenjia Xu, Yihuai Gao, Huy Ha, Zeyi Liu, Xiaomeng Xu, Chuer Pan and Shuran Song, for providing extensive help with our experiments. We greatly appreciate the photography by Qingqing Zhao, and the feedback from and helpful discussions with Karl Pertsch, Boyuan Chen, Ziwen Zhuang, Quan Vuong and Fei Xia. This project is supported by the Boston Dynamics AI Institute and ONR grant N00014-21-1-2685. Zipeng Fu is supported by a Stanford Graduate Fellowship.

我们感谢斯坦福大学机器人中心和Steve Cousins为我们的实验提供设施支持。我们还感谢斯坦福IRIS实验室的成员:Lucy X. Shi和Tian Gao,以及斯坦福REAL实验室的成员:Cheng Chi,Zhenjia Xu,Yihuai Gao,Huy Ha,Zeyi Liu,Xiaomeng Xu,Chuer Pan和Shuran Song,为我们的实验提供了广泛的帮助。我们非常感谢Qingqing Zhao的摄影,以及Karl Pertsch,Boyuan Chen,Ziwen Zhuang,Quan Vuong和Fei Xia的反馈和有益的讨论。该项目得到了波士顿动力人工智能研究所和ONR资助N00014-21-1-2685的支持。Zipeng Fu得到了斯坦福研究生奖学金的支持。

A.Appendix

A. 附录

A.1. High Five

A.1. 击掌

We include the illustration for the High Five task in Figure 6. The robot needs to go around the kitchen island, and whenever a human approaches it from the front, stop moving and high five with the human. After the high five, the robot should continue moving only when the human moves out of its path. We collect data wearing different clothes and evaluate the trained policy on unseen persons and unseen attire. While this task does not require a lot of precision, it highlights Mobile ALOHA’s potential for studying human-robot interactions.

我们在图6中提供了High Five任务的插图。机器人需要绕过厨房岛,每当有人从前面靠近时,停下来与人击掌。击掌后,只有当人移出其路径时,机器人才应继续移动。我们穿着不同的衣服收集数据,并在未见过的人和未见过的服装上评估训练后的策略。虽然这个任务不需要很高的精度,但它突显了Mobile ALOHA在研究人机交互方面的潜力。

A.2. Example Image Observations

A.2. 示例图像观察

Figure 7 showcases example images of Wipe Wine captured during data collection. The images, arranged sequentially in time from top to bottom, are sourced from three different camera angles from left to right columns: the top egocentric camera, the left wrist camera, and the right wrist camera. The top camera is stationary with respect to the robot frame. In contrast, the wrist cameras are attached to the arms, providing close-up views of the gripper in action. All cameras are set with a fixed focal length and feature auto-exposure to adapt to varying light conditions. These cameras stream at a resolution of 480 × 640 and a frame rate of 30 frames per second.

图7展示了在数据收集过程中捕获的Wipe Wine任务的示例图像。这些图像从上到下按时间顺序排列,从左到右三列分别来自三个不同的摄像机视角:顶部的自我中心摄像机、左腕摄像机和右腕摄像机。顶部摄像机相对于机器人坐标系是静止的。相比之下,腕部摄像机安装在机械臂上,提供了夹持器动作的特写视图。所有摄像机都设置为固定焦距,并具有自动曝光功能,以适应不同的光照条件。这些摄像机以480×640的分辨率和30帧每秒的帧率传输图像。

A.3. Experiment Details and Hyperparameters of ACT, Diffusion Policy and VINN

A.3. ACT、Diffusion Policy和VINN的实验细节和超参数

We carefully tune the baselines and include the hyperparameters for the baselines and co-training in Tables 5, 6, 7, 8, and 9.

我们仔细调整了基线,并在表5、6、7、8、9中包含了基线和共同训练的超参数。

A.4. Open-Loop Replaying Errors

A.4. 开环重播误差

Figure 8 shows the spread of end-effector error at the end of replaying a 300-step (6s) demonstration. The demonstration contains a 180 degree turn with a radius of roughly 1m. At the end of the trajectory, the right arm reaches out to a piece of paper on the table and taps it gently. The tapping positions are then marked on the paper. The red cross denotes the original tapping position, and the red dots are 20 replays of the same trajectory. We observe significant error when replaying the base velocity profile, which is expected due to the stochasticity of the ground contact and the low-level controller. Specifically, all replay points are biased to the left side by roughly 10cm, and spread along a line of roughly 20cm. We found our policy to be capable of correcting such errors without explicit localization such as SLAM.

图8显示了在重播300步(6秒)演示结束时末端执行器误差的分布。演示包含了一个半径约1米的180度转弯。在轨迹的末端,右臂将伸出到桌子上的一张纸上,并轻轻拍打它。拍打的位置然后在纸上标记出来。红色十字标记原始的拍打位置,红色点是相同轨迹的20次重播。我们观察到在重播基础速度轮廓时存在显着的误差,这是由于地面接触的随机性和低级控制器的随机性所致。具体来说,所有的重播点都向左偏移大约10厘米,并沿着大约20厘米的一条线分布。我们发现我们的策略能够在没有显式定位(如SLAM)的情况下纠正这种错误。
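A toy dead-reckoning model helps show why open-loop replay of base velocities is fragile. The sketch below integrates a constant-velocity 180 degree turn of 1m radius over 300 steps (6s), then repeats it with the realized angular velocity scaled by a gain. The unicycle model, the function `replay_base`, and the 5% gain mismatch are all assumptions chosen for illustration; they are not measurements from the experiment in Figure 8.

```python
import math
from typing import Tuple


def replay_base(linear_v: float, angular_v: float, steps: int, dt: float,
                angular_gain: float = 1.0) -> Tuple[float, float, float]:
    """Integrate a unicycle model; `angular_gain` scales the realized turn rate."""
    x = y = theta = 0.0
    for _ in range(steps):
        omega = angular_gain * angular_v
        heading = theta + 0.5 * omega * dt  # midpoint heading for this step
        x += linear_v * math.cos(heading) * dt
        y += linear_v * math.sin(heading) * dt
        theta += omega * dt
    return x, y, theta


STEPS = 300                 # demonstration length from the text
DT = 6.0 / STEPS            # 6 seconds total
RADIUS = 1.0                # turn radius in meters
OMEGA = math.pi / 6.0       # rad/s needed for a 180 degree turn in 6 s
SPEED = OMEGA * RADIUS      # matching linear speed in m/s

ideal = replay_base(SPEED, OMEGA, STEPS, DT)
# Hypothetical: the base realizes only 95% of the commanded angular velocity.
drifted = replay_base(SPEED, OMEGA, STEPS, DT, angular_gain=0.95)
error_m = math.dist(ideal[:2], drifted[:2])
```

In this model a mismatch of a few percent in the turn rate already moves the final base position by more than 10cm, the same order of magnitude as the errors reported above. A policy that reacts to camera observations can compensate for this, while an open-loop replay cannot.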