热门标签

热门文章

- 1windows的cmd命令中如何使用延迟方法【ping -n 30 127.0.0.1 > null】_ping -n 3 127.0.0.1>nul

- 2JS中的惰性函数_js 惰性函数

- 3自动驾驶系统概述

- 4html+css_css

- 5python小波包分解_小波包获得某个节点信号的几个细节问题

- 6修改system.img的文件的权限和属性:使用make.ext4fs的方法_system.ext4.win

- 7Win10 配置ADB安装2023.7.12版本_adb windows

- 8Redis学习笔记:基础篇数据结构与对象_embstr 是哪两个单词

- 9大数据毕业设计 opencv指纹识别系统 - python 图像识别_基于opencv的毕业设计

- 10LocalDB的使用详解

当前位置: article > 正文

HuggingFace 模型离线使用最佳方法!

作者:菜鸟追梦旅行 | 2024-04-06 13:27:39

赞

踩

HuggingFace 模型离线使用最佳方法!

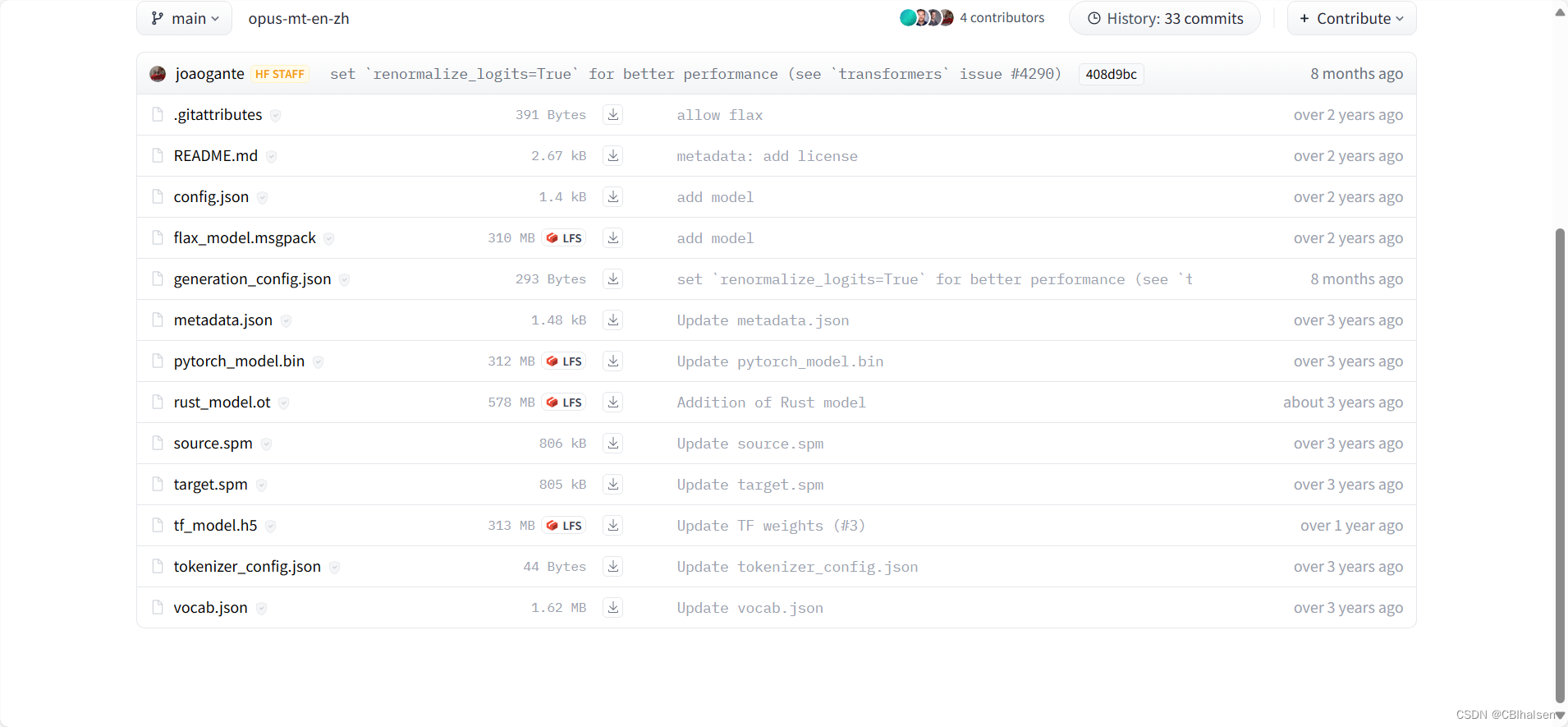

以:Helsinki-NLP/opus-mt-en-zh (translation)模型为例:

如图,假设我们的运行环境基于python,那么我们通常只需要以下文件:

"config.json", "pytorch_model.bin", "tokenizer_config.json", "vocab.json", "source.spm", "target.spm"

其余文件如:rust,我们并不需要下载, 不使用tesorflow ,tf_model.h5也不需下载/

huggingface 官方提供以下几种下载方式:

git lfs install

git clone https://huggingface.co/Helsinki-NLP/opus-mt-en-zh

# If you want to clone without large files - just their pointers GIT_LFS_SKIP_SMUDGE=1 git clone https://huggingface.co/Helsinki-NLP/opus-mt-en-zh

有完全克隆库,也有只克隆指针文件,但都无法根据我们的语言需求指定下载我们需要的部分文件:

所以本文推荐使用以下代码进行模型下载:

- import requests

- import os

-

- def download_file(url, dest_folder, file_name):

- """

- 下载文件并保存到指定的文件夹中。

- """

- response = requests.get(url, allow_redirects=True)

- if response.status_code == 200:

- with open(os.path.join(dest_folder, file_name), 'wb') as file:

- file.write(response.content)

- else:

- print(f"Failed to download {file_name}. Status code: {response.status_code}")

-

- def download_model_files(model_name):

- """

- 根据模型名称下载模型文件。

- """

- # 文件列表

- files_to_download = [

- "config.json",

- "pytorch_model.bin",

- "tokenizer_config.json",

- "vocab.json",

- "source.spm",

- "target.spm" # 如果模型不使用SentencePiece,这两个文件可能不需要

- ]

-

- # 创建模型文件夹

- model_folder = model_name.split('/')[-1] # 从模型名称中获取文件夹名称

- if not os.path.exists(model_folder):

- os.makedirs(model_folder)

-

- # 构建下载链接并下载文件

- base_url = f"https://huggingface.co/{model_name}/resolve/main/"

- for file_name in files_to_download:

- download_url = base_url + file_name

- print(f"Downloading {file_name}...")

- download_file(download_url, model_folder, file_name)

-

- # 示例使用

- model_name = "Helsinki-NLP/opus-mt-zh-en"

- download_model_files(model_name)

如果需要增添模型文件,可在文件列表进行修改。

同时:当我们使用:

pipeline 本地运行模型时,虽然会下载python_model, 但是仍需要联网访问config.json不能实现离线运行,所以,当使用本文上述下载代码完成模型的下载后:

运行以下案例代码:

- from transformers import pipeline, AutoTokenizer

- import os

-

- os.environ['TRANSFORMERS_OFFLINE']="1"

- # 示例文本列表

- texts_to_translate = [

- "你好",

- "你好啊",

- # 添加更多文本以确保总token数超过400

- ]

-

- # 指定模型的本地路径

- model_name = "./opus-mt-zh-en" # 请替换为实际路径

- # 创建翻译管道,指定本地模型路径

- pipe = pipeline("translation", model=model_name)

- # 获取tokenizer,指定本地模型路径

- tokenizer = AutoTokenizer.from_pretrained(model_name)

-

- result = pipe(texts_to_translate)

- print(result)

建议保留:

os.environ['TRANSFORMERS_OFFLINE']="1",放弃向huggingface联网访问。

结果如下:

- 2024-03-29 15:57:52.211565: I tensorflow/core/util/port.cc:113] oneDNN custom operations are on. You may see slightly different numerical results due to floating-point round-off errors from different computation orders. To turn them off, set the environment variable `TF_ENABLE_ONEDNN_OPTS=0`.

- 2024-03-29 15:57:53.251227: I tensorflow/core/util/port.cc:113] oneDNN custom operations are on. You may see slightly different numerical results due to floating-point round-off errors from different computation orders. To turn them off, set the environment variable `TF_ENABLE_ONEDNN_OPTS=0`.

- [{'translation_text': 'Hello.'}, {'translation_text': 'Hello.'}]

声明:本文内容由网友自发贡献,不代表【wpsshop博客】立场,版权归原作者所有,本站不承担相应法律责任。如您发现有侵权的内容,请联系我们。转载请注明出处:https://www.wpsshop.cn/w/菜鸟追梦旅行/article/detail/372167?site=

推荐阅读

相关标签