Natural Language Processing with Python, Part 4: Text Representation

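This article is the fourth in my series and covers the session I presented for the WomenWhoCode Data Science track in March 2023. The earlier articles are here: Part 1 (introduction to NLP), Part 2 (the NLTK and spaCy libraries), and Part 3 (text preprocessing techniques).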

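2. Text Representation

- Text data comes in the form of letters, words, symbols, numbers, or any combination of these, for example "India", "Covid19", and so on.

- Before we can apply machine learning or deep learning algorithms to text data, we must represent the text numerically. Both individual words and whole text documents can be converted into vectors of floating-point numbers.

- The process of representing tokens or sentences as numeric vectors is called "embedding", and the multidimensional space in which these vectors live is called the embedding space.

- Deep neural network architectures such as recurrent neural networks (RNNs), long short-term memory networks (LSTMs), and transformers require text input in the form of fixed-dimension numeric vectors.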

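2.1 Some terminology:

- Document: a document is a collection of words.

- Vocabulary: the vocabulary is the set of unique words in a document or corpus.

- Token: a token is the basic unit of discrete data. It usually refers to a word or a punctuation mark.

- Corpus: a corpus is a collection of documents.

- Context: the context of a word/token is the set of words/tokens surrounding it on its left and right in a document.

- Vector embedding: a vector-based numeric representation of text is called an embedding. For example, word2vec and GloVe are unsupervised methods based on corpus statistics. Frameworks such as TensorFlow and Keras provide an "Embedding layer".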
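To make these terms concrete, here is a minimal plain-Python sketch on a made-up two-document corpus. The `tokenize` and `context` helpers are hypothetical names for illustration: tokenization is just a lowercase whitespace split with surrounding punctuation stripped (not a real tokenizer such as NLTK's), and the vocabulary is taken over the whole corpus.

```python
# A corpus is a collection of documents; each document is a string of words
corpus = ["The rose is red.", "The violet is blue."]

def tokenize(doc: str) -> list[str]:
    # Naive tokenizer: lowercase, split on whitespace, strip surrounding punctuation
    return [tok.strip(".,!?").lower() for tok in doc.split()]

tokenized = [tokenize(doc) for doc in corpus]

# Vocabulary: the set of unique tokens (sorted so the order is reproducible)
vocabulary = sorted({tok for doc in tokenized for tok in doc})

def context(tokens: list[str], i: int, window: int = 2) -> list[str]:
    # Context of tokens[i]: up to `window` tokens on its left and on its right
    return tokens[max(0, i - window):i] + tokens[i + 1:i + 1 + window]

print(tokenized[0])              # ['the', 'rose', 'is', 'red']
print(vocabulary)                # ['blue', 'is', 'red', 'rose', 'the', 'violet']
print(context(tokenized[0], 1))  # ['the', 'is', 'red']
```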

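2.2 A text representation should have the following properties:

- It should uniquely identify a word (the mapping must be a bijection).

- It should capture morphological, syntactic, and semantic similarity between words: in Euclidean space, related words should be closer to each other than unrelated words.

- It should be possible to perform arithmetic operations on these representations.

- Tasks such as computing word similarity and word relationships should be easy to carry out with the representation.

- It should be easy to map a word to its embedding and vice versa.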
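These properties can be checked numerically once words have vectors. The sketch below uses made-up 3-dimensional vectors (hypothetical values chosen by hand for illustration, not trained embeddings) and NumPy to show a bijective word/index mapping, Euclidean distance, cosine similarity, and element-wise vector arithmetic.

```python
import numpy as np

# Hypothetical toy embeddings: the two flower words are deliberately placed close together
embeddings = {
    "rose":   np.array([0.9, 0.8, 0.1]),
    "violet": np.array([0.8, 0.9, 0.2]),
    "car":    np.array([0.1, 0.2, 0.9]),
}

# Bijective mapping: word -> row index and row index -> word
word_to_idx = {w: i for i, w in enumerate(embeddings)}
idx_to_word = {i: w for w, i in word_to_idx.items()}
matrix = np.stack([embeddings[w] for w in word_to_idx])

def euclidean(a: np.ndarray, b: np.ndarray) -> float:
    return float(np.linalg.norm(a - b))

def cosine(a: np.ndarray, b: np.ndarray) -> float:
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

rose, violet, car = (matrix[word_to_idx[w]] for w in ("rose", "violet", "car"))
print(euclidean(rose, violet) < euclidean(rose, car))  # True: related words are closer
print(cosine(rose, violet) > cosine(rose, car))        # True
print(idx_to_word[word_to_idx["violet"]])              # violet
print(violet - rose)                                   # arithmetic is element-wise
```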

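2.3 Some prominent text representation techniques:

- One-Hot encoding

- Bag of Words model: CountVectorizer, and CountVectorizer with n-grams

- TF-IDF model

- Word2Vec embeddings

- GloVe embeddings

- FastText embeddings

- Transformer-based models such as ChatGPT and BERT, which use their own dynamic (contextual) embeddings.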

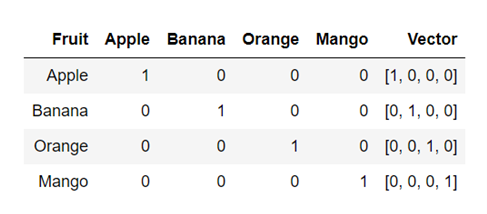

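One-Hot Encoding:

This is the simplest technique for representing text as numeric vectors. Each word is represented as a unique "one-hot" binary vector of 0s and 1s: for each unique word in the vocabulary, the vector contains a single 1 and all other values are 0, and the position of the 1 uniquely identifies the word. The length of each vector therefore equals the vocabulary size.

Example:

One-hot vectors for the words Apple, Banana, Orange, and Mango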

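```python
from sklearn.preprocessing import OneHotEncoder

document = "The rose is red. The violet is blue."
# Whitespace tokenization; punctuation stays attached to words (e.g. "red.")
words = document.split()
# One single-token row per word: OneHotEncoder expects 2D input of shape (n_samples, n_features)
tokens = [[word] for word in words]

# Map each unique word to an integer id (sorted so the ids are reproducible across runs)
wordids = {token: idx for idx, token in enumerate(sorted(set(words)))}
tokenids = [[wordids[token] for token in toke] for toke in tokens]

onehotmodel = OneHotEncoder()
vectors = onehotmodel.fit_transform(tokenids)  # sparse matrix: one row per token, one column per unique word
print(vectors.todense())
```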
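The same idea can be written from scratch, which makes the "single 1 at a unique position" rule explicit. This NumPy sketch builds one-hot vectors for the four fruit words from the example; the alphabetical column order and the helper name `one_hot` are arbitrary illustrative choices.

```python
import numpy as np

words = ["Apple", "Banana", "Orange", "Mango"]
vocab = sorted(set(words))  # ['Apple', 'Banana', 'Mango', 'Orange']
index = {word: i for i, word in enumerate(vocab)}

def one_hot(word: str) -> np.ndarray:
    vec = np.zeros(len(vocab), dtype=int)  # all zeros...
    vec[index[word]] = 1                   # ...except a single 1 at the word's position
    return vec

for word in words:
    print(word, one_hot(word))
# Apple [1 0 0 0]
# Banana [0 1 0 0]
# Orange [0 0 0 1]
# Mango [0 0 1 0]
```

Mapping back from a vector to its word is the reverse lookup `vocab[int(np.argmax(vec))]`, which is what makes the encoding a bijection.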

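2.4 Bag-of-Words Representation: CountVectorizer

For details, see: https://en.wikipedia.org/wiki/Bag-of-words_model

Bag of words (BoW) is an orderless representation of text that describes the occurrence of words within a document. It consists of a vocabulary of known words and a measure of how often each known word is present. The bag-of-words model contains no information about the order or structure of the words in the document.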

Example from Wikipedia:

Document 1: John likes to watch movies. Mary likes movies too.

Document 2: Mary also likes to watch football games.

Tokens 1: "John","likes","to","watch","movies","Mary","likes","movies","too"

Tokens 2: "Mary","also","likes","to","watch","football","games"

BoW1 = {"John":1,"likes":2,"to":1,"watch":1,"movies":2,"Mary":1,"too":1};

BoW2 = {"Mary":1,"also":1,"likes":1,"to":1,"watch":1,"football":1,"games":1};

Document 3 is the concatenation of document 1 and document 2 (it contains the words of both documents):

Document 3: John likes to watch movies. Mary likes movies too. Mary also likes to watch football games.

BoW3 = {"John":1,"likes":3,"to":2,"watch":2,"movies":2,"Mary":2,"too":1,"also":1,"football":1,"games":1};
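These counts can be reproduced with Python's `collections.Counter`. The sketch below tokenizes by removing periods and splitting on whitespace (a simplification that happens to work for these sentences; `bag_of_words` is a hypothetical helper name) and shows that, because BoW ignores order, the bag for document 3 is simply the sum of the bags for documents 1 and 2.

```python
from collections import Counter

doc1 = "John likes to watch movies. Mary likes movies too."
doc2 = "Mary also likes to watch football games."
doc3 = doc1 + " " + doc2

def bag_of_words(text: str) -> Counter:
    # Drop periods, split on whitespace, and count each token (case is kept)
    return Counter(text.replace(".", "").split())

bow1, bow2, bow3 = bag_of_words(doc1), bag_of_words(doc2), bag_of_words(doc3)
print(dict(bow1))           # {'John': 1, 'likes': 2, 'to': 1, 'watch': 1, 'movies': 2, 'Mary': 1, 'too': 1}
print(bow3 == bow1 + bow2)  # True
```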

Let's write a function to preprocess the text before representing it as vectors.
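A minimal sketch of such a function, assuming that preprocessing here means lowercasing, replacing punctuation with spaces, and collapsing whitespace. The name `preprocess` and these particular steps are illustrative choices; a fuller pipeline might also remove stop words or lemmatize, as covered in Part 3.

```python
import re

def preprocess(text: str) -> str:
    text = text.lower()                         # case-fold
    text = re.sub(r"[^a-z0-9\s]", " ", text)    # replace punctuation and symbols with spaces
    text = re.sub(r"\s+", " ", text).strip()    # collapse runs of whitespace
    return text

print(preprocess("John likes to watch movies. Mary likes movies too."))
# john likes to watch movies mary likes movies too
```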

Next, we use CountVectorizer from the scikit-learn library to convert the preprocessed text into a bag-of-words representation.

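2.5 Bag-of-Words Representation: n-grams

The simple bag-of-words model stores no information about word order. An n-gram model can store some of this local word-order information.

A word/token is called a "gram". An n-gram is a sequence of n consecutive tokens that occurs in a text document.

A unigram is a single word, a bigram is a sequence of two words, a trigram is a sequence of three words, and so on.

For example, for the text (from Wikipedia):

Document 1: John likes to watch movies. Mary likes movies too.

A bigram model parses the text into the following units and stores the term frequency of each unit, just as the simple BoW model does:

["John likes", "likes to", "to watch", "watch movies", "Mary likes", "likes movies", "movies too",]

The bag-of-words model can be viewed as a special case of the n-gram model with n = 1.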
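The bigram list can be generated with a sliding window. In this sketch (the helper name `ngrams` is hypothetical), the text is first split into sentences so that no n-gram crosses a sentence boundary, which matches the Wikipedia list; setting n = 1 gives back the plain bag of words.

```python
from collections import Counter

def ngrams(tokens: list[str], n: int) -> list[str]:
    # Slide a window of n consecutive tokens over the sequence
    return [" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]

text = "John likes to watch movies. Mary likes movies too."
# Split into sentences first so that no n-gram spans a sentence boundary
sentences = [s.split() for s in text.rstrip(".").split(". ")]

bigrams = [g for sent in sentences for g in ngrams(sent, 2)]
unigrams = [g for sent in sentences for g in ngrams(sent, 1)]

print(bigrams)
# ['John likes', 'likes to', 'to watch', 'watch movies', 'Mary likes', 'likes movies', 'movies too']
print(Counter(unigrams))  # n = 1: the same counts as the BoW1 bag
```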

```python
# https://scikit-learn.org/stable/modules/generated/sklearn.feature_extraction.text.CountVectorizer.html
from sklearn.feature_extraction.text import CountVectorizer

document = ["The rose is red.", "The violet is blue.", "This is some text, just for demonstration"]
ngram_countvect = CountVectorizer(ngram_range=(2, 2), stop_words='english')
# The ngram_range parameter gives the lower and upper bounds of the range of n-values
# for the word n-grams or char n-grams to be extracted. All values of n such that
# min_n <= n <= max_n are used. For example, an ngram_range of (1, 1) means only unigrams,
# (1, 2) means unigrams and bigrams, and (2, 2) means only bigrams.

matrix = ngram_countvect.fit_transform(document)
vocabulary = ngram_countvect.get_feature_names_out()  # the bigrams found after stop-word removal
print(vocabulary)
print(matrix.todense())
```

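3. TF-IDF Vectorizer: Term Frequency-Inverse Document Frequency

A very good explanation of the TF-IDF vectorizer can be found here.

- The TF-IDF score of a term/word $w$ in a document $d$ is the product of two metrics, term frequency (tf) and inverse document frequency (idf): $\mathrm{tfidf}(w, d, C) = \mathrm{tf}(w, d) \cdot \mathrm{idf}(w, C)$

- Here $w$ is the term or word, $d$ is the document, and $C$ is the corpus, which contains a total of $N$ documents including $d$.

- The term frequency $\mathrm{tf}(w, d)$ is the frequency of word $w$ in document $d$. It can be the raw count, the raw count adjusted for document length (the number of occurrences divided by the number of words in the document), a log-scaled frequency (e.g. $\log(1 + \text{raw count})$), or a Boolean frequency (1 if the term occurs in the document and 0 if it does not).

- Document frequency is the number of documents in the corpus of $N$ documents that contain the term/word $w$. Inverse document frequency measures how common or rare a word is across the corpus: the lower the IDF, the more common the word, and vice versa. A word's IDF is the logarithm of the total number of documents in the corpus divided by the number of documents that contain the word, $\mathrm{idf}(w, C) = \log \frac{N}{|\{d' \in C : w \in d'\}|}$. IDF therefore measures how much information a term/word carries: words that appear in many documents are less informative.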
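The definitions above can be sketched directly in plain Python. This version uses the raw count for tf and the unsmoothed $\log(N / \mathrm{df})$ with the natural logarithm; it is a textbook variant, not the exact formula scikit-learn uses, so its numbers will differ from TfidfVectorizer's. The helper names are hypothetical, and the corpus is assumed to be already tokenized and lowercased.

```python
import math
from collections import Counter

# Toy corpus, already tokenized (lowercased, punctuation removed)
corpus = [
    ["the", "rose", "is", "red"],
    ["the", "violet", "is", "blue"],
    ["this", "is", "some", "text", "just", "for", "demonstration"],
]

def tf_variants(word: str, doc: list[str]) -> dict:
    # The term-frequency variants listed above, for one word in one document
    raw = Counter(doc)[word]
    return {
        "raw": raw,
        "length_normalized": raw / len(doc),
        "log_scaled": math.log(1 + raw),
        "boolean": int(raw > 0),
    }

def idf(word: str, corpus: list[list[str]]) -> float:
    # log(N / number of documents containing the word); assumes the word occurs somewhere
    df = sum(1 for doc in corpus if word in doc)
    return math.log(len(corpus) / df)

def tfidf(word: str, doc: list[str], corpus: list[list[str]]) -> float:
    # tf is the raw count here; any of the other variants could be substituted
    return tf_variants(word, doc)["raw"] * idf(word, corpus)

print(tfidf("is", corpus[0], corpus))    # 0.0, because "is" appears in every document
print(tfidf("rose", corpus[0], corpus))  # log(3), about 1.0986
```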

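```python
from sklearn.feature_extraction.text import TfidfVectorizer

document = ["The rose is red.", "The violet is blue.", "This is some text, just for demonstration"]

# min_df=0. and max_df=1. keep every term, however few or many documents contain it.
# By default TfidfVectorizer uses a smoothed idf, ln((1 + N) / (1 + df)) + 1,
# and L2-normalizes each document row.
tf_idf = TfidfVectorizer(min_df=0., max_df=1., use_idf=True)
tf_idf_matrix = tf_idf.fit_transform(document)
tf_idf_matrix = tf_idf_matrix.toarray()  # dense array: one row per document, one column per term
print(tf_idf.get_feature_names_out())
print(tf_idf_matrix)
```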

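3.1 Word Embeddings

The text representation methods above generally do not capture the meaning or context of words. To overcome these limitations, we use embeddings. Embeddings are learned by training models on large datasets, and they capture a word's context by taking into account its neighboring words and the order of words in a sentence. Three prominent word embeddings are Word2Vec, GloVe, and FastText.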

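Word2Vec

- Word2Vec is an unsupervised model trained on a large text corpus. It builds a vocabulary of words and learns a distributed, continuous, dense vector representation for each word in a vector space that represents the vocabulary. It captures contextual and semantic similarity.

- We can specify the size (dimension) of the word embedding vectors. The total number of vectors is essentially the size of the vocabulary: one vector per word.

- Word2Vec has two different model architectures: the CBOW (Continuous Bag of Words) model and the Skip-gram model.

The CBOW model tries to predict the current target word from the surrounding source context words. The Skip-gram model tries to predict the source context words given a target word.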

To see all the vectors in the vocabulary, use the following code:

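```python
# Assumes `wordtovector` is a trained gensim 4.x Word2Vec model
# All the vectors for all the words in our input text
words = wordtovector.wv.index_to_key
wvs = wordtovector.wv[words]  # array of shape (vocabulary size, vector size)
print(wvs)
```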

Or convert them into a pandas DataFrame:

```python
import pandas as pd

df = pd.DataFrame(wvs, index=words)  # one row per word, one column per vector dimension
print(df)
```

3.2 GloVe

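- Global Vectors (GloVe) is a technique for generating dense vector representations of words, similar to Word2Vec. It first builds a large word co-occurrence matrix of (word, context) pairs, in which each element records how often a word appears in a given context. Matrix factorization techniques can then be used to approximate this matrix. Because GloVe is trained on a global word-word co-occurrence matrix, it produces a vector space with meaningful substructure.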

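- The spaCy library supports GloVe embeddings. To use English embeddings, we need to download the pipeline "en_core_web_lg", the large English pipeline. With spaCy we get standard 300-dimensional GloVe word vectors.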
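The co-occurrence-matrix idea in the first point above can be illustrated with NumPy: count (word, context) pairs within a symmetric window, then approximate the matrix with a truncated SVD to get dense word vectors. This is only an illustration of "co-occurrence matrix plus matrix factorization"; it is not the GloVe training procedure, which instead fits word and context vectors to the logarithm of the co-occurrence counts with a weighted least-squares objective. The window size of 1 and the 2-dimensional output are arbitrary choices.

```python
import numpy as np

corpus = [
    ["the", "rose", "is", "red"],
    ["the", "violet", "is", "blue"],
]
vocab = sorted({w for sent in corpus for w in sent})
index = {w: i for i, w in enumerate(vocab)}

# Word-word co-occurrence counts within a symmetric window of 1 token on each side
window = 1
X = np.zeros((len(vocab), len(vocab)))
for sent in corpus:
    for i, word in enumerate(sent):
        for j in range(max(0, i - window), min(len(sent), i + window + 1)):
            if j != i:
                X[index[word], index[sent[j]]] += 1

# Low-rank approximation via truncated SVD: keep the top k singular directions
k = 2
U, S, Vt = np.linalg.svd(X)
word_vectors = U[:, :k] * S[:k]  # one dense k-dimensional vector per word

print(X[index["rose"], index["is"]])  # 1.0
print(word_vectors.shape)             # (6, 2)
```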

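To see the GloVe vector for the word "violet", use `glove_vec_df.loc['violet']` (here `glove_vec_df` is a pandas DataFrame of the vocabulary's GloVe vectors, indexed by word).

Want to see all the vectors in the vocabulary? Inspect `glovevectors`, the array holding the vector of every word in `vocab`.

Visualize the data points with t-SNE: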

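```python
from sklearn.manifold import TSNE
import matplotlib.pyplot as plt

# Assumes `glovevectors` (a NumPy array with one GloVe vector per row) and
# `vocab` (the matching list of words) are already defined.
# perplexity must be smaller than the number of words being plotted.
# max_iter was named n_iter in scikit-learn versions before 1.5.
tsne = TSNE(n_components=2, random_state=42, max_iter=250, perplexity=3)
tsneglovemodel = tsne.fit_transform(glovevectors)  # shape: (number of words, 2)
labels = vocab

plt.figure(figsize=(12, 6))
plt.scatter(tsneglovemodel[:, 0], tsneglovemodel[:, 1], c='red', edgecolors='r')
for label, x, y in zip(labels, tsneglovemodel[:, 0], tsneglovemodel[:, 1]):
    plt.annotate(label, xy=(x + 1, y + 1), xytext=(0, 0), textcoords='offset points')
plt.show()
```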

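3.3 FastText

FastText word vectors pre-trained on Wikipedia and Common Crawl are available for 157 languages. The FastText project also provides models for language identification and various supervised tasks. You can experiment with FastText vectors in the gensim library.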

```python
import warnings
warnings.filterwarnings("ignore")

import nltk
from gensim.models.fasttext import FastText

# nltk.word_tokenize needs NLTK's Punkt tokenizer data; download it once with
# nltk.download("punkt") (recent NLTK releases use "punkt_tab" instead)
document = ["The rose is red.", "The violet is blue.", "This is some text, just for demonstration"]
tokenized_corpus = [nltk.word_tokenize(doc) for doc in document]

# window=5: context size; min_count=1: keep every word; sg=1: train with Skip-gram
fasttext_model = FastText(tokenized_corpus, window=5, min_count=1, sg=1)

print('Embedding')
print(fasttext_model.wv['blue'])

print('Embedding Shape')
print(fasttext_model.wv['blue'].shape)
```

To view the vectors for all words in the vocabulary, you can use the following code:

```python
# Vectors for every word in the FastText model's vocabulary
words_fasttext = fasttext_model.wv.index_to_key
wordvectors_fasttext = fasttext_model.wv[words_fasttext]
print(wordvectors_fasttext)
```

In the next article in this series, we will cover text classification.