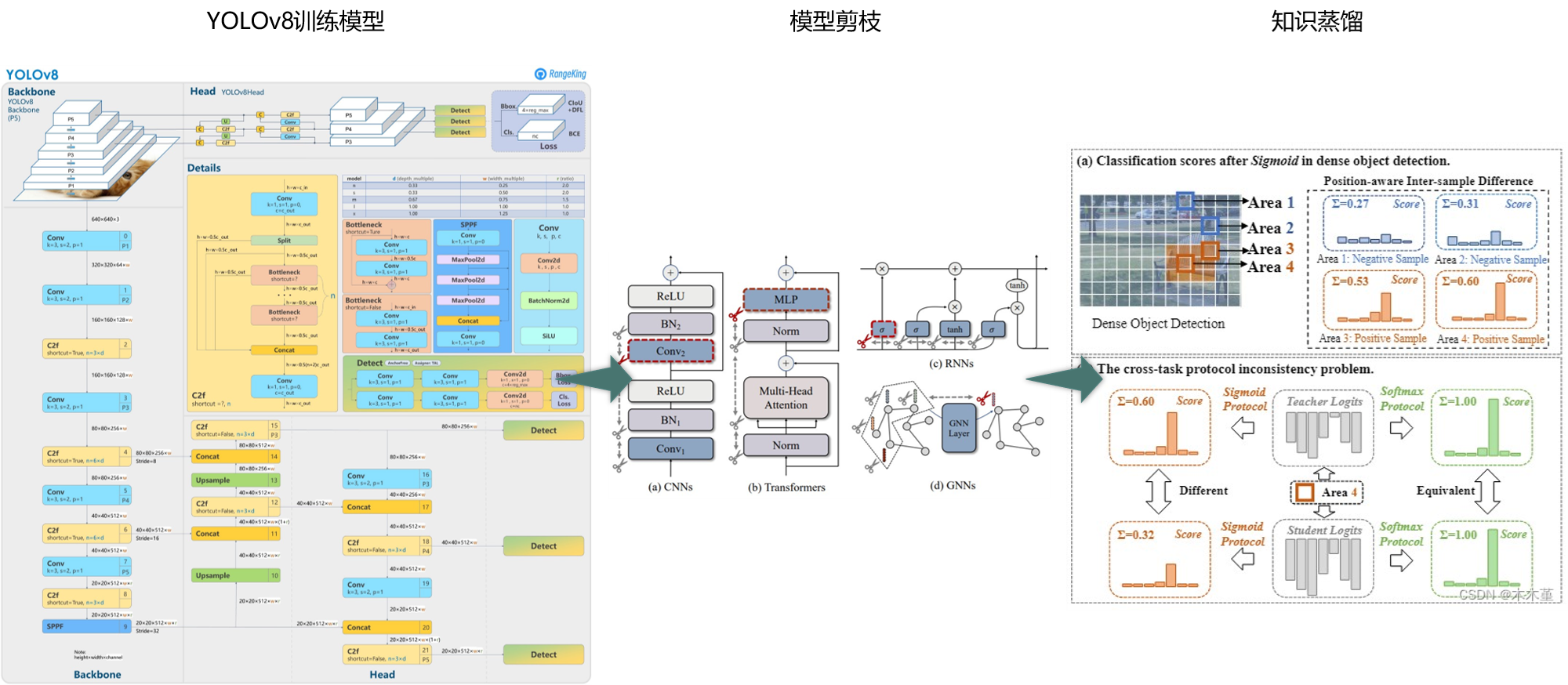

YOLOv8-Based Pruning + Knowledge Distillation = Lossless Lightweighting

1. Experimental results (custom dataset)

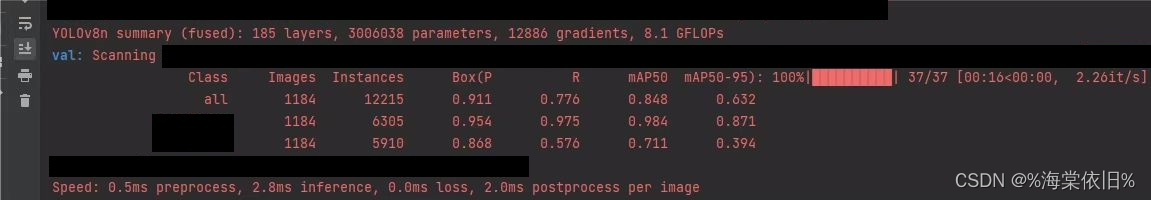

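(1) YOLOv8n pruning + distillation (YOLOv8s as the teacher model):

| Model | mAP | Parameters | GFLOPs | FPS |
| --- | --- | --- | --- | --- |
| Base | 84.8% | 3.01M | 8.1 | 188.7 |
| Pruned (fine-tuned with distillation) | 84.9% | 1.67M | 5.0 | 217.4 |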

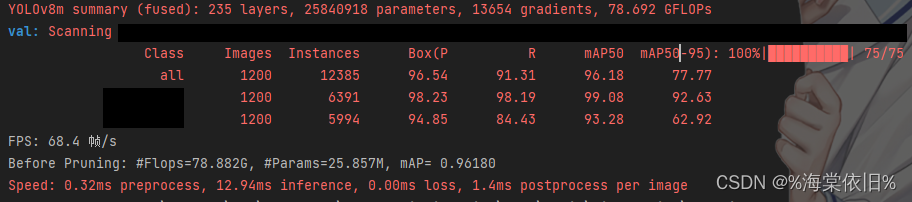

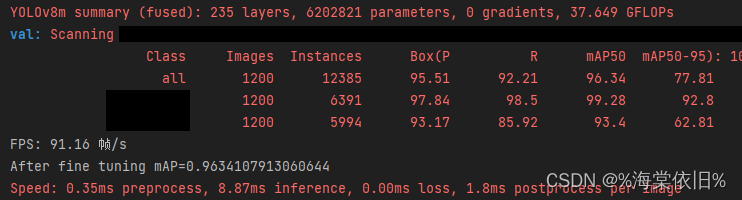

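(2) YOLOv8m pruning + distillation (YOLOv8m self-distillation):

| Model | mAP | Parameters | GFLOPs | FPS |
| --- | --- | --- | --- | --- |
| Base | 96.18% | 25.84M | 78.692 | 68.4 |
| Pruned (fine-tuned with distillation) | 96.34% | 6.20M | 37.649 | 91.2 |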
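
The headline numbers can also be stated as relative savings. The short sketch below only re-derives ratios from the figures reported above and is not part of the author's code. It assumes the two mAP values in each experiment use the same (unstated) metric definition. It also assumes both FPS values were measured on the same, unspecified, hardware and inference settings.

```python
# Metrics as reported in the two experiments above (custom dataset).
# Only ratios are derived here; no new measurements are introduced.
RESULTS = {
    "YOLOv8n": {
        "base":   {"map": 84.8,  "params_m": 3.01,  "gflops": 8.1,    "fps": 188.7},
        "pruned": {"map": 84.9,  "params_m": 1.67,  "gflops": 5.0,    "fps": 217.4},
    },
    "YOLOv8m": {
        "base":   {"map": 96.18, "params_m": 25.84, "gflops": 78.692, "fps": 68.4},
        "pruned": {"map": 96.34, "params_m": 6.20,  "gflops": 37.649, "fps": 91.2},
    },
}


def summarize(base: dict, pruned: dict) -> dict:
    """Relative change of the pruned model with respect to the base model."""
    return {
        "map_delta_pts": pruned["map"] - base["map"],
        "params_reduction_pct": 100.0 * (1.0 - pruned["params_m"] / base["params_m"]),
        "gflops_reduction_pct": 100.0 * (1.0 - pruned["gflops"] / base["gflops"]),
        "fps_speedup_x": pruned["fps"] / base["fps"],
    }


for name, runs in RESULTS.items():
    s = summarize(runs["base"], runs["pruned"])
    print(
        f"{name}: mAP {s['map_delta_pts']:+.2f} pts, "
        f"params -{s['params_reduction_pct']:.1f}%, "
        f"GFLOPs -{s['gflops_reduction_pct']:.1f}%, "
        f"FPS x{s['fps_speedup_x']:.2f}"
    )
```

The results per model are:

- **YOLOv8n:** about 44.5% fewer parameters, 38.3% fewer GFLOPs and roughly 1.15x the FPS.
- **YOLOv8m:** about 76.0% fewer parameters, 52.2% fewer GFLOPs and roughly 1.33x the FPS.

In both cases mAP rises slightly.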

2. Supported methods

(1) Pruning methods:

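l1 (Pruning Filters for Efficient ConvNets): https://arxiv.org/abs/1608.08710

lamp (Layer-adaptive Sparsity for the Magnitude-based Pruning): https://arxiv.org/abs/2010.07611

slim (Learning Efficient Convolutional Networks through Network Slimming): https://arxiv.org/abs/1708.06519

group_norm (DepGraph: Towards Any Structural Pruning): https://openaccess.thecvf.com/content/CVPR2023/html/Fang_DepGraph_Towards_Any_Structural_Pruning_CVPR_2023_paper.html

group_taylor (Importance Estimation for Neural Network Pruning): https://openaccess.thecvf.com/content_CVPR_2019/papers/Molchanov_Importance_Estimation_for_Neural_Network_Pruning_CVPR_2019_paper.pdf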
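
To make the first two criteria concrete, here is a minimal pure-Python sketch of the l1 and lamp importance scores on plain nested lists. It is an illustration under stated assumptions, not the project's (paid) implementation. Real code would operate on PyTorch convolution tensors. The helper names `l1_filter_scores`, `filters_to_prune` and `lamp_scores` are hypothetical.

```python
from typing import List


def l1_filter_scores(conv_weight: List[List[float]]) -> List[float]:
    """l1 criterion (arXiv:1608.08710): the importance of each output filter is
    the sum of absolute values of its weights. `conv_weight` is assumed to be
    flattened to [out_channels][in_channels * kh * kw]."""
    return [sum(abs(w) for w in filt) for filt in conv_weight]


def filters_to_prune(conv_weight: List[List[float]], ratio: float) -> List[int]:
    """Indices of the `ratio` fraction of filters with the smallest L1 norm."""
    scores = l1_filter_scores(conv_weight)
    n_prune = int(len(scores) * ratio)
    ascending = sorted(range(len(scores)), key=lambda i: scores[i])
    return sorted(ascending[:n_prune])


def lamp_scores(weights: List[float]) -> List[float]:
    """lamp criterion (arXiv:2010.07611) for one layer: with weights sorted by
    ascending magnitude, score(u) = w_u^2 / sum_{v >= u} w_v^2."""
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    sq = [weights[i] ** 2 for i in order]
    # tail[k] holds sum(sq[k:]), the squared mass of weights at least as large.
    tail = [0.0] * (len(sq) + 1)
    for k in range(len(sq) - 1, -1, -1):
        tail[k] = tail[k + 1] + sq[k]
    scores = [0.0] * len(weights)
    for k, i in enumerate(order):
        scores[i] = sq[k] / tail[k] if tail[k] > 0 else 0.0
    return scores


# Hypothetical 4-filter layer (each filter flattened to 3 weights).
conv = [[0.1, -0.2, 0.05], [1.0, -0.5, 0.3], [0.02, 0.01, -0.03], [0.4, 0.4, -0.4]]
print(filters_to_prune(conv, ratio=0.5))  # -> [0, 2]
print([round(s, 3) for s in lamp_scores([0.5, -1.0, 0.1, 2.0])])  # -> [0.048, 0.2, 0.002, 1.0]
```

The largest weight in every layer receives a LAMP score of exactly 1. Scores from different layers can therefore be compared against one global threshold without ever emptying a layer.

Physically removing channels from YOLOv8 also requires keeping coupled layers consistent: residual adds, concatenations, and the following BatchNorm and convolution. DepGraph, the basis of group_norm and group_taylor, addresses this dependency problem. This sketch ignores it.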

(2) Knowledge distillation:

Logits distillation:

Bridging Cross-task Protocol Inconsistency for Distillation in Dense Object Detection: https://arxiv.org/pdf/2308.14286.pdf

CrossKD (Cross-Head Knowledge Distillation for Dense Object Detection): https://arxiv.org/abs/2306.11369

NKD (From Knowledge Distillation to Self-Knowledge Distillation: A Unified Approach with Normalized Loss and Customized Soft Labels): https://arxiv.org/abs/2303.13005

DKD (Decoupled Knowledge Distillation): https://arxiv.org/pdf/2203.08679.pdf

LD (Localization Distillation for Dense Object Detection): https://arxiv.org/abs/2102.12252

WSLD (Rethinking Soft Labels for Knowledge Distillation: A Bias-Variance Tradeoff Perspective): https://arxiv.org/pdf/2102.00650.pdf

Distilling the Knowledge in a Neural Network: https://arxiv.org/pdf/1503.02531.pdf

RKD (Relational Knowledge Distillation): http://arxiv.org/pdf/1904.05068
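
The classic logits-distillation objective from "Distilling the Knowledge in a Neural Network" works as follows:

- It softens both teacher and student logits with a temperature T.
- It penalizes the KL divergence between the two softened distributions.
- The result is scaled by T^2 so its gradients stay comparable to the hard-label loss.

A minimal pure-Python sketch follows. The temperature 4.0 and the weight alpha=0.5 are hypothetical defaults, and the function names are illustrative. The detection-oriented methods listed above (LD, CrossKD, etc.) adapt this basic idea to dense detector outputs.

```python
import math
from typing import List


def softmax_t(logits: List[float], T: float) -> List[float]:
    """Softmax at temperature T, shifted by the max logit for numerical stability."""
    m = max(logits)
    exps = [math.exp((z - m) / T) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def kd_loss(student_logits: List[float], teacher_logits: List[float],
            T: float = 4.0, eps: float = 1e-12) -> float:
    """Soft-target term: T^2 * KL(p_teacher || p_student), both softened with T.
    eps guards log(0) if a probability underflows."""
    p_t = softmax_t(teacher_logits, T)
    p_s = softmax_t(student_logits, T)
    kl = sum(pt * (math.log(pt + eps) - math.log(ps + eps)) for pt, ps in zip(p_t, p_s))
    return T * T * kl


def total_loss(hard_loss: float, soft_loss: float, alpha: float = 0.5) -> float:
    """Weighted sum of the ground-truth loss and the distillation term
    (alpha=0.5 is a hypothetical default, not a value from this post)."""
    return (1.0 - alpha) * hard_loss + alpha * soft_loss


# Hypothetical 3-class logits for one sample.
teacher = [4.0, 1.0, -2.0]
student = [2.5, 1.5, -1.0]
print(kd_loss(student, teacher, T=4.0))
```

A higher T spreads the teacher's probability mass over non-target classes. That spread is the "dark knowledge" the student learns from. The T^2 factor keeps the soft-target term on a similar scale as T changes.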

Feature distillation:

CWD (Channel-wise Knowledge Distillation for Dense Prediction): https://arxiv.org/pdf/2011.13256.pdf

MGD (Masked Generative Distillation): https://arxiv.org/abs/2205.01529

FGD (Focal and Global Knowledge Distillation for Detectors): https://arxiv.org/abs/2111.11837

FSP (A Gift from Knowledge Distillation: Fast Optimization, Network Minimization and Transfer Learning): https://openaccess.thecvf.com/content_cvpr_2017/papers/Yim_A_Gift_From_CVPR_2017_paper.pdf

PKD (General Distillation Framework for Object Detectors via Pearson Correlation Coefficient): https://arxiv.org/abs/2207.02039

VID (Variational Information Distillation for Knowledge Transfer): https://arxiv.org/pdf/1904.05835.pdf

Mimic (Quantization Mimic: Towards Very Tiny CNN for Object Detection): https://arxiv.org/abs/1805.02152
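
Among the feature-distillation methods, CWD is compact enough to sketch:

1. Each channel of a feature map becomes a distribution over spatial positions, via a softmax at temperature T.
2. The student matches the teacher's distribution channel by channel, using KL divergence.
3. The summed divergence is scaled by T^2 / C.

The pure-Python version below assumes both feature maps are already flattened to [C][H*W] with equal channel counts. In practice a small adapter, such as a 1x1 convolution, is commonly used to align student channels. The function names and example values are hypothetical.

```python
import math
from typing import List


def spatial_softmax(channel: List[float], T: float) -> List[float]:
    """Turn one channel's flattened H*W activation map into a distribution
    over spatial locations at temperature T."""
    m = max(channel)
    exps = [math.exp((v - m) / T) for v in channel]
    total = sum(exps)
    return [e / total for e in exps]


def cwd_loss(student_feat: List[List[float]], teacher_feat: List[List[float]],
             T: float = 1.0, eps: float = 1e-12) -> float:
    """Channel-wise distillation (arXiv:2011.13256): per-channel
    KL(teacher || student) over spatial positions, scaled by T^2 / C.
    Assumes both maps are [C][H*W] with the same C (channels already aligned)."""
    if len(student_feat) != len(teacher_feat):
        raise ValueError("student and teacher must have the same channel count")
    C = len(teacher_feat)
    total = 0.0
    for s_c, t_c in zip(student_feat, teacher_feat):
        p_t = spatial_softmax(t_c, T)
        p_s = spatial_softmax(s_c, T)
        total += sum(pt * (math.log(pt + eps) - math.log(ps + eps))
                     for pt, ps in zip(p_t, p_s))
    return (T * T) / C * total


# Hypothetical feature maps with C=2 channels and H*W=4 positions.
teacher = [[0.1, 2.0, 0.3, 0.0], [1.5, 0.2, 0.2, 0.1]]
student = [[0.5, 1.0, 0.4, 0.2], [0.3, 0.9, 0.1, 0.0]]
print(cwd_loss(student, teacher, T=1.0))
```

Normalizing each channel over spatial positions makes the loss emphasize where that channel's activation is concentrated. This is CWD's motivation for dense-prediction tasks such as detection.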

Reproducing this code took considerable effort, so it is available for a fee.