Deep Learning Notes (14. LSTM Text Generation with Keras)

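Abstract

These notes cover Part 5 (Sequence Models) of Andrew Ng's deep learning course: LSTM text generation implemented with Keras. The corpus is a Shakespeare text of about 90 KB.

Code: https://github.com/ConstellationBJUT/Coursera-DL-Study-Notes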

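Network structure

Tx: the sequence length. Here, each sentence contains Tx characters.

xt: t = 1…Tx, the input sequence. The full input has shape (m, Tx, char_num), where m is the number of training samples (that is, m sentences), Tx is the number of characters per sentence (every sentence has the same length here), and char_num is the number of distinct characters in the whole text. Each xt is a one-hot vector of length char_num in which exactly one position is 1, identifying a single character.

y_hat: the output of the last cell, a vector of dimension (char_num, 1). Each position holds the predicted probability that the corresponding character comes next.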
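To make the shapes above concrete, here is a minimal sketch in plain Python that one-hot encodes two toy sentences. It uses nested lists instead of NumPy arrays purely for illustration; the sample strings and the helper name `one_hot_sentences` are assumptions for this example and are not part of the original program.

```python
def one_hot_sentences(sentences, chars):
    """Encode equal-length sentences as a nested list of shape (m, Tx, char_num)."""
    char_indices = {c: i for i, c in enumerate(chars)}
    encoded = []
    for sentence in sentences:
        rows = []
        for ch in sentence:
            row = [0] * len(chars)       # one vector of length char_num
            row[char_indices[ch]] = 1    # exactly one position is set to 1
            rows.append(row)
        encoded.append(rows)
    return encoded


# Toy data (hypothetical): m = 2 sentences, Tx = 3 characters each
sentences = ["abc", "cab"]
chars = sorted(set("".join(sentences)))  # ['a', 'b', 'c'], so char_num = 3
x = one_hot_sentences(sentences, chars)
shape = (len(x), len(x[0]), len(x[0][0]))  # (m, Tx, char_num)
print(shape)
print(x[0])
```

The training program builds the same structure with `np.zeros` and index assignment, which is far more memory-efficient for a real corpus.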

Program notes

Reference code: https://github.com/keras-team/keras/blob/master/examples/lstm_text_generation.py

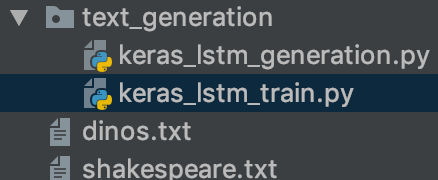

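1. File structure

TensorFlow and Keras are required; both can be installed directly with pip install.

The Python files listed below are commented in detail. There is very little code, because Keras wraps most of the work.

(1) keras_lstm_train.py: trains and saves the model

(2) keras_lstm_generation.py: loads the model and generates a sentence of a given character length

(3) shakespeare.txt: the training corpus

2. Model training program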

```python
from keras.callbacks import LambdaCallback
from keras.models import Sequential
from keras.layers import Dense
from keras.layers import LSTM
from keras.optimizers import RMSprop
import numpy as np

# Load the data file
with open('../shakespeare.txt', 'r') as f:
    text = f.read()
print('corpus length:', len(text))

# Build the character <-> id dictionaries from the letters and punctuation in the text
chars = sorted(list(set(text)))
char_num = len(chars)
print('total chars:', char_num)
char_indices = dict((c, i) for i, c in enumerate(chars))
indices_char = dict((i, c) for i, c in enumerate(chars))

# cut the text in semi-redundant sequences of maxlen characters
maxlen = 40  # each sentence has 40 characters, i.e. 40 cells
step = 3  # every 3 characters, take maxlen characters as one sentence
sentences = []  # list of sentences; each sentence is one input x
next_chars = []  # labels: the character right after each sentence is the output y
for i in range(0, len(text) - maxlen, step):
    sentences.append(text[i: i + maxlen])
    next_chars.append(text[i + maxlen])
print('nb sequences:', len(sentences))

print('Vectorization...')
# Convert the training data into the format the model expects:
# each sentence maps to one output character
# Training data x, shape (number of sentences, number of cells, size of each x_i)
x = np.zeros((len(sentences), maxlen, char_num), dtype=bool)
# Training labels y, shape (number of sentences, size of each y_i)
y = np.zeros((len(sentences), char_num), dtype=bool)
# One-hot encode x and y; each input sentence consists of maxlen one-hot vectors
for i, sentence in enumerate(sentences):
    for t, char in enumerate(sentence):
        x[i, t, char_indices[char]] = 1
    y[i, char_indices[next_chars[i]]] = 1

# build the model: a single LSTM
print('Build model...')
model = Sequential()
model.add(LSTM(128, input_shape=(maxlen, char_num)))
model.add(Dense(char_num, activation='softmax'))

optimizer = RMSprop(learning_rate=0.01)
model.compile(loss='categorical_crossentropy', optimizer=optimizer)


def on_epoch_end(epoch, _):
    # Placeholder callback: nothing is done at the end of each epoch
    pass


print_callback = LambdaCallback(on_epoch_end=on_epoch_end)

model.fit(x, y,
          batch_size=128,
          epochs=10,
          callbacks=[print_callback])

# Save the model
model.save('shakes_model.h5')
```
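The sliding-window step in the training program (`maxlen` and `step`) is easier to follow on a tiny input. The sketch below reuses the same loop on a short string with smaller values; the helper name `make_windows` and the sample string are assumptions made for this illustration.

```python
def make_windows(text, maxlen, step):
    """Cut text into overlapping windows and record the character after each one."""
    sentences, next_chars = [], []
    for i in range(0, len(text) - maxlen, step):
        sentences.append(text[i:i + maxlen])   # input x: maxlen characters
        next_chars.append(text[i + maxlen])    # label y: the character that follows
    return sentences, next_chars


# Toy values (hypothetical); the training program uses maxlen=40, step=3
sentences, next_chars = make_windows("to be or not", maxlen=5, step=3)
for s, c in zip(sentences, next_chars):
    print(repr(s), '->', repr(c))
# 'to be' -> ' '
# 'be or' -> ' '
# 'or no' -> 't'
```

Because `step` is smaller than `maxlen`, consecutive windows overlap, which is why the reference code calls the sequences "semi-redundant".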

3. Text generation program

```python
from keras.models import load_model
import numpy as np
import random

with open('../shakespeare.txt', 'r') as f:
    text = f.read()

print('corpus length:', len(text))

chars = sorted(list(set(text)))
char_num = len(chars)
print('total chars:', char_num)
char_indices = dict((c, i) for i, c in enumerate(chars))
indices_char = dict((i, c) for i, c in enumerate(chars))

# cut the text in semi-redundant sequences of maxlen characters
maxlen = 40  # must match the value in keras_lstm_train.py

# build the model: a single LSTM
print('load model...')
model = load_model('shakes_model.h5')


def sample(preds, temperature=1.0):
    """
    helper function to sample an index from a probability array
    :param preds: probability vector from the model's forward pass, shape (char_num,)
    :param temperature: diversity control; the larger the value, the more random the output
    :return: index of the sampled character
    """
    preds = np.asarray(preds).astype('float64')
    preds = np.log(preds) / temperature
    # The next two lines apply a softmax
    exp_preds = np.exp(preds)
    preds = exp_preds / np.sum(exp_preds)
    # Draw one sample according to the probabilities in preds; the result is a 0/1
    # array of the same length as preds, with a 1 at the selected position
    probas = np.random.multinomial(1, preds, 1)
    # Return the position of the 1, which maps back to a character
    return np.argmax(probas)


def generate_output(text_len=10):
    """
    Generate text
    :param text_len: length of the text to generate
    :return: the generated text
    """
    diversity = 1.0
    generated = ''  # the final generated text
    # Pick a random position in the corpus and take maxlen characters as the seed sentence
    start_index = random.randint(0, len(text) - maxlen - 1)
    sentence = text[start_index: start_index + maxlen]
    for i in range(text_len):
        # Input sentence x_pred
        x_pred = np.zeros((1, maxlen, len(chars)))
        # Build the one-hot vectors
        for t, char in enumerate(sentence):
            x_pred[0, t, char_indices[char]] = 1.
        # Predict the next character
        preds = model.predict(x_pred, verbose=0)[0]
        next_index = sample(preds, diversity)
        next_char = indices_char[next_index]
        # Shift the sentence left by one character and append the new
        # character at the end to form the next input
        sentence = sentence[1:] + next_char
        generated += next_char
    return generated


text_len = random.randint(4, 200)
print('text len:', text_len)
new_text = generate_output(text_len)
print(new_text)
```
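The effect of `temperature` in `sample` can be seen without a trained model. The following sketch applies the same log, divide, softmax rescaling to a made-up distribution using only the standard library; the function name `apply_temperature` and the probabilities are assumptions for illustration.

```python
import math


def apply_temperature(probs, temperature=1.0):
    """Rescale a distribution: equivalent to p_i ** (1 / T), then renormalize."""
    logits = [math.log(p) / temperature for p in probs]
    exps = [math.exp(v) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical next-character probabilities
probs = [0.7, 0.2, 0.1]
for t in (0.5, 1.0, 2.0):
    print(t, [round(p, 3) for p in apply_temperature(probs, t)])
```

A temperature below 1 sharpens the distribution toward the most likely character, 1.0 leaves it unchanged, and a value above 1 flattens it, so sampling becomes more random. Note that a probability of exactly 0 would make `math.log` fail here; this sketch assumes strictly positive inputs.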

Experimental results

doth that less thea part, and I in fils.

They like

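The model was trained for 10 epochs over the whole text. Many of the generated words are not real English words, although punctuation does appear. With a larger corpus and more epochs, the results should improve.

Summary

(1) The Keras LSTM API is concise.

(2) It is much more flexible than the LSTM I implemented myself earlier: the optimizer and the loss function can be chosen freely.

(3) A word2vec embedding with proper training would be worth trying next, although my laptop might not survive it.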