
[Python+OpenCV] Difference Detection in Similar Images

Author: 知新_RL | 2024-02-18 02:33:43


The latest source code (including test images) is available at:

https://github.com/XuPeng23/CV/tree/main/Difference%20Detection%20In%20Similar%20Images

Unzip the image archive into the same directory as the Python file and the script should run as-is.

1. Abstract

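Given two similar images whose overall position is identical and which differ only in a few places (Figure 1-1), subtracting one from the other is enough to reveal the differences. Real cases are usually far more complex: because the shots are taken at different times and from different angles, the two images may differ in viewpoint, gray level, rotation, scale, and so on (Figure 1-2). Finding the transformation between the two images is therefore a prerequisite for difference detection.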

Figure 1-1: Two images with the same overall position

Figure 1-2: Two similar images with various differences
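For the simple case of Figure 1-1, where the two images are already aligned, the subtraction can be sketched as follows. This is a minimal illustration in NumPy, not code from the original project: the helper name `diff_mask` and the `threshold=30` default are assumptions, and the two small arrays stand in for real grayscale images. With OpenCV, `cv2.absdiff` performs the same per-pixel absolute difference.

```python
import numpy as np

def diff_mask(img_a, img_b, threshold=30):
    """Boolean mask of pixels whose absolute difference exceeds threshold.

    Hypothetical helper; the threshold value is an illustrative assumption.
    """
    if img_a.shape != img_b.shape:
        raise ValueError("images must have the same shape")
    # Widen to a signed type so the uint8 subtraction cannot wrap around.
    diff = np.abs(img_a.astype(np.int16) - img_b.astype(np.int16))
    if diff.ndim == 3:
        # For color images, take the largest difference over the channels.
        diff = diff.max(axis=2)
    return diff > threshold

# Two synthetic 8x8 grayscale "images" that differ only in a 2x2 patch.
a = np.full((8, 8), 100, dtype=np.uint8)
b = a.copy()
b[2:4, 5:7] = 200

mask = diff_mask(a, b)
changed = np.argwhere(mask)  # (row, col) coordinates of differing pixels
print(changed)
```

This only works when the images are pixel-aligned, which is why the rest of the article first estimates the transformation between them.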

2. Algorithm Implementation

2.1 Feature Matching

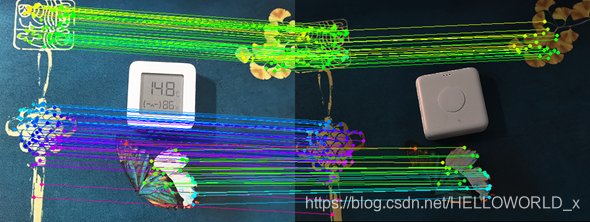

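This article uses a homography to represent the transformation between the two images. Estimating a homography requires at least 4 matched point pairs. SIFT descriptors are used for feature matching because SIFT is largely invariant to gray-level changes, rotation, and scale. SIFT keypoints are detected in both images and matched to obtain a preliminary match set. Some of these matches are then discarded with a distance ratio test, which compares the distance of each best match with that of the second-best candidate. The remaining matches are considered reliable and are used to estimate the homography.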

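```python
import cv2

# Load the images
img1 = cv2.imread('./datahomo6/img1.png')
img2 = cv2.imread('./datahomo6/img2.png')

# SIFT is in the main module since OpenCV 4.4;
# older opencv-contrib builds use cv2.xfeatures2d.SIFT_create()
sift = cv2.SIFT_create()

# Detect keypoints and compute descriptors
kp1, des1 = sift.detectAndCompute(img1, None)
kp2, des2 = sift.detectAndCompute(img2, None)

# Match keypoints (in FLANN's enum the KD-tree index is 1; 0 selects linear search)
FLANN_INDEX_KDTREE = 1
index_params = dict(algorithm=FLANN_INDEX_KDTREE, trees=6)
search_params = dict(checks=10)
flann = cv2.FlannBasedMatcher(index_params, search_params)
matches = flann.knnMatch(des1, des2, k=2)

# Ratio test: keep a match only if it is clearly better than the runner-up
good = []
for m, n in matches:
    if m.distance < 0.6 * n.distance:
        good.append(m)
```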


Result of this step:

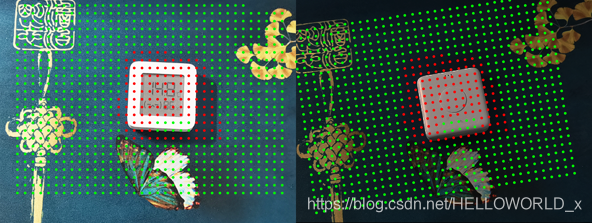

2.2 Estimating the Homography and Determining the Detection Region

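At least 4 point pairs are needed to compute a homography; the homography matrix itself is not covered in detail here. This article obtains it with OpenCV's findHomography function and maps the check points in the left image to their corresponding positions in the right image. The extent of the check points in the left image is determined by the region that contains matches considered correct (the wider the matches are spread, the wider the area the check points can cover).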

```python
import numpy as np

# pts_src, pts_dst, size, xMinLeft/xMaxLeft, yMinLeft/yMaxLeft, output, width
# and func are not shown in this excerpt; see the full source linked above.

# Estimate the homography
M, mask = cv2.findHomography(pts_src, pts_dst, cv2.RANSAC, 5.0)

# Determine the detection range
interval = 2.5 * size  # spacing between check points
searchWidth = int((xMaxLeft - xMinLeft) / interval)
searchHeight = int((yMaxLeft - yMinLeft) / interval)
searchNum = searchWidth * searchHeight
demo_src = np.zeros((searchNum, 1, 2), dtype=np.float32)
for i in range(searchWidth):
    for j in range(searchHeight):
        demo_src[i + j * searchWidth][0][0] = xMinLeft + i * interval
        demo_src[i + j * searchWidth][0][1] = yMinLeft + j * interval

# Map the left-image check points into the right image
demo_dst = cv2.perspectiveTransform(demo_src, M)

# Convert to KeyPoint objects
kp_src = [cv2.KeyPoint(float(p[0][0]), float(p[0][1]), size) for p in demo_src]
kp_dst = [cv2.KeyPoint(float(p[0][0]), float(p[0][1]), size) for p in demo_dst]

# Compute the SIFT descriptors at these keypoints
keypoints_image1, descriptors_image1 = sift.compute(img1, kp_src)
keypoints_image2, descriptors_image2 = sift.compute(img2, kp_dst)

# Difference points
diffLeft = []
diffRight = []
threshold = 470  # descriptor distance above which a point pair counts as different

# Analyze the differences
for i in range(searchNum):
    # Euclidean distance between the two 128-dimensional descriptors
    difference = np.linalg.norm(descriptors_image1[i] - descriptors_image2[i])
    x_src, y_src = demo_src[i][0]
    x_dst, y_dst = demo_dst[i][0]
    # Skip right-image points with negative coordinates
    if x_dst >= 0 and y_dst >= 0:
        # cv2.circle needs integer pixel coordinates
        pt_src = (int(x_src), int(y_src))
        pt_dst = (int(x_dst + width), int(y_dst))
        if difference <= threshold:
            cv2.circle(output, pt_src, 1, (0, 255, 0), 2)
            cv2.circle(output, pt_dst, 1, (0, 255, 0), 2)
        elif func == 1:
            cv2.circle(output, pt_src, 1, (0, 0, 255), 2)
            cv2.circle(output, pt_dst, 1, (0, 0, 255), 2)
        elif func == 2:
            diffLeft.append([x_src, y_src])
            diffRight.append([x_dst, y_dst])
```
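To give some intuition for what findHomography and perspectiveTransform compute, here is a minimal NumPy sketch of the direct linear transform (DLT). It is an illustration under stated assumptions, not OpenCV's implementation: the helper names `estimate_homography` and `apply_homography` and the matrix `H_true` are made up for this example, and there is no outlier rejection such as the RANSAC step used above. Each point pair contributes two linear equations and the matrix has 8 degrees of freedom (9 entries up to scale), which is why at least 4 pairs are needed.

```python
import numpy as np

def estimate_homography(src, dst):
    """Plain DLT from >= 4 point pairs (hypothetical helper, no RANSAC)."""
    src = np.asarray(src, dtype=np.float64)
    dst = np.asarray(dst, dtype=np.float64)
    if len(src) < 4 or len(src) != len(dst):
        raise ValueError("need at least 4 matching point pairs")
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        # Two equations per pair, from u = (h1 x + h2 y + h3) / (h7 x + h8 y + h9)
        # and the analogous expression for v.
        rows.append([-x, -y, -1, 0, 0, 0, u * x, u * y, u])
        rows.append([0, 0, 0, -x, -y, -1, v * x, v * y, v])
    # The solution is the right singular vector with the smallest singular value.
    _, _, vt = np.linalg.svd(np.asarray(rows))
    H = vt[-1].reshape(3, 3)
    # Normalize so that H[2, 2] == 1 (assumes it is non-zero).
    return H / H[2, 2]

def apply_homography(H, pts):
    """Map 2D points through H using homogeneous coordinates."""
    pts = np.asarray(pts, dtype=np.float64)
    homog = np.hstack([pts, np.ones((len(pts), 1))])
    mapped = homog @ H.T
    return mapped[:, :2] / mapped[:, 2:3]

# Illustrative ground-truth homography and the four corners of a square.
H_true = np.array([[1.2, 0.1, 10.0],
                   [-0.05, 0.9, 5.0],
                   [0.001, 0.002, 1.0]])
src = [[0, 0], [100, 0], [100, 100], [0, 100]]
dst = apply_homography(H_true, src)

H_est = estimate_homography(src, dst)
print(np.round(H_est, 4))
```

With noisy or partly wrong matches, a robust estimator such as the RANSAC mode of findHomography is the better choice; the plain DLT above fits every pair it is given.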


The point pairs whose difference exceeds the threshold are then output:

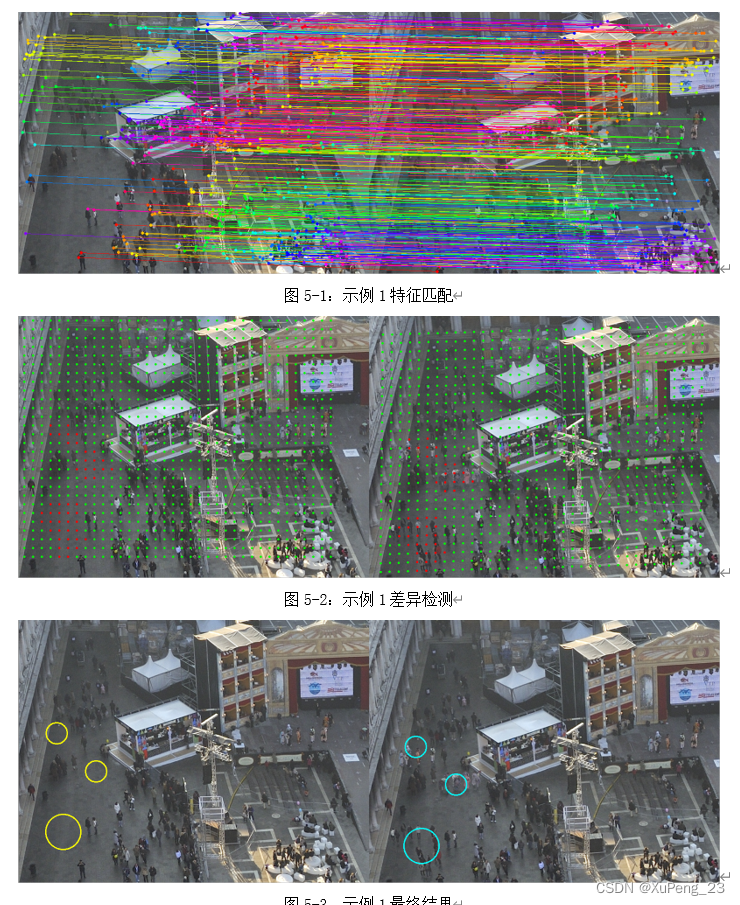
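The per-point descriptor comparison can also be written in vectorized form. The following is a small sketch, assuming the two descriptor arrays are row-aligned (row i of each array describes the same check point); the helper name `find_differences` and the random stand-in descriptors are illustrative, while the threshold of 470 is the value used in the code above.

```python
import numpy as np

def find_differences(desc_left, desc_right, threshold=470.0):
    """Euclidean distance per descriptor pair and a flag for pairs above threshold."""
    desc_left = np.asarray(desc_left, dtype=np.float32)
    desc_right = np.asarray(desc_right, dtype=np.float32)
    if desc_left.shape != desc_right.shape:
        raise ValueError("descriptor arrays must be row-aligned and equal in shape")
    distances = np.linalg.norm(desc_left - desc_right, axis=1)
    return distances, distances > threshold

# Synthetic stand-ins for five 128-dimensional SIFT descriptors.
rng = np.random.default_rng(0)
left = rng.integers(0, 50, size=(5, 128)).astype(np.float32)
right = left.copy()
right[3] += 100.0  # make the fourth pair clearly different

distances, is_diff = find_differences(left, right)
print(is_diff)
```

The threshold is data-dependent, so a value tuned on one image pair may need adjusting for another.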

2.3 Clustering

Finally, the detected difference points are clustered.
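The article does not say which clustering method is used, so the following is only one possible sketch and may differ from the code in the repository. It groups difference points by a distance threshold (points closer than `max_gap` end up in the same cluster, transitively) and returns a bounding box per cluster. The names `cluster_points`, `bounding_boxes`, and `max_gap`, as well as the sample coordinates, are assumptions made for this example.

```python
import numpy as np

def cluster_points(points, max_gap):
    """Group point indices so that points within max_gap are linked (union-find)."""
    pts = np.asarray(points, dtype=np.float64)
    n = len(pts)
    parent = list(range(n))

    def find(i):
        # Follow parent links to the root, shortening the path on the way.
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    for i in range(n):
        for j in range(i + 1, n):
            if np.linalg.norm(pts[i] - pts[j]) <= max_gap:
                parent[find(i)] = find(j)

    clusters = {}
    for i in range(n):
        clusters.setdefault(find(i), []).append(i)
    return list(clusters.values())

def bounding_boxes(points, clusters):
    """Return (x_min, y_min, x_max, y_max) for each cluster of point indices."""
    pts = np.asarray(points, dtype=np.float64)
    boxes = []
    for idx in clusters:
        xs, ys = pts[idx, 0], pts[idx, 1]
        boxes.append((float(xs.min()), float(ys.min()), float(xs.max()), float(ys.max())))
    return boxes

# Illustrative difference points, e.g. what diffLeft might contain.
diff_left = [[10, 10], [14, 10], [10, 14], [200, 120], [204, 121]]
clusters = cluster_points(diff_left, max_gap=6)
boxes = bounding_boxes(diff_left, clusters)
print(boxes)
```

A natural choice for `max_gap` is a small multiple of the check-point spacing, so that neighboring grid points that are flagged as different merge into one region.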
